Background of cache:

We start with a simple test: read a local file and run a collect operation on it:

val rdd=sc.textFile("file:///home/hadoop/data/input.txt")

rdd.collect

rdd.collect

Above we ran the same operation twice. Watching the log, we find the line Submitting ResultStage 0 (file:///home/hadoop/data/input.txt MapPartitionsRDD[1] at textFile at :25), which has no missing parents each time, which means the local file input.txt is read on every run. What problem does this suggest? If the file is large and we run the same action on the same RDD repeatedly, the file is read from local disk every time, only to produce the same result. Repeating this work wastes time and resources. So can the RDD be kept in memory? Yes, and that is exactly what cache is for.

Role of cache:

From the above we know that the same RDD is often used in many places. Each Action on it then triggers the same computation again, which is inefficient. Caching lets an RDD be persisted in memory (or, with other storage levels, on disk) so that it is computed only once.

Now let's apply the cache operation to the rdd from the example above:

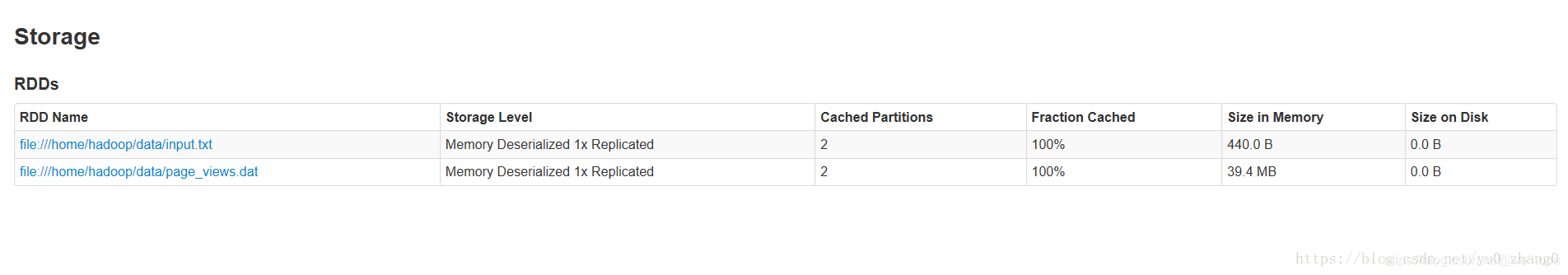

After running rdd.cache, open the web UI at 192.168.137.130:4040 and look at the Storage page for the file we just cached: nothing is there. Now run an action, rdd.count, and look at the Storage page again. This time there is an entry, and it shows a lot of information: the storage level (explained later), the size (you will find it is larger than the source file, which is a tuning opportunity), and so on.

What does this tell us? Is cache a Transformation or an Action? You can probably guess.

cache behaves like a Transformation: it is lazy, and the RDD is only persisted when the first Action is encountered. That is why the UI showed nothing right after the cache call and showed the cached RDD only after count had run.
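The lazy behaviour described above can be checked without the web UI. The following is a minimal sketch, not taken from the original article: it assumes a local Spark 2.x setup with `local[2]` as master, and the object name `CacheLazinessSketch`, the helper `evaluationCounts`, and the synthetic input are hypothetical. An accumulator counts how many times the map function actually runs.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object CacheLazinessSketch {

  /** Returns (evaluations right after cache(), evaluations after two counts). */
  def evaluationCounts(sc: SparkContext): (Long, Long) = {
    // Counts every invocation of the map function below.
    val evaluated = sc.longAccumulator("evaluated")
    val rdd = sc.parallelize(1 to 100, 4).map { x => evaluated.add(1L); x }

    // cache() only marks the RDD for persistence; no job runs here.
    rdd.cache()
    val afterCache = evaluated.sum

    // The first action computes the partitions and stores them;
    // the second action is expected to read them from the cache.
    rdd.count()
    rdd.count()
    (afterCache, evaluated.sum)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("cache-laziness-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val (afterCache, afterCounts) = evaluationCounts(sc)
      println(s"evaluations after cache(): $afterCache")
      println(s"evaluations after two counts: $afterCounts")
    } finally {
      sc.stop()
    }
  }
}
```

If every partition stays cached, the second number should equal the number of elements; removing the `rdd.cache()` line should roughly double it, because each count would recompute the map.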

Detailed source code analysis:

Spark Version: 2.2.0

/**

* Persist this RDD with the default storage level (`MEMORY_ONLY`).

*/

def cache(): this.type = persist()

As the source shows, cache() simply calls persist(). To understand the difference between the two, we need to look at the persist function (note that its comment states the storage level):

/**

* Persist this RDD with the default storage level (`MEMORY_ONLY`).

*/

def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)

You can see that persist() internally calls persist(StorageLevel.MEMORY_ONLY), which matches the comment above. From this we can state the difference between cache and persist: cache always uses the single default storage level MEMORY_ONLY, while persist can be given another storage level as the situation requires.
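The difference can be observed through `getStorageLevel`. This is a small sketch under assumptions: a local Spark 2.x setup, with the object name `CacheVsPersistSketch`, the helper `levels`, and the tiny synthetic RDDs being hypothetical. `MEMORY_AND_DISK` is one of the constants defined in the StorageLevel object.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

object CacheVsPersistSketch {

  /** Storage levels assigned by cache(), persist() and persist(level). */
  def levels(sc: SparkContext): (StorageLevel, StorageLevel, StorageLevel) = {
    val cached = sc.parallelize(1 to 10).cache()       // same as persist()
    val persisted = sc.parallelize(1 to 10).persist()  // default level
    val memoryAndDisk = sc.parallelize(1 to 10).persist(StorageLevel.MEMORY_AND_DISK)
    (cached.getStorageLevel, persisted.getStorageLevel, memoryAndDisk.getStorageLevel)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("cache-vs-persist-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val (cached, persisted, memoryAndDisk) = levels(sc)
      println(s"cache() and persist() agree: ${cached == persisted}")
      println(s"cache() uses MEMORY_ONLY: ${cached == StorageLevel.MEMORY_ONLY}")
      println(s"explicit level kept: ${memoryAndDisk == StorageLevel.MEMORY_AND_DISK}")
    } finally {
      sc.stop()
    }
  }
}
```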

You are probably curious how many storage levels there are. Let's keep reading the source code:

/**

* :: DeveloperApi ::

* Flags for controlling the storage of an RDD. Each StorageLevel records whether to use memory,

* or ExternalBlockStore, whether to drop the RDD to disk if it falls out of memory or

* ExternalBlockStore, whether to keep the data in memory in a serialized format, and whether

* to replicate the RDD partitions on multiple nodes.

*

* The [[org.apache.spark.storage.StorageLevel]] singleton object contains some static constants

* for commonly useful storage levels. To create your own storage level object, use the

* factory method of the singleton object (`StorageLevel(...)`).

*/

@DeveloperApi

class StorageLevel private(

private var _useDisk: Boolean,

private var _useMemory: Boolean,

private var _useOffHeap: Boolean,

private var _deserialized: Boolean,

private var _replication: Int = 1)

extends Externalizable

Looking at the StorageLevel source code, we can see that the constructor takes five parameters, which mean:

useDisk: store the data on disk (external storage);

useMemory: store the data in memory;

useOffHeap: use off-heap memory. This is a Java virtual machine concept: off-heap memory is allocated outside the JVM heap and is managed directly by the operating system rather than by the virtual machine. This keeps the heap small and reduces the impact of garbage collection on the application. Heavy use of this memory can still cause OOM. Off-heap memory is referenced from the heap through DirectByteBuffer objects, and it avoids copying data back and forth between the heap and off-heap memory;

deserialized: whether the data is kept in deserialized form. Serialization is a mechanism provided by Java that represents an object as a sequence of bytes; deserialization is the reverse process that restores the object from those bytes. Serialization makes objects persistent: an object and its fields can be saved and later restored directly by deserialization;

replication: the number of replicas (copies kept on multiple nodes; the default is 1).

Next, let's look at the storage levels:

/**

* Various [[org.apache.spark.storage.StorageLevel]] defined and utility functions for creating

* new storage levels.

*/

object StorageLevel {

val NONE = new StorageLevel(false, false, false, false)

val DISK_ONLY = new StorageLevel(true, false, false, false)

val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)

val MEMORY_ONLY = new StorageLevel(false, true, false, true)

val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)

val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)

val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)

val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)

val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)

val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)

val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)

val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

Here we can see 12 storage levels. But what is the difference between them? Each level is created by a StorageLevel constructor call with four or five arguments, which correspond to the parameters described above. Where only four are given, the fifth (replication) takes its default value.
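To see how the constants map onto the five parameters, the flags can be read back through the public accessors of StorageLevel. The sketch below is illustrative only: the object name `StorageLevelFlagsSketch` and the helper `describe` are hypothetical, while the accessors and the `StorageLevel(...)` factory method are the ones mentioned in the source comment above. It needs only spark-core on the classpath, not a running SparkContext.

```scala
import org.apache.spark.storage.StorageLevel

object StorageLevelFlagsSketch {

  /** Renders the five flags of a storage level as one line of text. */
  def describe(level: StorageLevel): String =
    s"useDisk=${level.useDisk}, useMemory=${level.useMemory}, " +
      s"useOffHeap=${level.useOffHeap}, deserialized=${level.deserialized}, " +
      s"replication=${level.replication}"

  def main(args: Array[String]): Unit = {
    val named = Seq(
      "MEMORY_ONLY" -> StorageLevel.MEMORY_ONLY,
      "MEMORY_ONLY_SER" -> StorageLevel.MEMORY_ONLY_SER,
      "MEMORY_AND_DISK" -> StorageLevel.MEMORY_AND_DISK,
      "DISK_ONLY_2" -> StorageLevel.DISK_ONLY_2
    )
    named.foreach { case (name, level) => println(s"$name: ${describe(level)}") }

    // Factory method of the singleton object:
    // (useDisk, useMemory, deserialized, replication)
    val custom = StorageLevel(true, true, false, 2)
    println(s"custom equals MEMORY_AND_DISK_SER_2: ${custom == StorageLevel.MEMORY_AND_DISK_SER_2}")
  }
}
```

The factory is the route the source comment recommends for building a level that is not among the predefined constants, since the class constructor itself is private.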

Now a question for you: what do these storage levels mean, and how do you choose one?

The official website explains them in detail. I introduce a few here; interested readers can look at the official website.

MEMORY_ONLY

Stores the data in memory as deserialized Java objects. If there is not enough memory to hold all the data, some partitions are not cached and are recomputed when they are needed. This is the default level.

MEMORY_AND_DISK

Stores the data as deserialized Java objects and prefers memory. If there is not enough memory to hold all the data, the partitions that do not fit are written to disk files, and the next time an operator runs on the RDD they are read back from the disk files.

MEMORY_ONLY_SER (Java and Scala)

Basically the same as MEMORY_ONLY. The only difference is that the RDD data is serialized: each partition of the RDD is serialized into a byte array. This saves memory but increases the load on the CPU.

A simple example gives a feel for the difference between storage levels:

The input file page_views.dat is 19M:

val rdd1=sc.textFile("file:///home/hadoop/data/page_views.dat")

rdd1.persist().count

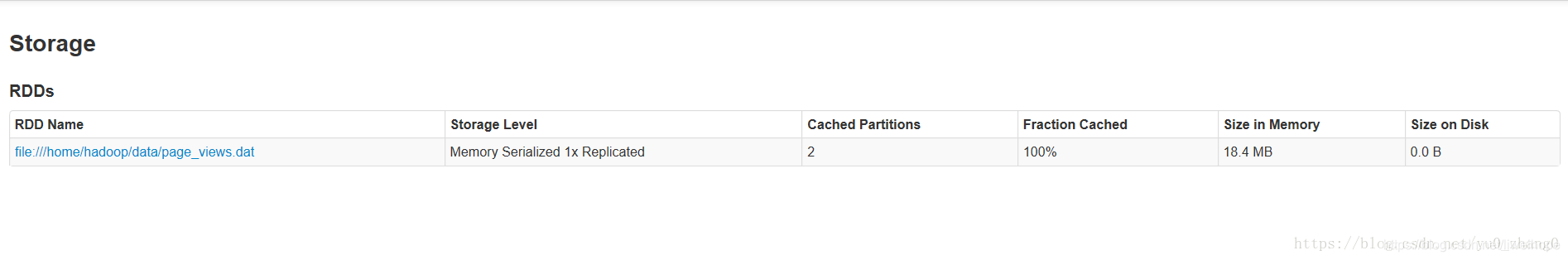

View the cached size in the UI:

It is noticeably larger than the source file, isn't it? Let's remove the cache with rdd1.unpersist()

Now use the MEMORY_ONLY_SER level:

import org.apache.spark.storage.StorageLevel

rdd1.persist(StorageLevel.MEMORY_ONLY_SER)

rdd1.count

Here I only compare these two levels; you can try the others yourself.
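The unpersist step in this comparison matters: an RDD that already has a storage level refuses a different one. The sketch below replays the sequence on synthetic data, assuming a local Spark 2.x setup; the object name `ChangeStorageLevelSketch`, the helper `switchLevel`, and the generated records are hypothetical stand-ins for page_views.dat.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

object ChangeStorageLevelSketch {

  /** Returns (was the direct level change rejected?, final storage level). */
  def switchLevel(sc: SparkContext): (Boolean, StorageLevel) = {
    val rdd = sc.parallelize(1 to 1000, 4).map(i => s"record-$i")

    rdd.persist()   // default level, MEMORY_ONLY
    rdd.count()     // the action materializes the cache

    // Changing the level of an already persisted RDD is not allowed.
    val rejected =
      try {
        rdd.persist(StorageLevel.MEMORY_ONLY_SER)
        false
      } catch {
        case _: UnsupportedOperationException => true
      }

    rdd.unpersist()                            // resets the level
    rdd.persist(StorageLevel.MEMORY_ONLY_SER)  // now accepted
    rdd.count()
    (rejected, rdd.getStorageLevel)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("change-level-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val (rejected, finalLevel) = switchLevel(sc)
      println(s"direct change rejected: $rejected")
      println(s"final level is MEMORY_ONLY_SER: ${finalLevel == StorageLevel.MEMORY_ONLY_SER}")
    } finally {
      sc.stop()
    }
  }
}
```

After each count, the Storage page of the web UI should show the size in memory for the current level, which is the number being compared in this section.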

How do I choose? The official website gives guidance.

The storage levels offer different trade-offs between memory usage and CPU efficiency.

1. By default, the best-performing level is of course MEMORY_ONLY, provided you have enough memory to hold all the data of the entire RDD. There is no serialization or deserialization, so that overhead is avoided; subsequent operators on the RDD work purely on in-memory data without reading from disk files, so performance is high; and no replica of the data has to be made and sent to other nodes. Note, however, that in real production environments the scenarios where this level can be used directly are limited: if the RDD holds a lot of data (for example several billion records), using this level directly can lead to a JVM OOM (out of memory) exception.

2. If memory overflows with the MEMORY_ONLY level, try the MEMORY_ONLY_SER level. This level serializes the RDD data before storing it in memory, so each partition is just one byte array, which reduces the number of objects and the memory footprint. Its extra cost compared with MEMORY_ONLY is mainly serialization and deserialization. Subsequent operators still work on in-memory data, so overall performance remains relatively high. As above, though, if the RDD holds too much data, an OOM exception can still occur.

3. Do not spill to disk unless the data is very expensive to compute, or the computation filters a large amount of data down to a relatively small, important part. Otherwise, storing the data on disk can be slow and sharply reduce performance.

4. Levels with the _2 suffix must replicate all data and send the copies to other nodes. Data replication and network transfer add a large performance overhead, so these levels are not recommended unless the job requires high availability.

Removing cached data:

Spark automatically monitors cache usage on each node and drops old data partitions in least-recently-used (LRU) fashion. If you want to remove an RDD manually rather than wait for it to fall out of the cache, use the RDD.unpersist() method.
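To illustrate what least-recently-used eviction means, here is a toy cache in plain Scala. It is a sketch of the LRU idea only, not Spark's block manager: the class `LruCache`, the object `LruSketch`, the entry-count capacity, and the block names are all hypothetical, and real eviction in Spark is driven by memory size rather than entry count.

```scala
import java.util.{LinkedHashMap => JLinkedHashMap, Map => JMap}

/** Toy LRU cache bounded by a number of entries. */
final class LruCache[K, V](capacity: Int) {
  // accessOrder = true keeps entries ordered from least to most recently used.
  private val entries: JLinkedHashMap[K, V] =
    new JLinkedHashMap[K, V](16, 0.75f, true) {
      // Called after each insertion; returning true drops the eldest entry.
      override protected def removeEldestEntry(eldest: JMap.Entry[K, V]): Boolean =
        size() > capacity
    }

  def put(key: K, value: V): Unit = { entries.put(key, value); () }

  /** Looks up a key and marks it as recently used. */
  def get(key: K): Option[V] = Option(entries.get(key))

  /** Checks for a key without changing the usage order. */
  def contains(key: K): Boolean = entries.containsKey(key)
}

object LruSketch {
  def main(args: Array[String]): Unit = {
    val cache = new LruCache[String, String](2)
    cache.put("partition-0", "data-0")
    cache.put("partition-1", "data-1")
    cache.get("partition-0")            // partition-0 is now the most recently used
    cache.put("partition-2", "data-2")  // over capacity: partition-1 is evicted

    println(cache.contains("partition-0")) // true
    println(cache.contains("partition-1")) // false
    println(cache.contains("partition-2")) // true
  }
}
```

Calling unpersist corresponds to removing entries explicitly instead of waiting for this kind of eviction.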

Reprinted from the original: Cache, the persist difference Spark core, as well as cache levels Detailed