This article was shared by Huang Shancheng, a senior development engineer at Bilibili. The original title was "A Ten-Million-Scale Long-Connection Messaging System". It has been reformatted and edited for this publication.

1. Introduction

In today's digital entertainment era, barrages (danmaku, the scrolling comments overlaid on the video) have become an indispensable interactive element of live-streaming platforms.

By sending barrages, gifts, and the like, users can display their thoughts, comments, and interactions on the live stream in real time, which enriches the viewing experience. Pushing these interactions to clients in real time requires long connections.

A long connection, as the name suggests, is a network channel kept open to the server for the lifetime of the application, supporting full-duplex uplink and downlink data transfer. Its key difference from request-response short-connection services is that it lets the server actively push data to users in real time.

This article introduces the architecture and practice of the real-time messaging system Bilibili built in Go to serve tens of millions of long connections, covering the framework design of the long-connection service and the optimizations made for stability and high throughput.

2. Related articles

- "The evolution of Bilibili's microservice-based API gateway from 0 to 1"

- "Graphite Document Standalone 500,000 WebSocket Long Connection Architecture Practice"

- "Technical Practice of Baidu Unified Socket Long Connection Component from 0 to 1"

- "Tantan's IM Long Connection Technology Practice: Technology Selection, Architecture Design, and Performance Optimization"

- "iQiyi WebSocket real-time push gateway technology practice"

- "LinkedIn's Web-side Instant Messaging Practice: Achieving Hundreds of Thousands of Long Connections on a Single Machine"

- "A secure and scalable subscription/push service implementation idea based on long connections"

- "Technical Practice Sharing of Meizu's Real-time Message Push Architecture with 25 Million Long Connections"

- "Exclusive Interview with Meizu Architect: Experience of Real-time Message Push System with Massive Long Connections"

3. Architecture design

3.1 Overview

The long-connection service is shared by multiple business parties.

Its design therefore has to account for the needs of different business parties and business scenarios. At the same time, the service's boundaries must be drawn carefully so that it does not take on business logic, which would hinder its own future iteration and development.

The long-connection service covers three main areas:

- 1) Establishing, maintaining, and managing long connections;

- 2) Pushing downlink data;

- 3) Forwarding uplink data (currently only heartbeats; there are no real business scenarios for it yet).

3.2 Overall architecture

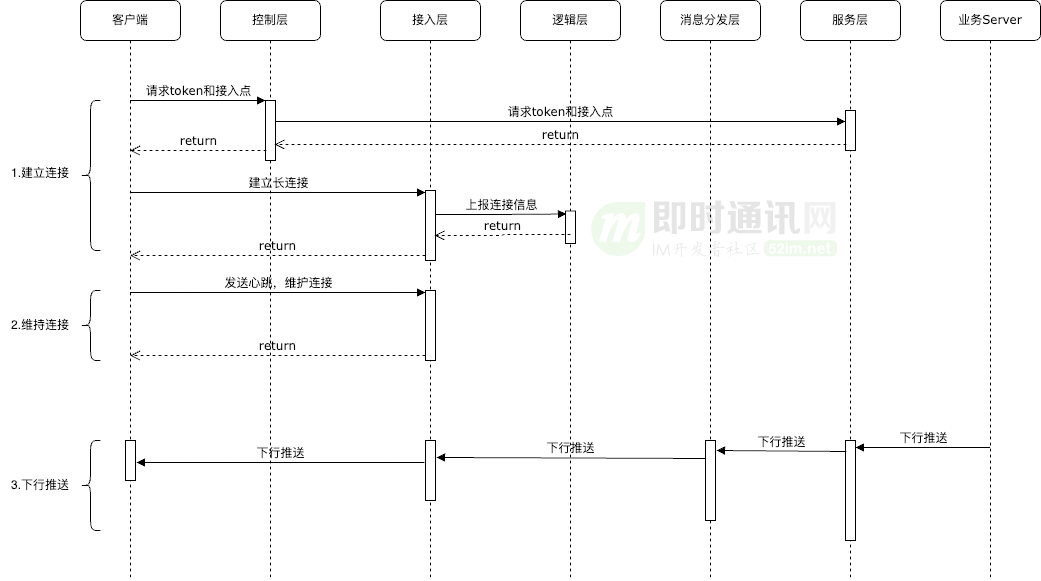

The figure above shows the overall architecture of the long-connection service, which consists of the following parts.

1) Control layer: invoked before a connection is established, mainly for access legitimacy checks, identity verification, and routing control.

Main responsibilities:

- 1) User identity authentication;

- 2) Assemble and encrypt connection data to generate a valid token;

- 3) Dynamic scheduling and allocation of access nodes.

2) Access layer: the core of the long-connection service, mainly responsible for certificate offloading (TLS termination), protocol adaptation, and connection maintenance.

Main responsibilities:

- 1) Offload certificates and adapt protocols;

- 2) Responsible for establishing and maintaining connections with clients, and managing the mapping relationship between connection id and roomid;

- 3) Process uplink and downlink messages.

3) Logic layer: keeps the access layer lean by taking over long-connection business functions.

Main responsibilities:

- 1) Report and record online user counts;

- 2) Record the mapping between each attribute of a connection ID and the node that holds it.

4) Message distribution layer: pushes messages to the access layer.

Main responsibilities:

- 1) Encapsulate, compress, and aggregate messages, then push them to the corresponding edge nodes;

5) Service layer: the integration layer for business services, providing the entry point for downstream message pushes.

Main responsibilities:

- 1) Manage and control business push permissions;

- 2) Inspect and reassemble messages;

- 3) Rate-limit message traffic according to configured policies to protect the system itself.

3.3 Core process

Long connections mainly consist of three core processes:

- 1) Establishing a connection: initiated by the client, which first calls the control layer to obtain a valid token and the access point configuration for the device;

- 2) Maintaining the connection: the client sends heartbeats periodically to keep the long connection alive;

- 3) Downlink push: initiated by the business server. The service layer resolves the connection identifier and access node from the relevant identifier, and the message distribution layer sends the push to the corresponding access layer node, which writes it to the designated connection and thereby delivers it to the client.

3.4 Function list

Based on Bilibili's business scenarios, downlink push provides the following general functions:

- 1) User-level messages: pushed to specific users (for example, sending a PK (streamer-versus-streamer battle) invitation to a particular streamer);

- 2) Device-level messages: pushed to specific devices (for example, sending a client log upload instruction to devices that are not logged in);

- 3) Room-level messages: pushed to all connections in a room (for example, pushing barrage messages to every online user in a live room);

- 4) Partition messages: pushed to rooms in a partition (for example, pushing a revenue campaign to every room currently live in a given partition);

- 5) Platform-wide messages: pushed to all users on the platform (for example, sending an event notification to every online user).
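A minimal sketch of how a push request might carry one of these five scopes, assuming a hypothetical `PushRequest` type. It only checks that the targets are consistent with the scope; resolving targets to connections is left to the service layer.

```go
package main

import "fmt"

// Scope is the target type of a downlink push (hypothetical encoding of the list above).
type Scope int

const (
	ScopeUser      Scope = iota // specific user IDs
	ScopeDevice                 // specific device IDs
	ScopeRoom                   // all connections in the given rooms
	ScopePartition              // all live rooms in the given partitions
	ScopePlatform               // every online connection
)

// PushRequest is what a business might submit to the service layer.
type PushRequest struct {
	Scope   Scope
	Targets []string // IDs matching Scope; must be empty for ScopePlatform
	Payload []byte
}

// Validate checks that the targets are consistent with the scope.
func (r PushRequest) Validate() error {
	switch r.Scope {
	case ScopeUser, ScopeDevice, ScopeRoom, ScopePartition:
		if len(r.Targets) == 0 {
			return fmt.Errorf("scope %d needs at least one target", r.Scope)
		}
	case ScopePlatform:
		if len(r.Targets) != 0 {
			return fmt.Errorf("platform-wide push must not list targets")
		}
	default:
		return fmt.Errorf("unknown scope %d", r.Scope)
	}
	return nil
}
```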

4. High throughput technology design

As the business grows, the number of online users keeps rising and so does the pressure on the long-connection system, especially during live broadcasts of popular events. During the S Championship (the League of Legends World Championship), for example, platform-wide concurrent online users reached tens of millions and message throughput reached the hundreds of millions. The long-connection system's average message distribution delay was about 1 second, with a message arrival rate of 99%. The measures behind these results are analyzed below.

4.1 Network protocol

Choosing the right network protocol is critical to the performance of a long-connection system:

- 1) TCP protocol : It can provide reliable connection and data transmission, and is suitable for scenarios that require high data reliability;

- 2) UDP protocol : It is an unreliable protocol, but has high transmission efficiency and is suitable for scenarios that do not require high data reliability;

- 3) WebSocket protocol: provides full-duplex communication with little extra overhead and is mostly used on the web.

The access layer is split into a protocol module and a connection module:

- 1) Protocol module: interacts with the specific transport protocols and encapsulates the differences in their interfaces and logic;

- 2) Connection module: maintains the state of long-connection business connections, handles uplink, downlink, and other business logic, and maintains each connection's attributes and its binding to a room ID.

The protocol module also exposes a unified data interface to the connection module, covering connection establishment, reading, writing, and so on. When a new protocol is added, only the protocol module needs an adapter; the long-connection business logic in other modules is unaffected.

Advantages:

- 1) Business logic is isolated from transport protocols, which makes it easy to add protocols iteratively and simplifies supporting several protocols at once;

- 2) The control layer can assign each client the most suitable protocol for its actual environment.

4.2 Load balancing

Load balancing technology can be used to distribute requests to different server nodes for processing, avoiding excessive load on a single node and improving the scalability and stability of the system.

The long-connection service adds a control layer for load balancing. The control layer exposes an HTTP short-connection interface and dynamically selects an appropriate access node based on the client's situation, the state of each edge node, and proximity.
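The article does not give the scheduling formula. The sketch below assumes a simple hypothetical scoring: drop unhealthy or nearly saturated nodes, then prefer the client's region, then its carrier, then lower load. `EdgeNode`, `PickNodes`, the weights, and the 0.9 load cutoff are all illustrative.

```go
package main

import (
	"errors"
	"sort"
)

// EdgeNode is a hypothetical view of an access node kept by the control layer.
type EdgeNode struct {
	Addr    string
	Region  string  // e.g. "east-china"
	ISP     string  // carrier the node is attached to
	Load    float64 // 0.0 (idle) to 1.0 (saturated), e.g. from CPU and connection reports
	Healthy bool    // cleared when the node is removed for jitter or failure
}

// PickNodes returns up to n candidate nodes for a client, best first.
func PickNodes(nodes []EdgeNode, region, isp string, n int) ([]EdgeNode, error) {
	// Lower score is better: load plus penalties for region and carrier mismatch.
	score := func(e EdgeNode) float64 {
		s := e.Load
		if e.Region != region {
			s += 1.0
		}
		if e.ISP != isp {
			s += 0.5
		}
		return s
	}
	var cands []EdgeNode
	for _, e := range nodes {
		if e.Healthy && e.Load < 0.9 {
			cands = append(cands, e)
		}
	}
	if len(cands) == 0 {
		return nil, errors.New("no available access node")
	}
	sort.SliceStable(cands, func(i, j int) bool { return score(cands[i]) < score(cands[j]) })
	if len(cands) > n {
		cands = cands[:n]
	}
	return cands, nil
}
```

Returning several candidates lets the client fall back to the next node if the first connection attempt fails.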

The access layer scales horizontally, and the control layer can add nodes to or remove nodes from the allocation pool in real time. During the S Championship, when concurrent online users approached tens of millions, traffic was balanced across the access nodes so that each node's CPU and memory stayed within a stable range.

4.3 Message queue

The message push path is: a business sends a push, the service layer forwards it to the edge nodes, and the edge nodes deliver it to the client.

Originally the service layer itself distributed messages to every edge node in real time. A room message may need to reach many edge nodes, and because the service layer also runs business logic, this fan-out severely limited message throughput.

We therefore added a message queue and a message distribution layer. The distribution layer maintains information about every edge node and performs the pushes, which improves the system's concurrency and stability and avoids performance problems caused by blocked pushes.
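A minimal sketch of the fan-out step in the distribution layer, assuming a hypothetical in-memory index from room ID to the edge nodes that currently hold connections for that room (the kind of mapping the logic layer records). A room message is sent once per edge node rather than once per viewer; each node then writes it to its local connections. Consuming from the message queue is omitted.

```go
package main

import (
	"sort"
	"sync"
)

// RoomIndex maps room ID -> edge node -> connection count (hypothetical).
type RoomIndex struct {
	mu    sync.RWMutex
	rooms map[string]map[string]int
}

func NewRoomIndex() *RoomIndex {
	return &RoomIndex{rooms: make(map[string]map[string]int)}
}

// Report records how many connections node holds for room; conns <= 0 removes the entry.
func (ri *RoomIndex) Report(room, node string, conns int) {
	ri.mu.Lock()
	defer ri.mu.Unlock()
	m := ri.rooms[room]
	if conns <= 0 {
		delete(m, node) // no-op if m is nil
		if len(m) == 0 {
			delete(ri.rooms, room)
		}
		return
	}
	if m == nil {
		m = make(map[string]int)
		ri.rooms[room] = m
	}
	m[node] = conns
}

// NodesFor lists the edge nodes that must receive a message for room, sorted for determinism.
func (ri *RoomIndex) NodesFor(room string) []string {
	ri.mu.RLock()
	defer ri.mu.RUnlock()
	nodes := make([]string, 0, len(ri.rooms[room]))
	for n := range ri.rooms[room] {
		nodes = append(nodes, n)
	}
	sort.Strings(nodes)
	return nodes
}

// Dispatch sends msg once to every edge node hosting room and returns the number of sends.
func Dispatch(ri *RoomIndex, room string, msg []byte, send func(node string, msg []byte)) int {
	nodes := ri.NodesFor(room)
	for _, n := range nodes {
		send(n, msg)
	}
	return len(nodes)
}
```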

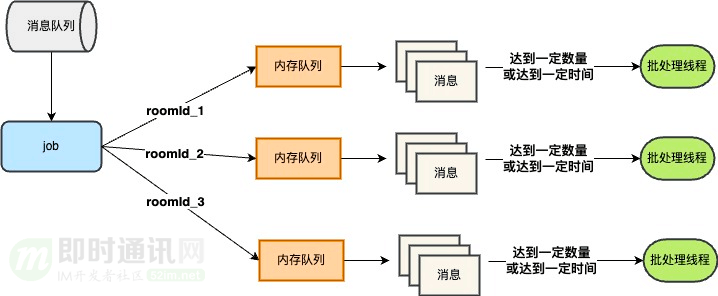

4.4 Message aggregation

During a popular event, tens of millions of people may be online at once, and a single barrage message must be fanned out to tens of millions of clients. If every online user sent one message per second, the number of deliveries would be 10 million × 10 million (10^14) per second, an enormous volume that puts the message distribution layer and access layer under great pressure.

Analysis showed that these messages all come from the same hot room, such as the S Championship room. The number of viewers cannot be reduced, and neither can the number of messages businesses push, so the only option is to reduce the number of fanned-out messages. This led to message aggregation.

Room messages are aggregated according to certain rules and pushed in batches.

After message aggregation went live, the QPS of calls from the message distribution layer to the access layer dropped by about 60%, greatly reducing the pressure on both layers.

4.5 Compression algorithm

Aggregation reduces the number of messages but increases the size of each message body, which affects write I/O. To shrink message bodies, we turned to compression.

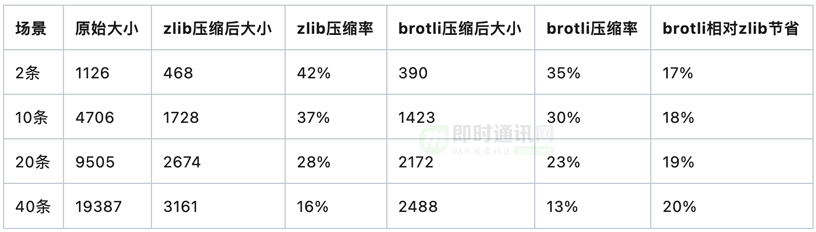

We compared two widely used compression algorithms: zlib and brotli.

We captured data pushed by online businesses, compressed it with each algorithm at its highest compression level, and compared the results.

The comparison showed that brotli had a clear advantage over zlib, so brotli was chosen.

Compression is performed at the message distribution layer so that each access node does not repeat the work and waste CPU. After launch, this both improved throughput and reduced bandwidth costs.

5. Service guarantee technology design

Some businesses now depend heavily on messages pushed over the long connection. A lost message at best degrades the user experience and at worst blocks subsequent business processes. To guarantee message delivery, we did the following.

5.1 Multi-active deployment

Multi-active deployment, by deploying the same system architecture and services in different geographical locations, achieves rapid failover of the system in the event of a single geographical failure, thus improving the stability and availability of the system.

The long-connection service is deployed as follows:

- 1) Access points are deployed in East, South, and North China and support the three major carriers; access points are also deployed in self-built data centers in South and Central China; to serve overseas users, an independent access point in a Singapore data center has been added;

- 2) Depending on the business scenario, traffic can be switched between cloud nodes and self-built nodes in real time. Because cloud nodes and self-built data centers have different costs, this keeps costs as low as possible while maintaining service quality.

In production we occasionally encounter network jitter on a single node or data center. The control layer removes problematic nodes within seconds, greatly reducing the impact on businesses.

5.2 High- and low-priority message channels

Many businesses send messages over the long connection, and not all messages are equally important. Compare barrage messages with PK invitation messages: losing a few barrage messages barely affects the user experience, but losing a PK invitation prevents the PK flow from continuing.

Messages of different importance therefore use separate high- and low-priority channels. Important messages go through the high-priority channel and ordinary messages through the low-priority channel. This physically separates important and ordinary messages, and message distribution gives priority to important ones.
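In the article the two channels are physically separate paths. Purely as an in-process analogy, the sketch below shows the "prioritize important messages" rule for a consumer reading from both: it drains the high-priority channel first and waits on both only when the high one is empty. `NextMessage` is a hypothetical name.

```go
package main

// NextMessage returns the next message to distribute, preferring high.
// ok is false if the channel it read from has been closed.
func NextMessage(high, low <-chan string) (msg string, ok bool) {
	// Non-blocking check of the high-priority channel first.
	select {
	case msg, ok = <-high:
		return msg, ok
	default:
	}
	// High is empty: block until either channel has a message.
	select {
	case msg, ok = <-high:
		return msg, ok
	case msg, ok = <-low:
		return msg, ok
	}
}
```

If both channels become ready while the second `select` is waiting, Go picks one at random, so this is a strong preference rather than a strict ordering guarantee.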

On the high-priority channel, each message is delivered twice (dual delivery) and deduplicated idempotently at the access layer. Important messages are user-level, so their volume is small and the extra load on the access layer is modest. In addition, the dual-delivery jobs are deployed across multiple data centers, which reduces the impact of network jitter in any single one.

After the two channels went live, we experienced jitter on the intranet egress. Job nodes in the intranet pushed messages abnormally, but the high-priority job nodes in the cloud kept pushing normally, so high-priority messages still arrived and the businesses depending on them were unaffected.

5.3 Gundam functions

The high-low optimization channel solves the link from job to access layer, but the message push connection involves multiple links, such as the service layer to job, and the access layer to client.

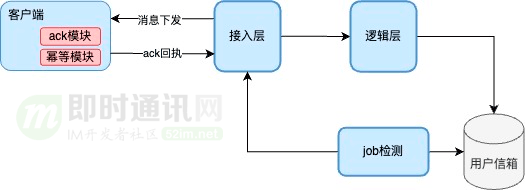

For the entire link, the arrival rate of the terminal is ensured by implementing the must-reach mechanism, which is referred to as the reach function.

Function implementation:

- 1) Each message introduces msgID, and the client performs idempotent deduplication and ack receipt after receiving the message;

- 2) The server performs ack detection on msgid, and if there is no ack, the server will retry the delivery within the validity period.

Final arrival rate = 1 - (1 - r)^(n + 1), where r is the arrival rate of a single delivery attempt and n is the maximum number of retries.

For example, with r = 97% and n = 2, the final arrival rate reaches 1 - (1 - 0.97)^(2 + 1) = 99.9973%.

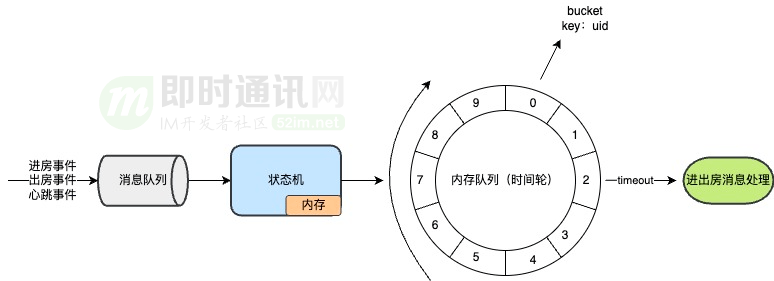

6. Delivery guarantees for room entry and exit messages

Some business scenarios need user entry and exit events. For example, when user A enters a live room, the page shows a message welcoming user A or adds A to the online list.

1) Room entry messages can be lost, so a compensation mechanism is needed. We considered compensating room entry through the connection heartbeat, but heartbeats repeat continuously, whereas the business wants to receive the entry message only once while the connection is online. The room entry message therefore needs an idempotency mechanism.

2) Room exit messages can also be lost, in which case the business cannot remove the user from the online list, so compensation is needed here too. A state machine is added for each connection and maintained through heartbeats: when heartbeats stop, the connection is considered disconnected and the user is checked out of the room.

7. Future planning

After several iterations, the basic functions of the unified long-connection service have stabilized, and we will continue to improve and optimize it.

Future work focuses on the following directions:

- 1) Dataization: further improve the ability to collect long-connection full-link network quality data statistics and high-value message full-link tracking;

- 2) Intelligentization: client-side connection establishment, access point selection, and so on adjust automatically to the actual environment;

- 3) Performance optimization: in the access layer's connection module, share the goroutines that process uplink and downlink messages, reducing the number of goroutines at the access layer and further improving single-machine performance and connection capacity;

- 4) Function expansion: add offline messaging and other features.


(This article has been simultaneously published at: http://www.52im.net/thread-4647-1-1.html )