Introduction:

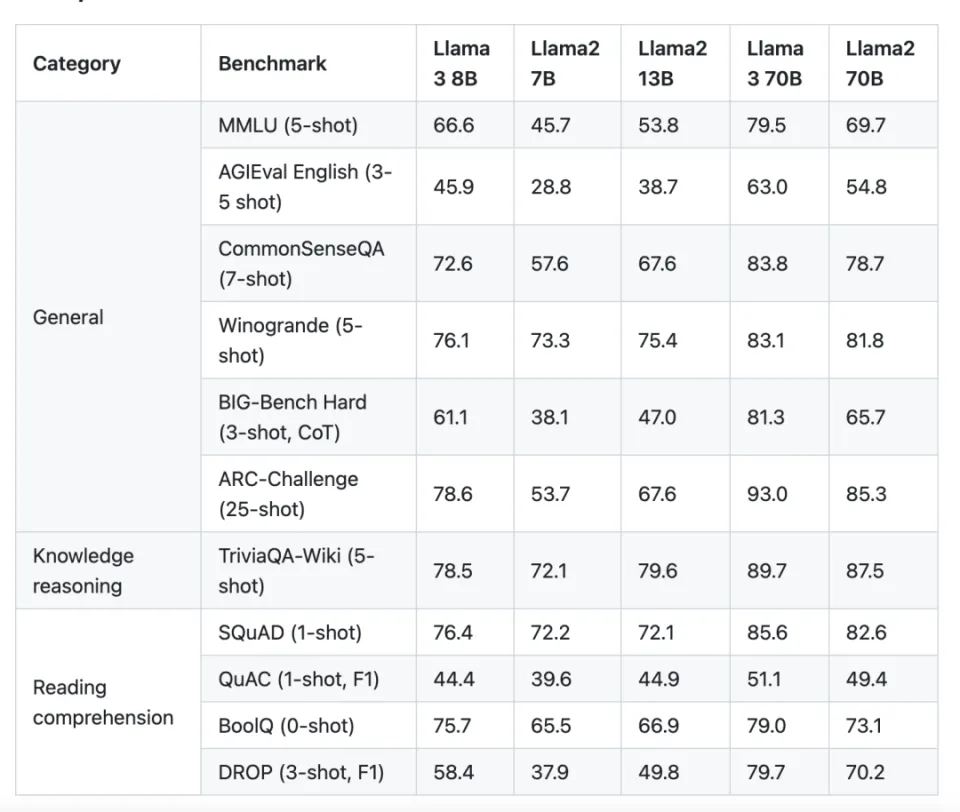

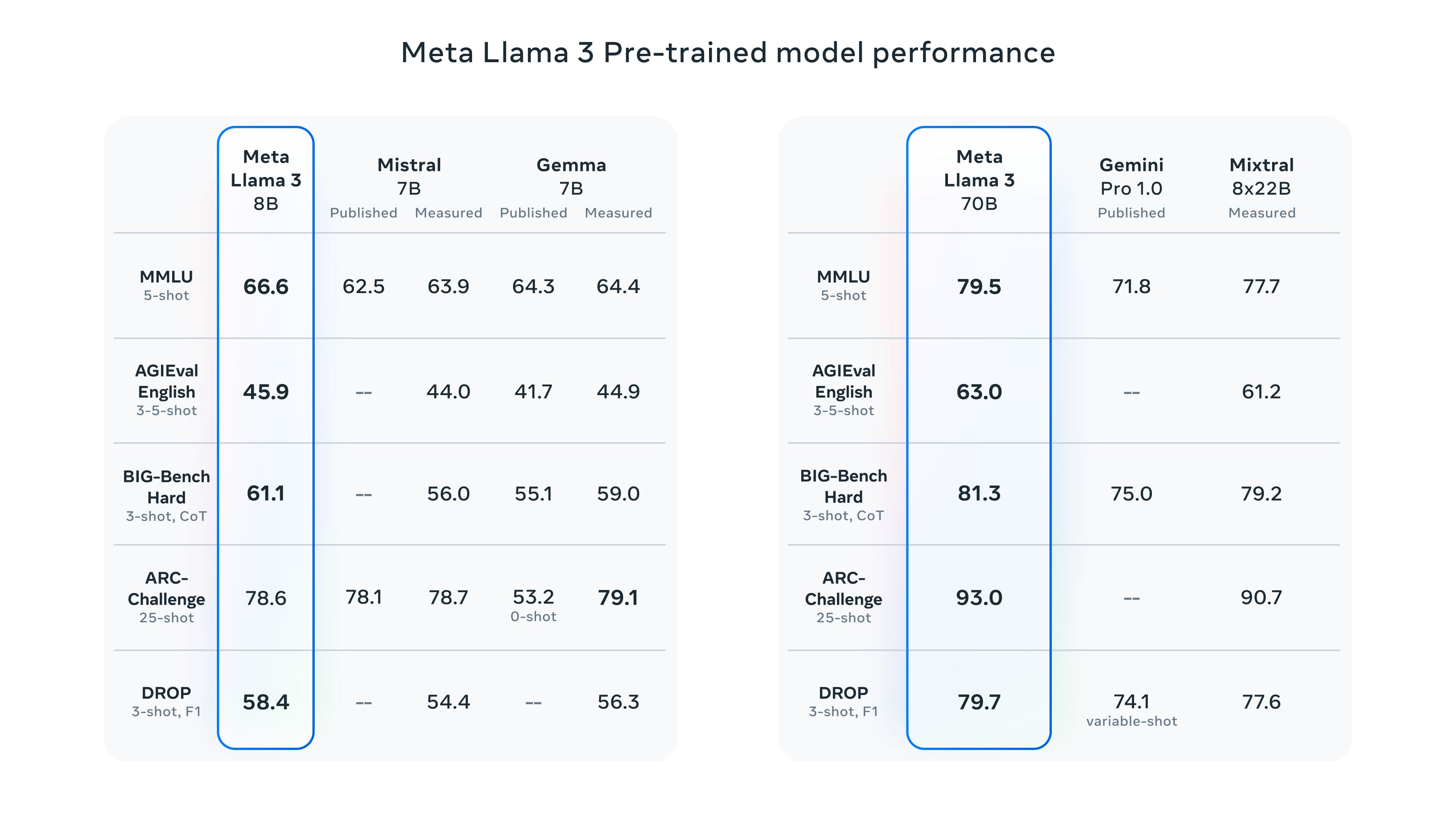

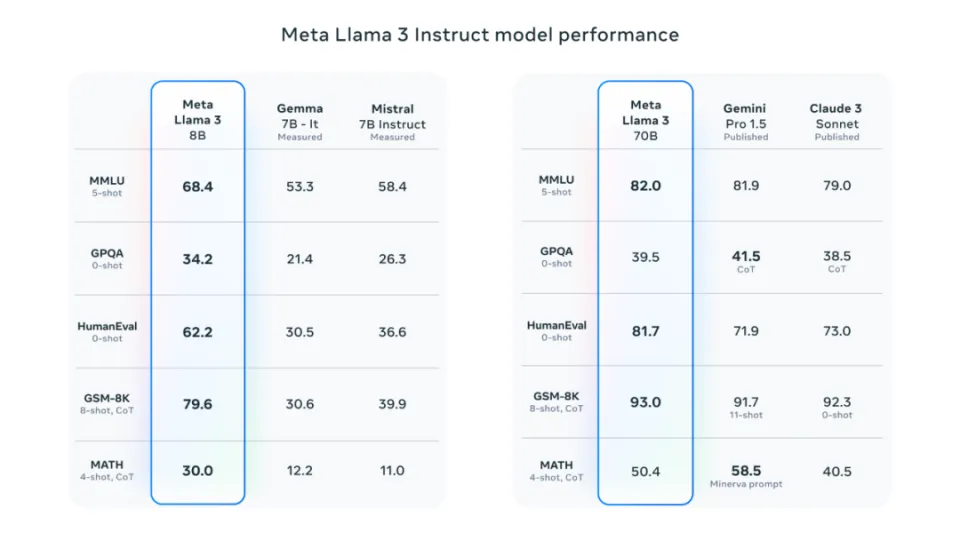

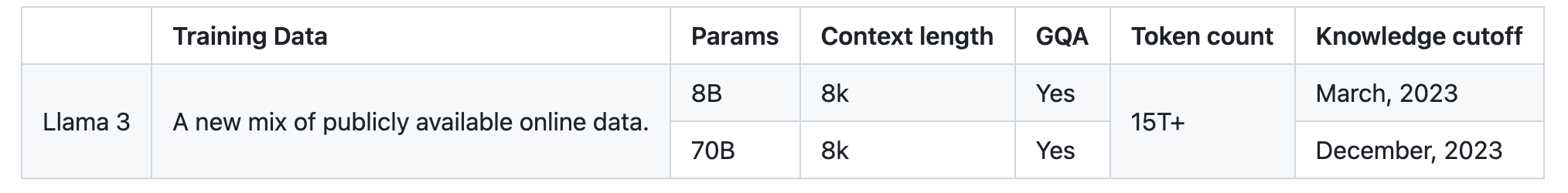

Llama is a family of large language models (LLMs) developed and open-sourced by the AI research team at Meta (formerly Facebook). The models are available for commercial use and have had a profound impact on the entire AI field. Following Llama 2, which supports a 4,096-token context window, Meta has released the Meta Llama 3 series of language models with better performance, including an 8B (8 billion parameter) model and a 70B (70 billion parameter) model. On Meta's published benchmarks, Llama 3 70B is comparable to Gemini 1.5 Pro and outperforms Claude 3 Sonnet across the board, while the 400B+ model, still in training, is expected to compete with Claude 3 Opus and the new GPT-4 Turbo.

Across a range of benchmarks, the Llama 3 models have shown strong performance. In usefulness and safety evaluations they are comparable to popular closed-source models on the market, and in some respects they surpass them. The release of Meta Llama 3 not only consolidates Meta's competitive position in large language models, but also gives researchers, developers, and enterprises powerful tools for advancing language understanding and generation.

Project address:

https://github.com/meta-llama/llama3

Differences between Llama 2 and Llama 3

Differences between Llama 3 and GPT-4

| Aspect | Llama 3 | GPT-4 |
|---|---|---|
| Model size | 8B, 70B (400B+ in training) | Not publicly disclosed |
| Architecture | Decoder-only Transformer | Transformer (details not disclosed) |
| Training objective | Next-token prediction (causal language modeling) | Next-token prediction, then fine-tuning with RLHF |
| Training data | 15T+ tokens from publicly available sources | Publicly available and third-party licensed data (details not disclosed) |
| Performance | SOTA (question answering, text summarization, machine translation, etc.) | SOTA (question answering, text summarization, machine translation, etc.) |
| Open source | Yes (open weights, Meta Llama 3 Community License) | No |

Highlights of Llama 3

- Open to everyone: Meta makes cutting-edge AI accessible by open-sourcing the smaller Llama 3 models (8B and 70B). Whether you are a developer, a researcher, or simply curious about AI, you can freely explore, create, and experiment with them, and the models are also available through easy-to-use hosted APIs.

- Large model scale: The largest Llama 3 model, still in training, has more than 400 billion parameters.

- Coming soon to many applications: Llama 3 already powers Meta AI, which you can try at https://www.meta.ai/

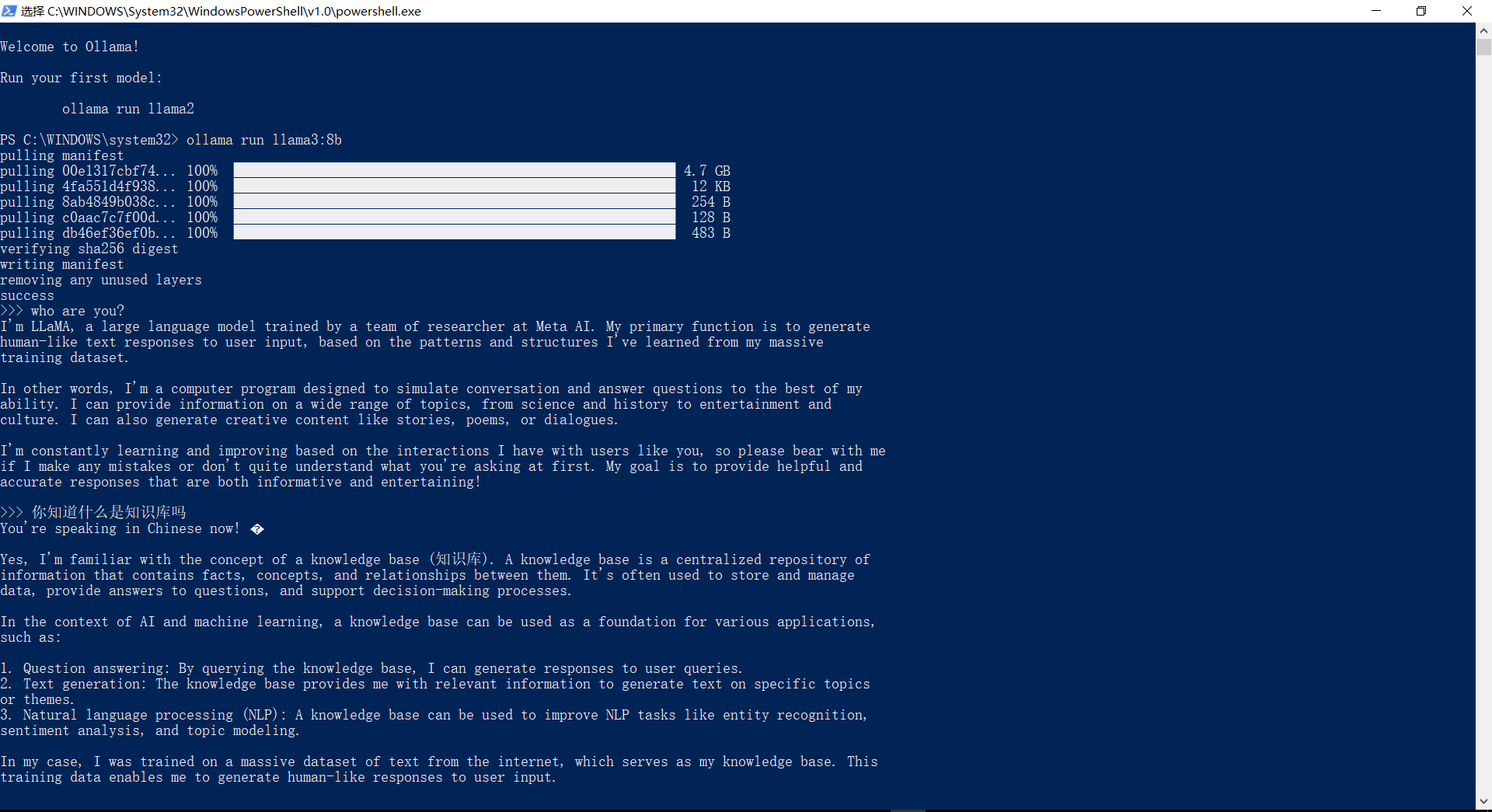

Running the Llama 3 model on Windows with Ollama

Visit https://ollama.com/download/windows and download the installer, OllamaSetup.exe.

After installation, choose a model size that suits your computer (at least 8 GB of RAM is needed to run 7B models, and at least 16 GB to run 13B models).

Here I am running llama3:8b. As can be seen, its Chinese output still has some problems.
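Besides the interactive `ollama run` prompt, the Ollama server also exposes a local REST API, which is handy for calling the model from your own scripts. The sketch below uses only the Python standard library and assumes the default server address http://localhost:11434 and that the llama3 model has already been pulled; the helper names `build_generate_request` and `generate` are hypothetical, chosen for this example.

```python
import json
import urllib.request

# Default address of the local Ollama server (assumption: default install, port unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    req = build_generate_request(prompt, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose
    # "response" field holds the complete answer.
    return body["response"]
```

With Ollama running, `print(generate("Explain in one sentence what Llama 3 is."))` prints the model's reply. Turning streaming off keeps the sketch short; by default Ollama streams the answer as a sequence of JSON lines.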

| Model | Parameters | Size | Download |
|---|---|---|---|
| Llama 3 | 8B | 4.7GB | ollama run llama3 |
| Llama 3 | 70B | 40GB | ollama run llama3:70b |
| Mistral | 7B | 4.1GB | ollama run mistral |
| Dolphin Phi | 2.7B | 1.6GB | ollama run dolphin-phi |
| Phi-2 | 2.7B | 1.7GB | ollama run phi |
| Neural Chat | 7B | 4.1GB | ollama run neural-chat |
| Starling | 7B | 4.1GB | ollama run starling-lm |
| Code Llama | 7B | 3.8GB | ollama run codellama |
| Llama 2 Uncensored | 7B | 3.8GB | ollama run llama2-uncensored |
| Llama 2 13B | 13B | 7.3GB | ollama run llama2:13b |
| Llama 2 70B | 70B | 39GB | ollama run llama2:70b |
| Orca Mini | 3B | 1.9GB | ollama run orca-mini |
| LLaVA | 7B | 4.5GB | ollama run llava |
| Gemma | 2B | 1.4GB | ollama run gemma:2b |
| Gemma | 7B | 4.8GB | ollama run gemma:7b |
| Solar | 10.7B | 6.1GB | ollama run solar |
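The download sizes in the table scale almost linearly with parameter count. As a rough worked example, assume (this is an assumption, not something the table states) that the default Ollama tags use 4-bit Q4_0 quantization: each block of 32 weights stores 32 four-bit values plus one 16-bit scale, i.e. 144 bits / 32 = 4.5 bits per weight, so size ≈ parameters × 4.5 / 8 bytes. The function name `estimated_size_gb` is hypothetical.

```python
# Assumed Q4_0 layout: 32 weights x 4 bits + one 16-bit scale per block of 32 weights.
Q4_0_BITS_PER_WEIGHT = (32 * 4 + 16) / 32  # = 4.5 bits per weight


def estimated_size_gb(params_billions: float,
                      bits_per_weight: float = Q4_0_BITS_PER_WEIGHT) -> float:
    """Estimate a quantized model's file size in GB (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


for tag, params in [("llama3", 8.0), ("llama3:70b", 70.0)]:
    print(f"{tag}: ~{estimated_size_gb(params):.1f} GB")
```

This prints about 4.5 GB for the 8B model and 39.4 GB for the 70B model, close to the 4.7GB and 40GB listed. The small gap is plausibly because the real parameter counts are slightly above 8B and 70B and some tensors are stored at higher precision. Actually running a model needs somewhat more RAM than its file size, for the context (KV) cache and runtime overhead.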

Using Hugging Face

Visit https://huggingface.co/chat/ and switch to a Llama 3 model under Models.
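HuggingChat applies the prompt template for you. If you instead call an instruction-tuned Llama 3 model directly (for example meta-llama/Meta-Llama-3-8B-Instruct), the prompt has to follow Llama 3's special-token chat format. The sketch below reproduces that format as documented in the meta-llama/llama3 repository; the function name `format_llama3_chat` is hypothetical, and in practice the tokenizer's chat template (`apply_chat_template` in Hugging Face transformers) does this for you.

```python
from typing import Dict, List


def format_llama3_chat(messages: List[Dict[str, str]]) -> str:
    """Render {"role", "content"} messages in the Llama 3 Instruct prompt format."""
    prompt = "<|begin_of_text|>"
    for msg in messages:
        # Each turn: role header, a blank line, the stripped content, then end-of-turn.
        prompt += (
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    # Open an assistant turn so the model writes its reply next.
    prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


example = format_llama3_chat([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is Llama 3?"},
])
print(example)
```

The model signals the end of its answer by emitting `<|eot_id|>`, so generation should stop on that token.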

Using Replicate

8B model: https://replicate.com/meta/meta-llama-3-8b

70B model: https://replicate.com/meta/meta-llama-3-70b
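Both models can also be called through Replicate's HTTP API. The sketch below only creates a prediction; it assumes Replicate's create-prediction endpoint for official models, Bearer-token authentication, an API token in the REPLICATE_API_TOKEN environment variable, and a `prompt` input field. The helper names are hypothetical, and the exact input parameters for each model should be checked on its Replicate page.

```python
import json
import os
import urllib.request

# Assumed endpoint pattern for official models on Replicate.
REPLICATE_API = "https://api.replicate.com/v1/models/{owner}/{name}/predictions"


def build_prediction_request(prompt: str, model: str = "meta/meta-llama-3-8b",
                             token: str = "") -> urllib.request.Request:
    """Build a POST request that starts a prediction for the given model."""
    owner, name = model.split("/", 1)
    payload = {"input": {"prompt": prompt}}  # assumed input schema
    return urllib.request.Request(
        REPLICATE_API.format(owner=owner, name=name),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_prediction(prompt: str, model: str = "meta/meta-llama-3-8b") -> dict:
    """Start a prediction and return Replicate's JSON prediction object."""
    token = os.environ["REPLICATE_API_TOKEN"]
    req = build_prediction_request(prompt, model, token)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Pass `model="meta/meta-llama-3-70b"` to use the 70B model. The returned prediction usually starts in a "starting" or "processing" state; the generated text appears in its output field once you poll the URL in `urls["get"]`. Replicate's official Python client wraps this polling; raw HTTP is used here only to keep the sketch dependency-free.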

This article is reprinted from Heng Xiaopai, and the copyright belongs to the original author. We recommend reading the original article; to reprint it, please contact the original author.