Table of contents

4.1.1 Threshold-based segmentation

4.1.3 Region-based segmentation

4.1.4 Segmentation based on graph theory

4. Before deep learning

4.1 Image segmentation

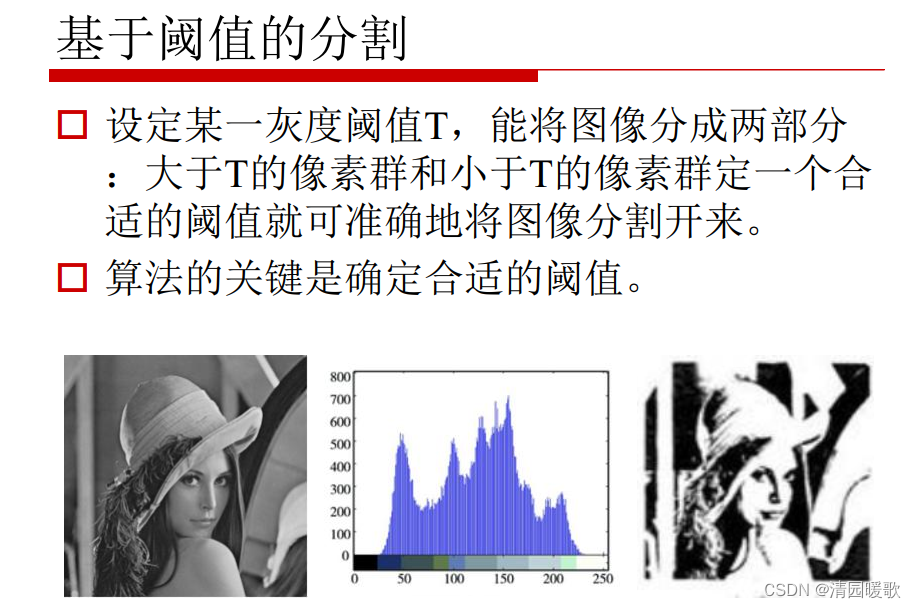

4.1.1 Threshold-based segmentation

Otsu's method selects the threshold that maximizes the between-class variance, that is, the variance between the two groups of pixels (black and white) produced by thresholding the original image.
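The threshold selection described above can be sketched in a few lines. This is a minimal illustration, assuming 8-bit grayscale values supplied as a flat list of integers; the function name `otsu_threshold` and the `levels` parameter are chosen for this example and do not come from the original notes.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes the between-class variance.

    Pixels with value <= t form one class, all other pixels the second class.
    """
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of intensities of those pixels
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, variance is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant factor dropped).
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_score, best_t = score, t
    return best_t


# Usage: a clearly bimodal set of pixels.
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
binary = [v > t for v in pixels]  # True = bright class
```

When several thresholds give the same score, this sketch keeps the first one found; real implementations may break ties differently.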

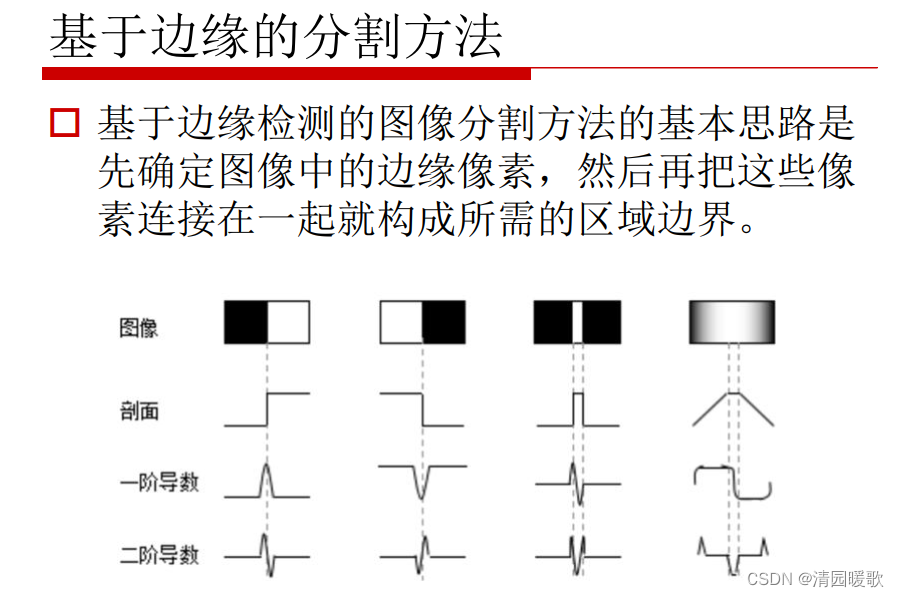

4.1.2 Edge-based segmentation

4.1.3 Region-based segmentation

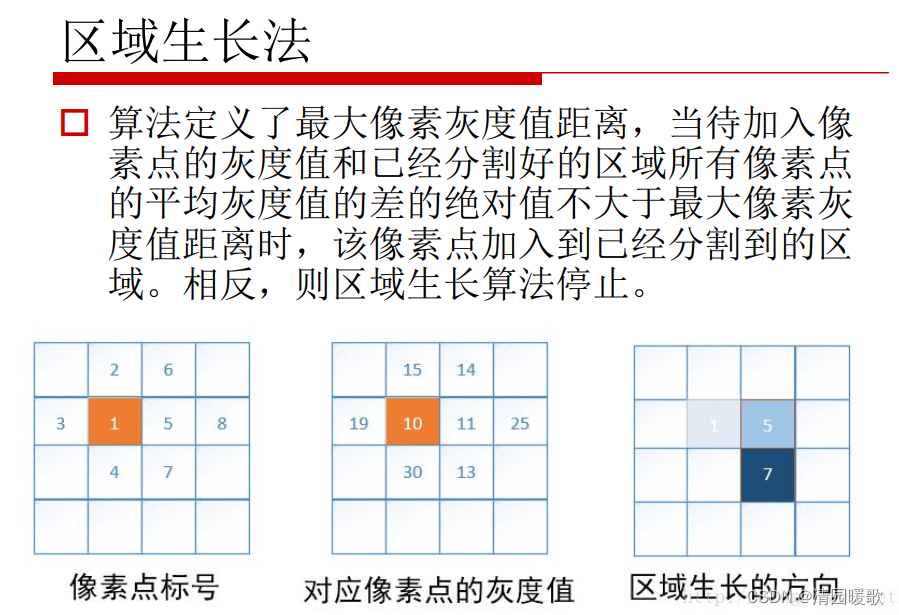

(1) Region growing method

(2) Watershed algorithm

4.1.4 Segmentation based on graph theory

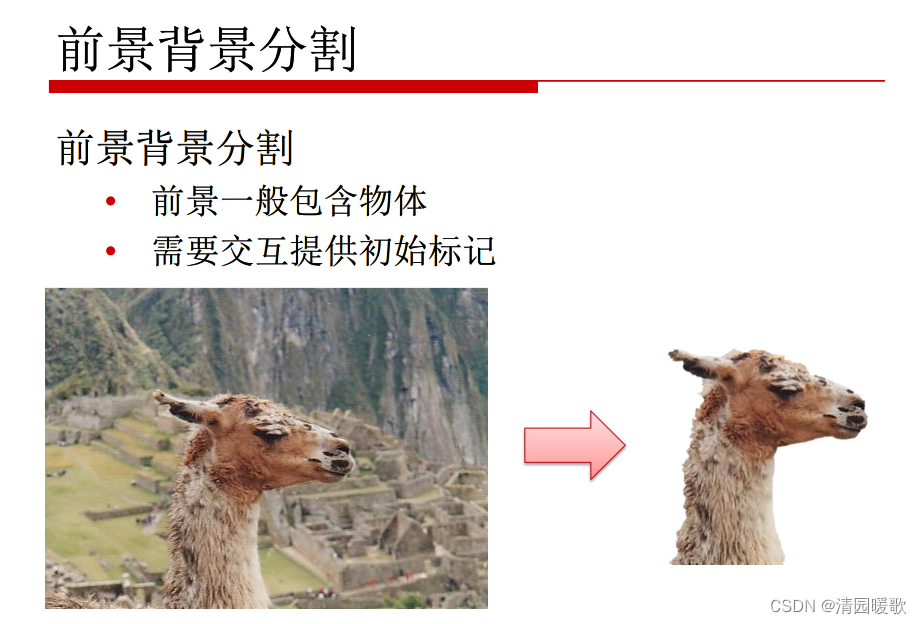

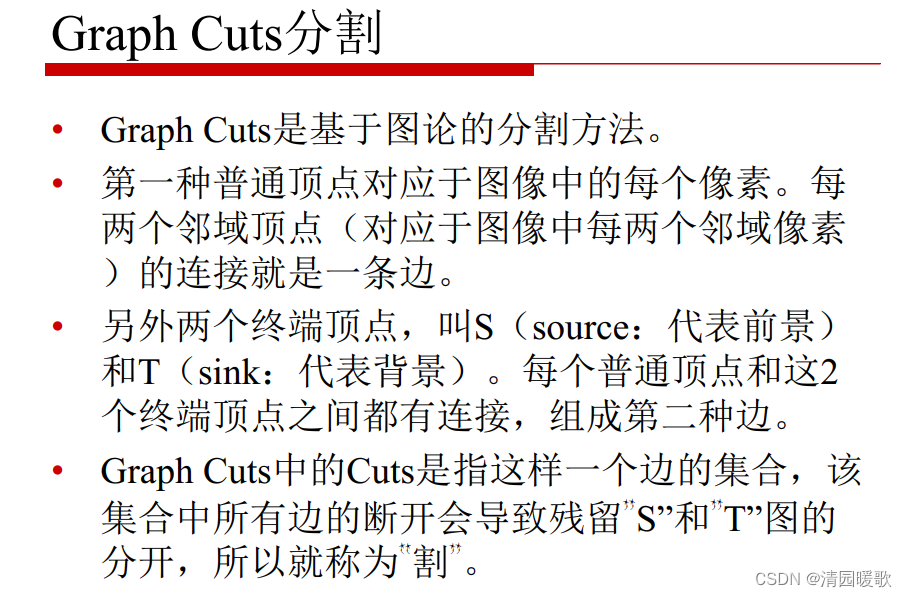

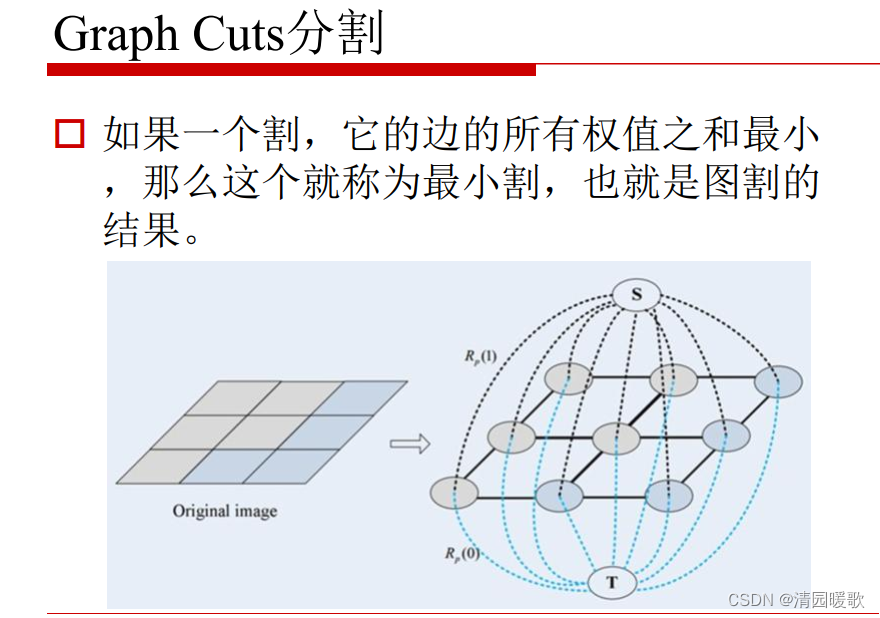

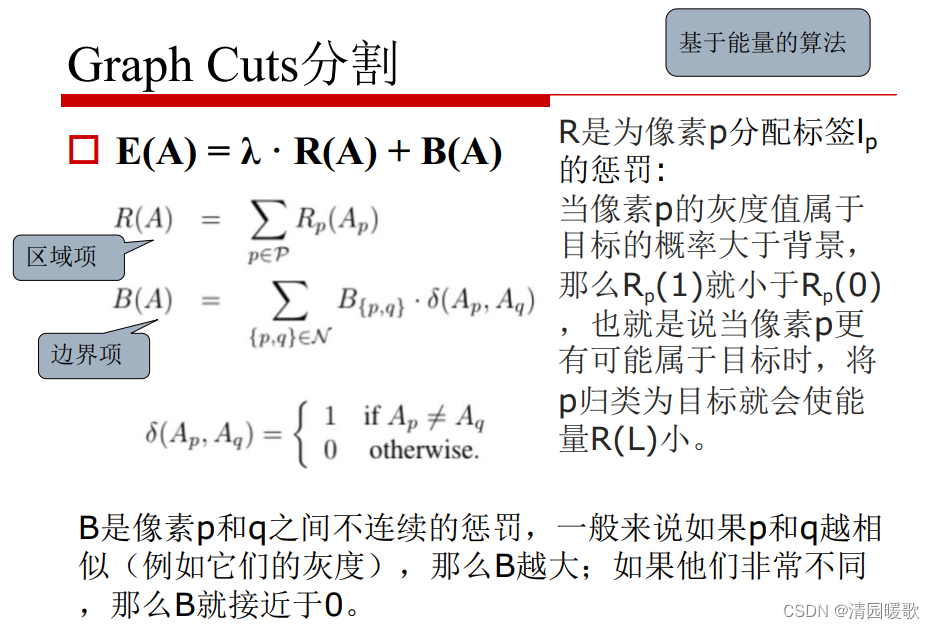

(1) Graph Cuts segmentation

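R_p is the regional term: it expresses whether a pixel p is more likely to belong to the foreground or to the background.

B is the boundary term: it expresses whether neighboring pixels are similar, so that a cut between similar, continuous pixels is penalized.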
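For reference, the two terms are usually combined into a single energy that the cut minimizes. The form below is the standard Boykov-Jolly formulation, written here as an assumption about what the figures show; the symbols $A$ (the labeling), $\lambda$ (the weight between the two terms), $P$ (the set of pixels), $N$ (the set of neighboring pixel pairs), and $\delta$ are not named in these notes.

$$
E(A) = \lambda \sum_{p \in P} R_p(A_p) + \sum_{\{p,q\} \in N} B_{p,q}\,\delta(A_p \neq A_q),
\qquad
\delta(A_p \neq A_q) =
\begin{cases}
1 & \text{if } A_p \neq A_q \\
0 & \text{otherwise}
\end{cases}
$$

In this form $R_p(A_p)$ is a penalty for giving pixel $p$ the label $A_p$ (small when the label is likely), and $B_{p,q}$ is large when $p$ and $q$ are similar, so separating similar neighbors is expensive.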

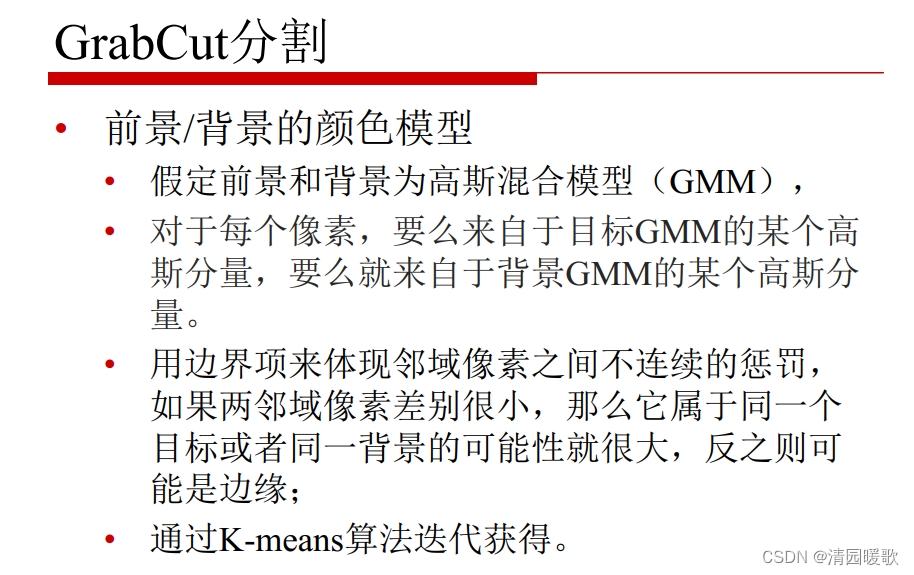

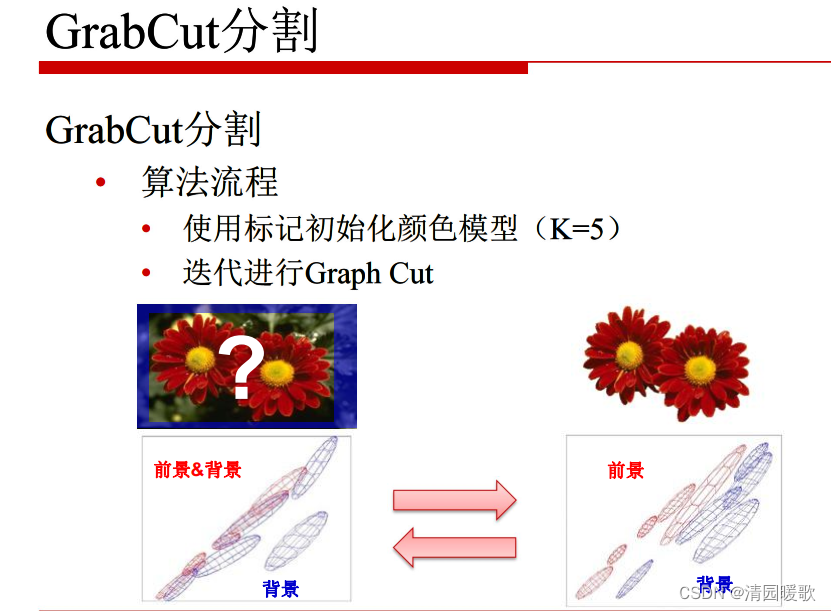

(2) GrabCut segmentation

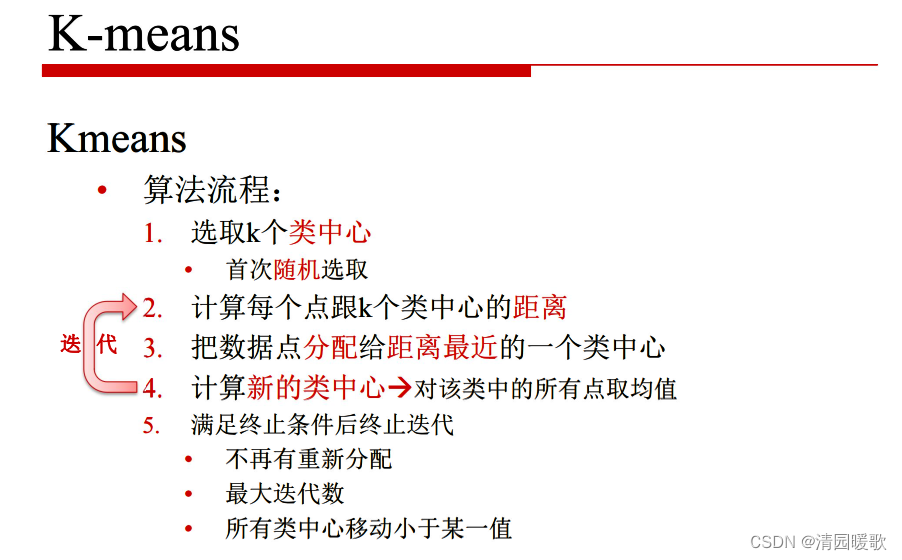

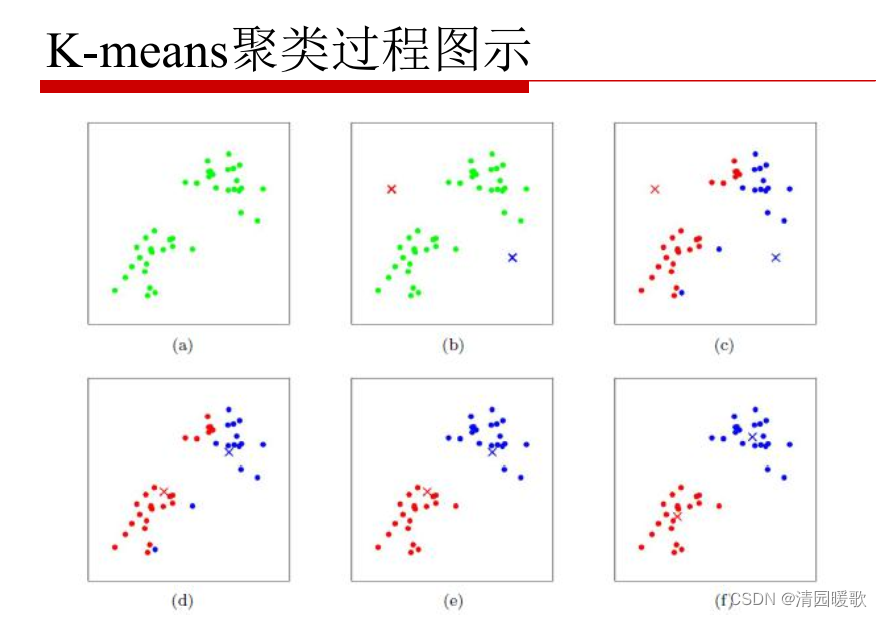

That is, the box drawn by the user contains a mixture of background and foreground colors, and this color distribution is modeled by finding several cluster centers. Everything outside the box is background, and the background is also modeled with several cluster centers of its own. All that remains is to iterate: during the iterations, the colors inside the box that actually belong to the background are gradually reassigned to the cluster centers from outside the box, until the colors left inside the box belong only to the foreground.
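The iteration described above can be illustrated with a deliberately simplified toy. It is not GrabCut itself: it assumes scalar "colors", uses a single cluster center per class instead of Gaussian mixture models, and skips the graph-cut smoothing step entirely. The function name `toy_grabcut_1d` and its parameters are hypothetical.

```python
def toy_grabcut_1d(values, in_box, iterations=10):
    """Toy version of the box-based iteration on scalar 'colors'.

    values: list of numbers (one per pixel).
    in_box: list of booleans, True for pixels inside the user-drawn box.
    Returns a list of booleans, True where the pixel is kept as foreground.
    """
    # Pixels outside the box are fixed background; inside starts as foreground.
    is_fg = list(in_box)
    for _ in range(iterations):
        fg = [v for v, f in zip(values, is_fg) if f]
        bg = [v for v, f in zip(values, is_fg) if not f]
        if not fg or not bg:
            break
        fg_center = sum(fg) / len(fg)
        bg_center = sum(bg) / len(bg)
        # Only pixels inside the box may be foreground; each goes to the nearer center.
        new_fg = [
            inside and abs(v - fg_center) < abs(v - bg_center)
            for v, inside in zip(values, in_box)
        ]
        if new_fg == is_fg:
            break  # labels stopped changing
        is_fg = new_fg
    return is_fg


# Usage: a bright object (about 200) inside a box that also covers dark background.
values = [10, 12, 11, 13, 200, 210, 205, 12, 10, 11]
in_box = [False, False, True, True, True, True, True, True, False, False]
mask = toy_grabcut_1d(values, in_box)
```

The dark pixels inside the box drift to the background center learned from outside the box, which is the behavior the paragraph describes.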

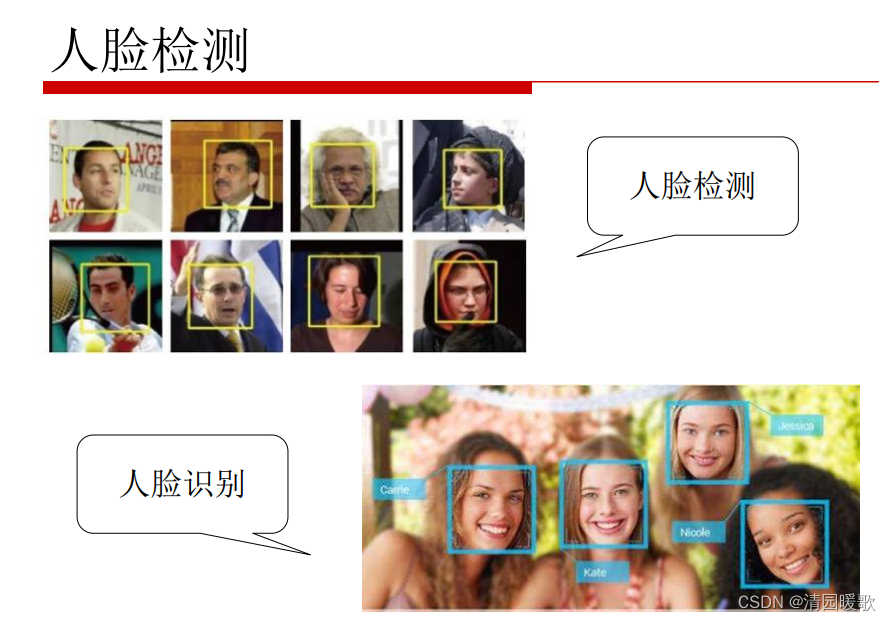

4.2 Face detection

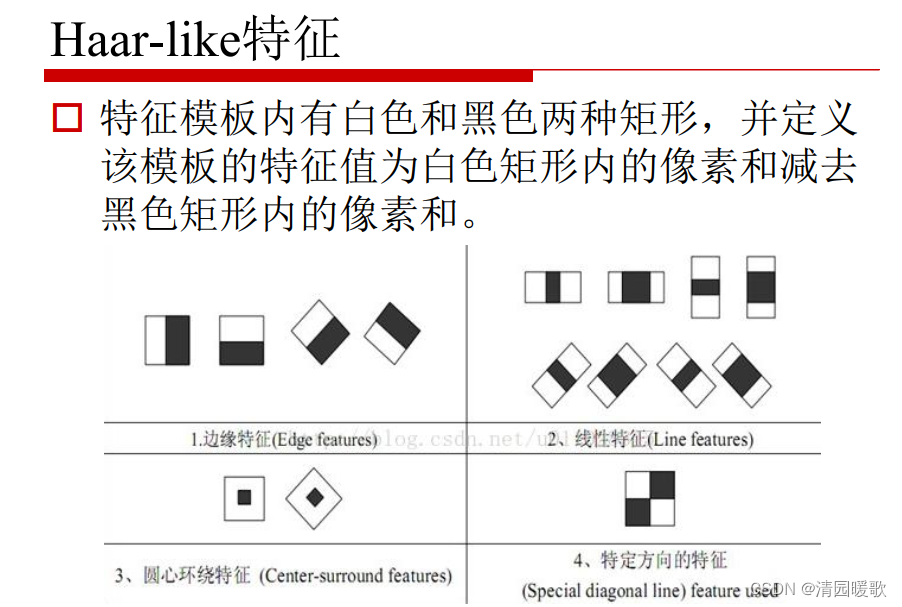

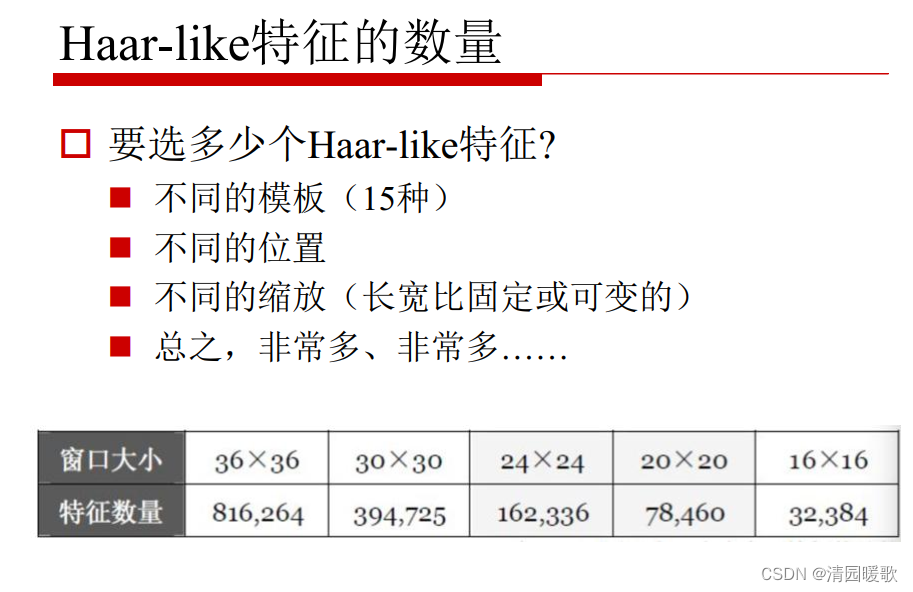

4.2.1 Haar-like features

4.2.2 Haar cascade classifier

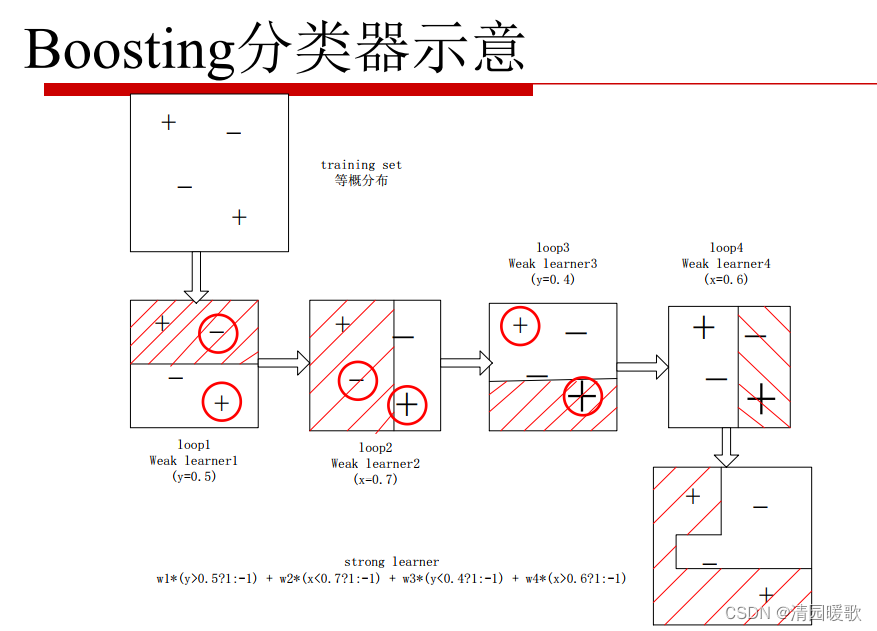

Currently, among traditional (non-deep-learning) models, XGBoost is one of the few that can still compete with deep learning models.

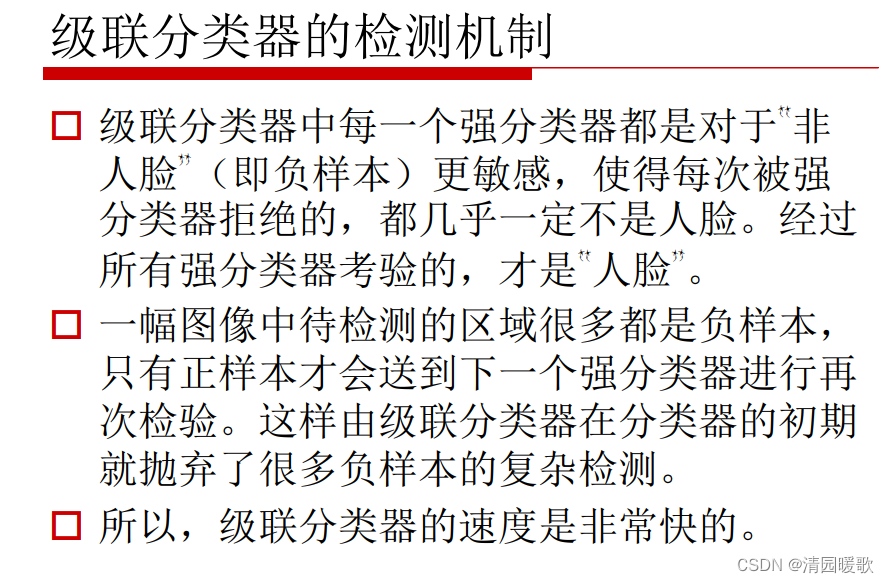

The cascade works because each classifier is deliberately biased. It treats positive and suspected positive examples leniently and passes them along without much scrutiny, but it makes a confident judgment on clear negative examples. In other words, whatever a classifier throws away is definitely not a face (not a positive example), and whatever remains is still undecided. This process is repeated stage after stage, with a different classifier at each stage, and the examples that remain at the end are the true positives.
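The early-rejection logic can be sketched as a loop over stages. This is a minimal illustration, assuming each stage is a function that returns `False` only when it is confident the window is not a face; the two example stages are made-up placeholders, not real Haar stage classifiers.

```python
def run_cascade(window, stages):
    """Return True only if every stage lets the window through.

    Each stage maps a window to a bool: False means 'confidently not a face',
    True means 'possibly a face, keep checking'.
    """
    for stage in stages:
        if not stage(window):
            return False  # rejected early; later stages never run
    return True


# Hypothetical stages operating on a flat list of pixel intensities.
stages = [
    lambda w: max(w) - min(w) > 20,                          # reject flat patches
    lambda w: sum(w[:len(w) // 2]) < sum(w[len(w) // 2:]),   # first half darker
]

flat_patch = [5, 5, 5, 5]
candidate = [10, 20, 80, 90]
is_candidate_kept = run_cascade(candidate, stages)
is_flat_kept = run_cascade(flat_patch, stages)
```

Because most windows in an image are rejected by the first cheap stages, the expensive later stages run on only a small fraction of them.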

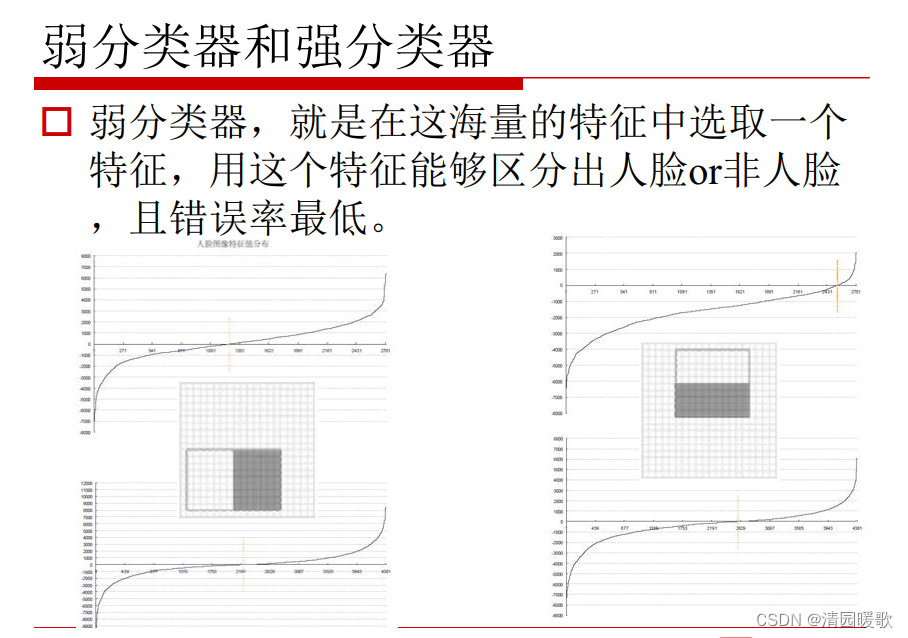

How weak can a weak classifier be? For the left-right black-and-white feature shown on the left, the response map for faces is not much different from the response map for non-faces; the feature on the right does show a usable difference.
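To make the response of a left-right feature concrete, here is a small sketch of a two-rectangle Haar-like feature computed with an integral image. The integral image is the standard way to evaluate such features quickly, but it is not described in these notes, so treat it as an assumption; all function names are illustrative.

```python
def integral_image(img):
    """Return ii where ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the rectangle with top-left corner (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_left_right(ii, x, y, w, h):
    """Two-rectangle feature: sum of the right half minus sum of the left half."""
    half = w // 2
    left = rect_sum(ii, x, y, half, h)
    right = rect_sum(ii, x + half, y, half, h)
    return right - left


# Usage: a patch that is dark on the left and bright on the right.
patch = [[1, 1, 9, 9],
         [1, 1, 9, 9]]
response = haar_left_right(integral_image(patch), 0, 0, 4, 2)
```

A weak classifier then simply thresholds this response; a feature whose responses on faces and non-faces overlap heavily gives a classifier that is only slightly better than chance.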

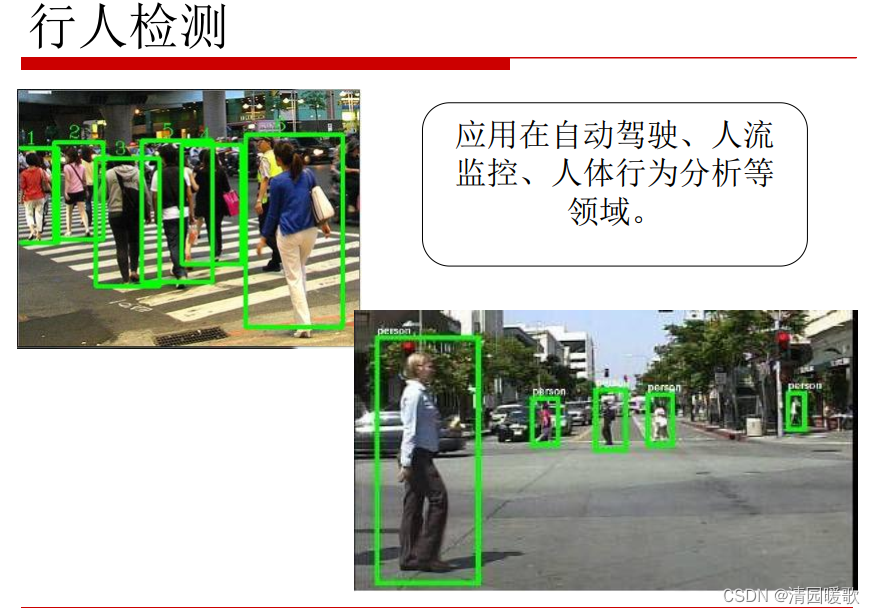

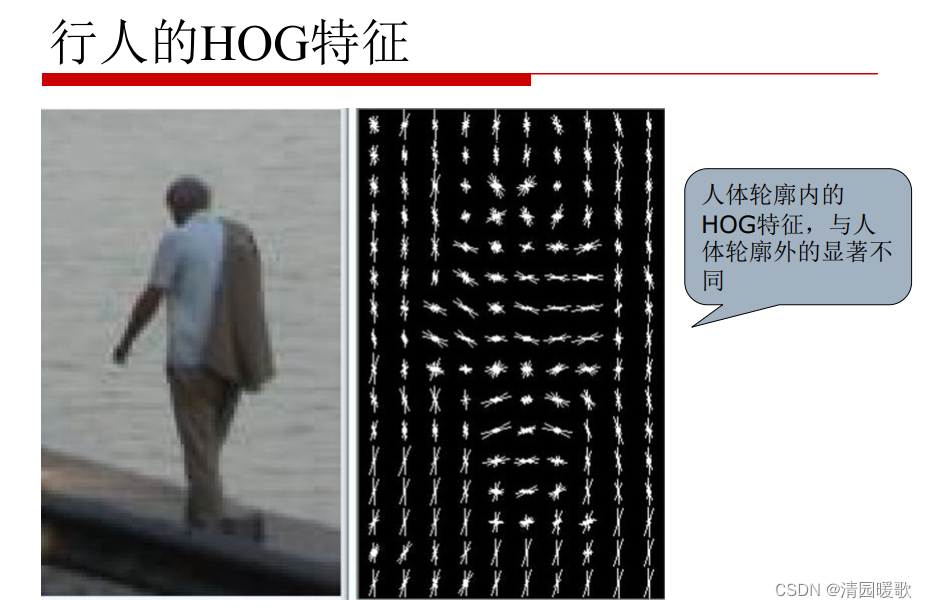

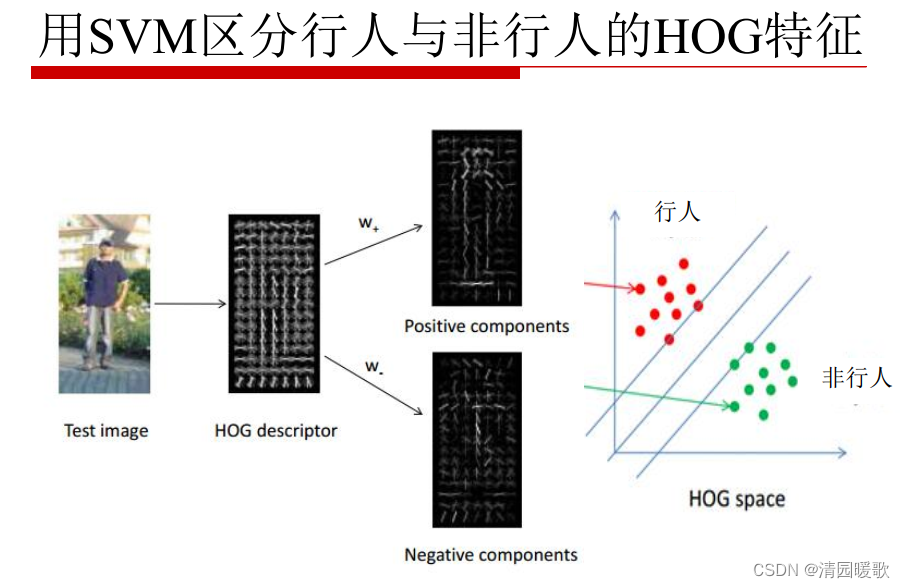

4.3 Pedestrian detection

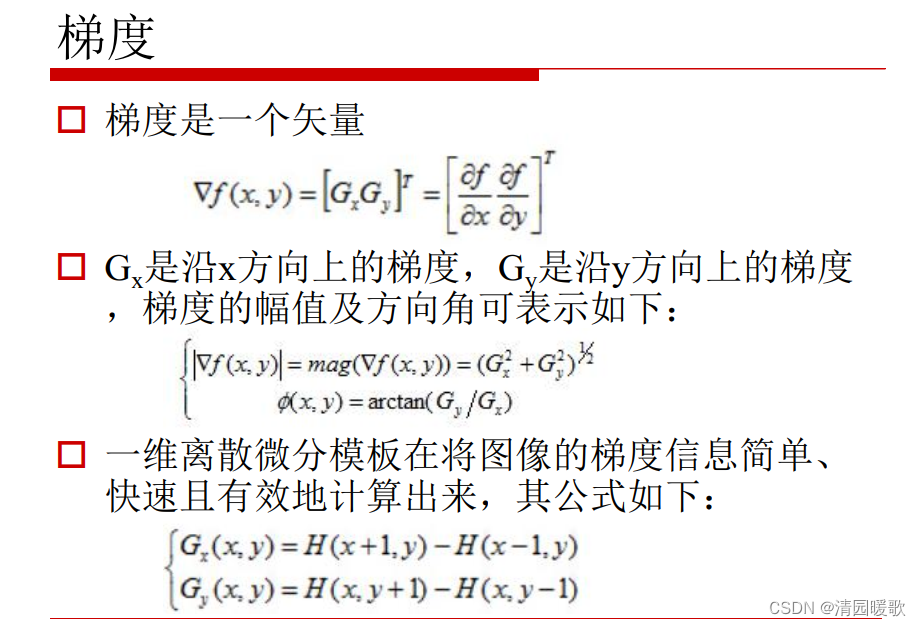

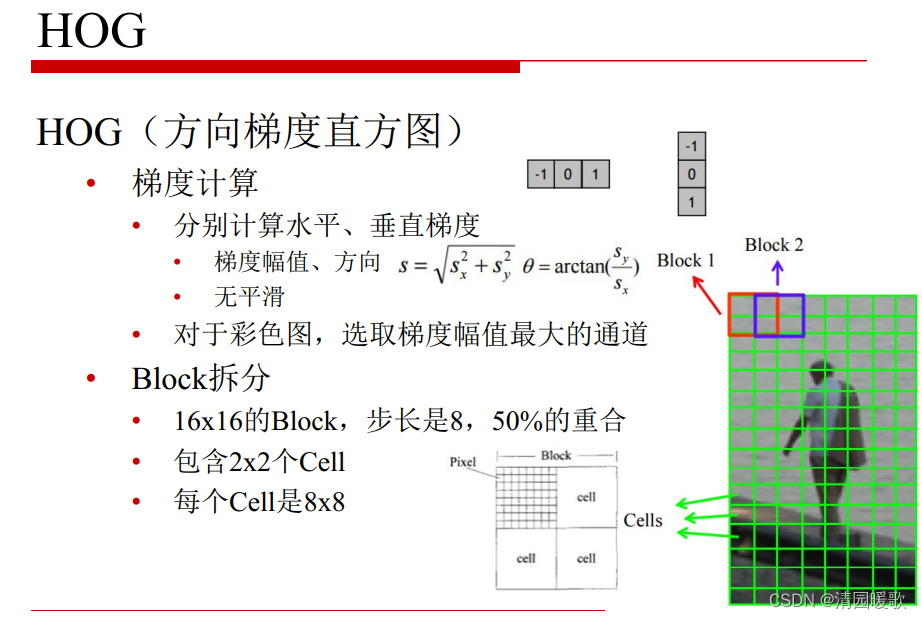

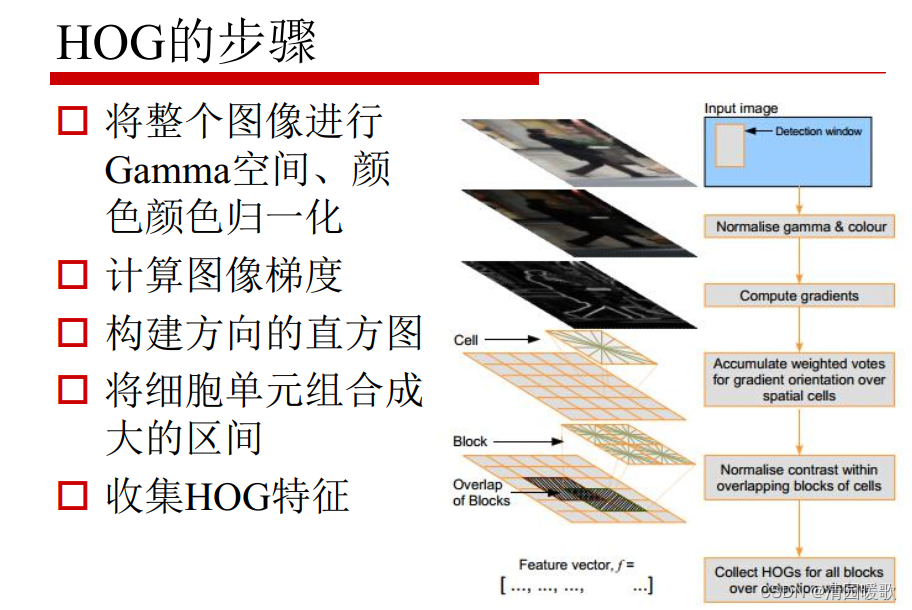

4.3.1 HOG

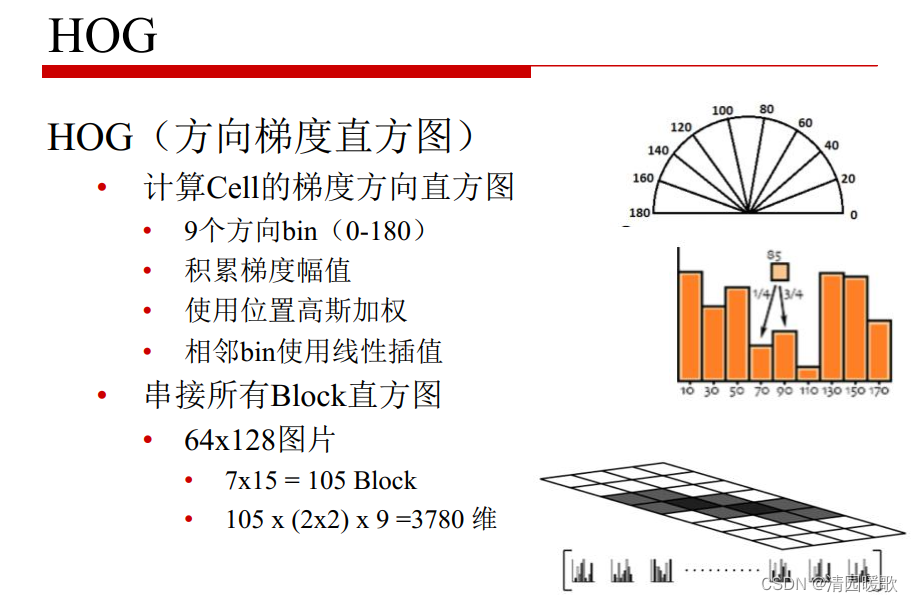

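Gradient orientations are counted in bins that are 20 degrees wide. A gradient at 85 degrees falls between the bins at 70 and 90 degrees, so its vote is split between them by interpolation: it is 15 degrees away from 70 and 5 degrees away from 90, so the 90-degree bin receives the larger share.

The 9 bins represent gradient orientation without direction (sign), that is, they cover 0 to 180 degrees.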
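The interpolated voting can be sketched as follows. This is a minimal illustration, assuming 9 unsigned bins over 0 to 180 degrees with centers at 10, 30, ..., 170 degrees and linear interpolation between the two nearest centers; the function name `hog_vote` is chosen for this example.

```python
import math


def hog_vote(angle_deg, magnitude, n_bins=9):
    """Split one gradient's magnitude between its two nearest orientation bins.

    Returns a histogram (list of n_bins floats) containing just this vote.
    """
    bin_width = 180.0 / n_bins              # 20 degrees for 9 bins
    hist = [0.0] * n_bins
    angle = angle_deg % 180.0               # unsigned: opposite directions coincide
    pos = angle / bin_width - 0.5           # position measured in bins from the first center
    lower = math.floor(pos)                 # index of the bin center at or below the angle
    frac = pos - lower                      # 0 at the lower center, 1 at the upper center
    hist[lower % n_bins] += magnitude * (1.0 - frac)
    hist[(lower + 1) % n_bins] += magnitude * frac   # wraps around at 180 degrees
    return hist


# Usage: the 85-degree example, with a gradient magnitude of 20.
votes = hog_vote(85, 20)
# Bin 3 is centered at 70 degrees, bin 4 at 90 degrees.
share_70, share_90 = votes[3], votes[4]
```

With a magnitude of 20, the bin at 70 degrees receives 5 and the bin at 90 degrees receives 15, since the closer center gets the larger share.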

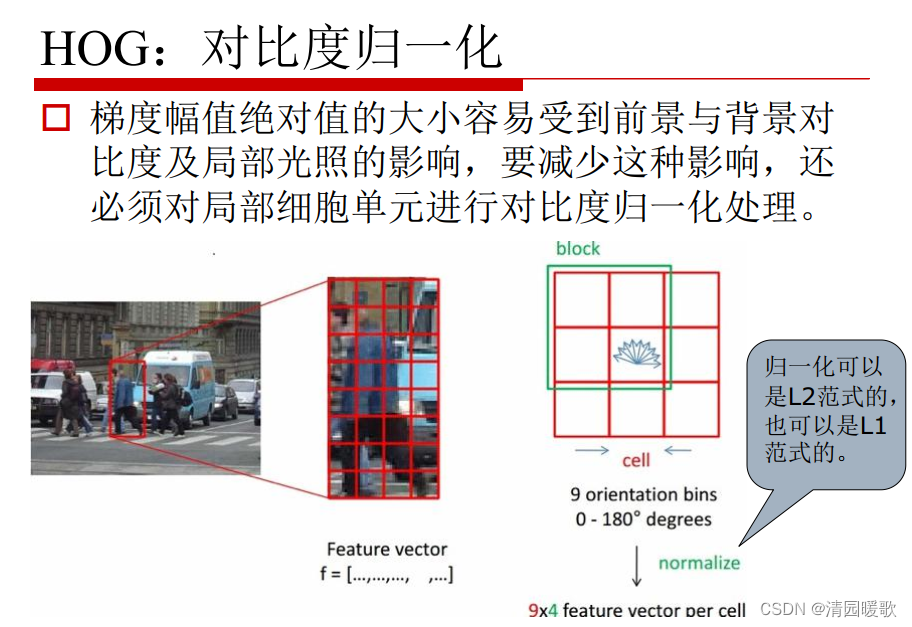

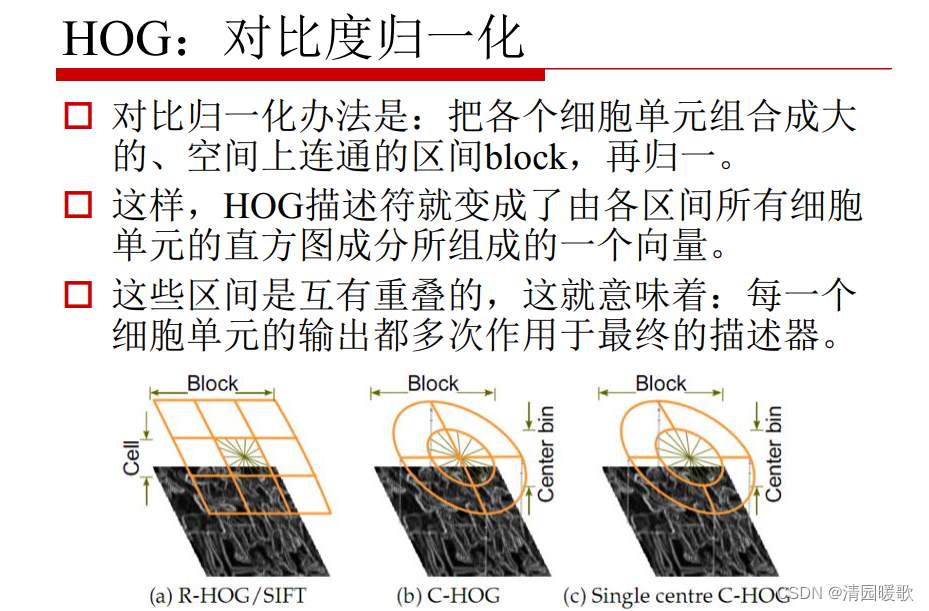

Normalizing with the L2 norm may work better.
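As a small illustration of L2 normalization, the sketch below divides a feature vector by its L2 norm. It assumes the note refers to normalizing a block of HOG histogram values; the `eps` term, which avoids division by zero on empty blocks, and the function name are assumptions of this example.

```python
import math


def l2_normalize(block, eps=1e-6):
    """Divide every value in the block by the block's L2 norm."""
    norm = math.sqrt(sum(v * v for v in block) + eps ** 2)
    return [v / norm for v in block]


# Usage: the normalized vector has (almost exactly) unit length.
normalized = l2_normalize([3.0, 4.0])
```

After normalization the descriptor depends on the relative strengths of the gradients rather than on overall contrast or brightness.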

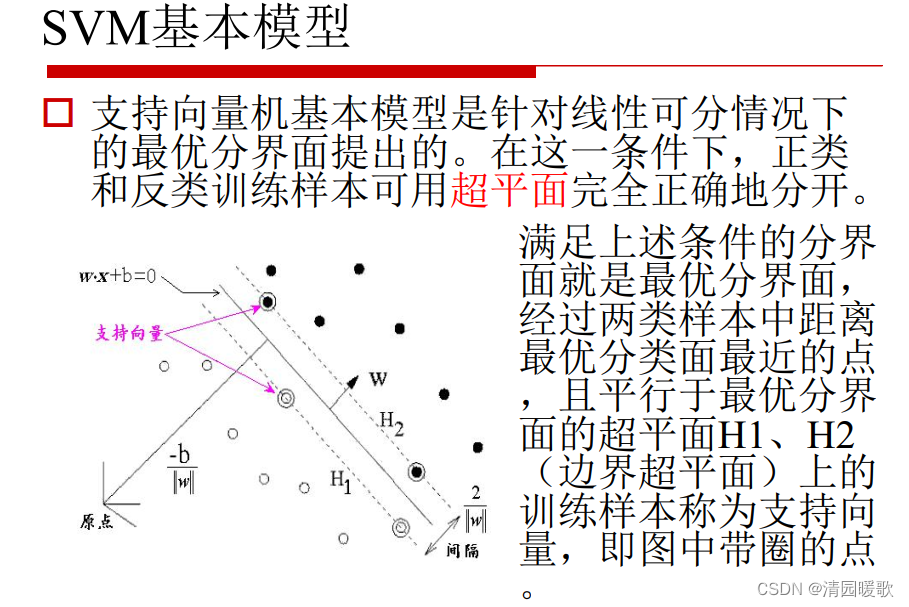

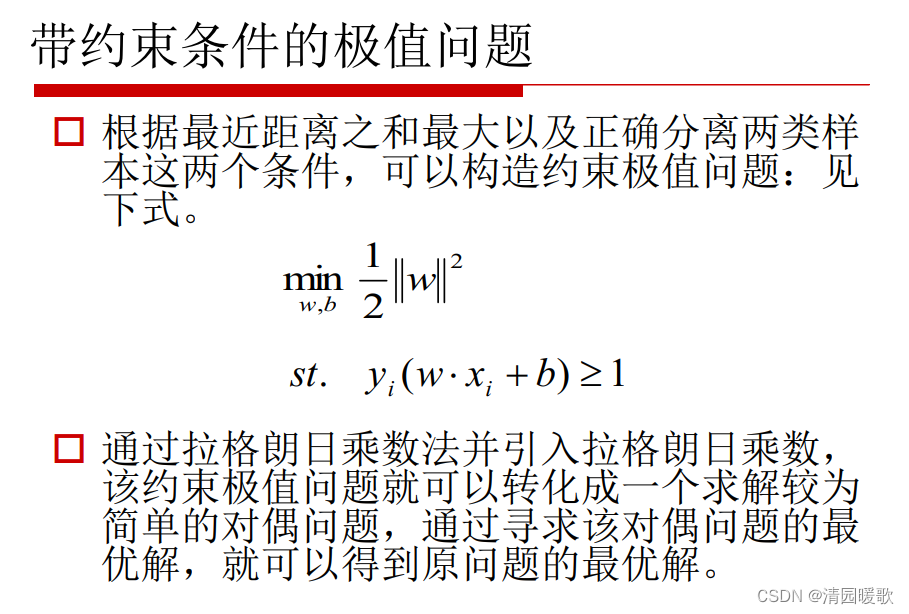

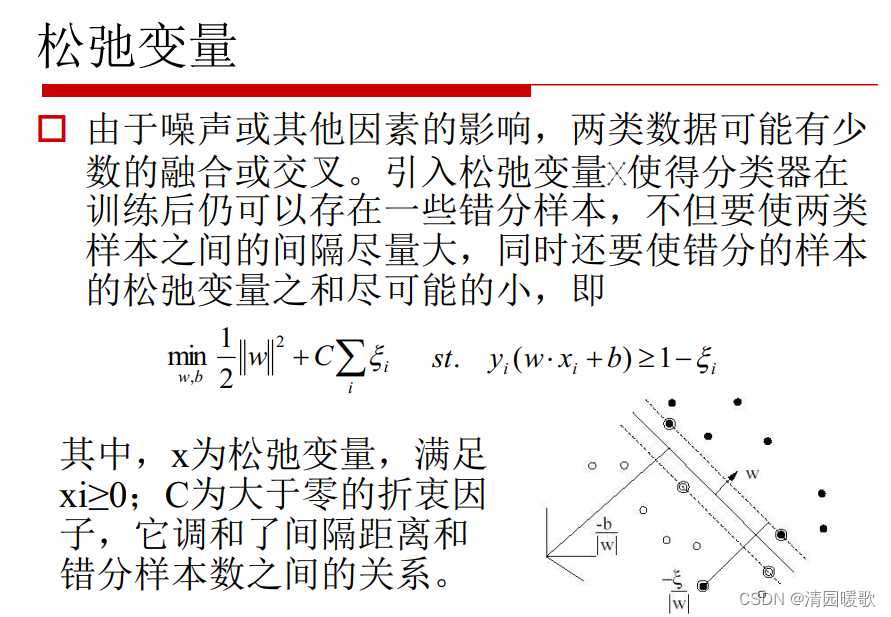

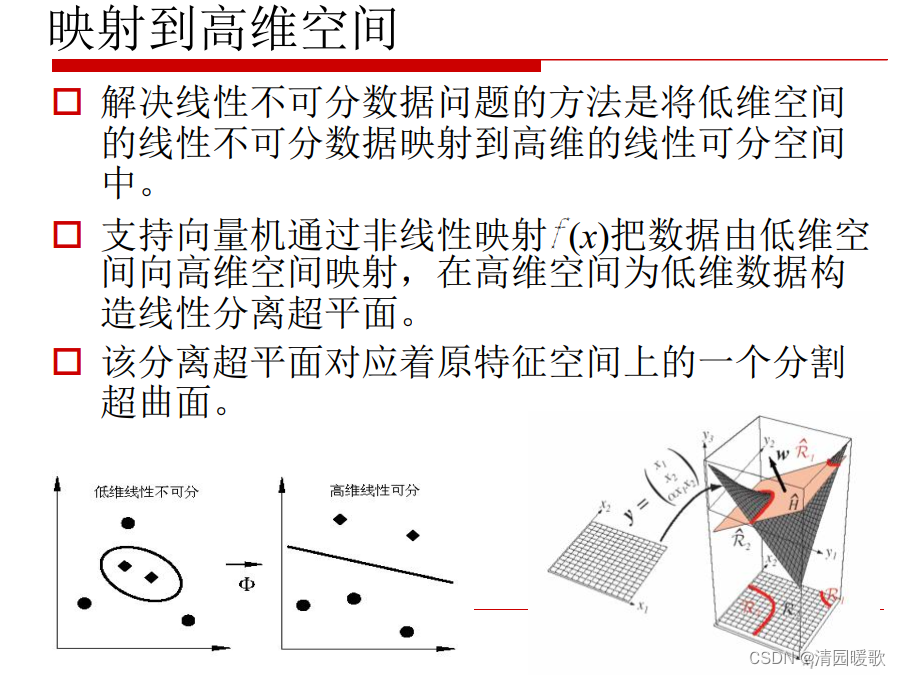

4.3.2 SVM

An SVM finds a dividing boundary between two classes of samples. Among all boundaries that separate the two classes, it chooses the one for which the margin, the distance between the two parallel boundaries passing through the closest samples of each class, is as large as possible.
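In symbols, the maximum-margin idea is usually written as the following optimization problem. This is the standard hard-margin formulation for linearly separable data, given here as a reference; the symbols $w$, $b$, $x_i$, $y_i$, and $n$ are not defined in these notes and are introduced only for this sketch, with labels $y_i \in \{-1, +1\}$.

$$
\min_{w,\,b} \ \frac{1}{2}\lVert w \rVert^2
\quad \text{subject to} \quad
y_i \left( w^\top x_i + b \right) \ge 1, \qquad i = 1, \dots, n
$$

The two boundary hyperplanes are $w^\top x + b = \pm 1$, and the distance between them is $2 / \lVert w \rVert$, so minimizing $\lVert w \rVert$ maximizes the margin.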

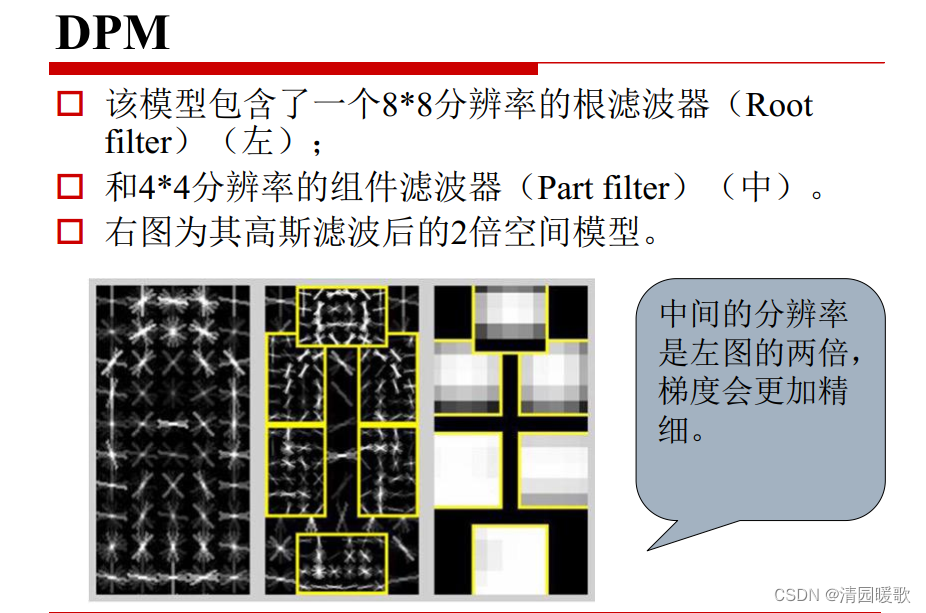

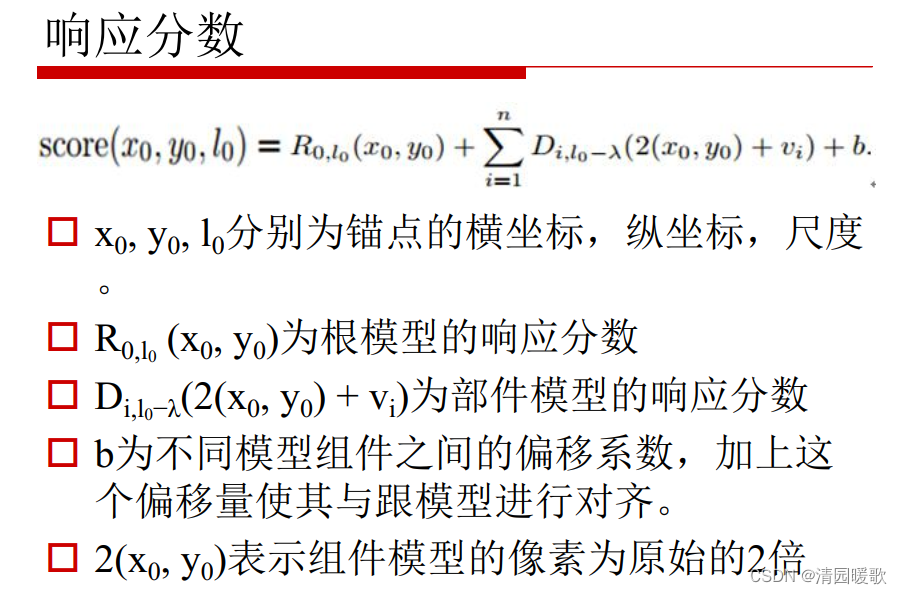

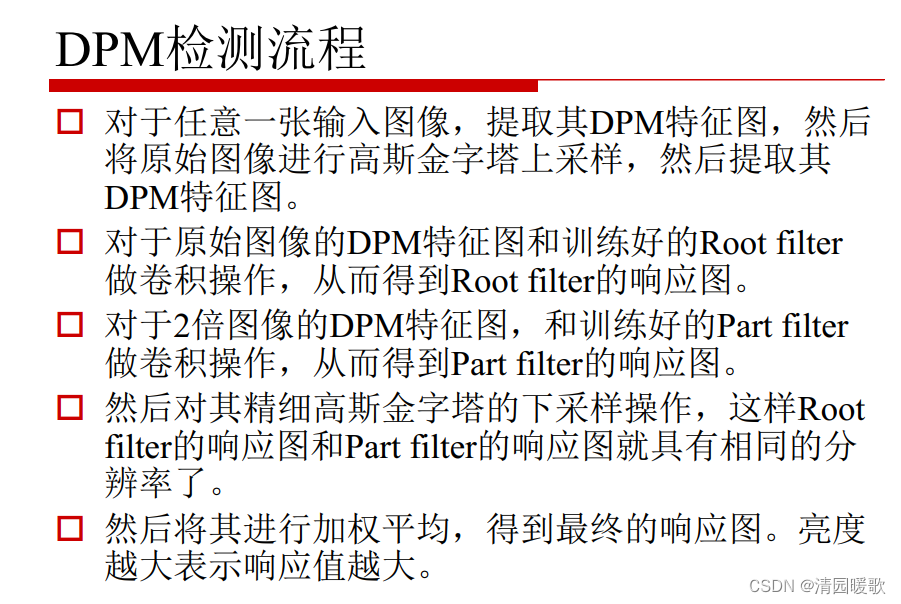

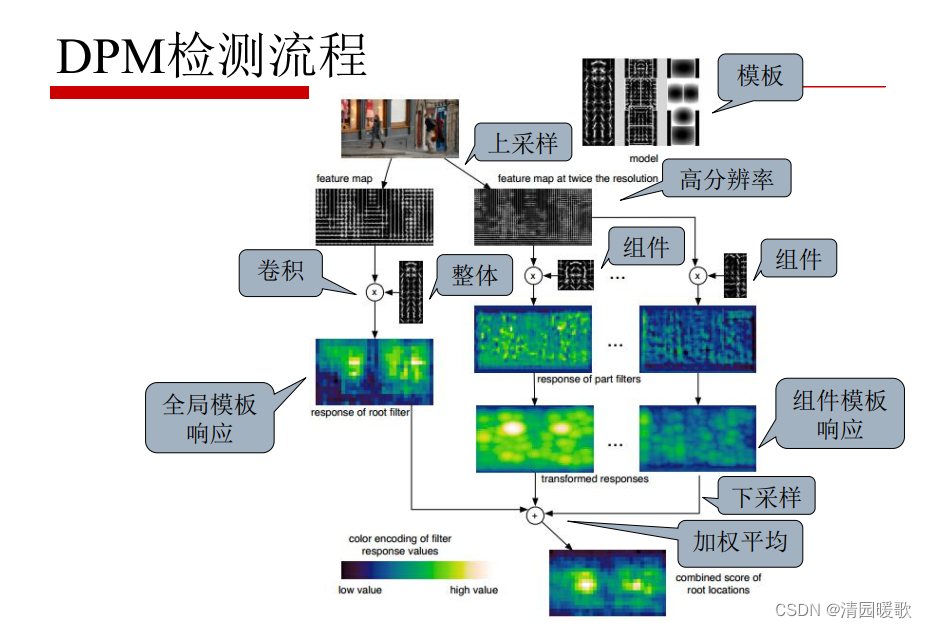

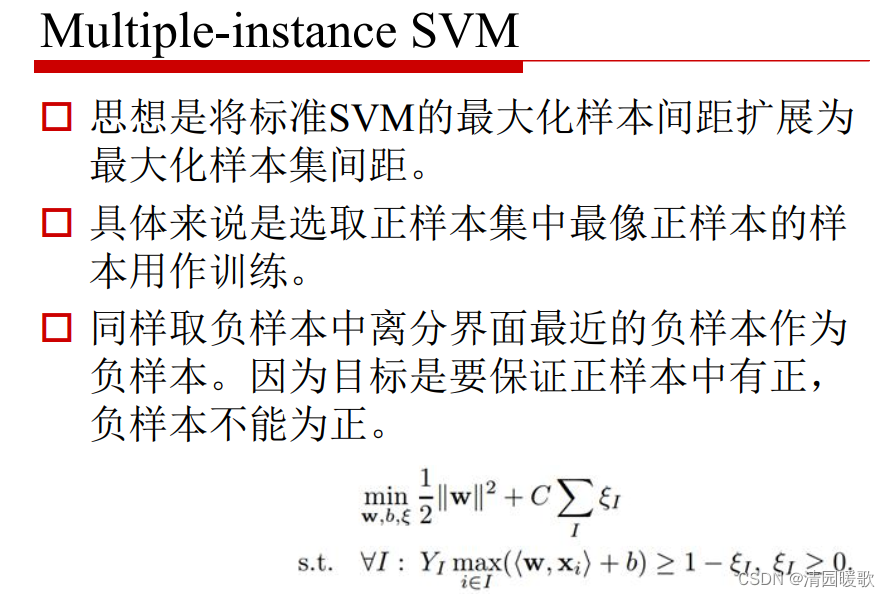

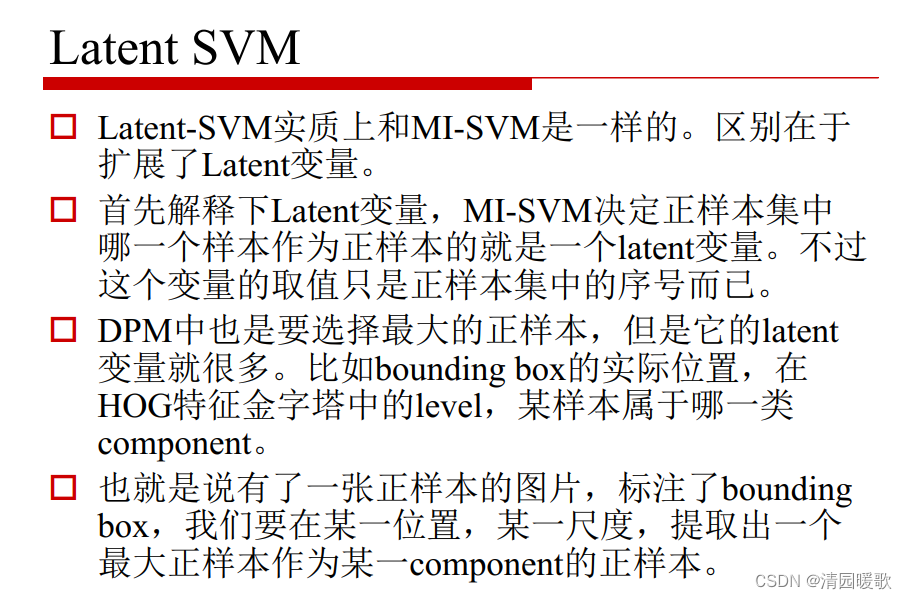

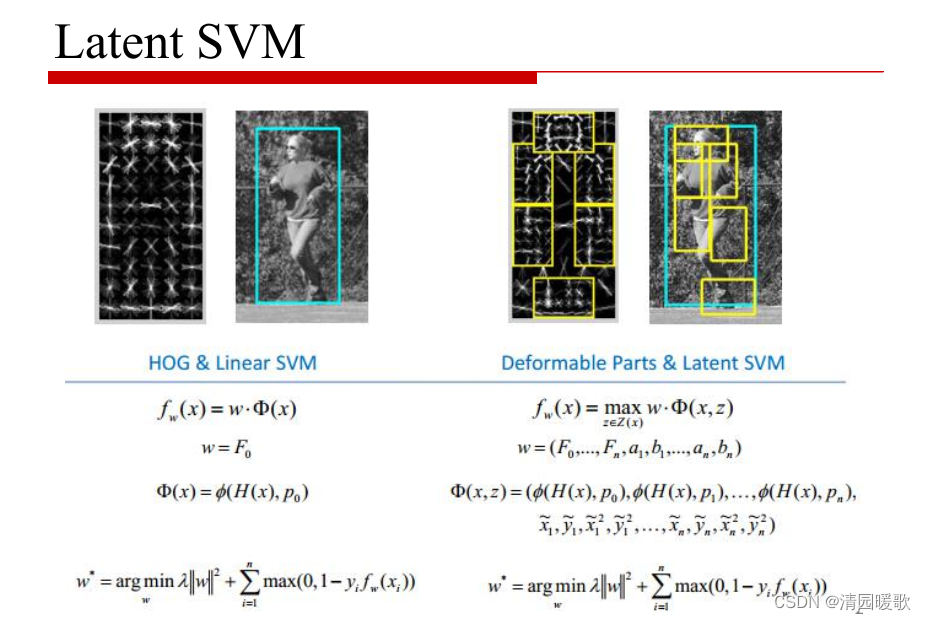

4.3.3 DPM