1. Introduction

How do you build the container images that Kubernetes runs? The usual answer is Docker, and Docker is indeed the first choice for most people. When we use Jenkins for CI/CD, we typically deploy Jenkins on a physical machine and build images with that machine's docker build command. In a Jenkins-on-Kubernetes setup, however, both the Jenkins master and the Jenkins agents (slaves) run as Pods on the cluster nodes. The build environment is a Pod, and it has no docker command. In addition, Kubernetes removed dockershim in v1.24, so clusters now typically use containerd (or another CRI runtime) and Docker Engine is usually not installed on the nodes, which means we cannot rely on mounting /var/run/docker.sock from the host. We need another way to build container images inside a Kubernetes cluster. This article introduces one such tool: Kaniko.

2. What is Kaniko?

GitHub: https://github.com/GoogleContainerTools/kaniko

Kaniko is an open source tool from Google for building container images in Kubernetes. Like Docker, it builds an image from a Dockerfile; the main difference is that Kaniko runs inside a container, so it can run inside a Kubernetes cluster. It does not need privileged mode and does not need a Docker socket to be exposed. Docker does not have to be installed on the cluster nodes, so it does not matter which container runtime the cluster uses. Because builds run in ordinary containers and are decoupled from Docker on the host, they are easier to automate in Kubernetes and more secure. Its features are as follows:

- Builds images inside a container, without a Docker-in-Docker setup
- Does not depend on the Docker daemon
- Does not require privileged permissions, so builds are safer
- Runs with a single command
- Builds from a standard Dockerfile

Now, let's explore Kaniko step by step.

3. Using Kaniko in Kubernetes

Kaniko runs as a container and needs three parameters: the Dockerfile, the build context, and the address of the remote image registry to push to.

How it works:

- Read and parse the specified Dockerfile
- Extract the file system of the base image (the FROM image in the Dockerfile)
- Run each instruction in the Dockerfile, one at a time and in order
- Take a snapshot of the userspace file system after each instruction
- Append the changed files as a new layer on top of the base layer and update the image metadata
- Finally, push the image to the registry
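The snapshot-per-instruction idea can be illustrated with a small toy model. This is only a sketch, not how kaniko is implemented: the file system is modelled as a Python dict, each Dockerfile instruction as a function, and the names diff, build, and flatten are made up for this example. File deletions are ignored to keep it short.

```python
from typing import Callable, Dict, List

FileSystem = Dict[str, str]             # path -> file content
Command = Callable[[FileSystem], None]  # a Dockerfile instruction, modelled as a function


def diff(before: FileSystem, after: FileSystem) -> FileSystem:
    """Return the files that were added or changed between two snapshots."""
    return {path: data for path, data in after.items() if before.get(path) != data}


def build(base: FileSystem, commands: List[Command]) -> List[FileSystem]:
    """Run each command in order and record one layer (a diff) per command."""
    fs = dict(base)        # "extract" the base image file system
    layers = [dict(base)]  # the base image is the first layer
    for run in commands:
        before = dict(fs)  # snapshot before the command
        run(fs)            # execute the command against the file system
        layers.append(diff(before, fs))  # changed files become a new layer
    return layers


def flatten(layers: List[FileSystem]) -> FileSystem:
    """Apply the layers in order to reconstruct the final file system."""
    result: FileSystem = {}
    for layer in layers:
        result.update(layer)
    return result


base_image = {"/etc/os-release": "ubuntu"}
steps = [
    lambda fs: fs.update({"/app/hello.sh": "echo hello"}),
    lambda fs: fs.update({"/etc/os-release": "ubuntu (patched)"}),
]
layers = build(base_image, steps)
print(len(layers))      # base layer plus one layer per command
print(flatten(layers))
```

Each layer holds only what its instruction changed, which is why an instruction that touches nothing on disk (such as ENTRYPOINT) only updates the image metadata.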

Prerequisites:

- A running Kubernetes cluster
- A Kubernetes Secret that contains the credentials needed to push to the registry
- A Dockerfile to build

3.1 Create Dockerfile

Write a Dockerfile and store it in a ConfigMap in the cluster:

    [root@localhost ~]# cat Dockerfile
    FROM ubuntu
    ENTRYPOINT ["/bin/bash", "-c", "echo hello"]
    [root@localhost ~]# kubectl create configmap kaniko-dockerfile --from-file=./Dockerfile
    configmap/kaniko-dockerfile created

3.2 Create the Secret for the image registry credentials

To let kaniko push to the Alibaba Cloud image registry, create a credential: on a machine with Docker installed, log in to the registry.

    [root@localhost ~]# docker login --username=xxx registry.cn-shanghai.aliyuncs.com
    Password:
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded

A successful login generates a config.json file, which is used to create the Secret for the kaniko container. The file is written to $HOME/.docker/config.json of the user who ran docker login:

- As root (the CentOS environment used here): /root/.docker/config.json
- As the ubuntu user in an Ubuntu environment: /home/ubuntu/.docker/config.json
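For reference, when no credential helper is configured, the config.json written by docker login stores the credentials under an auths key as a base64-encoded username:password pair. The sketch below builds an equivalent file in Python; the function name docker_config and the password value are placeholders invented for this example.

```python
import base64
import json


def docker_config(registry: str, username: str, password: str) -> str:
    """Return the text of a Docker config.json with basic-auth credentials."""
    # The "auth" field is base64("username:password").
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return json.dumps({"auths": {registry: {"auth": token}}}, indent=2)


# Placeholder credentials, for illustration only.
config_text = docker_config("registry.cn-shanghai.aliyuncs.com", "xxx", "example-password")
print(config_text)
```

Because the value is only encoded, not encrypted, anyone who can read the file or the Secret can recover the password.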

    [root@localhost ~]# kubectl create secret generic kaniko-secret --from-file=/root/.docker/config.json
    secret/kaniko-secret created

3.3 Start the kaniko container to build the image

kaniko_build_image.yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: kaniko
    spec:
      # Run the build once; do not restart the container after it exits
      restartPolicy: Never
      containers:
      - name: kaniko
        image: gcr.io/kaniko-project/executor:latest
        args: ["--dockerfile=/workspace/Dockerfile",
               "--context=dir://workspace",
               "--destination=registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0"] # replace with your own registry address
        volumeMounts:
        # Registry credentials (config.json) from the Secret
        - name: kaniko-secret
          mountPath: /kaniko/.docker
        # Dockerfile from the ConfigMap
        - name: dockerfile
          mountPath: /workspace
      volumes:
      - name: kaniko-secret
        secret:
          secretName: kaniko-secret
          items:
          - key: config.json
            path: config.json
      - name: dockerfile
        configMap:
          name: kaniko-dockerfile

- args: as mentioned above, kaniko needs three parameters when it runs: the Dockerfile (--dockerfile), the build context (--context), and the remote image registry destination (--destination).
- secret: pushing to the remote image registry requires authentication, so the Secret is mounted at /kaniko/.docker/ with the file name config.json.
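If the destination changes from build to build, the same manifest can be generated instead of edited by hand. The following is a minimal sketch under a few assumptions: the function name kaniko_pod is invented here, the Secret and ConfigMap names are the ones created above, and restartPolicy is set to Never so the Pod runs the build once.

```python
import json
from typing import Any, Dict


def kaniko_pod(destination: str,
               name: str = "kaniko",
               secret_name: str = "kaniko-secret",
               configmap_name: str = "kaniko-dockerfile") -> Dict[str, Any]:
    """Return a Pod manifest for a one-off kaniko build."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Assumption: run the build once instead of restarting the container.
            "restartPolicy": "Never",
            "containers": [{
                "name": "kaniko",
                "image": "gcr.io/kaniko-project/executor:latest",
                "args": [
                    "--dockerfile=/workspace/Dockerfile",
                    "--context=dir://workspace",
                    f"--destination={destination}",
                ],
                "volumeMounts": [
                    {"name": "kaniko-secret", "mountPath": "/kaniko/.docker"},
                    {"name": "dockerfile", "mountPath": "/workspace"},
                ],
            }],
            "volumes": [
                {"name": "kaniko-secret",
                 "secret": {"secretName": secret_name,
                            "items": [{"key": "config.json", "path": "config.json"}]}},
                {"name": "dockerfile", "configMap": {"name": configmap_name}},
            ],
        },
    }


manifest = kaniko_pod("registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0")
print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON as well as YAML, so the printed output can be saved to a file and applied with kubectl apply -f.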

Create the pod, check the status, and see if the log is completed normally.

[root@localhost ~]# kubectl apply -f kaniko_build_image.yaml

pod/kaniko created[root@localhost ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

kaniko 1/1 Running 0 3s

[root@localhost ~]# kubectl logs -f kaniko

INFO[0000] Retrieving image manifest ubuntu

INFO[0000] Retrieving image ubuntu from registry index.docker.io

INFO[0003] Built cross stage deps: map[]

INFO[0003] Retrieving image manifest ubuntu

INFO[0003] Returning cached image manifest

INFO[0003] Executing 0 build triggers

INFO[0003] Building stage 'ubuntu' [idx: '0', base-idx: '-1']

INFO[0003] Skipping unpacking as no commands require it.

INFO[0003] ENTRYPOINT ["/bin/bash", "-c", "echo hello"]

INFO[0003] Pushing image to registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0

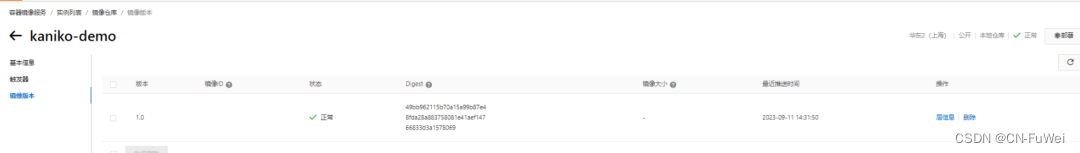

INFO[0004] Pushed registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo@sha256:d1855cc00550f9048c88b507626e0f24acf4c22e02856e006b2b9fdb0b80e567Check that the Alibaba Cloud image warehouse has been uploaded

Test that the image built by kaniko works:

    [root@localhost ~]# docker run -it --rm registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0
    Unable to find image 'registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0' locally
    1.0: Pulling from kubesre02/kaniko-demo
    445a6a12be2b: Pull complete
    Digest: sha256:49bb962115b70a15a99b87e48fda28a883758081e41aef14766833d3a1578069
    Status: Downloaded newer image for registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko-demo:1.0
    hello

If hello is printed, the build succeeded.

4. Customize a Kaniko image

The official kaniko image is built from scratch and has no shell, which makes it awkward to run shell commands in it, for example from a CI pipeline step. We can therefore build our own private kaniko image. The key files of kaniko are the binaries in the /kaniko directory of the officially recommended gcr.io/kaniko-project/executor image, so we can copy the executor from /kaniko into our own image. Dockerfile:

    FROM gcr.io/kaniko-project/executor:latest AS plugin
    FROM ubuntu
    ENV DOCKER_CONFIG /kaniko/.docker
    COPY --from=plugin /kaniko/executor /usr/local/bin/kaniko
    RUN mkdir -p /kaniko/.docker/
    COPY config.json /kaniko/.docker/

The above Dockerfile builds an Ubuntu-based image that contains the kaniko executor. Line by line:

- FROM gcr.io/kaniko-project/executor:latest AS plugin
  Start a temporary build stage from the gcr.io/kaniko-project/executor:latest image and name it plugin. This stage exists only as a source for the kaniko binary.
- FROM ubuntu
  Start the final image from the official Ubuntu base image.
- ENV DOCKER_CONFIG /kaniko/.docker
  Set the environment variable DOCKER_CONFIG to /kaniko/.docker, the Docker configuration directory that kaniko reads, so that kaniko can find the registry configuration.
- COPY --from=plugin /kaniko/executor /usr/local/bin/kaniko
  Copy the /kaniko/executor binary from the plugin stage to /usr/local/bin/kaniko in the new image, so that kaniko can be invoked there.
- RUN mkdir -p /kaniko/.docker/
  Create the /kaniko/.docker/ directory in the new image to hold the Docker configuration file.
- COPY config.json /kaniko/.docker/
  Copy the local config.json file into /kaniko/.docker/ in the new image. This is the file generated by docker login; it contains the registry authentication information that kaniko needs to push images.

The resulting image contains Ubuntu, the kaniko executor, and the Docker configuration that kaniko needs, so kaniko can build images inside the container with a shell available while keeping a degree of isolation and security. Build and upload the image:

    [root@localhost ~]# docker build -t registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko:latest .
    Sending build context to Docker daemon 212.1MB
    Step 1/6 : FROM gcr.io/kaniko-project/executor:latest AS plugin
    ---> 03375da0f864
    Step 2/6 : FROM ubuntu
    ---> aa786d622bb0
    Step 3/6 : ENV DOCKER_CONFIG /kaniko/.docker
    ---> Using cache
    ---> f7cd726aa130
    Step 4/6 : COPY --from=plugin /kaniko/executor /usr/local/bin/kaniko
    ---> Using cache
    ---> 8fcac536196f
    Step 5/6 : RUN mkdir -p /kaniko/.docker/
    ---> Using cache
    ---> 1afce75446a3
    Step 6/6 : COPY config.json /kaniko/.docker/
    ---> Using cache
    ---> 4a2873a75a7c
    Successfully built 4a2873a75a7c
    Successfully tagged registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko:latest
    [root@localhost ~]# docker push registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko:latest
    The push refers to repository [registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko]
    0cb4fb37580b: Pushed
    c9dbd3644e5a: Pushed
    33a2214b827c: Pushed
    c5077dd8160b: Pushed
    948c7f86fd48: Pushed
    403aab81b15b: Pushed
    7bff100f35cb: Pushed
    latest: digest: sha256:b5642885f1333a757625cbe36a9b1102aba27646f2572acab79861a74dba1050 size: 1783

5. More parameters of Kaniko

- --context (short form -c): path to the build context. The default is /workspace/.
- --dockerfile (short form -f): path to the Dockerfile to build. By default, kaniko looks for a file named Dockerfile.
- --destination (short form -d): the registry reference the built image is pushed to, which can include a tag, for example myregistry/myimage:tag.
- --cache: enable kaniko's layer cache with --cache=true. Caching is disabled by default.
- --cache-ttl: how long cached layers stay valid. For example, --cache-ttl=10h means a cached layer is used for up to 10 hours.
- --cache-repo: the remote repository used to store cached layers. If it is not set, the repository is inferred from --destination.
- --cache-dir: a local directory used as a cache for base images.
- --skip-tls-verify: skip TLS certificate verification when pushing to a registry. Intended for testing only.
- --build-arg: pass a build argument to an ARG instruction in the Dockerfile. For example: --build-arg key=value.
- --insecure: push to the registry over plain HTTP.
- --insecure-registry: use plain HTTP for requests to the specified registry.
- --verbosity: set the log level, which can be panic, fatal, error, warn, info, debug or trace.
- --digest-file: a file in which to save the digest of the built image.
- --oci-layout-path: a directory in which to save the OCI (Open Container Initiative) image layout of the built image.

For more flags, see the official documentation: https://github.com/GoogleContainerTools/kaniko#additional-flags
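When these flags are assembled by a script (for a Pod spec or a CI step), a small helper keeps the argument list consistent. The sketch below is illustrative only: the function name kaniko_args and its parameters are invented for this example, and it emits only flags described in the list above.

```python
from typing import Dict, List, Optional


def kaniko_args(dockerfile: str,
                context: str,
                destination: str,
                cache: bool = False,
                cache_repo: Optional[str] = None,
                build_args: Optional[Dict[str, str]] = None,
                verbosity: Optional[str] = None) -> List[str]:
    """Build the argument list for the kaniko executor."""
    args = [
        f"--dockerfile={dockerfile}",
        f"--context={context}",
        f"--destination={destination}",
    ]
    if cache:
        args.append("--cache=true")
        if cache_repo:
            args.append(f"--cache-repo={cache_repo}")
    # Sort the build arguments so the generated list is deterministic.
    for key, value in sorted((build_args or {}).items()):
        args += ["--build-arg", f"{key}={value}"]
    if verbosity:
        args.append(f"--verbosity={verbosity}")
    return args


example = kaniko_args(
    dockerfile="/workspace/Dockerfile",
    context="dir://workspace",
    destination="myregistry/myimage:tag",
    cache=True,
    build_args={"VERSION": "1.0"},
)
print(example)
```

The returned list can be used directly as the args field of the kaniko container, or joined with spaces for a shell command.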

6. Kaniko build cache

With caching enabled, kaniko checks the cache for a layer before executing a command. If the layer exists, kaniko pulls and extracts the cached layer instead of executing the command. If it does not, kaniko executes the command and then pushes the newly created layer to the cache. Caching is turned on with --cache=true, and the remote repository that stores the cached layers can be given with the --cache-repo flag. If that flag is not provided, the cache repository is inferred from the provided --destination. Kaniko caches the layers created by RUN commands, and the --cache-copy-layers flag additionally caches the layers created by COPY commands. The two parameters --cache=true and --cache-copy-layers=true are usually used together.
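The check-then-execute behaviour described above can be sketched as a toy model. This is not kaniko's actual key derivation or storage: here the cache is an in-memory dict, the key is a hash chained over the base image name and the commands so far, and cache_key and build_with_cache are names invented for this example.

```python
import hashlib
from typing import Callable, Dict, List, Tuple


def cache_key(previous_key: str, command: str) -> str:
    """Derive a key from everything that came before plus the current command."""
    return hashlib.sha256(f"{previous_key}\n{command}".encode("utf-8")).hexdigest()


def build_with_cache(base: str,
                     commands: List[str],
                     cache: Dict[str, str],
                     execute: Callable[[str], str]) -> Tuple[List[str], List[str]]:
    """Return (layers, executed_commands) for one build."""
    key = base
    layers: List[str] = []
    executed: List[str] = []
    for command in commands:
        key = cache_key(key, command)
        if key in cache:
            layers.append(cache[key])   # cache hit: reuse the stored layer
        else:
            layer = execute(command)    # cache miss: run the command
            cache[key] = layer          # and store the new layer
            layers.append(layer)
            executed.append(command)
    return layers, executed


def fake_execute(command: str) -> str:
    return f"layer({command})"


cache: Dict[str, str] = {}
_, first = build_with_cache("ubuntu", ["RUN apt-get update", "RUN make build"], cache, fake_execute)
_, second = build_with_cache("ubuntu", ["RUN apt-get update", "RUN make test"], cache, fake_execute)
print(first)   # both commands run on the first build
print(second)  # only the changed command runs on the second build
```

Because each key depends on all earlier commands, changing one instruction invalidates the cache for every instruction after it, which is why rarely changing steps are best placed early in a Dockerfile.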

7. Using Kaniko in CI/CD

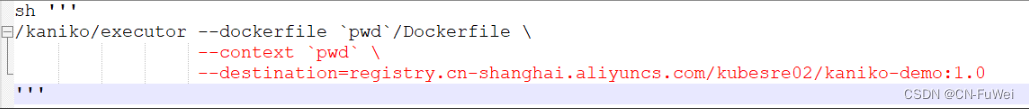

7.1 Using Kaniko in a Jenkins pipeline

The Jenkins agent (slave) Pods for CI/CD are created dynamically on Kubernetes. The following is a simplified Pipeline example:

    // Image registry address
    def registry = "registry.cn-shanghai.aliyuncs.com/kubesre02/demo"

    pipeline {
      agent {
        kubernetes {
          yaml """
    apiVersion: v1
    kind: Pod
    metadata:
      name: kaniko
    spec:
      containers:
      - name: kaniko
        # Use the custom kaniko image
        image: registry.cn-shanghai.aliyuncs.com/kubesre02/kaniko:latest
        imagePullPolicy: Always
        # Keep the container alive so that pipeline steps can run in it
        command:
        - cat
        tty: true
    """
        }
      }
      stages {
        stage('Checkout') {
          steps {
            // git clone ...
          }
        }
        stage('Build image') {
          steps {
            // Use kaniko to build the image
            container(name: 'kaniko') {
              // Dockerfile content ...
              sh "kaniko -f Dockerfile -c ./ -d ${registry}:${BUILD_NUMBER} --force"
            }
          }
        }
        stage('Deploy') {
          steps {
            // deploy ...
          }
        }
      }
    }

As you can see, Kaniko is well suited to building container images on Kubernetes and is easy to integrate into a Jenkins-based DevOps pipeline. That concludes this introduction to Kaniko.