Non-blocking test

Multi-process

1. multiprocessing.Process

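```python
from flask import Flask
import time
import multiprocessing

app = Flask(__name__)

# 'd' means a double. The main process and the child process share this Value
# (both use the very same Value).
num = multiprocessing.Value("d", 1)


def func(num):
    # Test what happens on an exception: an exception ends the child process immediately
    # print('Child process value:', num.value)
    # if 1:
    #     num.value = 1  # the child changes the value and the main process sees the change
    #     print('Reset num value:', num.value)
    #     quit('Exited because of an exception')
    print('Child process 1:', num.value)
    time.sleep(5)
    print('Sleep finished')
    a = 1 / 0  # deliberate ZeroDivisionError: the child dies here, so num.value is never reset
    num.value = 1  # the child changes the value and the main process sees the change
    print('Child process 2:', num.value)
    # return 'Child process finished'


@app.route('/')
def hello_world():
    if num.value:
        num.value = 0  # mark as busy
        p = multiprocessing.Process(target=func, args=(num,))
        p.start()  # returns immediately, so the request is not blocked
        print('Submitted, please wait for processing')
        return 'Submitted, please wait for processing'
    if not num.value:
        print('Running, please wait')
        return 'Running, please wait'
    return 'Hello World!'


if __name__ == '__main__':
    app.run(debug=True, port=6006)
```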
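If `func` raises, as the `1/0` line does, the child exits before `num.value = 1` runs. The flag then stays 0, and every later request gets the "running" answer. One way around this is to wrap the work in a helper that resets the flag in a `finally` block, so the flag is restored whether the job succeeds or fails. The sketch below assumes such a wrapper. `run_job`, `busy_flag` and `failing_job` are hypothetical names, not from the original.

```python
import multiprocessing
import traceback


def run_job(busy_flag, job, *args):
    """Hypothetical child-process entry point: run job(*args), always clear the busy flag."""
    try:
        job(*args)
    except Exception:
        traceback.print_exc()  # the parent never sees this exception, so at least log it
    finally:
        with busy_flag.get_lock():
            busy_flag.value = 1  # 1 = idle again, even if job raised


def failing_job():
    return 1 / 0  # simulates the deliberate failure in func


if __name__ == '__main__':
    flag = multiprocessing.Value('d', 0)  # 0 = busy, as set by the Flask route
    p = multiprocessing.Process(target=run_job, args=(flag, failing_job))
    p.start()
    p.join()  # joined only for this demo; the Flask route would not wait
    print(flag.value)
```

In the Flask route, `target=run_job, args=(num, func_body)` would take the place of `target=func`, where `func_body` is a hypothetical version of `func` without the flag handling.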

2. Pool

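```python
from multiprocessing import Pool, Manager
import os, time, random
from flask import Flask

app = Flask(__name__)

# A Manager Value lives in a server process, and its proxy can be passed to Pool tasks.
# Created at import time, which assumes the 'fork' start method; with 'spawn' or
# 'forkserver' this module-level Manager() would run again in every child.
manager = Manager()
value1 = manager.Value('i', 1)


def work1(msg):
    print('Value 1:', msg.value)
    time.sleep(5)  # stand-in for handing the data over to the processing code
    print('Sleep finished')
    # return {"a": a, "b": b, "c": c}
    # print("Loop task %d is executed by process %d" % (msg, os.getpid()))
    # time.sleep(random.random())  # random float between 0 and 1
    # end_time = time.time()  # end time
    # print(msg, "finished, took %0.2f" % (end_time - start_time))
    msg.value = 1  # mark as idle again
    print('Value 2:', msg.value)


@app.route('/')
def work():
    if value1.value:
        value1.value = 0  # mark as busy
        print('Child process started')
        pool = Pool(3)  # a process pool with at most 3 processes
        pool.apply_async(func=work1, args=(value1,))
        pool.close()  # after close(), the pool accepts no new tasks
        return 'Child process started!'
    else:
        print('A task is already running')
        return 'A task is already running'


if __name__ == '__main__':
    app.run(debug=True, port=6006)
```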
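`apply_async` returns an `AsyncResult`, and its `ready()` method reports completion without blocking. `ready()` becomes True whether the task succeeded or raised. Tracking the last result instead of the shared `value1` flag therefore avoids a flag stuck at 0 when `work1` raises before `msg.value=1`. The sketch below assumes one long-lived pool instead of a new pool per request. `submit_if_idle`, `state` and `work` are hypothetical names.

```python
import time
from multiprocessing import Pool


def work(seconds):
    time.sleep(seconds)  # stand-in for the real processing
    return 'done'


def submit_if_idle(pool, state, func, *args):
    """Hypothetical helper: start func(*args) unless the previous task is still running."""
    last = state.get('result')
    if last is not None and not last.ready():  # ready() never blocks
        return 'A task is already running'
    state['result'] = pool.apply_async(func, args)
    return 'Child process started!'


if __name__ == '__main__':
    pool = Pool(3)  # created once and reused for every request
    state = {}
    print(submit_if_idle(pool, state, work, 1))  # Child process started!
    print(submit_if_idle(pool, state, work, 1))  # A task is already running
    time.sleep(2)
    print(submit_if_idle(pool, state, work, 1))
    pool.close()
    pool.join()
```

In the Flask version, `pool` and `state` would be created once at startup, and the route would simply return `submit_if_idle(pool, state, work1, value1)`.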

3. torch.multiprocessing

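```python
import time

import torch.multiprocessing
from flask import Flask

app = Flask(__name__)


def func(a, b, c):
    time.sleep(5)  # stand-in for handing the data over to the processing code
    print('Sleep finished')
    return {"a": a, "b": b, "c": c}


@app.route('/')
def func2():
    ctx = torch.multiprocessing.get_context("spawn")
    print(torch.multiprocessing.cpu_count())
    pool = ctx.Pool(5)  # 7.7G (author's note, presumably the memory used)

    pool_list = []
    i = 100
    pool_list.append(pool.apply_async(func, args=(f'a{i}', f'b{i}', f'c{i}')))

    for i in range(0, 5):
        res = pool.apply_async(func, args=(f'a{i}', f'b{i}', f'c{i}'))
        # print('Value:', res.get())  # get() blocks until the task finishes
        pool_list.append(res)

    # Call close() once, after the last apply_async: submitting to a closed pool raises ValueError
    pool.close()  # the pool accepts no new tasks from here on
    # pool.join()  # the main process would block here waiting for the children to exit

    for res in pool_list:
        # data = res.get()  # blocking, so left out to keep the request non-blocking
        print('Data:', res)
    return 'Hello World!'


if __name__ == '__main__':
    app.run(debug=True, port=6006)
```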
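Once `close()` has been called, the pool refuses new work, and `apply_async` raises `ValueError`. The safe order is to submit every task, close once, and keep the `AsyncResult` objects so results can be collected later without blocking the request. The sketch below uses the standard `multiprocessing` module so it runs without PyTorch; `torch.multiprocessing` is a wrapper around that module. `submit_all` is a hypothetical helper.

```python
import time
from multiprocessing import Pool


def func(a, b, c):
    time.sleep(0.1)  # stand-in for the real processing
    return {"a": a, "b": b, "c": c}


def submit_all(pool, indices):
    """Hypothetical helper: queue one task per index, then close the pool exactly once."""
    results = [pool.apply_async(func, args=(f'a{i}', f'b{i}', f'c{i}')) for i in indices]
    pool.close()  # no apply_async calls may follow this line
    return results


if __name__ == '__main__':
    pool = Pool(5)
    results = submit_all(pool, [100, 0, 1, 2, 3, 4])
    # A request handler could return here; the results are collected later:
    pool.join()
    print([r.get()['a'] for r in results])  # ['a100', 'a0', 'a1', 'a2', 'a3', 'a4']
```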

With the spawn context, the Pool version works, but the single Process version simply hangs.

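```python
import torch.multiprocessing

# taskModel_execute, start_model, execut_data and throw_error come from the author's project (not shown)

# Pool with the spawn context (reported as working)
# ctx = torch.multiprocessing
ctx = torch.multiprocessing.get_context("spawn")
print(torch.multiprocessing.cpu_count())
pool = ctx.Pool(2)  # 7.7G
num = 1
pool.apply_async(taskModel_execute, args=(start_model, execut_data), error_callback=throw_error)
pool.close()

# Single Process with the spawn context (reported as hanging)
ctx = torch.multiprocessing.get_context("spawn")
p = ctx.Process(target=taskModel_execute, args=(start_model, execut_data))
p.start()
```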

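Process-shared variables

Value and Array share data through shared memory, while a Manager shares data through a server process.

With 'spawn', ordinary objects passed to a child process are copied (pickled) into it. Only Value/Array and Manager objects remain shared.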

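```python
import multiprocessing
from multiprocessing import Manager


def func1(num, arr):  # hypothetical worker, added only to make the snippet runnable
    num.value = 3.14
    arr[0] = -1


if __name__ == '__main__':
    # Shared memory: Value and Array
    num = multiprocessing.Value('d', 1.0)        # 'd' = double, initial value 1.0
    arr = multiprocessing.Array('i', range(10))  # 'i' = signed int, initial contents 0..9
    p = multiprocessing.Process(target=func1, args=(num, arr))
    p.start()
    p.join()

    # Server process: Manager proxies
    manager = Manager()
    list1 = manager.list([1, 2, 3, 4, 5])
    dict1 = manager.dict()
    array1 = manager.Array('i', range(10))
    value1 = manager.Value('i', 1)
```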
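With a Manager, a child's calls on a proxy such as `dict1` or `list1` are forwarded to the server process. The parent therefore sees the changes once the child has made them. The sketch below shows this with `fill`, a hypothetical worker that is not part of the original.

```python
from multiprocessing import Manager, Process


def fill(shared_dict, shared_list):
    """Hypothetical worker: every call below is forwarded to the manager's server process."""
    shared_dict['status'] = 'done'
    shared_list.append(6)


if __name__ == '__main__':
    with Manager() as manager:  # the server process is shut down when the block exits
        dict1 = manager.dict()
        list1 = manager.list([1, 2, 3, 4, 5])
        p = Process(target=fill, args=(dict1, list1))
        p.start()
        p.join()
        print(dict(dict1), list(list1))  # {'status': 'done'} [1, 2, 3, 4, 5, 6]
```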

Shared variables between processes

For different processes to read and write the same variable, the variable must be declared specially. multiprocessing offers two mechanisms: shared memory and a server process. Shared memory supports only two data structures, Value and Array.

With shared memory, the child process and the main process access the same memory location (for example, the same counter Value). There are three points to note:

1. A multiprocessing.Value can be used with Process either as a global variable or as an argument. With Pool, however, it can only be used as a global variable, which relies on the fork start method, where workers inherit the parent's globals. Passing it as a task argument raises `RuntimeError: Synchronized objects should only be shared between processes through inheritance`.

2. When several processes read and write a shared variable, check whether each operation is process-safe. A counter update such as `cls.count += 1` is a single statement, but it reads the value, computes with a process-local temporary, and writes the result back. It is therefore not process-safe: when several processes increment concurrently, some updates are lost and the total is wrong. Such an update must be locked, either with an external lock or with the Value's own `get_lock()` method.

3. Shared memory supports only a few data structures. The other way to share variables is a server process that manages them; other processes operate on the shared variables by talking to that server process. This supports types such as lists and dictionaries and can even share variables between machines, but it is slower than shared memory. Unlike a Value, these objects can also be passed as arguments to Pool tasks. Non-process-safe operations still need locking.

4. Catching process exceptions (non-blocking)

1. The parent cannot catch an exception raised inside a multiprocessing.Process

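```python
import multiprocessing

# taskimg_execute and start_img are the author's task function and its argument (not shown)
p = multiprocessing.Process(target=taskimg_execute, args=(start_img,))
p.start()  # returns immediately; an exception inside taskimg_execute never reaches this code
```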
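Although the parent cannot catch the child's exception directly, two things remain visible to it. One is the child's `exitcode`, which is 1 after an uncaught exception. The other is anything the child sends back explicitly. The sketch below assumes a hypothetical `report_errors` wrapper that sends the error text through a `multiprocessing.Queue`. `risky_task` stands in for `taskimg_execute`.

```python
import multiprocessing
import traceback


def report_errors(errors, func, *args):
    """Hypothetical wrapper run in the child: turns an exception into a message for the parent."""
    try:
        func(*args)
    except Exception as e:
        errors.put(f'{type(e).__name__}: {e}')
        traceback.print_exc()


def risky_task(x):
    raise ValueError(f'bad input: {x}')  # stand-in for taskimg_execute


if __name__ == '__main__':
    errors = multiprocessing.Queue()
    p = multiprocessing.Process(target=report_errors, args=(errors, risky_task, 'img.png'))
    p.start()  # non-blocking; the parent can check on the child later
    print(errors.get(timeout=5))  # ValueError: bad input: img.png
    p.join()
    print(p.exitcode)  # 0, because the wrapper handled the exception
```

The queue is read before `join()` because a child that has put data on a queue may not exit until that data has been consumed.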

2. With a Pool used without blocking (no `get()` call), the exception cannot be caught either; it can only be received through a callback function (`error_callback`).

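```python
import multiprocessing


def throw_error(e):
    # Runs in the parent process (in the pool's result-handler thread) with the exception object
    print("error callback: ", e)
    # Pool ignores the callback's return value, so this dict never reaches the caller
    return {'code': 440, 'msg': 'Process creation error: ' + str(e), 'data': {'status': 400}}


# taskimg_execute and start_img are the author's task function and its argument (not shown)
pool = multiprocessing.Pool(processes=3)
pool.apply_async(taskimg_execute, (start_img,), error_callback=throw_error)
pool.close()
```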
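The callback receives the exception object in the parent process, but its return value is discarded. A callback that should influence later requests therefore has to store the error somewhere. The sketch below appends each error to a list. `submit`, `failing_task` and `errors` are hypothetical names.

```python
import multiprocessing


def failing_task(x):
    raise ValueError(f'parameter error: {x}')  # stand-in for taskimg_execute


def submit(pool, errors, func, *args):
    """Hypothetical helper: queue func(*args) and record any exception it raises."""
    return pool.apply_async(func, args, error_callback=errors.append)


if __name__ == '__main__':
    errors = []  # filled in the parent by the pool's result-handler thread
    pool = multiprocessing.Pool(processes=3)
    submit(pool, errors, failing_task, 'start_img')
    pool.close()
    pool.join()  # only for the demo; a request handler would return right away
    print(errors)  # [ValueError('parameter error: start_img')]
```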

Multithreading

```python
import threading
from flask import Flask
import time

app = Flask(__name__)

g_num = 1  # 1 = idle, 0 = a task is running


def func(data):
    global g_num
    print('Child thread 1:', g_num)
    time.sleep(5)
    print('Sleep finished')
    # a=1/0
    g_num = 1  # the thread resets the flag; the main thread sees it because threads share globals
    print('Child thread 2:', g_num)
    # return 'Child thread finished'


@app.route('/')
def hello_world():
    global g_num
    print('Main process:', g_num)
    if g_num:
        g_num = 0
        # t1 = threading.Thread(target=func)
        t1 = threading.Thread(target=func, args=(6,))
        t1.start()
        # time.sleep(1)
        print('Submitted, please wait for processing')
        return 'Submitted, please wait for processing'
    if not g_num:
        print('Running, please wait')
        return 'Running, please wait'
    return 'Hello World!'


if __name__ == '__main__':
    app.run(debug=True, port=6006)
```
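Reading `g_num` and then setting it are two separate steps. Two requests arriving together could both see 1 and both start a thread. The sketch below shows an atomic alternative, assuming a module-level `threading.Lock` (hypothetically named `busy`) replaces `g_num`. `acquire(blocking=False)` claims the lock only if it is free and returns immediately either way. The worker releases the lock in `finally`; a plain `Lock` may be released by a different thread than the one that acquired it.

```python
import threading
import time

busy = threading.Lock()  # held while a background task is running


def task(seconds):
    try:
        time.sleep(seconds)  # stand-in for the real work
    finally:
        busy.release()  # free the slot even if the work raised


def try_start(seconds):
    if not busy.acquire(blocking=False):  # never waits
        return 'Running, please wait'
    threading.Thread(target=task, args=(seconds,), daemon=True).start()
    return 'Submitted, please wait for processing'


if __name__ == '__main__':
    print(try_start(1))  # Submitted, please wait for processing
    print(try_start(1))  # Running, please wait
```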

Start a process in a thread

```python
import threading
from flask import Flask
import time
import multiprocessing

app = Flask(__name__)

g_num = 1  # 1 = idle, 0 = busy


def func2(num):
    print('Process 1:', num.value)
    time.sleep(2)
    print('Sleep finished')
    # a=1/0
    # num.value = 1  # the child changes the value and the main process sees the change
    print('This is the child process working')
    print('Process 2:', num.value)
    # return 'Child finished'


def func(data):
    global g_num
    print('Child thread 1:', g_num)
    # time.sleep(5)
    # print('Sleep finished')
    num = multiprocessing.Value("d", 1)
    p = multiprocessing.Process(target=func2, args=(num,))
    p.start()  # the process is started from inside the thread
    print('Child thread submitted the task')
    # a=1/0
    g_num = 1  # reset right after start(), i.e. before the process has finished
    print('Child thread 2:', g_num)
    # return 'Child thread finished'


@app.route('/')
def hello_world():
    global g_num
    print('Main process:', g_num)
    if g_num:
        g_num = 0
        # t1 = threading.Thread(target=func)
        t1 = threading.Thread(target=func, args=(6,))
        t1.start()
        # time.sleep(1)
        print('Submitted, please wait for processing')
        return 'Submitted, please wait for processing'
    if not g_num:
        print('Running, please wait')
        return 'Running, please wait'
    return 'Hello World!'


@app.route('/thread')
def mytest():
    from threading import Thread
    from time import sleep, ctime

    def funcx(name, sec):
        print('---start---', name, 'time', ctime())
        # sleep(sec)
        time.sleep(3)
        print('***end***', name, 'time', ctime())

    # Create the Thread instances
    t1 = Thread(target=funcx, args=('first thread', 3))
    t2 = Thread(target=funcx, args=('second thread', 2))
    t3 = Thread(target=funcx, args=('third thread', 3))
    t4 = Thread(target=funcx, args=('fourth thread', 2))
    # Start the threads
    t1.start()
    t2.start()
    t3.start()
    t4.start()
    return 'i am a thread test!'


if __name__ == '__main__':
    app.run(debug=True, port=6006)
```
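Starting the process from a thread also gives that thread a place to wait. It can `join()` the process without holding up the request, then read `exitcode` (1 after an uncaught exception) and only then reset the busy flag. The sketch below assumes this design. `supervise`, `on_exit` and `flaky` are hypothetical names.

```python
import multiprocessing
import threading


def flaky():
    raise RuntimeError('simulated failure')  # uncaught: the child prints a traceback, exitcode becomes 1


def supervise(proc, on_exit):
    """Hypothetical thread target: start proc, wait for it, then report its exit code."""
    proc.start()
    proc.join()  # blocks only this background thread, not the request
    on_exit(proc.exitcode)


def on_exit(code):
    # In the Flask example, this is where g_num = 1 could be set again
    print('child exit code:', code)


if __name__ == '__main__':
    p = multiprocessing.Process(target=flaky)
    t = threading.Thread(target=supervise, args=(p, on_exit))
    t.start()  # a request handler could return right after this
    t.join()  # demo only
```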

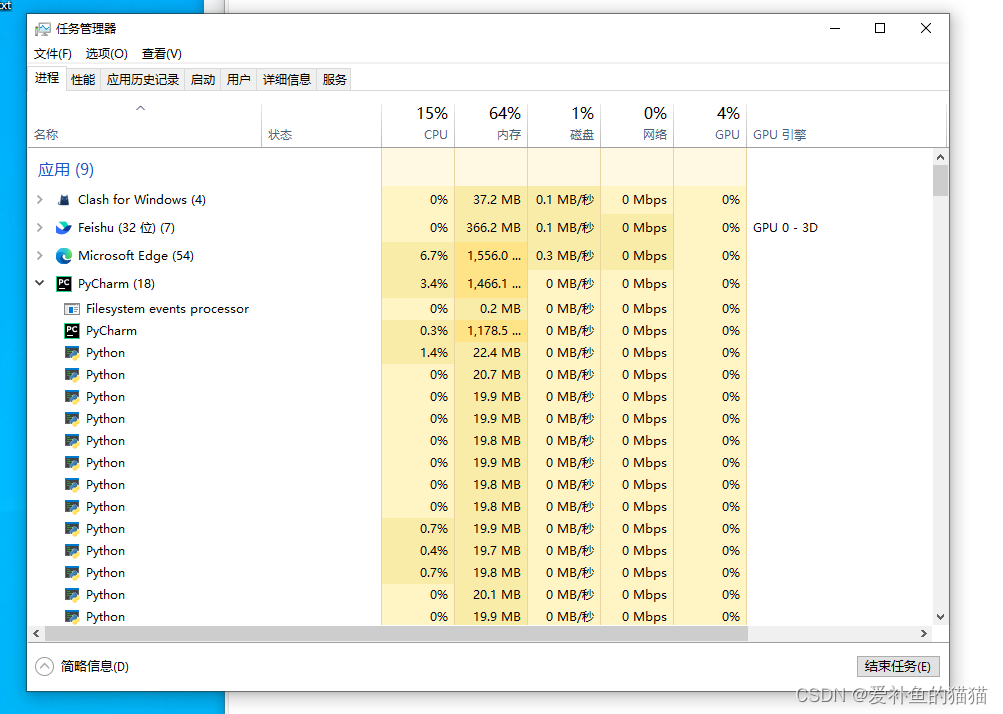

You can check the individual processes and their process IDs in Task Manager.

Multi-process spawn, fork, forkserver (multi-process model training)

An exception raised with `raise ValueError('parameter error')` can be caught.

Python's multiprocessing offers three start methods for creating processes: spawn, fork and forkserver.

fork and spawn differ in how the child process is built:

fork: apart from the resources needed to start it, everything else (variables, imported packages, data, etc.) is inherited from the parent process copy-on-write. The child shares the parent's memory pages until one of them writes to a page, so it starts quickly. Because the child inherits so much of the parent's state, this method is less safe. For example, forking a process that runs several threads can leave the child holding a lock that no thread in the child will ever release.

spawn: builds the child process from scratch. The child runs its own fresh Python interpreter and must re-import the parent's main module and packages, so it starts slowly. It receives from the parent only the objects it needs (the target and its arguments, pickled). Since the child's data is its own, this method is safer.
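Instead of changing the global start method with `set_start_method`, a context object can select the method for individual processes or pools. `set_start_method` should be called at most once per program unless `force=True` is passed. The sketch below assumes a hypothetical `make_pool` helper and `square` task.

```python
import multiprocessing


def square(x):
    return x * x


def make_pool(method, processes=2):
    """Hypothetical helper: a pool whose workers use the given start method."""
    ctx = multiprocessing.get_context(method)  # does not touch the global default
    return ctx.Pool(processes)


if __name__ == '__main__':
    print(multiprocessing.get_all_start_methods())  # the methods available on this platform
    with make_pool('spawn') as pool:  # fresh interpreters: slower start, nothing inherited
        print(pool.map(square, [1, 2, 3]))  # [1, 4, 9]
```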


Calling model training from multiple processes

Error: `Cannot re-initialize CUDA in forked subprocess. To use CUDA with multiprocessing, you must use the 'spawn' start method`

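```python
import torch
import torch.multiprocessing

torch.multiprocessing.set_start_method('spawn', force=True)
# This did not solve the problem

# print(multiprocessing.get_start_method())
# multiprocessing.set_start_method('spawn', force=True)

# core is the author's own module (not shown); core.testtrain runs the training
try:
    print('subprocess: running command')
    core.testtrain('I am the main thread')
    print('sub finished, no exception')
except Exception as e:
    print("Couldn't parse")
    print('Caught exception, reason:', e)
```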