Papers and source code are available from the author's homepage:

https://download.csdn.net/download/qq_45874683/87660118

Summary:

This project builds an emotion recognition and classification system based on the valence/arousal model. Electroencephalogram (EEG) signals are the primary input to the model, since different stimuli elicit different responses in the EEG signal. Different types of video stimuli are used, and their emotional effects are determined from the EEG signal. The goal is an objective system that can determine the type of response a video produces in any subject, so that videos can be classified into emotional categories defined on a valence/arousal scale. The project yields both an emotion classification system and a video classification system, which lets users objectively determine the type of a video from the EEG signals of the person watching it. Such a system could help build recommendation systems that are truly objective in nature. A 1D convolutional neural network is used for classification. The EEG signal from 32 channels is first reduced to 14 channels by taking differences between symmetric EEG channels, and the model is then applied to these 14 channels, giving a weighted accuracy of 75% for classifying emotions into 4 categories.

1. Introduction

Emotion is a complex pattern of responses involving behavioral, experiential and physiological factors. It can be associated with personality, mood, or temperament. The relationship between EEG and emotion has been studied extensively, and in much of this work researchers manually inspect EEG signals to identify regions that correspond to certain types of emotions. More recently, the use of machine learning to automate emotion classification from EEG has also been studied extensively. This project uses the DEAP dataset, in which music video clips served as stimuli to elicit different types of emotions from subjects. Emotions are analyzed and classified into 4 regions using the valence arousal model of emotion classification. The project uses raw EEG signals with some preprocessing to classify participants' emotional responses to video stimuli. Many deep learning models have been proposed in this field, but most of them first apply a transformation to the EEG, such as a discrete wavelet transform or a power spectral density calculation. Here a 1D CNN is applied to the raw EEG signal and achieves 75% weighted accuracy, which illustrates the power of a deep learning model.

2. Theoretical aspects

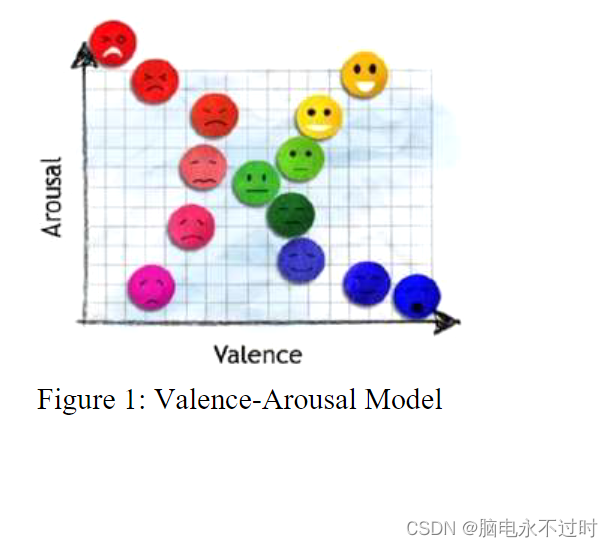

2.1. Valence arousal model

The proposed project uses the valence arousal model to describe emotions more quantitatively. In this model, each emotional state can be placed on a two-dimensional plane with arousal and valence as the x-axis and y-axis respectively (as shown in Figure 1). Dominance can also be considered as a third dimension to gain a deeper understanding of emotional states. Valence ranges from unpleasant to pleasant, while arousal ranges from inactive to active. The valence arousal space can be divided into four quadrants: low arousal/low valence, low arousal/high valence, high arousal/low valence, and high arousal/high valence. Valence and arousal can also be estimated using linear regression.

2.2. DEAP dataset

The DEAP dataset was obtained in the following way. Initially, the researchers used a novel stimulus selection method to collect a large set of video clips. A short excerpt was then extracted from each video clip for use in the experiment, followed by subjective testing to select the most suitable clips. Participants then took part in data collection, during which their EEG signals were recorded using 32 active silver chloride electrodes at a sampling rate of 512 Hz. Afterwards, each participant rated every video on valence and arousal. To maximize the intensity of the elicited emotions, the videos with the strongest volunteer ratings and the least rating variation were selected. The DEAP dataset is the largest publicly available dataset for spontaneous emotion analysis from physiological signals. It is also the only database that uses music video excerpts as emotional stimuli.

2.3. Correlation between EEG and emotion

EEG can be used to provide insights into the human brain. Because it detects even the smallest modulations occurring in the brain, EEG has proven to be a useful technique for studying emotional changes. It can detect various emotions such as calm, happiness, stress, sadness, fear and surprise. Valence has a strong correlation with EEG signals, and correlations were found in all frequency bands [11]. It was observed that this association was not consistent with what was observed in the pilot study.

2.4. 1D-CNN model

The proposed project applies a CNN to raw EEG. As shown in Figure 3, in a 1D CNN, kernels of a given width slide along the time axis of the time series data. At each position the kernel performs a convolution, which is a simple array operation, and in this way the network learns discriminative features from the EEG signal.

Since 1D CNNs have low computational complexity, they do not require specialized hardware to train and can run in real time. They are easier to train, and they perform well with shallow architectures compared to very deep 2D-CNN architectures. Several parameters need to be chosen for each CNN layer, such as the kernel size, the number of filters, the stride and the activation function.
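The convolution described above can be illustrated on a single channel. This is a minimal sketch in plain Python, not the project's code: the signal and kernel values are made up, no padding is used, and in a real layer the kernel weights are learned and each kernel also spans all input channels.

```python
def conv1d(signal, kernel, stride=1):
    """1D convolution without padding, in the cross-correlation form used by CNN layers."""
    k = len(kernel)
    out = []
    # Slide the kernel along the time axis, one stride at a time.
    for start in range(0, len(signal) - k + 1, stride):
        window = signal[start:start + k]
        out.append(sum(w * x for w, x in zip(kernel, window)))
    return out


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


signal = [0.0, 1.0, 2.0, 1.0, 0.0, -1.0]   # made-up time series
kernel = [1.0, 0.0, -1.0]                  # hypothetical weights; real ones are learned
features = relu(conv1d(signal, kernel))
print(features)
```

With a kernel of width k and stride 1, a series of length n yields n - k + 1 output values, so wider kernels shorten the output slightly while covering a longer stretch of time at each position.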

3. Literature review and research gaps discovered

Automatic emotion recognition and classification based on EEG signals is a relatively recent problem, and most existing work relies on traditional methods. Various approaches have been proposed, such as computing the power spectral density of the EEG signal and applying Naive Bayes to it, or applying nearest neighbor classifiers to other handcrafted features.

Discrete wavelet transform is also a common technique used to extract time-frequency domain features from EEG signals, and SVM and others have been applied to these features.

Some methods extract statistical features from the signals, such as the mean, standard deviation and kurtosis, and apply models to them. Overall, the focus is on statistical features of the EEG: researchers reduce the time series to these features and then apply classic machine learning models to them.

These models also focus on binary classification tasks, either high valence/low valence or high arousal/low arousal. Separate models have been built for each dimension to classify emotions.

In this project, these two issues have been addressed. First, we use raw EEG signals to obtain good classification accuracy compared to using hand-designed features. 1D-CNN is applied to raw EEG signals to obtain 75% accuracy. Second, a multi-classification problem is posed instead of two separate binary classification problems. Multi-category classification is a more challenging problem, and it is more difficult for the model to distinguish 4 categories compared to 2 categories done by previous researchers.

4. Proposed work and methods

4.1. Proposed work

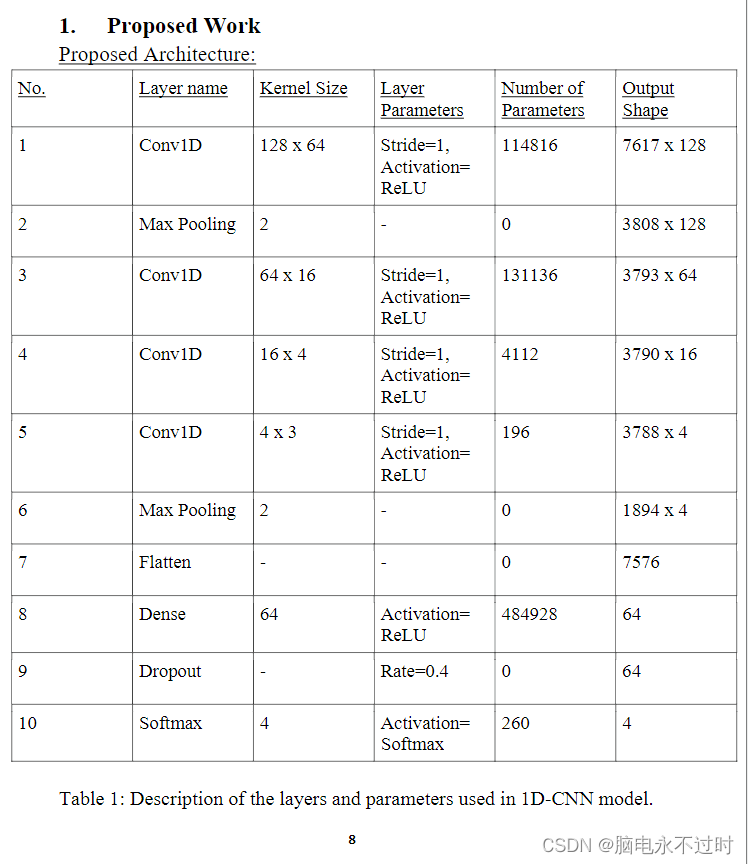

Table 1: Description of layers and parameters used in the one-dimensional network model

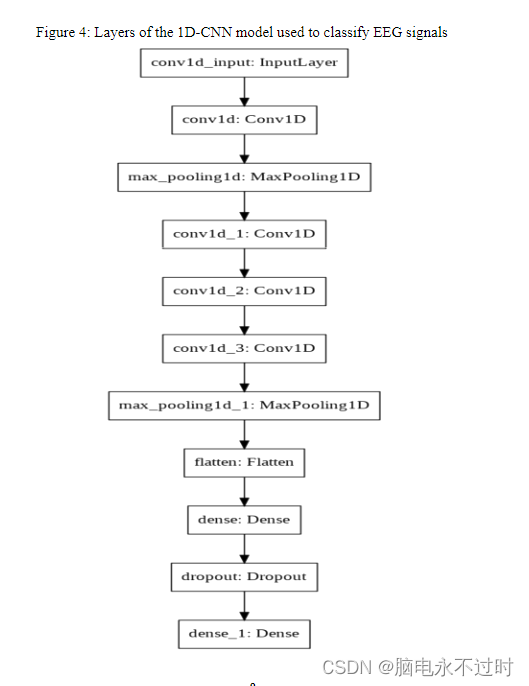

Figure 4: Number of layers of a one-dimensional CNN model used to classify EEG signals

Table 1 and Figure 4 show the proposed model architecture in detail. The proposed model is a one-dimensional convolutional neural network.

A 1D convolutional layer is the first layer after the input. It uses a kernel size of 64 and 128 filters with a stride of 1, and ReLU activation is applied to its output. One-dimensional max pooling with pool size 2 is then performed on the output of this first layer. The 1D convolution is then repeated 3 times, with 64, 16 and 4 filters and kernel sizes of 16, 4 and 4 respectively. All three of these layers use a stride of 1 and ReLU activation. Another max pooling layer with pool size 2 is applied, and its output is flattened.

Then, a dense layer with 64 neurons and ReLU activation is applied as part of the classification part of the model. Dropout with a rate of 0.4 is used for regularization and to prevent co-adaptation of neurons. Finally, a softmax layer with 4 neurons classifies each input into one of 4 classes.
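To make the layer sizes concrete, the sketch below traces the tensor length through the layers described above and counts trainable parameters. It is an illustration under assumptions, not the project's code: the input is taken to be 14 channels of 7680 samples (60 seconds at 128 Hz), convolutions are assumed to use no padding, and pooling is assumed to use a stride equal to the pool size. The helper names are hypothetical.

```python
def conv_out(length, kernel, stride=1):
    """Output length of a 1D convolution without padding."""
    return (length - kernel) // stride + 1


def pool_out(length, pool):
    """Output length of 1D max pooling with stride equal to the pool size."""
    return length // pool


def trace(length=7680, channels=14):
    """Return (flattened size, trainable parameter count) for the described stack."""
    params = 0
    # (filters, kernel size, max pooling applied after this layer?)
    conv_layers = [(128, 64, True), (64, 16, False), (16, 4, False), (4, 4, True)]
    for filters, kernel, pooled in conv_layers:
        params += kernel * channels * filters + filters  # weights + biases
        length = conv_out(length, kernel)
        if pooled:
            length = pool_out(length, 2)
        channels = filters
    flat = length * channels
    params += flat * 64 + 64   # dense layer with 64 units
    params += 64 * 4 + 4       # softmax output layer with 4 units
    return flat, params


flat_size, n_params = trace()
print(flat_size, n_params)
```

Under these assumptions most of the parameters sit in the dense layer that follows the flatten step, which is one reason dropout is placed there.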

Hyperparameter tuning was performed to obtain the parameters listed in Table 2. The Adam optimizer is used to minimize the loss function. A learning rate of 0.01 is used with a decay of 1e-3. A custom decay scheduler is used, which is described in the code. Classification is performed using categorical cross-entropy on the output of the softmax layer. The resulting accuracy is reported in Section 5.
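The loss mentioned above can be written out directly. The following is a minimal plain-Python sketch of softmax followed by categorical cross-entropy for a single sample; the logit values are invented for illustration, and the small `eps` term is an assumption added only to avoid taking the logarithm of zero.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(one_hot, probs))


logits = [2.0, 0.5, 0.1, -1.0]        # hypothetical scores for the 4 classes
probs = softmax(logits)
loss = categorical_cross_entropy([1, 0, 0, 0], probs)
print(probs, loss)
```

A model that assigns equal probability to all 4 classes has a loss of ln 4, about 1.386, which is a useful reference point when reading a loss curve.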

Table 2: Hyperparameters used to obtain the minimum Cross-Entropy Loss of the model

4.2. Method

Algorithms and flowcharts:

In this project, the aim is to create a classifier that will classify the emotions of a subject using the EEG signals produced in the brain during trials of watching video stimuli.

a. Input instance (X) of the classifier:

While a video was shown to the subject during a trial, the subject's EEG signals were recorded. Each signal is a time series of length 8064, because sampling was done at 128 Hz for 63 seconds. 32 electrodes were used to read EEG signals from the subject's brain. There were 40 videos in total, each shown to each of the 32 subjects in the experiment. Therefore, each subject contributes 40*32*8064 data points (videos * channels * samples).
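The sizes quoted above follow from simple arithmetic, sketched here with the figures from the text. The constant names are chosen for this example only.

```python
SAMPLING_RATE_HZ = 128
TRIAL_SECONDS = 63
N_CHANNELS = 32
N_VIDEOS = 40
N_SUBJECTS = 32

# Samples recorded per channel in one trial.
samples_per_channel = SAMPLING_RATE_HZ * TRIAL_SECONDS
# Values recorded per subject: videos * channels * samples.
values_per_subject = N_VIDEOS * N_CHANNELS * samples_per_channel
# One trial per (subject, video) combination.
total_trials = N_SUBJECTS * N_VIDEOS

print(samples_per_channel, values_per_subject, total_trials)
```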

b. Label (Y):

After the trial, each subject rated the video's valence and arousal. Ratings are on a scale from 1 to 9. To generate labels from this data, the values are thresholded at the middle of the scale (i.e. 5). This gives two valence labels, high valence and low valence, and similarly two arousal labels.

c. Metrics:
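The thresholding step can be sketched as follows. Two details are assumptions rather than facts from the text: a rating exactly equal to 5 is treated as low, and the two binary labels are combined into a single class index to form the four categories (LV/LA, LV/HA, HV/LA, HV/HA) used elsewhere in this project. The function names and the index order are hypothetical.

```python
THRESHOLD = 5.0
CLASS_NAMES = ["LV/LA", "LV/HA", "HV/LA", "HV/HA"]


def binary_label(rating, threshold=THRESHOLD):
    """Return 1 for a high rating and 0 for a low one (ties count as low here)."""
    return 1 if rating > threshold else 0


def quadrant_label(valence, arousal):
    """Combine the two binary labels into one of four class indices."""
    return 2 * binary_label(valence) + binary_label(arousal)


# Example: a pleasant but calm response falls in the HV/LA quadrant.
print(CLASS_NAMES[quadrant_label(7.1, 3.0)])
```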

Accuracy:

It is simply a measure of the number of correctly predicted instances relative to the total number of instances.
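As a small illustration of this definition, the sketch below computes plain accuracy from two label lists. It shows the unweighted form only; how the weighted accuracy reported in this project is computed is not specified in the text, so it is not reproduced here.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Three of four predictions are correct.
print(accuracy([0, 1, 2, 3], [0, 1, 2, 0]))
```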

Figure 5: Flowchart of emotion classification

The steps followed during the classification process are shown in Figure 5. First, a total of 1280 instances were read. Each instance holds data from 32 EEG channels, and each channel has length 8064, which corresponds to 63 seconds of recording. The first 3 seconds of data are discarded and the last 60 seconds are retained. The data had already been preprocessed to remove artifacts and downsampled to 128 Hz.

Next, using the EEG electrode placement described in [4], pairs of symmetrically opposite EEG channels are identified. The two signals in each pair are subtracted from each other, which reduces the original 32 channels to 14 time series per sample. These samples are then scaled to a range of 0 to 1 and afterwards standardized to remove the mean and make the standard deviation 1.
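The preprocessing described above might look roughly like the NumPy sketch below. Several things here are assumptions: the channel pairing is a placeholder (the real pairs follow the electrode layout in [4] and are not reproduced), the trial is synthetic random data, and scaling is applied per channel, which the text does not specify.

```python
import numpy as np


def symmetric_differences(trial, pairs):
    """Subtract the second channel of each pair from the first.

    trial: array of shape (n_channels, n_samples)
    pairs: list of (first_index, second_index) tuples
    """
    first = [a for a, _ in pairs]
    second = [b for _, b in pairs]
    return trial[first] - trial[second]


def normalize(channels):
    """Min-max scale each channel to [0, 1], then standardize it.

    Assumes no channel is constant, otherwise a division by zero occurs.
    """
    lo = channels.min(axis=1, keepdims=True)
    hi = channels.max(axis=1, keepdims=True)
    scaled = (channels - lo) / (hi - lo)
    mean = scaled.mean(axis=1, keepdims=True)
    std = scaled.std(axis=1, keepdims=True)
    return (scaled - mean) / std


# Placeholder pairing: channel 2i with channel 2i + 1 (NOT the layout from [4]).
PAIRS = [(2 * i, 2 * i + 1) for i in range(14)]

rng = np.random.default_rng(0)
trial = rng.normal(size=(32, 8064))   # synthetic stand-in for one trial
trial = trial[:, 3 * 128:]            # drop the first 3 seconds at 128 Hz
features = normalize(symmetric_differences(trial, PAIRS))
print(features.shape)
```

When both steps are applied along the same axis, as here, the final standardized values are the same as standardizing alone, because standardization is unaffected by a prior linear rescaling.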

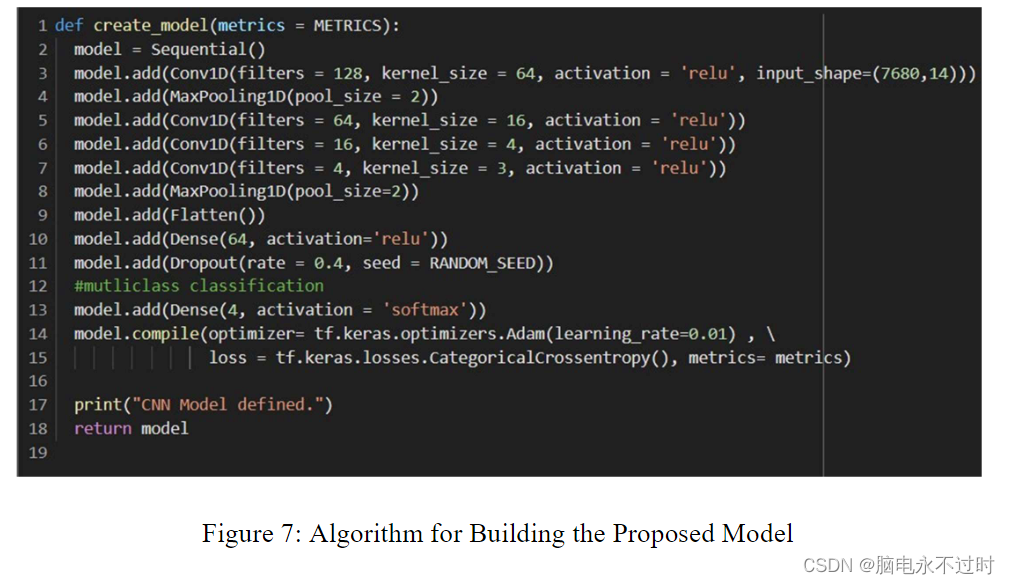

After this minimal preprocessing, the samples were divided into training, validation and test sets with 864, 288 and 128 samples respectively. A 1D CNN (whose architecture has been described) is then used to learn from this data and perform classification. Figure 7 depicts the algorithm of the deep learning model.

The model is implemented in Python using the Keras API with the TensorFlow backend.
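One way to produce a split of these sizes is sketched below. The text does not say how samples were assigned to the three sets, so the random shuffle, the fixed seed and the function name are all assumptions made for illustration.

```python
import random


def split_indices(n_samples=1280, n_train=864, n_val=288, seed=0):
    """Shuffle sample indices and cut them into train, validation and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)   # seeded for reproducibility
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]       # whatever remains (128 here)
    return train, val, test


train_idx, val_idx, test_idx = split_indices()
print(len(train_idx), len(val_idx), len(test_idx))
```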

5. Results and discussion

This project uses the DEAP dataset [6] to classify emotions into 4 categories of the valence arousal model, namely HV/HA, HV/LA, LV/HA, and LV/LA. Fourteen pairs of EEG channels were identified [4] and derived from the 32 input channels of each trial. A total of 1280 samples are divided into a training set, a validation set and a test set, with sizes of 864, 288 and 128 respectively. The model described in Table 1 is used for classification, with the parameters in Table 2 that were obtained through hyperparameter tuning.

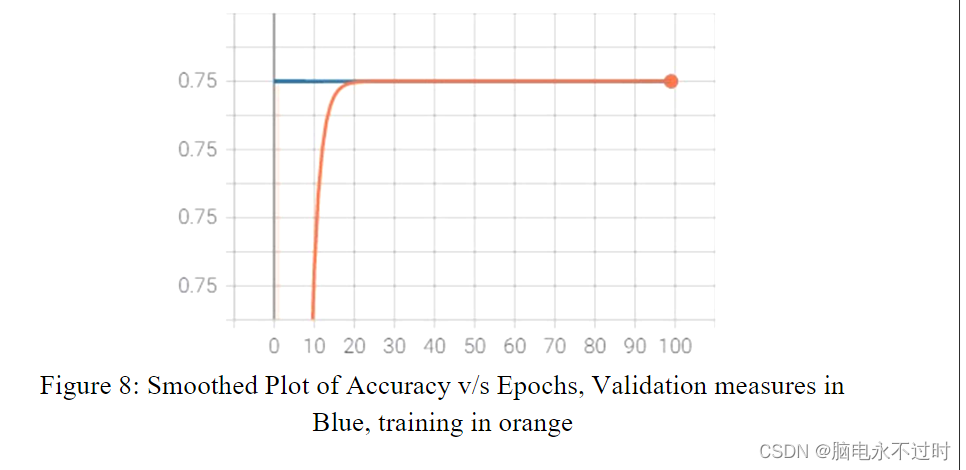

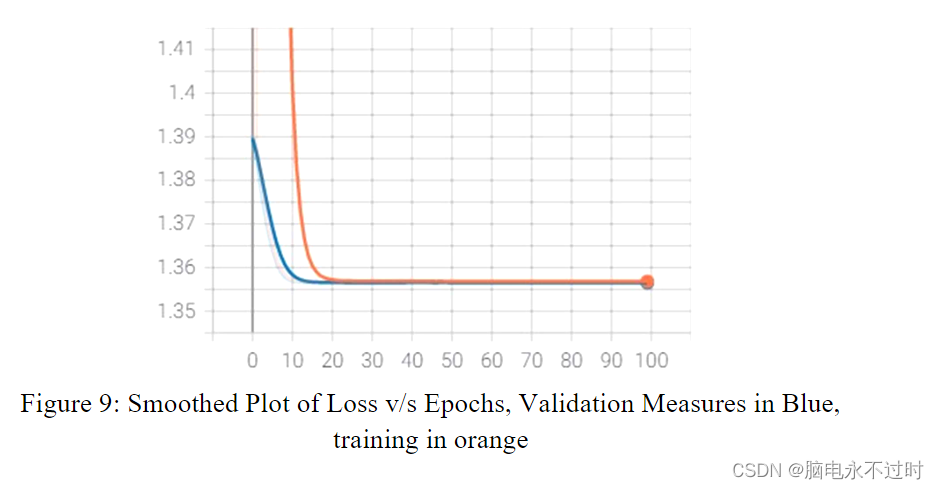

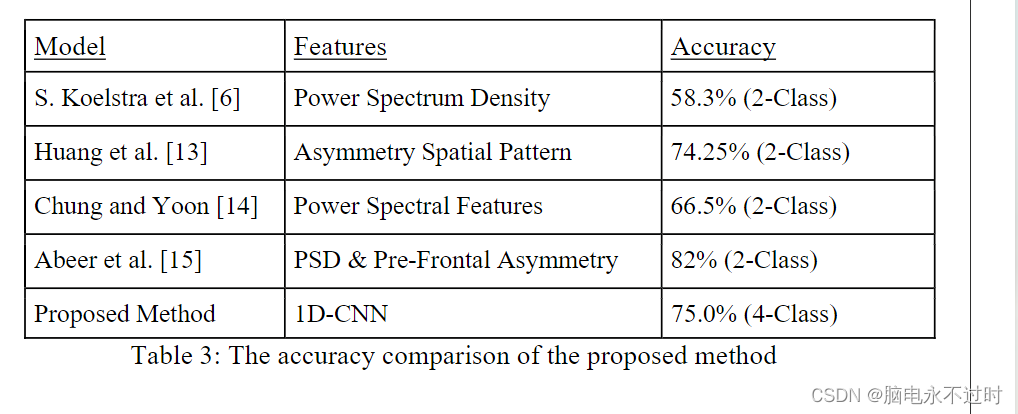

Figures 8 and 9 show plots of accuracy and loss versus epoch. The plots show that once accuracy reaches 75%, the model stops learning and both accuracy and loss plateau. The study concluded that, for this type of deep learning model and this dataset, 75% is the highest accuracy achievable using raw EEG signals. This accuracy is for a 4-class classification problem, which is much more difficult than binary classification. Using feature extraction methods on EEG signals, [6] reported a baseline average of 58.3% for binary classification, which the proposed method significantly exceeds.

Figure 8: Smoothed plot of accuracy vs. epoch; blue is validation, orange is training.

Figure 9: Smoothed plot of loss vs. epoch; blue is validation, orange is training.

Table 3: Comparison of accuracy of proposed methods

Most studies on emotion classification on the DEAP dataset rely on complex feature engineering methods, such as the discrete wavelet transform, principal component analysis or the power spectral density of EEG signals, combined with models such as Naive Bayes, support vector machines and KNN classifiers. The proposed method does not use any complex feature engineering techniques. A 1D-CNN is applied directly to the raw EEG signal. Only minimal preprocessing is done: the last 60 seconds of the EEG signal are kept, scaled to the 0-1 range, and then standardized to remove the mean and make the standard deviation 1. The model reaches 75% multi-class accuracy on raw EEG signals, while comparable studies report about 85%, and only for binary classification.

Data augmentation seems necessary to increase the number of samples available for training the model. Additionally, different model architectures can be explored and tuned further to achieve better accuracy.

6. Environmental requirements

Google Colab was used for this project. The dataset was uploaded to Google Drive, and Google Colab's GPU was used to train the model online. The experimental setup can be replicated using the following software and hardware requirements.

6.1. Software requirements

7. Conclusion

Automatic classification and recognition of EEG signals is a difficult task in the field of machine learning. This project proposes an end-to-end deep learning neural network model that classifies the emotions a person experiences while watching music video clips into 4 categories. A 1D-CNN is used for this task, and it learns features from raw EEG signals. Compared with the average binary classification accuracy of 58.3% in [6], the proposed method achieves a weighted accuracy of 75% on the test data. This model illustrates the ability of deep learning models to learn features from raw data without the complex feature extraction used in traditional techniques.
