1. What is the role of performance indicators in performance testing?

Performance indicators play a very important role in performance testing. They help us evaluate and understand how the system performs. The role and significance of performance indicators can be explained in plain terms as follows:

- Help us understand the processing capacity of the system: Performance indicators tell us how many requests the system can handle under a given load. A courier's processing capacity is how many packages he can deliver in a day; likewise, a system's performance indicators tell us how many requests it can handle, so we can judge whether its processing capacity meets our needs.

- Help us evaluate the stability of the system: Performance indicators also help us evaluate how stable the system is under high load. To know whether a car is stable at high speed, we need to know its top speed and how it handles; likewise, performance indicators tell us whether the system stays stable under high concurrency, without crashing or slowing down its responses.

- Help us find performance bottlenecks and optimization directions: To clear a traffic jam, we first need to know which intersection is congested before we can take appropriate measures. Likewise, performance indicators tell us which part of the system is causing a performance problem, such as a slow database response or high network latency, and so point us in the direction for optimization.

All in all, in performance testing, performance indicators help us understand the system's processing capacity, evaluate its stability, and discover bottlenecks and optimization directions. Like a compass, they guide us in understanding the system's performance and in making the right decisions and optimizations.

This article introduces the indicators commonly used in performance testing, and I hope it helps.

2. Response time

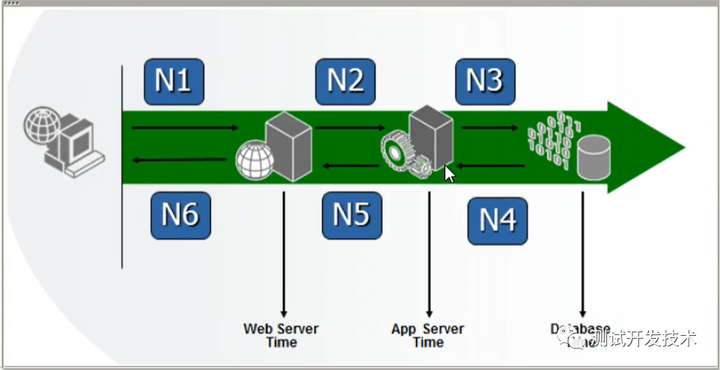

Response time is one of the key indicators of performance testing. The complete client response time mainly includes the following components:

- The time the client takes to send the request

- The time the request spends traveling over the network

- The time the request waits in the server-side queue before being processed

- The time the server takes to process the request

- The time the response spends traveling back over the network

- The time the client takes to receive the response

The time for each component can be obtained as follows:

- Client time: The client code records when it sends the request and when it receives the response; the difference between the two is the client time.

- Network time: Use a packet capture tool to capture the request and response packets, and compute from their timestamps how long they spent in transit over the network.

- Server queue time: Use logs or instrumentation points to record when the request enters the queue and when processing starts; the difference is the queue time.

- Server processing time: Use logs or instrumentation points to record when processing starts and when it ends; the difference is the processing time.

- Total response time: The client records this itself, and it can also be obtained from a packet capture as the time between sending the request and receiving the complete response.
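Once the individual timestamps have been collected, each component is just a subtraction. The sketch below is a minimal illustration under stated assumptions: the client and server clocks are synchronized (for example via NTP) so that cross-machine differences are meaningful, the field names in the hypothetical `RequestTimestamps` class are made up for this example, and the network share is taken to be whatever part of the total was not spent on the server (both directions combined).

```python
from dataclasses import dataclass


@dataclass
class RequestTimestamps:
    """Timestamps (ms) for one request. Hypothetical field names.

    Assumes client and server clocks are synchronized; otherwise the
    cross-machine differences below are not reliable.
    """
    client_sent: float      # client starts sending the request
    server_enqueued: float  # request arrives in the server-side queue
    server_started: float   # server begins processing
    server_finished: float  # server finishes and sends the response
    client_received: float  # client has received the full response


def decompose(ts: RequestTimestamps) -> dict:
    """Split the client-observed response time into its components."""
    total = ts.client_received - ts.client_sent
    queue = ts.server_started - ts.server_enqueued
    processing = ts.server_finished - ts.server_started
    # Time not spent on the server is attributed to the network (request + response).
    network = total - (ts.server_finished - ts.server_enqueued)
    return {"total": total, "queue": queue, "processing": processing, "network": network}


if __name__ == "__main__":
    print(decompose(RequestTimestamps(0, 20, 50, 130, 160)))
```

With these illustrative numbers, 160 ms in total splits into 30 ms of queueing, 80 ms of processing, and 50 ms on the network, which is the kind of breakdown that points to where a bottleneck sits.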

Grouped more coarsely, response time falls into three categories:

- Client response time: the time from when the client sends a request until it receives the server's response.

- Network consumption time: the time the request and its response spend being transmitted over the network, covering both sending the request and receiving the response.

- Server processing time: the time the server spends, after receiving the request, processing it and returning a response.

To obtain data for these components, the following methods can be used:

- Client response time: Performance testing tools usually provide statistics on client response time, which can be obtained directly from the test tool's test report.

- Network consumption time: You can use network packet capture tools, such as Wireshark, to capture request and response network data packets and obtain network transmission time.

- Server-side processing time: Add logging or a timer to the server-side code to record when request processing starts and when it ends; the difference between the two is the server-side processing time.
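A timer like the one described can be sketched as a Python decorator. This is a minimal illustration, not any particular framework's mechanism: `handle_order` is a hypothetical handler whose `time.sleep` stands in for real work, and in a real web server the same idea would usually live in the framework's middleware. Note that it measures processing time only; time spent waiting in a queue before the handler runs is not included.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("timing")


def timed(handler):
    """Log how long the wrapped request handler takes, in milliseconds."""
    @functools.wraps(handler)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()  # processing starts
        try:
            return handler(*args, **kwargs)
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000  # end - start
            logger.info("%s took %.2f ms", handler.__name__, elapsed_ms)
            # Keep the latest measurement on the wrapper so callers can inspect it.
            wrapper.last_elapsed_ms = elapsed_ms
    wrapper.last_elapsed_ms = None
    return wrapper


@timed
def handle_order(order_id):
    """Hypothetical request handler; the sleep simulates server-side work."""
    time.sleep(0.05)
    return {"order_id": order_id, "status": "ok"}


if __name__ == "__main__":
    handle_order(42)
```

`time.perf_counter` is used rather than `time.time` because it is a monotonic, high-resolution clock intended for measuring durations.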

In actual testing, performance testing tools can collect and calculate much of this data automatically. For example, in Apache JMeter you can use the "Response Time Graph" listener to chart response times and the "View Results in Table" listener to see each sample's elapsed time together with its latency and connect time, which gives a rough view of the network share; server-side processing time still has to come from logs or timers.

By collecting and correlating the timing data for each component, we can break down the entire response time, analyze performance bottlenecks, and then carry out targeted optimization.

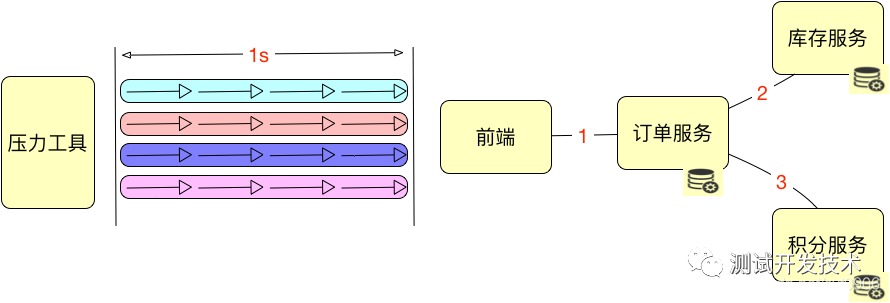

3. Number of concurrent users

The number of concurrent users is the number of users accessing the system simultaneously, that is, within the same short time window. In performance testing it is commonly used to:

- Measure the concurrency capability of the system: The number of concurrent users can be used to evaluate the performance of the system when processing multiple user requests at the same time. By increasing the number of concurrent users, you can test the system's performance under high load conditions and determine the system's concurrent processing capabilities.

- Discover system bottlenecks: By gradually increasing the number of concurrent users, you can observe whether system performance degrades as concurrency grows. If performance drops significantly once the number of concurrent users reaches its peak, there may be a bottleneck in the system that needs to be optimized.

- Evaluate the stability of the system: The increase in the number of concurrent users will increase the load on the system. By observing the stability of the system under high concurrency conditions, the reliability and stability of the system during long-term operation can be evaluated.

The exact procedure for testing with different numbers of concurrent users depends on the test requirements and tools. Here is a common approach:

- Define the test scenario: determine the upper limit and incremental step size for the number of concurrent users. For example, set the number of concurrent users to start from 10 and increase by 10 users each time until the preset maximum number of concurrent users is reached.

- Configure test tools: Use performance testing tools, such as Apache JMeter, configure the test plan, and set the number of threads in the thread group to the current number of concurrent users.

- Execute tests: Run performance tests and observe system performance metrics such as response time, throughput, etc.

- Increase the number of concurrent users: Gradually increase the number of concurrent users, repeating the configuration and execution steps until the preset maximum is reached.

- Analyze the results: Based on the test results, analyze how the system performs at different numbers of concurrent users, for example how response time and throughput change.
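The stepped procedure can be sketched as a loop over concurrency levels. Because a real load test needs a target system, this sketch substitutes an idealized model for the measurement step (an assumption, not real data): users send requests back to back with no think time, the hypothetical server handles at most `capacity_rps` requests per second, and each request takes `base_rt_s` until that capacity is reached. Response time then follows from Little's law, N = X × R.

```python
def concurrency_steps(start, step, maximum):
    """Concurrency levels for a stepped test, e.g. 10, 20, ... up to maximum."""
    return list(range(start, maximum + 1, step))


def simulate(users, capacity_rps, base_rt_s):
    """Idealized closed-system model standing in for a real test run (assumption).

    Until the server's capacity is reached, each request takes base_rt_s;
    beyond that, throughput is capped at capacity_rps and extra users queue.
    """
    throughput = min(users / base_rt_s, capacity_rps)
    response_time = users / throughput  # Little's law with zero think time: N = X * R
    return throughput, response_time


def run_stepped_test(start, step, maximum, capacity_rps=500.0, base_rt_s=0.1):
    """Run each concurrency step and collect (users, throughput, response time)."""
    results = []
    for users in concurrency_steps(start, step, maximum):
        # In a real test, a load tool (e.g. a JMeter thread group with `users`
        # threads) would run here and report measured values instead.
        throughput, rt = simulate(users, capacity_rps, base_rt_s)
        results.append((users, throughput, rt))
    return results


if __name__ == "__main__":
    for users, tp, rt in run_stepped_test(10, 10, 100):
        print(f"{users:>4} users  {tp:7.1f} req/s  {rt * 1000:6.1f} ms")
```

In this model, throughput rises linearly up to 50 users (500 req/s) and then flattens while response time keeps growing, which is the pattern the stepped test is meant to reveal. Real systems often also show throughput falling past the peak, which this simple model does not capture.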

Note that the maximum number of concurrent users is the largest number of user requests the system can handle simultaneously.

In performance testing it is mainly used to determine and evaluate how the system performs under maximum load. Testing at the maximum number of concurrent users establishes the system's ultimate capacity and helps plan system expansion and upgrades.

- If performance drops significantly when the system reaches the maximum number of concurrent users, there may be a bottleneck that needs to be optimized. The maximum number of concurrent users thus helps reveal bottlenecks under high load and guides optimization work.

- Testing of the maximum number of concurrent users can evaluate the reliability and stability of the system over long periods of time. By observing the performance of the system under maximum load, you can determine whether the system can run stably and avoid problems such as performance degradation or system crashes.

The maximum number of concurrent users can be determined in different ways depending on the test requirements and tools. Here is a common approach:

- Initial number of concurrent users: Set an initial number of concurrent users based on the estimated capacity and performance requirements of the system.

- Increase the number of concurrent users: Gradually increase the number of concurrent users, adding a certain number of users each time, for example, 10 users each time.

- Monitor system performance: After each increase in the number of concurrent users, observe the system's performance indicators, such as response time, throughput, etc. If the performance of the system drops significantly, it may mean that the system has reached the maximum number of concurrent users.

- Continue to increase the number of concurrent users: If the system's performance is still stable, you can continue to increase the number of concurrent users until the system's performance drops significantly or reaches the preset maximum number of concurrent users.
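"Performance drops significantly" needs a concrete threshold in practice. The sketch below assumes two hypothetical criteria, which you would tune to your own requirements: a step counts as degraded if its average response time exceeds twice that of the first step, or if its throughput falls more than 10% below the best throughput seen so far. It returns the last concurrency level that passed.

```python
def find_max_concurrency(results, rt_limit_factor=2.0, tp_drop=0.10):
    """Return the last concurrency level before performance drops significantly.

    results: list of (users, throughput_rps, avg_response_time_s), ordered by users.
    The default thresholds are illustrative assumptions, not a standard.
    """
    if not results:
        return None
    baseline_rt = results[0][2]  # response time at the lightest load
    best_tp = 0.0
    max_ok = None
    for users, tp, rt in results:
        too_slow = rt > rt_limit_factor * baseline_rt
        throughput_fell = tp < best_tp * (1 - tp_drop)
        if too_slow or throughput_fell:
            break  # stop increasing load at the first degraded step
        best_tp = max(best_tp, tp)
        max_ok = users
    return max_ok


if __name__ == "__main__":
    # Made-up measurements for illustration only.
    measurements = [(10, 95, 0.10), (20, 190, 0.11), (30, 270, 0.12),
                    (40, 300, 0.15), (50, 290, 0.19), (60, 240, 0.26)]
    print(find_max_concurrency(measurements))
```

With the made-up measurements, the 60-user step is the first whose response time (0.26 s) exceeds twice the baseline (0.2 s), so the function reports 50 users.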

It should be noted that the calculation method of the number of concurrent users may vary depending on the testing tool and testing requirements. In actual testing, adjustments and optimizations can be made according to specific conditions.

4. Throughput

Throughput is a very important indicator in performance testing.

Throughput is the amount of work a system can process or produce per unit time. For web systems, it usually means the number of requests processed or transactions completed per second. Its main uses are:

- Evaluate the upper limit of system processing capacity, reflecting the maximum load capacity.

- Compare the impact of different hardware architectures, algorithms, etc. on throughput.

- Trade off against other quality indicators to find a balance.

It is calculated as follows:

- The total number of requests completed during the test divided by the test duration.

- That is, the average number of requests completed per second.

- The peak of the throughput curve measured under different loads is the maximum throughput.

For example, if a server handles 1000 requests in 10 seconds, its throughput is:

1000/10 = 100 req/s

As the load increases, throughput first rises and then falls; the throughput at the peak is the server's maximum processing capacity. Throughput therefore directly reflects the system's processing capacity and is an important indicator for load testing and capacity planning. Higher throughput means more requests can be handled, but it has to be balanced against other indicators such as response time.
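The throughput formula and the peak-finding rule above translate directly into code. A minimal sketch; the load/throughput curve in the usage example is made-up illustrative data, not a measurement.

```python
def throughput(completed_requests, duration_s):
    """Requests completed per second over the test window."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return completed_requests / duration_s


def max_throughput(curve):
    """Peak of a throughput curve given as (load, throughput) pairs.

    Returns the (load, throughput) pair with the highest throughput.
    """
    return max(curve, key=lambda point: point[1])


if __name__ == "__main__":
    print(throughput(1000, 10))  # 100.0
    print(max_throughput([(50, 80.0), (100, 100.0), (150, 95.0)]))  # (100, 100.0)
```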

5. What is the difference between throughput and concurrency?

Throughput and the number of concurrent users are two different indicators in performance testing, and they have different meanings and applicable scenarios.

Throughput: the number of requests the system processes per unit time. It is mainly used to evaluate the system's processing capacity: measuring throughput tells you how many requests the system can handle under a given load. It is calculated by counting the requests completed per unit time.

Number of concurrent users: the number of user requests present at the same time, that is, how many user requests the system is currently carrying. It is mainly used to evaluate the system's concurrency capability and stability: measuring it tells you how many user requests the system can handle simultaneously under a given load. It is calculated by counting simultaneous user requests.

The two have the following differences:

- Throughput (Throughput) indicates the number of requests or transactions that the system can handle per unit time. The higher the throughput, the stronger the system's processing capabilities.

- The number of concurrent users (Concurrent Users) indicates the number of active users accessing the system at the same time. More concurrent users means more load on the system.

Their typical use cases:

- Throughput focuses more on testing the upper limit of the overall processing capacity of the system and is used to test the maximum load that the system can withstand.

- The number of concurrent users focuses on simulating the number of user visits in real scenarios and is used to test the performance of the system under different user numbers.

In short, throughput measures processing capacity, the number of requests the system processes per unit time, while the number of concurrent users is the number of user requests present at the same time, that is, the load the system is currently carrying.

For example, if a system has 100 concurrent users and a throughput of 200 requests per second, it processes 200 requests every second, and those requests may all come from the same 100 concurrent users.

For example, to test the peak processing capacity of an e-commerce website, you can increase the number of concurrent users step by step until the throughput peaks and starts to fall. The maximum throughput reached is the system's processing limit, and the corresponding number of concurrent users is the maximum number of users the system can support. You can then run tests at different numbers of concurrent users to measure response time, success rate, and other indicators in common scenarios. Both indicators therefore matter, and setting both appropriately gives a comprehensive picture of system performance: throughput testing finds the peak, while the number of concurrent users simulates real scenarios.
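The two numbers in this example are linked by Little's law from queueing theory: in a steady state, the number of users in the system equals throughput times the time each request cycle takes, N = X × (R + Z), where R is response time and Z is think time between requests. The sketch below is an illustration under that steady-state assumption; with zero think time, 100 concurrent users at 200 requests per second imply an average response time of 0.5 seconds.

```python
def implied_response_time(concurrent_users, throughput_rps, think_time_s=0.0):
    """Average response time implied by Little's law for a closed system.

    N = X * (R + Z)  =>  R = N / X - Z
    N: concurrent users, X: throughput (req/s), Z: think time (s).
    Assumes the system is in a steady state.
    """
    if throughput_rps <= 0:
        raise ValueError("throughput must be positive")
    return concurrent_users / throughput_rps - think_time_s


if __name__ == "__main__":
    print(implied_response_time(100, 200))  # 0.5
```

This is also a handy sanity check on test results: if the measured concurrency, throughput, and response time do not roughly satisfy this relation, the load generator or the measurements deserve a closer look.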

6. What exactly is load, and how does the number of requests differ from the concurrency number?

In performance testing, load is the pressure placed on a system, simulating the workload and concurrent requests the system faces in actual use.

Load, the number of requests, and the concurrency number differ as follows:

- Load: Load is a description of the overall pressure on the system, which includes various resource demands and operations faced by the system. Load can be composed of many aspects, such as user behavior, concurrent requests, data volume, network latency, etc. Loads can be used to simulate the different workloads faced by the system under actual usage.

- Number of requests: The number of requests refers to the number of requests sent to the system within a unit of time. It is one of the indicators to measure the processing power of the system. The number of requests is usually used to evaluate the system's processing capability under a given load, that is, the number of requests that the system can handle per unit time.

- Concurrency number: The concurrency number refers to the number of user requests that exist at the same time, indicating the number of user requests currently carried by the system. It is one of the indicators to measure the concurrency capability of the system. The concurrency number is usually used to evaluate the concurrency performance of the system under a given load, that is, the number of user requests that the system can handle simultaneously.

Suppose there is an online shopping website and we conduct performance testing to evaluate the performance of the system. In the test, we set up different load scenarios, including user behavior, concurrent requests and data volume.

- Load case: We first simulate real user behavior, such as browsing products, searching for products, adding items to the shopping cart, and placing orders. At the same time, we configure a certain number of concurrent requests to simulate multiple users accessing the website at once. We also add a large amount of product data to increase the load on the database. Together these form the load on the system, simulating the workload and pressure of real-world use.

- Number of requests and concurrency case: Under the load conditions above, we can count the requests sent to the system per unit time, such as requests per second; these counts show how well the system handles the given load. We can also count the user requests that exist at the same time, that is, the number of concurrent users, which shows the system's concurrency performance under that load.

The difference and relationship between load, the number of requests, and the concurrency number can be illustrated with a scenario: suppose we performance-test a shopping system with 2,000 users accessing and operating it within one hour. Then:

- Number of requests: During the 1-hour test, each user may perform multiple operations, such as searching for products, adding shopping carts, checking out, etc., and the total number of requests may reach 10,000.

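- Concurrency number: Suppose instead that each user sends a request every 10 seconds. Then about 2000/10 = 200 requests arrive per second; this is a request rate, not a concurrency. How many requests are being processed at the same instant also depends on the response time: by Little's law, if the average response time is 0.5 seconds, about 200 × 0.5 = 100 requests are in flight at once. (At this pacing the hour would produce 200 × 3,600 = 720,000 requests, far more than the 10,000 above, so this is a heavier scenario.)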

- Load: Over the entire 1-hour test, the system must withstand a total load of 10,000 requests.

Load therefore represents the overall request volume, the total number of requests the system must process within a given period. The concurrency number is the number of requests being processed at the same instant, and the number of requests is the total issued during the whole test. All three must be read together with the time interval. By adjusting the number of concurrent users and how often they send requests, you can generate different loads to test the system's performance.

These cases show the different meanings and uses of load, the number of requests, and the concurrency number in performance testing. Load describes the overall pressure on the system, while the number of requests and the concurrency number are indicators for evaluating the system's processing capacity and concurrency performance.
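The arithmetic that separates these three quantities can be sketched as a small helper. It assumes, hypothetically, that every user sends one request every `pacing_s` seconds for the whole test, that the system keeps up (steady state), and that the average response time is known; concurrency then follows from Little's law (in-flight requests = arrival rate × response time).

```python
def load_profile(users, pacing_s, duration_s, avg_response_time_s):
    """Derive arrival rate, total requests, and in-flight concurrency.

    Assumes each user sends one request every pacing_s seconds for the whole
    test and the system keeps up (steady state). All inputs are hypothetical.
    """
    if avg_response_time_s > pacing_s:
        # A user cannot send a new request before the previous one returns.
        raise ValueError("response time exceeds pacing; users cannot keep this pace")
    arrival_rate = users / pacing_s                 # requests per second
    total_requests = arrival_rate * duration_s      # overall request volume (load)
    in_flight = arrival_rate * avg_response_time_s  # Little's law: L = lambda * W
    return {
        "arrival_rate_rps": arrival_rate,
        "total_requests": total_requests,
        "concurrent_requests": in_flight,
    }


if __name__ == "__main__":
    print(load_profile(users=2000, pacing_s=10, duration_s=3600, avg_response_time_s=0.5))
```

For 2,000 users pacing at 10 seconds over one hour with a 0.5-second response time, this gives 200 requests per second, 720,000 requests in total, and about 100 requests in flight at any moment: three different numbers for three different questions.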

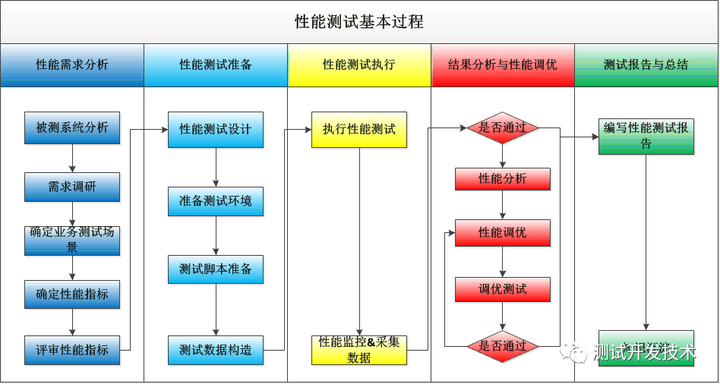

7. Performance testing process

In practice, performance testing follows broadly the same process. You can carry it out in the following steps:

- Clarify the purpose of testing

First of all, it is necessary to clarify the goal of this performance test, whether it is to know the maximum load capacity of the system or to test the performance in a typical scenario, etc.

- Select key indicators

Based on the purpose of the test, choose one or two of the most critical performance indicators to focus on, such as maximum response time, average response time, throughput, or error rate. It is neither necessary nor possible to test every indicator.

- Design test scenarios

Design reasonable test scenarios based on the actual usage scenarios of the system, and determine parameters such as the number of concurrent users, request volume, and test duration.

- Run test cases

Write test cases, run them in set scenarios, and generate loads for testing.

- Collect and analyze results

Use relevant tools to collect monitoring results of key performance indicators and conduct analysis.

- Locate and optimize

Find performance bottlenecks (CPU, I/O, network, etc.) based on the analysis results, then optimize them.

- Re-test

Repeat the test to verify the results of the optimization.
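The "Select key indicators" and "Collect and analyze results" steps can be made concrete with a small helper that turns raw samples into those indicators and flags any that miss their targets. This is a sketch: the `(response_time_s, succeeded)` sample format and the target limits are hypothetical, and real tools such as JMeter write their own result-file layouts that would need to be parsed first.

```python
from statistics import mean


def summarize(samples, duration_s):
    """Compute key indicators from raw samples.

    samples: list of (response_time_s, succeeded) tuples (hypothetical format).
    Throughput here counts all completed samples, including failed ones.
    """
    if not samples or duration_s <= 0:
        raise ValueError("need samples and a positive duration")
    times = [rt for rt, _ in samples]
    errors = sum(1 for _, ok in samples if not ok)
    return {
        "avg_response_time_s": mean(times),
        "max_response_time_s": max(times),
        "throughput_rps": len(samples) / duration_s,
        "error_rate": errors / len(samples),
    }


def check_targets(summary, targets):
    """Return the names of indicators that exceed their (hypothetical) upper limits."""
    return [name for name, limit in targets.items() if summary[name] > limit]


if __name__ == "__main__":
    samples = [(0.2, True), (0.4, True), (0.3, False), (0.1, True)]
    s = summarize(samples, duration_s=2)
    print(s)
    print(check_targets(s, {"avg_response_time_s": 0.5, "error_rate": 0.01}))
```

Keeping the targets next to the summary makes each re-test after an optimization a direct pass/fail comparison rather than a manual reading of reports.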

In short, you can start from a small scope, select the most critical indicators, design representative test scenarios, and use this as a starting point to gradually expand the test scope and optimize the system.

Hope the above information is helpful to you! If you have any questions, please feel free to ask me.
