As the White Dew season brings its autumn rain, ModelWhale has released a new round of updates that improve the user experience on several fronts.

This update includes the following changes:

- Improved the multi-person development workflow for in-house Canvas components (Team Edition ✓)
- Added support for using historical versions of Canvas components (Team Edition ✓)
- Added asynchronous requests for model services (Professional Edition ✓ Team Edition ✓)
- Improved model service instance monitoring (Professional Edition ✓ Team Edition ✓)
- Added pre-run code injection before analysis (Professional Edition ✓ Team Edition ✓)
- Added dataset co-editing and project view-only permission settings (Team Edition ✓)
- Added custom font size and line spacing in Notebook (Basic Edition ✓ Professional Edition ✓ Team Edition ✓)

1 Improved the multi-person development workflow for in-house Canvas components (Team Edition ✓)

ModelWhale Canvas combines "algorithm encapsulation" with "quick algorithm use". Algorithm engineers within an organization can develop their own Canvas components that encapsulate code, then distribute Canvas analysis templates for organization members to use directly, so that members can quickly build a research framework and complete data analysis with little code.

A component has three states: pending testing, in testing, and online. Three roles are usually involved: the component creator, component co-developers, and component users. After the component creator creates a component and submits it for testing, the creator and the co-developers can test, edit, and iterate on the algorithm component together. Multiple versions can be generated during this period to record the iteration process. Once the component is complete, the component creator brings it online as the final version (the intermediate versions are hidden automatically). After it is online, component users can freely use the algorithm component in their own Canvas.

A Canvas component that is already online can still be edited and iterated on: the component creator and co-developers can adjust the code, parameters, ports, and other information based on the online version, and the component creator can then publish a new component version (hiding other component versions is also supported). For more instructions, see: Canvas low-code tool (development).

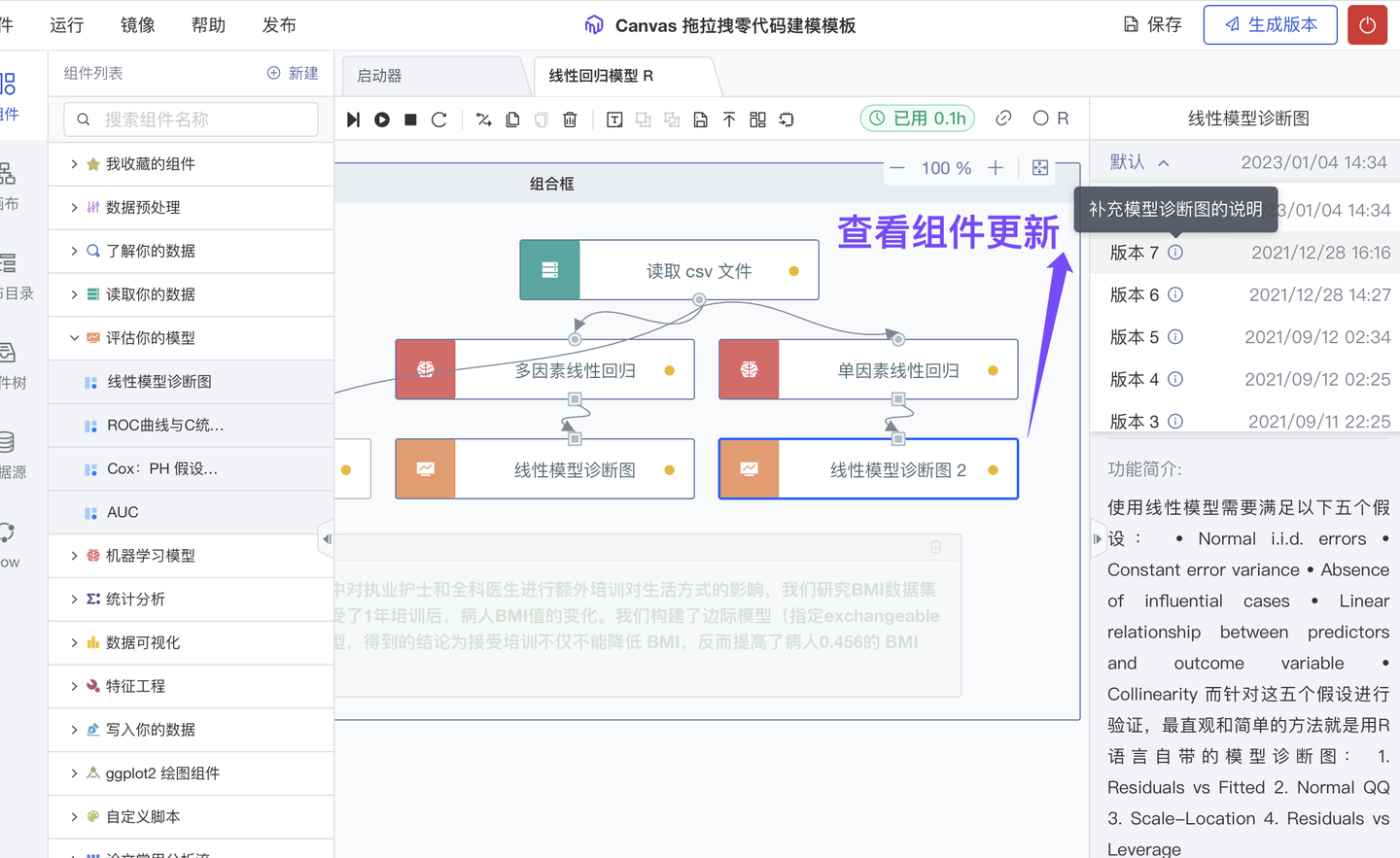

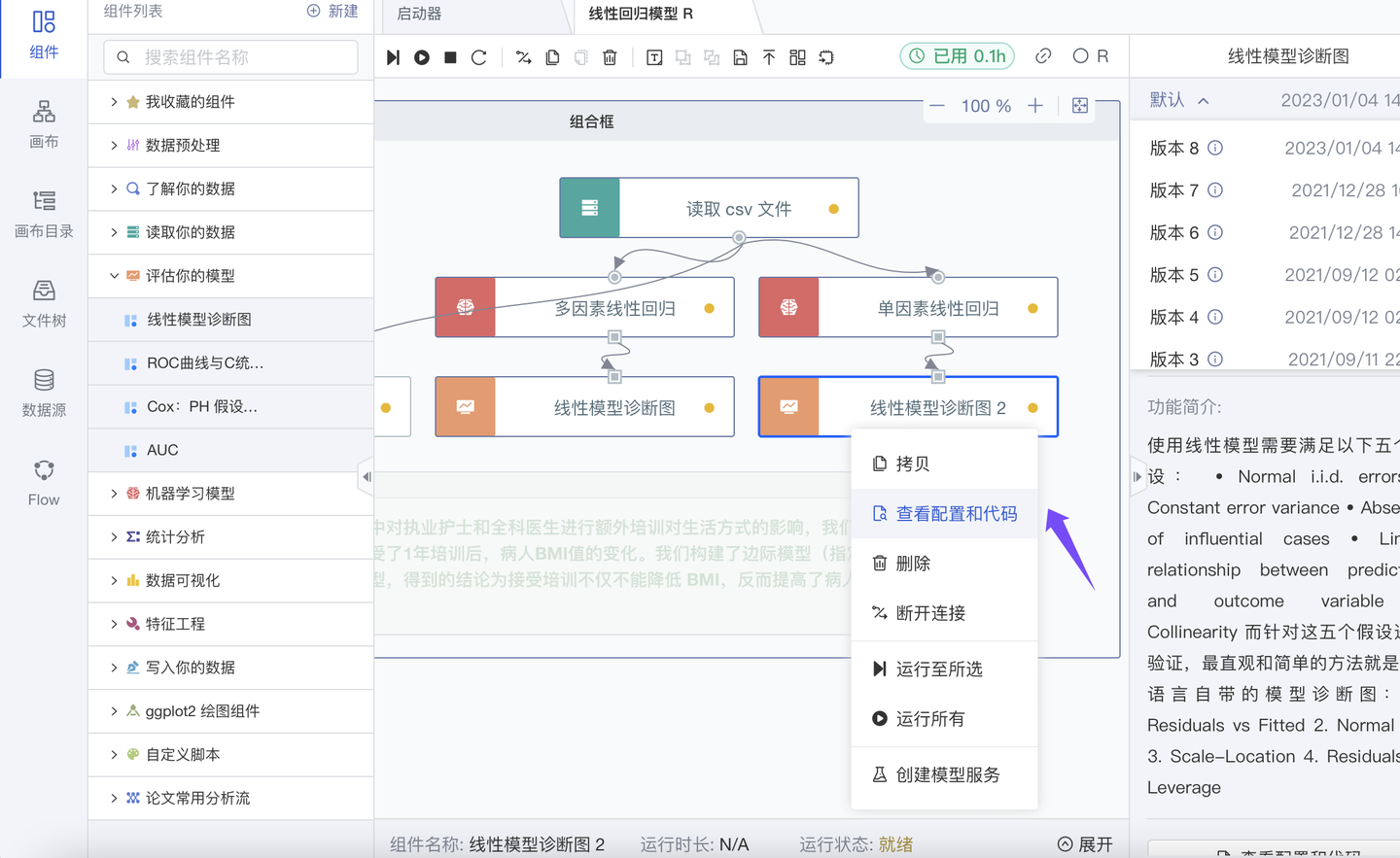
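The component lifecycle described in this section can be pictured as a small state machine. The sketch below is purely illustrative and is not ModelWhale's implementation: `ComponentState`, `ALLOWED`, and `advance` are hypothetical names, and the rule that only the creator changes the state is read from the description above rather than from any documented API.

```python
from enum import Enum


class ComponentState(Enum):
    PENDING_TEST = "pending testing"
    IN_TESTING = "in testing"
    ONLINE = "online"


# Forward transitions named in the text; anything else is rejected.
# (How re-editing an online component maps onto states is not modeled here.)
ALLOWED = {
    ComponentState.PENDING_TEST: {ComponentState.IN_TESTING},
    ComponentState.IN_TESTING: {ComponentState.ONLINE},
    ComponentState.ONLINE: set(),
}


def advance(current, target, role):
    """Return the new state, allowing only the creator to change it."""
    if role != "creator":
        raise PermissionError("only the component creator can change the state")
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


state = ComponentState.PENDING_TEST
state = advance(state, ComponentState.IN_TESTING, role="creator")
state = advance(state, ComponentState.ONLINE, role="creator")
print(state.value)
```

Co-developers and users would edit or use the component without calling `advance` at all, which is why the sketch rejects any role other than the creator.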

2 Added support for using historical versions of Canvas components (Team Edition ✓)

After the algorithm engineers (component developers) in an organization finish iterating on a component and publish a new version, organization users (component users) can now keep using earlier versions of that component in their existing Canvas analysis templates, and their Canvas Flow workflows continue to run. Component users no longer need to learn the "component version concept" or read the "component change log", which also reduces the explanation burden on component developers.

Updates to a Canvas component are backward compatible, that is, existing functionality remains available after an update. If needed, organization users can "switch component version" or "choose to use the default version" at any time. For more instructions, see: Canvas low-code tool (usage).

3 Added asynchronous requests for model services (Professional Edition ✓ Team Edition ✓)

To apply a trained model to real scenarios, it has to be deployed as a model service, so that the model's value can be realized in real applications and feed back into iterative optimization of the model. However, model services with a long single-inference time cause problems for callers: model developers have to modify the nginx timeout each time, or convert requests to asynchronous ones at the application layer, before the model can be called smoothly. The ModelWhale model REST service now supports two request modes, [synchronous return] and [asynchronous return]: (1) model services with synchronous requests additionally support setting a "scaling mechanism" and a "scaling threshold"; (2) model services with asynchronous requests support an "abandon" option in ModelWhale call monitoring. Together these cover model-calling needs across scenarios. For more usage instructions, see: Model Service Deployment.

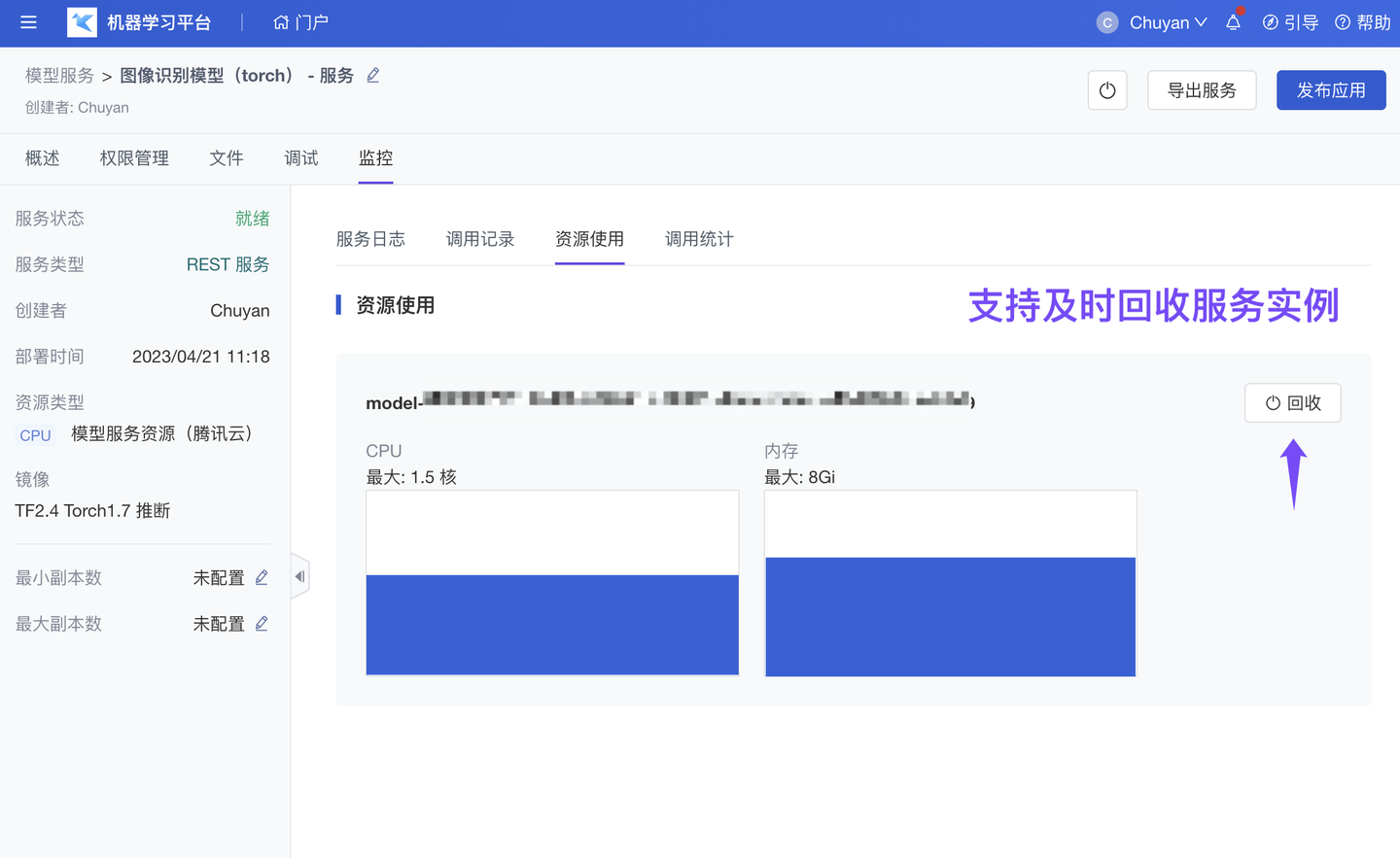
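To make the difference between the two request modes concrete, here is a minimal, self-contained sketch of the asynchronous pattern: the caller submits a request, gets a task ID back right away, and polls for the result. It runs entirely in-process and does not call ModelWhale; `AsyncModelService`, `slow_inference`, and the method names are hypothetical stand-ins, and a real deployment would expose this over HTTP with endpoints not shown here.

```python
import time
import uuid
from concurrent.futures import ThreadPoolExecutor


def slow_inference(x):
    """Stand-in for a model whose single inference takes a long time."""
    time.sleep(0.2)
    return x * 2


class AsyncModelService:
    """Toy service: submit() returns at once, status()/result() are polled."""

    def __init__(self):
        self._pool = ThreadPoolExecutor(max_workers=2)
        self._tasks = {}

    def submit(self, x):
        task_id = uuid.uuid4().hex
        self._tasks[task_id] = self._pool.submit(slow_inference, x)
        return task_id

    def status(self, task_id):
        return "done" if self._tasks[task_id].done() else "running"

    def result(self, task_id):
        # Blocks until the task finishes, then returns its value.
        return self._tasks[task_id].result()


service = AsyncModelService()
task_id = service.submit(21)          # returns immediately
while service.status(task_id) != "done":
    time.sleep(0.05)                  # the caller is free to do other work here
print(service.result(task_id))        # prints 42
```

Because no connection is held open while the model runs, the caller never hits a proxy timeout, which is the problem the asynchronous mode is meant to avoid.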

4 Improved model service instance monitoring (Professional Edition ✓ Team Edition ✓)

ModelWhale supports resource usage monitoring for deployed model services, so that model developers can: (1) scale out promptly when usage of a model service grows and crosses a threshold; (2) promptly reclaim model service instances that are no longer in use (for example, old instances left over after a configuration change), avoiding wasted resources.

In addition to CPU model services, ModelWhale now also supports monitoring GPU model services: model developers can view the GPU memory and GPU utilization of a GPU model service in ModelWhale to track how the service is actually running. For more instructions, see: Model Service Deployment - Model Monitoring.
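The two monitoring goals above (scale out past a threshold, reclaim idle instances) amount to a simple decision rule. The sketch below illustrates that rule on made-up numbers; `InstanceMetrics`, `recommend`, the field names, and the 0.8 default threshold are all assumptions for illustration and do not reflect ModelWhale's actual metrics or defaults.

```python
from dataclasses import dataclass


@dataclass
class InstanceMetrics:
    name: str
    gpu_util: float          # fraction of GPU compute in use, 0.0 to 1.0
    gpu_mem_util: float      # fraction of GPU memory in use, 0.0 to 1.0
    requests_last_hour: int  # how many calls the instance served recently


def recommend(m, scale_threshold=0.8):
    """Return 'reclaim', 'scale out', or 'keep' for one instance."""
    if m.requests_last_hour == 0:
        return "reclaim"      # unused, e.g. an old instance after a config change
    if max(m.gpu_util, m.gpu_mem_util) >= scale_threshold:
        return "scale out"    # usage has crossed the threshold
    return "keep"


instances = [
    InstanceMetrics("old-config", 0.0, 0.1, 0),
    InstanceMetrics("busy", 0.93, 0.71, 1200),
    InstanceMetrics("steady", 0.40, 0.35, 300),
]
for m in instances:
    print(m.name, "->", recommend(m))
```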

5 Added pre-run code injection before analysis (Professional Edition ✓ Team Edition ✓)

Depending on your research and analysis habits, you may want to preload certain libraries or analysis code, or inject environment variables, before starting data exploration, so that the research environment you need is in place. You can now enter this code in ModelWhale under "Personal Settings - Preferences - Inject Kernel Code".

Once you have saved the configuration and enabled it before entering the programming interface (Notebook, Canvas), the code is executed automatically every time you connect to the Kernel (including the first connection, reconnection after a disconnect, and connection after a Kernel restart), building the analysis environment you need and making your research work more efficient.
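As an illustration, pre-run code of this kind might look like the snippet below. It is only a sketch of what a user could paste into the setting: the environment variable names (`PROJECT_ENV`, `DATA_DIR`) and the `~/helpers` directory are placeholders, not values ModelWhale defines. Because the code runs on every Kernel connection, it is written to be safe to execute repeatedly.

```python
import logging
import os
import sys

# Environment variables the analysis code expects (names are placeholders).
# setdefault() leaves any value that is already set untouched.
os.environ.setdefault("PROJECT_ENV", "research")
os.environ.setdefault("DATA_DIR", os.path.join(os.path.expanduser("~"), "data"))

# Make a personal helper directory importable, if it exists.
helpers = os.path.join(os.path.expanduser("~"), "helpers")
if os.path.isdir(helpers) and helpers not in sys.path:
    sys.path.insert(0, helpers)

# Preload commonly used libraries; warn instead of failing if one is missing.
try:
    import numpy as np
    import pandas as pd

    pd.set_option("display.max_columns", 50)
except ImportError:
    logging.warning("numpy/pandas are not installed in this kernel")
```

Keeping the snippet short and free of slow operations matters here, since it adds to the time of every connection and reconnection.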

6 Added dataset co-editing and project view-only permission settings (Team Edition ✓)

ModelWhale has a strict yet flexible permission system for secure and efficient content sharing and collaboration.

(1) For datasets, we have added a "can co-edit" permission alongside the existing "can view and use" and "can distribute and manage" permissions, so that others can edit and update data files, description documents, database and object storage connections, and other information;

(2) For code projects, we have added a "view only" permission alongside the existing "can fork (generate a copy of the content)" and "can co-edit" permissions: others can only browse the project content online and cannot fork it (generate a copy of the content) or download it for transfer and reproduction.

Other content entities on the ModelWhale data science platform, including but not limited to pre-trained models, factor result libraries, parameter combination result libraries, algorithm libraries, and model services, support similar permission control.
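The three project permission levels can be thought of as an ordered scale. The sketch below models them that way for illustration only: `ProjectPermission` and the helper functions are hypothetical, and the assumption that each level includes the rights of the level below it (for example, that co-editors can also fork) is not stated in this announcement.

```python
from enum import IntEnum


class ProjectPermission(IntEnum):
    VIEW_ONLY = 1     # browse the project online only
    CAN_FORK = 2      # may also fork (generate a copy of) the project
    CAN_CO_EDIT = 3   # may also edit the project together with the owner


def can_view(p):
    return p >= ProjectPermission.VIEW_ONLY


def can_fork_or_download(p):
    # View-only users cannot fork or download, per the description above.
    return p >= ProjectPermission.CAN_FORK


def can_edit(p):
    return p >= ProjectPermission.CAN_CO_EDIT


for level in ProjectPermission:
    print(level.name, can_view(level), can_fork_or_download(level), can_edit(level))
```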

7 Added custom font size and line spacing in Notebook (Basic Edition ✓ Professional Edition ✓ Team Edition ✓)

ModelWhale Notebook presents code well and can serve as a "project report" that carries your research results.

For content display, you can (1) "cite" past reports across projects and notebooks, such as historical test data analysis results or figures and conclusions from professional reports, to make a research report more rigorous; you can also (2) hide code input and show only code output to make the report more concise.

For content formatting, in addition to (1) using Markdown Cells to structure the document outline, Notebook now (2) supports custom font size and line spacing for better-looking research reports.

In addition, clicking a Code Cell now puts it into edit mode by default, so you can start editing code faster.

For more instructions, see: Notebook - Analysis report writing.

That covers everything in this ModelWhale version update.

Visit ModelWhale.com to try the Professional Edition (personal research) or the Team Edition (organizational collaboration) for free and get free CPU and GPU computing power. (A desktop or laptop computer is recommended for the trial.)

If you have suggestions or questions about ModelWhale, or need to extend your trial, please [Contact MW]. MoMo is happy to help.