Authors: Duan Zhongjie, Liu Bingyan, Wang Chengyu, Zou Xinyi, Huang Jun

Overview

In the past few years, AI-generated content (AIGC) has developed rapidly, and the Stable Diffusion model has come to prominence in this field. To promote further development, the Alibaba Cloud Machine Learning PAI team took the Stable Diffusion model structure as a reference, adapted it to the characteristics of the Chinese language, processed and filtered a large amount of pre-training data, and optimized the training process. The resulting PAI-Diffusion Chinese text-to-image generation model achieves a significant improvement in image quality and style diversity. The PAI-Diffusion pipeline includes not only the standard diffusion model but also a Chinese CLIP cross-modal alignment model, allowing it to generate high-resolution images in various scenarios that match Chinese text descriptions. In addition, we launched BeautifulPrompt, PAI's self-developed prompt beautifier, which enables Stable Diffusion applications to create attractive images with one click.

In this work, we extend the PAI-Diffusion Chinese model family to multiple application scenarios, supporting common functions such as text-to-image generation, image-to-image generation, image inpainting, LoRA, and ControlNet. To better engage with the open source community, we have open-sourced all 12 PAI-Diffusion Chinese models (including base models, LoRA, ControlNet, etc.). Users can download and use them freely, and we hope to work with developers to advance AI-generated content technology and create more creative and impactful work. In addition, we have released two open source inference tools for the PAI-Diffusion Chinese models. Chinese Diffusion WebUI, a plug-in for Stable Diffusion WebUI, is seamlessly compatible with PAI-EAS and supports launching Chinese AIGC applications on PAI-EAS with one click within 5 minutes. Diffusers-API likewise supports rapid deployment and inference of Chinese models.

Below, we describe in detail how to use the PAI-Diffusion Chinese model family and its tools, Chinese Diffusion WebUI and Diffusers-API.

Multi-scenario PAI-Diffusion Chinese model family

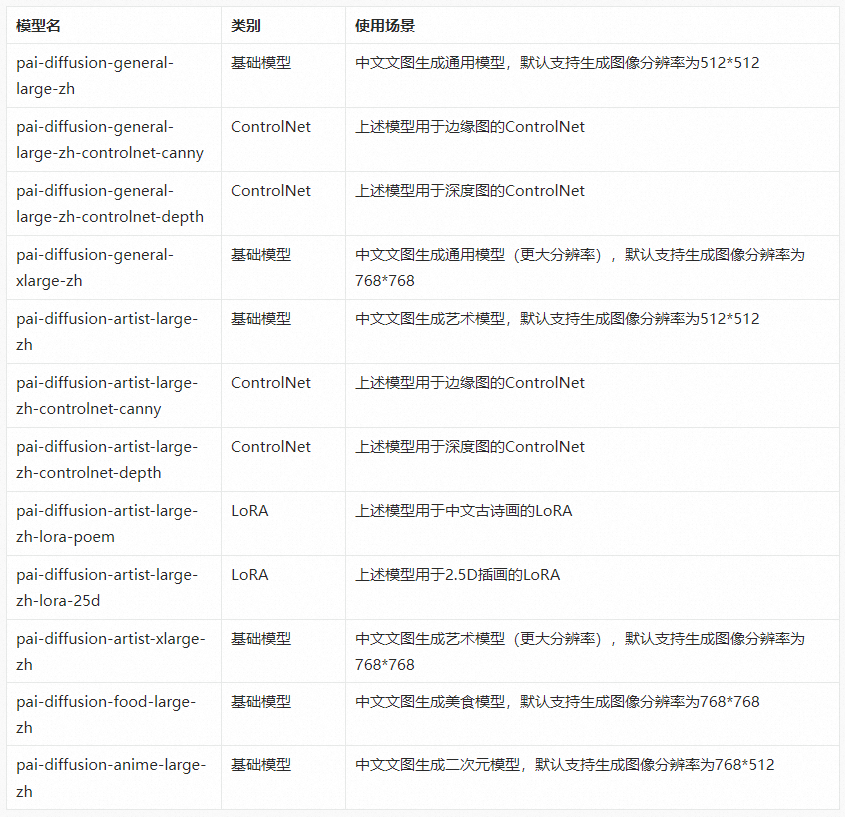

We trained the following 12 models, including base models, LoRA, ControlNet, etc., on a massive collection of Chinese image-text pairs. The model list is as follows:

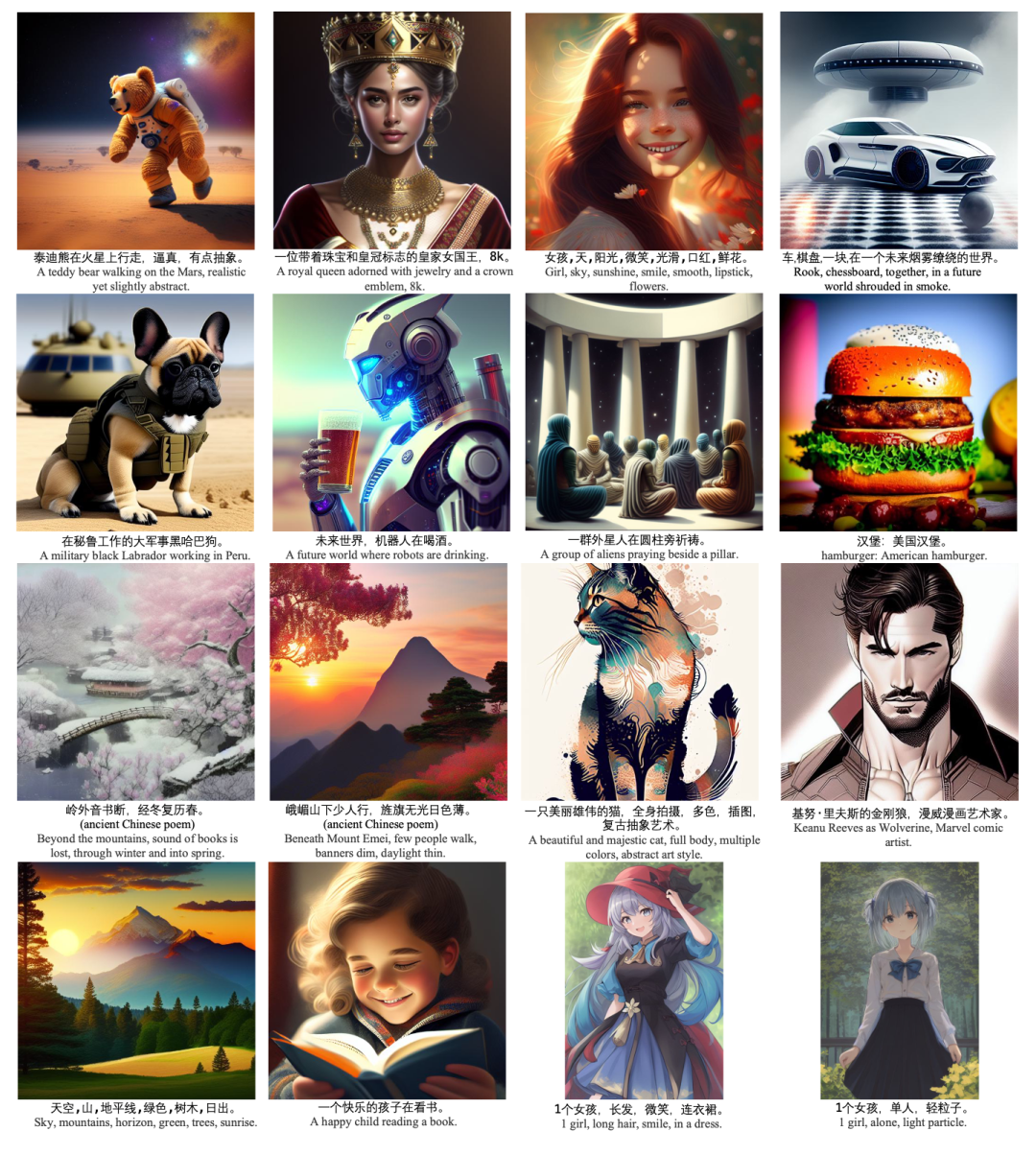

All of the above models can be downloaded from our Hugging Face Space and can also be called through ModelScope.

Three application scenarios of the PAI-Diffusion Chinese models, with sample outputs, are given below:

Application scenario 1: Given a draft image and the corresponding prompt, generate a detailed artistic drawing.

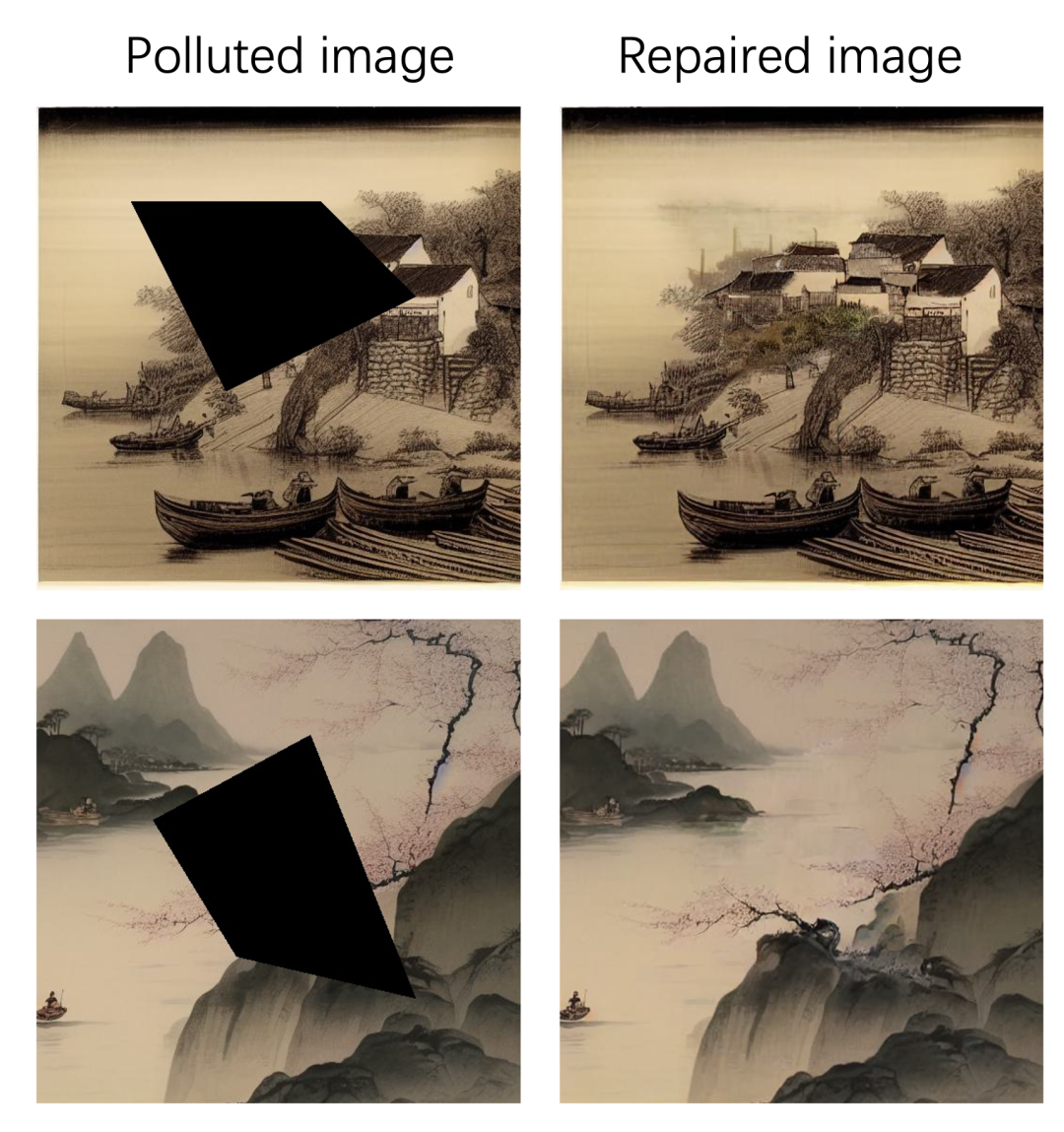

Application scenario 2: Restoring contaminated or damaged images of ancient poems and paintings, that is, image inpainting.

Application scenario 3: Drawing ancient Chinese indoor scenes for Chinese-style games.

To maximize the quality of the generated images, we collected a large number of open source image-text pair datasets, including the large-scale Chinese cross-modal pre-training dataset WuKong and the large-scale multilingual multimodal dataset LAION-5B. We also collected datasets from different fields and scenarios to broaden the application scenarios of the PAI-Diffusion Chinese model family. We applied several cleaning methods to the images and text to filter out low-quality data, including NSFW (Not Safe For Work) filtering and removal of watermarked data. We also computed CLIP scores and aesthetic scores and filtered out samples with low scores, to ensure the semantic consistency and quality of the generated images. To adapt to Chinese semantics, our CLIP text encoder is the Chinese CLIP model developed in EasyNLP (https://github.com/alibaba/EasyNLP), which helps the model better understand Chinese.
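The filtering steps described above can be illustrated with a minimal sketch. It assumes that image and text embeddings, aesthetic scores, and NSFW and watermark flags have already been computed by separate models. The `Sample` class, the function names, and both thresholds are hypothetical and chosen only for illustration; the source does not state the actual thresholds or implementation.

```python
import math
from dataclasses import dataclass


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (used here as the CLIP score)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Sample:
    """One image-text pair with precomputed signals (hypothetical structure)."""
    caption: str
    image_embedding: list
    text_embedding: list
    aesthetic_score: float
    is_nsfw: bool = False
    has_watermark: bool = False


def filter_samples(samples, min_clip_score=0.25, min_aesthetic=5.0):
    """Keep pairs that pass every check; the thresholds are illustrative assumptions."""
    kept = []
    for s in samples:
        # Drop NSFW and watermarked data first.
        if s.is_nsfw or s.has_watermark:
            continue
        # Drop pairs whose image and caption are poorly aligned, or whose image looks poor.
        clip_score = cosine_similarity(s.image_embedding, s.text_embedding)
        if clip_score < min_clip_score or s.aesthetic_score < min_aesthetic:
            continue
        kept.append(s)
    return kept
```

In practice the two thresholds trade dataset size against quality, so they would need tuning on the actual data.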

PAI-Diffusion Chinese model deployment tool

This section describes in detail the two open source tools for the PAI-Diffusion Chinese models. Chinese Diffusion WebUI is a plug-in that is seamlessly compatible with PAI-EAS and supports launching Chinese AIGC applications with one click within 5 minutes. Diffusers-API supports rapid deployment and inference of Chinese models through APIs.

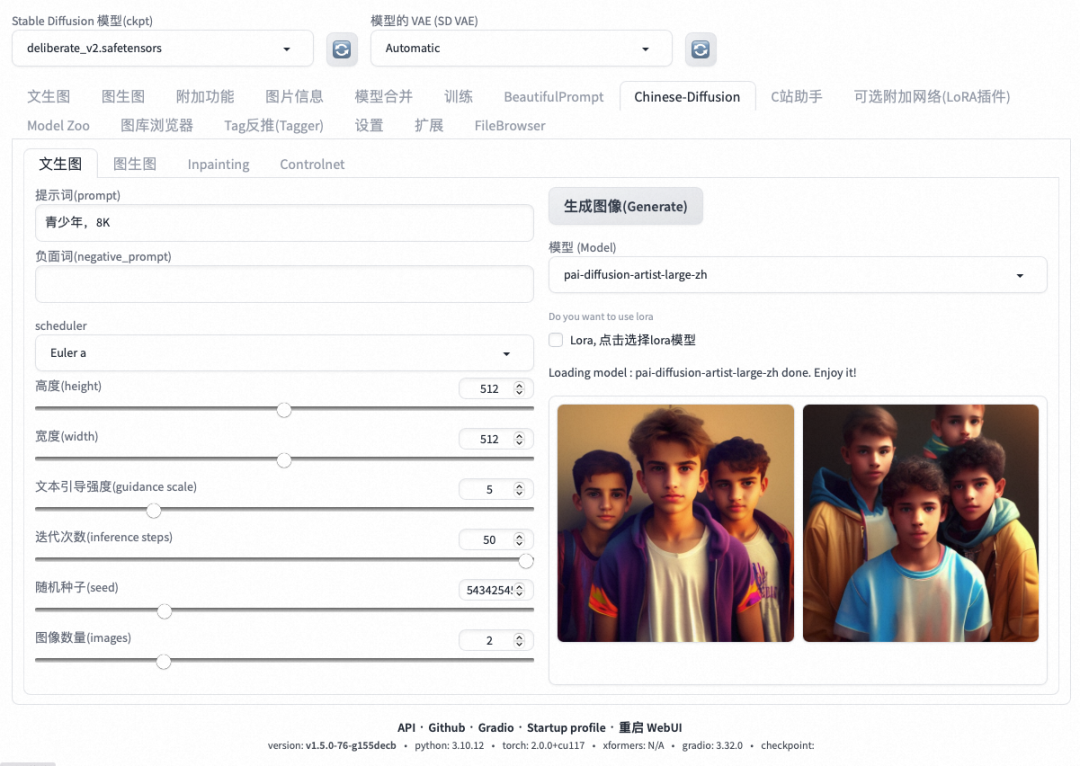

Chinese Diffusion WebUI

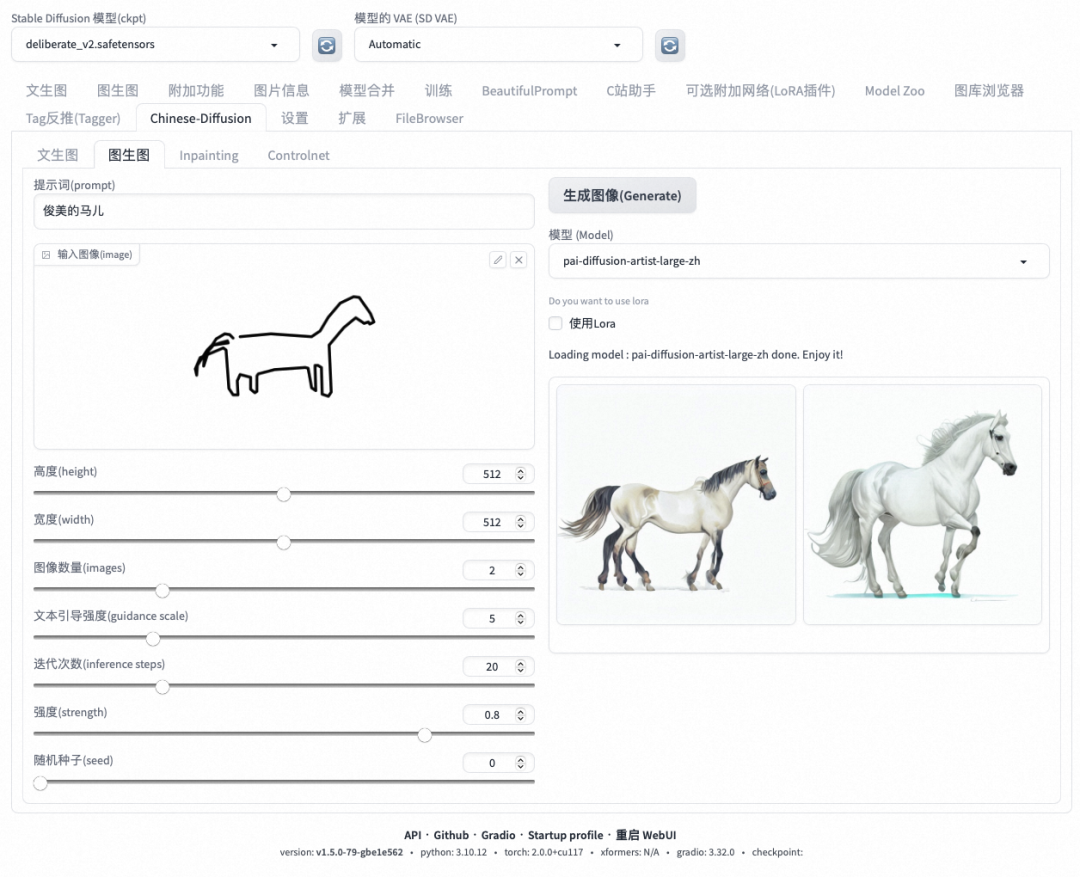

Since Stable Diffusion WebUI does not natively support Chinese models, we developed Chinese Diffusion WebUI and provide it as a plug-in for Stable Diffusion WebUI. It offers a graphical user interface that lets users (especially designers without programming experience) use the various functions of the PAI-Diffusion Chinese models, such as text-to-image generation, image-to-image generation, image style transfer, and image editing. The interface of Chinese Diffusion WebUI is shown below:

To make Chinese Diffusion WebUI easy to use on PAI-EAS, the plug-in supports two modes, a stand-alone version and a cluster version, which users can choose between according to their needs and resources. In the stand-alone version, a user runs Chinese Diffusion WebUI on a dedicated node, which is especially convenient for individual designers. The cluster version uses PAI's elastic inference service for parallel processing, sharing computing resources efficiently and thereby achieving higher resource utilization.

In addition, Chinese Diffusion WebUI can also be used in non-PAI-EAS environments. Users only need to download the Chinese Diffusion WebUI plug-in and place it in the plug-in directory of the standard Stable Diffusion WebUI for local use.
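The local installation step can be sketched in a few lines of Python. This is a minimal sketch that assumes the plug-in has already been downloaded to a local folder and that the Stable Diffusion WebUI checkout uses its usual `extensions` directory for plug-ins. The function name and both paths are hypothetical placeholders.

```python
import shutil
from pathlib import Path


def install_plugin(plugin_dir, webui_root):
    """Copy a downloaded plug-in folder into <webui_root>/extensions.

    Both arguments are caller-supplied paths; nothing here is specific to
    the actual plug-in repository layout, which is an assumption.
    """
    plugin_dir = Path(plugin_dir)
    if not plugin_dir.is_dir():
        raise FileNotFoundError(f"plug-in folder not found: {plugin_dir}")

    extensions_dir = Path(webui_root) / "extensions"
    extensions_dir.mkdir(parents=True, exist_ok=True)

    # Keep the plug-in's own folder name under extensions/.
    target = extensions_dir / plugin_dir.name
    shutil.copytree(plugin_dir, target, dirs_exist_ok=True)  # Python 3.8+
    return target
```

After copying, restarting Stable Diffusion WebUI should let it pick up the plug-in from the extensions directory.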

Diffusers-API

Diffusers-API is an open source text-to-image generation cloud service SDK built on Diffusers and developed by the Alibaba Cloud Machine Learning PAI team. Using the container images provided by this project, users can directly deploy various diffusion-related services on PAI-EAS, such as text-to-image generation, image-to-image generation, LoRA, and ControlNet. Diffusers-API also applies PAI-Blade inference optimization to the model, reducing end-to-end inference latency by a factor of 2.3 while significantly reducing memory usage, surpassing industry state-of-the-art optimization methods such as TensorRT-v8.5.

In Diffusers-API, we use StableDiffusionLongPromptWeightingPipeline as the default inference interface, which supports weighted English prompts with no length restriction. However, the default Diffusers inference interface cannot seamlessly handle Chinese text. We extended StableDiffusionLongPromptWeightingPipeline to automatically detect the language from the text encoder of the loaded model and adapt accordingly, so that both community Stable Diffusion models and PAI-Diffusion Chinese models can be deployed with one click without modifying any Diffusers-API interface. An example HTTP request body is as follows:

    {
        "task_id": "001",
        "prompt": "一只可爱的小猫咪",
        "negative_prompt": "模糊",
        "cfg_scale": 7,
        "steps": 25,
        "image_num": 1,
        "width": 512,
        "height": 512,
        "use_base64": true
    }
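The request body above can be built and sent from Python as in the following minimal sketch, which uses only the standard library. The field names come from the example above. The service URL, the use of an `Authorization` header carrying a token, and the helper names are assumptions for illustration. The response format is not described in this article, so the sketch returns the raw response bytes.

```python
import json
import urllib.request


def build_request_body(prompt, negative_prompt="", task_id="001",
                       cfg_scale=7, steps=25, image_num=1,
                       width=512, height=512, use_base64=True):
    """Assemble the request fields shown in the example body."""
    return {
        "task_id": task_id,
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "cfg_scale": cfg_scale,
        "steps": steps,
        "image_num": image_num,
        "width": width,
        "height": height,
        "use_base64": use_base64,  # serialized as JSON `true` / `false`
    }


def build_request(url, body, token=None):
    """Create a POST request with a UTF-8 JSON payload.

    `url` and `token` are placeholders for a deployed service endpoint
    and its access token (assumed, not taken from the article).
    """
    data = json.dumps(body, ensure_ascii=False).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = token
    return urllib.request.Request(url, data=data, headers=headers, method="POST")


def send(request, timeout=120):
    """Send the request and return the raw response bytes."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

Passing `ensure_ascii=False` keeps the Chinese prompt as readable UTF-8 in the payload instead of `\u` escapes; either form is valid JSON.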

The steps to deploy the PAI-Diffusion Chinese model are detailed here.

Summary

With the earlier open source release of the PAI-Diffusion Chinese model, we improved the quality and style diversity of image generation and made it possible to generate high-resolution images of various scenes described in Chinese text. We also launched the self-developed prompt beautifier BeautifulPrompt, which gives Stable Diffusion applications one-click image beautification. In this work, we not only extended the PAI-Diffusion Chinese model family to multiple application scenarios but also fully open-sourced 12 PAI-Diffusion Chinese models, including base models, LoRA, ControlNet, etc. We hope this work gives developers more creative possibilities and opportunities for innovation. In addition, we released two open source tools, Chinese Diffusion WebUI and Diffusers-API, to make the models convenient to use. Chinese Diffusion WebUI is a plug-in that is seamlessly compatible with PAI-EAS, allowing users to build Chinese AIGC applications within 5 minutes, while Diffusers-API supports rapid deployment and inference of Chinese models. We look forward to working with developers to advance AI-generated content technology and create more creative and influential works.
