1. StatefulSet operation


1. First experience

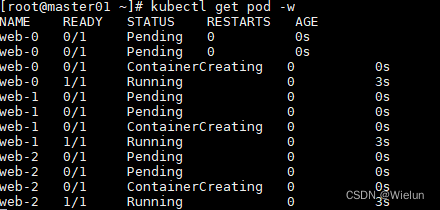

The Pods start in order: each Pod starts only after the previous one is Running and Ready. A failed health check in one container therefore also blocks the startup of the Pods that follow it.

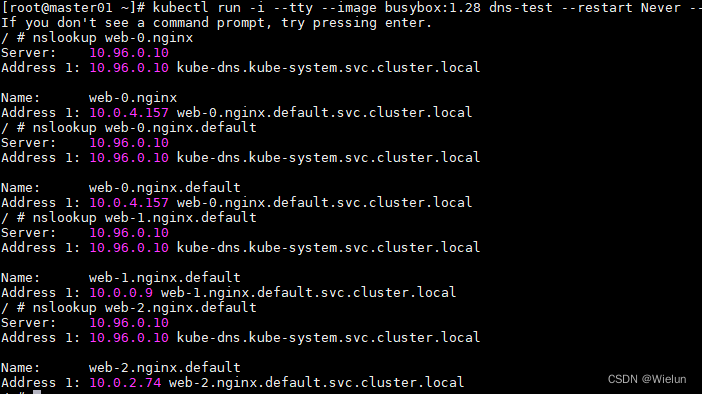

Domain name: each Pod gets a stable DNS name such as web-2.nginx.default.svc.cluster.local, which is usually shortened to web-2.nginx.default.
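The name above follows a fixed pattern: the Pod name (StatefulSet name plus ordinal), the headless Service name, the namespace, then svc and the cluster domain. A minimal shell sketch of that pattern; the function name sts_pod_fqdn is hypothetical, and cluster.local is assumed as the cluster domain unless a fifth argument overrides it:

```shell
# Build the stable DNS name of a StatefulSet Pod behind a headless Service.
# Arguments: StatefulSet name, ordinal, headless Service name, namespace,
# and optionally the cluster domain (assumed to be cluster.local).
sts_pod_fqdn() {
    printf '%s-%s.%s.%s.svc.%s\n' "$1" "$2" "$3" "$4" "${5:-cluster.local}"
}

sts_pod_fqdn web 2 nginx default
```

From a Pod in the same namespace, the shorter forms web-2.nginx and web-2.nginx.default resolve as well, because the resolver appends the search domains from /etc/resolv.conf.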

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dns-test 1/1 Running 0 2m2s 10.0.4.103 master01 <none> <none>

web-0 1/1 Running 0 9m45s 10.0.4.157 master01 <none> <none>

web-1 1/1 Running 0 9m42s 10.0.0.9 master03 <none> <none>

web-2 1/1 Running 0 9m39s 10.0.2.74 node01 <none> <none>

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d2h

nginx ClusterIP None <none> 80/TCP 41s

[root@master01 ~]# kubectl get pod -w

Test:

[root@master01 ~]# kubectl run -i --tty --image busybox:1.28 dns-test --restart Never --rm

When the Pods are deleted and recreated, their hostnames and DNS names do not change.

Only the IP addresses change; everything else stays the same.

[root@master01 ~]# kubectl delete pod -l app=nginx

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dns-test 1/1 Running 0 5m3s 10.0.4.103 master01 <none> <none>

web-0 1/1 Running 0 47s 10.0.4.152 master01 <none> <none>

web-1 1/1 Running 0 44s 10.0.0.211 master03 <none> <none>

web-2 1/1 Running 0 41s 10.0.2.48 node01 <none> <none>

2. Scaling up and down

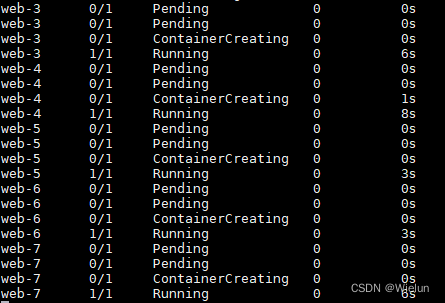

(1) Scaling up

New Pods are created in the same ordinal order as at initial startup.

[root@master01 ~]# kubectl scale statefulset web --replicas 8

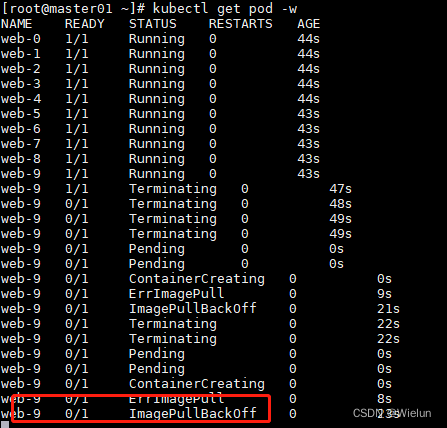

[root@master01 ~]# kubectl get pod -w

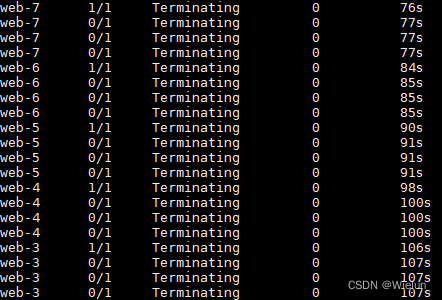

(2) Scaling down

Both kubectl scale and kubectl patch work. Pods are removed from the highest ordinal down: web-7 is deleted first, then web-6, and so on.

[root@master01 ~]# kubectl scale statefulset web --replicas 3

[root@master01 ~]# kubectl patch sts web -p '{"spec":{"replicas":3}}'

[root@master01 ~]# kubectl get pod -w

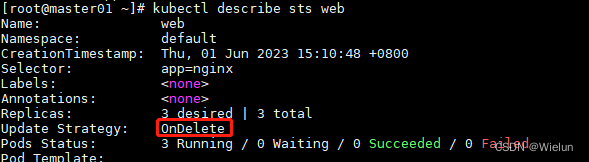
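The removal order can be written down as a small loop. This is only an illustrative sketch of the ordering rule (highest ordinal first), not controller code, and the function name scale_down_order is hypothetical:

```shell
# Print the Pods a StatefulSet removes when scaling down, highest ordinal first.
# Arguments: StatefulSet name, current replicas, target replicas.
scale_down_order() {
    i=$(( $2 - 1 ))
    while [ "$i" -ge "$3" ]; do
        printf '%s-%s\n' "$1" "$i"
        i=$(( i - 1 ))
    done
}

# Scaling web from 8 replicas to 3 removes web-7 down to web-3.
scale_down_order web 8 3
```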

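(3) OnDelete update

The default update strategy is RollingUpdate. With OnDelete, a Pod picks up a template change only after it has been deleted manually.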

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  updateStrategy:
    type: OnDelete
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl describe sts web

Test:

After the template is changed, the image of the running Pods is not updated:

[root@master01 ~]# kubectl patch statefulsets.apps web --type='json' -p '[{"op":"replace","path":"/spec/template/spec/containers/0/image","value":"nginx:1.19"}]'

[root@master01 ~]# kubectl get sts -owide

NAME READY AGE CONTAINERS IMAGES

web 3/3 9m55s nginx nginx:1.19

[root@master01 ~]# kubectl describe pod web-0|grep Image:

Image: nginx:latest

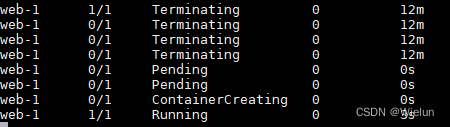

[root@master01 ~]# kubectl delete pod web-1

[root@master01 ~]# kubectl get pod -w

[root@master01 ~]# for i in web-0 web-1 web-2;do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:latest

web-1: Image: nginx:1.19

web-2: Image: nginx:latest

(4) RollingUpdate update

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  updateStrategy:
    type: RollingUpdate
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl describe sts web|grep Update

Update Strategy: RollingUpdate

[root@master01 ~]# kubectl set image statefulset/web nginx=nginx:1.19

[root@master01 ~]# for i in web-0 web-1 web-2;do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:1.19

web-1: Image: nginx:1.19

web-2: Image: nginx:1.19

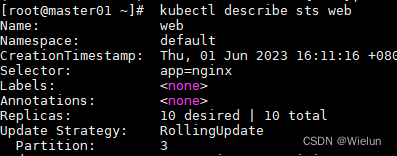

3. Grayscale release

(1) partition

With partition set to 3, Pods whose ordinal is lower than 3 are protected from the update; only Pods with ordinal 3 or higher are updated.

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  updateStrategy:
    type: OnDelete
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl patch statefulsets.apps web -p '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":3}}}}'

[root@master01 ~]# kubectl set image statefulset/web nginx=nginx:1.21

[root@master01 ~]# for i in web-0 web-1 web-2;do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:1.19

web-1: Image: nginx:1.19

web-2: Image: nginx:1.19

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl set image statefulset/web nginx=nginx:1.19

[root@master01 ~]# for i in web-{0..9};do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:latest

web-1: Image: nginx:latest

web-2: Image: nginx:latest

web-3: Image: nginx:1.19

web-4: Image: nginx:1.19

web-5: Image: nginx:1.19

web-6: Image: nginx:1.19

web-7: Image: nginx:1.19

web-8: Image: nginx:1.19

web-9: Image: nginx:1.19

[root@master01 ~]# kubectl patch statefulsets.apps web -p '{"spec":{"updateStrategy":{"type":"RollingUpdate","rollingUpdate":{"partition":2}}}}'

[root@master01 ~]# for i in web-{0..9};do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:latest

web-1: Image: nginx:latest

web-2: Image: nginx:1.19

web-3: Image: nginx:1.19

web-4: Image: nginx:1.19

web-5: Image: nginx:1.19

web-6: Image: nginx:1.19

web-7: Image: nginx:1.19

web-8: Image: nginx:1.19

web-9: Image: nginx:1.19
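The two listings above follow one rule: a Pod is moved to the new template only if its ordinal is greater than or equal to the partition. A minimal sketch of that rule, useful for predicting the result before lowering the partition; the function name images_after_rollout is hypothetical and the loop only models the end state, not the rollout itself:

```shell
# Predict which image each Pod runs after a partitioned rolling update.
# Arguments: StatefulSet name, replicas, partition, old image, new image.
images_after_rollout() {
    i=0
    while [ "$i" -lt "$2" ]; do
        if [ "$i" -ge "$3" ]; then
            img=$5      # ordinal >= partition: updated
        else
            img=$4      # ordinal < partition: protected
        fi
        printf '%s-%s: %s\n' "$1" "$i" "$img"
        i=$(( i + 1 ))
    done
}

images_after_rollout web 10 3 nginx:latest nginx:1.19
```

Calling it again with partition 2 moves web-2 to the new image, which matches the second listing.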

(2) Rollback

[root@master01 ~]# kubectl rollout history statefulset web

statefulset.apps/web

REVISION CHANGE-CAUSE

1 <none>

2 <none>

[root@master01 ~]# kubectl rollout history statefulset web --revision 2

statefulset.apps/web with revision #2

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:1.19

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

[root@master01 ~]# kubectl rollout undo statefulset web --to-revision 1

[root@master01 ~]# for i in web-{0..9};do echo -n $i:;kubectl describe pod $i|grep Image:;done

web-0: Image: nginx:latest

web-1: Image: nginx:latest

web-2: Image: nginx:latest

web-3: Image: nginx:latest

web-4: Image: nginx:latest

web-5: Image: nginx:latest

web-6: Image: nginx:latest

web-7: Image: nginx:latest

web-8: Image: nginx:latest

web-9: Image: nginx:latest

(3) Configure through the YAML file

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 10%
      partition: 3
  serviceName: "nginx"
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl describe sts web

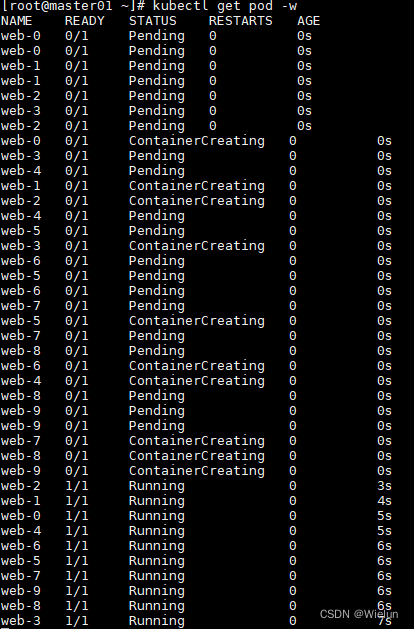
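A percentage in maxUnavailable is converted to a Pod count. According to the StatefulSet API reference the conversion rounds up, and the field is only honored when the MaxUnavailableStatefulSet feature gate is enabled; both points are assumptions here and worth checking against your cluster version. A small sketch of the arithmetic, with a hypothetical function name:

```shell
# Convert a maxUnavailable percentage into a Pod count, rounding up.
# Arguments: percentage (without the % sign), replicas.
max_unavailable() {
    echo $(( ($1 * $2 + 99) / 100 ))
}

# 10% of 10 replicas allows 1 Pod to be unavailable during the rollout.
max_unavailable 10 10
```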

(4) Configure through the YAML file (parallel Pod management)

[root@master01 ~]# cat ssweb.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  podManagementPolicy: Parallel
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 10%
      partition: 3
  serviceName: "nginx"
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl get pod -w

Cascading deletion (method 1): deleting the StatefulSet also deletes its Pods; the headless Service is deleted separately

[root@master01 ~]# kubectl delete sts web

statefulset.apps "web" deleted

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d4h

nginx ClusterIP None <none> 80/TCP 3m50s

[root@master01 ~]# kubectl delete svc nginx

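Non-cascading deletion (method 2): --cascade=orphan deletes only the StatefulSet and leaves its Pods, which are then deleted by label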

[root@master01 ~]# kubectl delete sts web --cascade=orphan

[root@master01 ~]# kubectl delete pod -l app=nginx

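Force delete

Not recommended: the Pod is removed from the API immediately, without waiting for confirmation that it has actually stopped.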

[root@master01 ~]# kubectl apply -f ssweb.yaml

[root@master01 ~]# kubectl set image statefulset/web nginx=nginx:haha

[root@master01 ~]# kubectl delete pods web-9 --grace-period=0 --force

Remove the finalizers

Use this when a Pod stays stuck in the Terminating or Unknown state.

[root@master01 ~]# kubectl patch pod web-9 -p '{"metadata":{"finalizers":null}}'

2. Service operation

1. First experience

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# cat nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
  selector:
    app: frontend

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl apply -f nginx-svc.yaml

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

frontend-64dccd9ff7-bdmk8 1/1 Running 0 6m48s 10.0.0.230 master03 <none> <none>

frontend-64dccd9ff7-f9xqq 1/1 Running 0 6m48s 10.0.2.157 node01 <none> <none>

frontend-64dccd9ff7-shrn9 1/1 Running 0 6m48s 10.0.4.32 master01 <none> <none>

[root@master01 ~]# kubectl get svc -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d5h <none>

nginx-svc ClusterIP 10.103.140.145 <none> 8080/TCP 37s app=frontend

[root@master01 ~]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=frontend

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.103.140.145

IPs: 10.103.140.145

Port: <unset> 8080/TCP

TargetPort: 80/TCP

Endpoints: 10.0.0.230:80,10.0.2.157:80,10.0.4.32:80

Session Affinity: None

Events: <none>

2. Scale-out test

[root@master01 ~]# kubectl scale deployment frontend --replicas 6

[root@master01 ~]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=frontend

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.103.140.145

IPs: 10.103.140.145

Port: <unset> 8080/TCP

TargetPort: 80/TCP

Endpoints: 10.0.0.230:80,10.0.1.163:80,10.0.2.157:80 + 3 more...

Session Affinity: None

Events: <none>

[root@master01 ~]# kubectl get ep

NAME ENDPOINTS AGE

kubernetes 10.10.10.21:6443,10.10.10.22:6443,10.10.10.23:6443 2d5h

nginx-svc 10.0.0.230:80,10.0.1.163:80,10.0.2.157:80 + 3 more... 5m59s

[root@master01 ~]# kubectl describe ep nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: endpoints.kubernetes.io/last-change-trigger-time: 2023-06-01T09:30:53Z

Subsets:

Addresses: 10.0.0.230,10.0.1.163,10.0.2.157,10.0.3.9,10.0.4.153,10.0.4.32

NotReadyAddresses: <none>

Ports:

Name Port Protocol

---- ---- --------

<unset> 80 TCP

Events: <none>

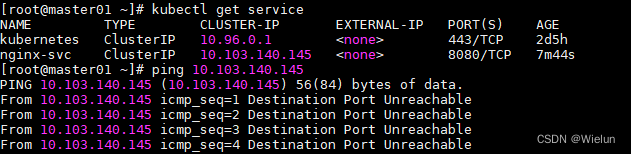

3. Test cluster IP

[root@master01 ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d5h

nginx-svc ClusterIP 10.103.140.145 <none> 8080/TCP 7m44s

[root@master01 ~]# ping 10.103.140.145

[root@master01 ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

frontend-64dccd9ff7-69675 1/1 Running 0 8m45s 10.0.3.9 node02 <none> <none>

frontend-64dccd9ff7-bdmk8 1/1 Running 0 17m 10.0.0.230 master03 <none> <none>

frontend-64dccd9ff7-f9xqq 1/1 Running 0 17m 10.0.2.157 node01 <none> <none>

frontend-64dccd9ff7-h5bnk 1/1 Running 0 8m45s 10.0.1.163 master02 <none> <none>

frontend-64dccd9ff7-jvx6z 1/1 Running 0 8m45s 10.0.4.153 master01 <none> <none>

frontend-64dccd9ff7-shrn9 1/1 Running 0 17m 10.0.4.32 master01 <none> <none>

[root@master01 ~]# ipvsadm -Ln|grep -A 6 10.103.140.145

TCP 10.103.140.145:8080 rr

-> 10.0.0.230:80 Masq 1 0 0

-> 10.0.1.163:80 Masq 1 0 0

-> 10.0.2.157:80 Masq 1 0 0

-> 10.0.3.9:80 Masq 1 0 0

-> 10.0.4.32:80 Masq 1 0 0

-> 10.0.4.153:80 Masq 1 0 0
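The rr in the ipvsadm output is the round-robin scheduler: with all six real servers at weight 1, new connections are handed to the backends in turn. A simplified model of that selection, ignoring weights and connection tracking; the function name rr_backend is hypothetical and the sketch is not how the kernel implements it:

```shell
# Pick the backend for the n-th connection (counting from 0) by round robin.
# Arguments: connection number, then the list of backends.
rr_backend() {
    n=$1
    shift
    skip=$(( n % $# ))          # position in the backend list
    while [ "$skip" -gt 0 ]; do
        shift
        skip=$(( skip - 1 ))
    done
    printf '%s\n' "$1"
}

# The seventh connection (n=6) wraps around to the first backend again.
rr_backend 6 10.0.0.230:80 10.0.1.163:80 10.0.2.157:80 10.0.3.9:80 10.0.4.32:80 10.0.4.153:80
```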

4. Test ns-nginx

[root@master01 ~]# kubectl create ns test

[root@master01 ~]# cat ns-test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ns-nginx
  namespace: test
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nstest
  template:
    metadata:
      labels:
        app: nstest
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: ns-svc
  namespace: test
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
  selector:
    app: nstest

[root@master01 ~]# kubectl apply -f ns-test.yaml

[root@master01 ~]# kubectl get pod -n test

NAME READY STATUS RESTARTS AGE

ns-nginx-867c4f9bcb-g7db5 1/1 Running 0 17s

ns-nginx-867c4f9bcb-vc86d 1/1 Running 0 17s

ns-nginx-867c4f9bcb-x2vf5 1/1 Running 0 17s

[root@master01 ~]# kubectl get svc -n test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ns-svc ClusterIP 10.104.254.251 <none> 8080/TCP 24s

[root@master01 ~]# kubectl run box -it --rm --image busybox:1.28 /bin/sh

/ # cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.96.0.10

options ndots:5

/ # nslookup ns-svc.test

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: ns-svc.test

Address 1: 10.104.254.251 ns-svc.test.svc.cluster.local
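The short name ns-svc.test resolves because of the search list and ndots:5 in /etc/resolv.conf: a name with fewer than five dots is tried with each search domain appended before it is tried as written. A rough sketch of the candidate order, assuming the usual resolver behaviour (the function name search_candidates is hypothetical, and names ending in a dot are not handled):

```shell
# List the names a resolver would try, in order.
# Arguments: name, ndots value, then the search domains.
search_candidates() {
    name=$1
    ndots=$2
    shift 2
    # Count the dots in the name; $(( )) strips any padding from wc.
    dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
    if [ "$dots" -ge "$ndots" ]; then echo "$name"; fi
    for d in "$@"; do echo "$name.$d"; done
    if [ "$dots" -lt "$ndots" ]; then echo "$name"; fi
}

search_candidates ns-svc.test 5 default.svc.cluster.local svc.cluster.local cluster.local
```

The second candidate, ns-svc.test.svc.cluster.local, is the one that exists, which is the name shown in the nslookup answer.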

/ # wget ns-svc.test:8080

Connecting to ns-svc.test:8080 (10.104.254.251:8080)

index.html 100% |****************************************************************************************************************| 615 0:00:00 ETA

/ # cat index.html

<!DOCTYPE html>

...

</html>

5. Set hostname

[root@master01 ~]# cat domainname.yaml

apiVersion: v1
kind: Service
metadata:
  name: default-subdomain
spec:
  selector:
    name: busybox
  clusterIP: None
  ports:
  - name: foo
    port: 1234
    targetPort: 1234
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  hostname: busybox-1
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox2
  labels:
    name: busybox
spec:
  hostname: busybox-2
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"

[root@master01 ~]# kubectl apply -f domainname.yaml

[root@master01 ~]# kubectl get pod -owide|grep busy

busybox1 1/1 Running 0 4s 10.0.0.188 master03 <none> <none>

busybox2 1/1 Running 0 4s 10.0.4.197 master01 <none> <none>

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default-subdomain ClusterIP None <none> 1234/TCP 82s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d6h

nginx-svc ClusterIP 10.103.140.145 <none> 8080/TCP 95m

[root@master01 ~]# kubectl describe svc default-subdomain

Name: default-subdomain

Namespace: default

Labels: <none>

Annotations: <none>

Selector: name=busybox

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: None

IPs: None

Port: foo 1234/TCP

TargetPort: 1234/TCP

Endpoints: 10.0.4.159:1234,10.0.4.46:1234

Session Affinity: None

Events: <none>

Test access:

[root@master01 ~]# kubectl exec -it busybox1 -- sh

/ # nslookup default-subdomain

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: default-subdomain

Address 1: 10.0.4.197 busybox-2.default-subdomain.default.svc.cluster.local

Address 2: 10.0.0.188 busybox-1.default-subdomain.default.svc.cluster.local

/ # nslookup busybox-1

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: busybox-1

Address 1: 10.0.0.188 busybox-1.default-subdomain.default.svc.cluster.local

/ # nslookup busybox-2

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

nslookup: can't resolve 'busybox-2'

/ # nslookup busybox-2.default-subdomain

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: busybox-2.default-subdomain

Address 1: 10.0.4.197 busybox-2.default-subdomain.default.svc.cluster.local

6. setHostnameAsFQDN

FQDN: on Linux the hostname is limited to 64 characters; if the Pod's FQDN is longer, the Pod stays in Pending.
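The length can be checked before enabling setHostnameAsFQDN. A minimal sketch, assuming the FQDN has the form hostname.subdomain.namespace.svc.cluster.local; the function name fqdn_fits is hypothetical and cluster.local is an assumed cluster domain:

```shell
# Succeed if the Pod FQDN fits into the 64-character hostname limit.
# Arguments: hostname, subdomain, namespace, optional cluster domain.
fqdn_fits() {
    fqdn="$1.$2.$3.svc.${4:-cluster.local}"
    [ "${#fqdn}" -le 64 ]
}

if fqdn_fits busybox-1 default-subdomain default; then
    echo "fits"
else
    echo "too long"
fi
```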

[root@master01 ~]# cat domainname.yaml

apiVersion: v1
kind: Service
metadata:
  name: default-subdomain
spec:
  selector:
    name: busybox
  clusterIP: None
  ports:
  - name: foo
    port: 1234
    targetPort: 1234
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  setHostnameAsFQDN: true
  hostname: busybox-1
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox2
  labels:
    name: busybox
spec:
  hostname: busybox-2
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"

[root@master01 ~]# kubectl apply -f domainname.yaml

service/default-subdomain created

[root@master01 ~]# kubectl exec -it busybox1 -- sh

/ # hostname

busybox-1.default-subdomain.default.svc.cluster.local

[root@master01 ~]# kubectl exec -it busybox2 -- sh

/ # hostname

busybox-2

/ # hostname -f

busybox-2.default-subdomain.default.svc.cluster.local

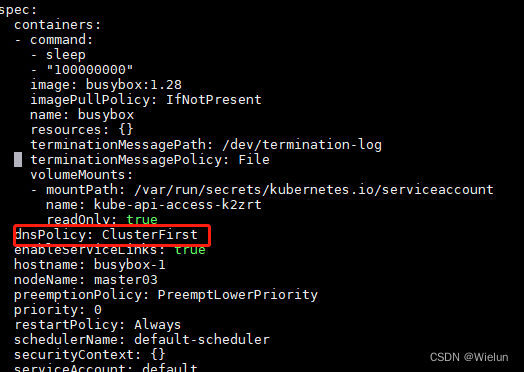

7. DNS policy

(1) ClusterFirst (the default policy)

[root@master01 ~]# kubectl edit pod busybox1

(2) ClusterFirstWithHostNet

[root@master01 ~]# cat domainname.yaml

apiVersion: v1
kind: Service
metadata:
  name: default-subdomain
spec:
  selector:
    name: busybox
  clusterIP: None
  ports:
  - name: foo
    port: 1234
    targetPort: 1234
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  hostname: busybox-1
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox2
  labels:
    name: busybox
spec:
  hostname: busybox-2
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"

[root@master01 ~]# kubectl apply -f domainname.yaml

[root@master01 ~]# kubectl get pod|grep busy

busybox1 1/1 Running 0 26s

busybox2 1/1 Running 0 26s

[root@master01 ~]# kubectl exec -it busybox1 -- sh # the Pod was scheduled onto node master03

/ # hostname

master03

/ # exit

[root@master01 ~]# kubectl exec -it busybox2 -- sh

/ # hostname

busybox-2

/ # exit

(3) None

With dnsPolicy None you supply the DNS settings yourself in dnsConfig: nameservers lists the DNS servers and searches lists the search domains.

[root@master01 ~]# cat domainname.yaml

apiVersion: v1
kind: Service
metadata:
  name: default-subdomain
spec:
  selector:
    name: busybox
  clusterIP: None
  ports:
  - name: foo
    port: 1234
    targetPort: 1234
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  dnsPolicy: None
  dnsConfig:
    nameservers:
    - 114.114.114.114
    searches:
    - ns1.my.dns.search
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox2
  labels:
    name: busybox
spec:
  hostname: busybox-2
  subdomain: default-subdomain
  containers:
  - name: busybox
    image: busybox:1.28
    command:
    - sleep
    - "100000000"

[root@master01 ~]# kubectl delete -f domainname.yaml

[root@master01 ~]# kubectl apply -f domainname.yaml

Test:

[root@master01 ~]# kubectl exec -it busybox1 -- sh

/ # ping www.baidu.com

PING www.baidu.com (14.119.104.254): 56 data bytes

64 bytes from 14.119.104.254: seq=0 ttl=50 time=42.844 ms

64 bytes from 14.119.104.254: seq=1 ttl=50 time=42.574 ms

^C

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 42.574/42.709/42.844 ms

/ # nslookup www.baidu.com

Server: 114.114.114.114

Address 1: 114.114.114.114 public1.114dns.com

Name: www.baidu.com

Address 1: 14.119.104.189

Address 2: 14.119.104.254

/ # cat /etc/resolv.conf

search ns1.my.dns.search

nameserver 114.114.114.114

/ # hostname -f

busybox1

8. sessionAffinity

sessionAffinity accepts ClientIP or None. With ClientIP, requests from the same client IP are sent to the same Pod.

[root@master01 ~]# cat nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  sessionAffinity: ClientIP
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
  selector:
    app: frontend

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl apply -f nginx-svc.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d23h

nginx-svc ClusterIP 10.103.140.145 <none> 8080/TCP 18h

Test:

Every request from the same client lands on the same Pod, which the Pod's access log confirms:

[root@master01 ~]# curl 10.103.140.145:8080

[root@master01 ~]# kubectl logs frontend-64dccd9ff7-bn4wq

9. Named target port (http-web-svc)

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: http-web-svc

[root@master01 ~]# cat nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  sessionAffinity: ClientIP
  ports:
  - protocol: TCP
    port: 8080
    targetPort: http-web-svc
  selector:
    app: frontend

[root@master01 ~]# kubectl delete -f nginx.yaml

[root@master01 ~]# kubectl delete -f nginx-svc.yaml

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl apply -f nginx-svc.yaml

Test:

[root@master01 ~]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d23h

nginx-svc ClusterIP 10.99.182.168 <none> 8080/TCP 3s

[root@master01 ~]# kubectl describe svc nginx-svc

Name: nginx-svc

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=frontend

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.99.182.168

IPs: 10.99.182.168

Port: <unset> 8080/TCP

TargetPort: http-web-svc/TCP

Endpoints: 10.0.0.87:80,10.0.1.247:80,10.0.2.1:80

Session Affinity: ClientIP

Events: <none>
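The describe output shows TargetPort http-web-svc/TCP while the Endpoints list port 80: the name is looked up among the container's named ports. A small sketch of that lookup, with a hypothetical function name and the container ports passed as name=port pairs (an illustrative format, not a Kubernetes one):

```shell
# Resolve a Service targetPort to a port number.
# Arguments: targetPort (number or name), then name=port pairs of the container.
resolve_target_port() {
    target=$1
    shift
    case $target in
        ''|*[!0-9]*) ;;                       # not purely numeric: treat as a port name
        *) echo "$target"; return 0 ;;        # numeric targetPort is used as is
    esac
    for pair in "$@"; do
        if [ "${pair%%=*}" = "$target" ]; then
            echo "${pair#*=}"
            return 0
        fi
    done
    return 1                                  # no container port with that name
}

resolve_target_port http-web-svc http-web-svc=80
```

The benefit of the named form is that the container port number can change in the Deployment without touching the Service.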

10. Access an external service without a selector

(1) Create an httpd

[root@node01 ~]# yum install -y httpd

[root@node01 ~]# systemctl start httpd

[root@node01 ~]# echo "<h1>node01</h1>" > /var/www/html/index.html

(2) Create Endpoints

[root@master01 ~]# cat ex-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: v1
kind: Endpoints
metadata:
  name: my-service
subsets:
- addresses:
  - ip: 10.10.10.24
  ports:
  - port: 80

[root@master01 ~]# kubectl apply -f ex-svc.yaml

(3) Test

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 162m

my-service ClusterIP 10.109.96.132 <none> 80/TCP 41s

[root@master01 ~]# kubectl describe svc my-service

Name: my-service

Namespace: default

Labels: <none>

Annotations: <none>

Selector: <none>

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.109.96.132

IPs: 10.109.96.132

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.10.10.24:80

Session Affinity: None

Events: <none>

[root@master01 ~]# curl 10.109.96.132

<h1>node01</h1>

11. NodePort

On a cloud provider you can use a LoadBalancer Service instead.

The NodePort range (30000-32767 by default) is set on the apiserver with --service-node-port-range.

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

[root@master01 ~]# cat nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  type: NodePort
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 80
  selector:
    app: frontend

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl apply -f nginx-svc.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h3m

my-service ClusterIP 10.109.96.132 <none> 80/TCP 21m

nginx-svc NodePort 10.110.184.239 <none> 8080:30649/TCP 10m

Test:

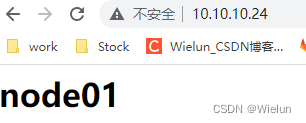

The Service can be reached on port 30649 of every node.
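The port 30649 was picked automatically from the NodePort range. When a fixed nodePort is set in the Service instead, it has to fall inside that range. A quick check, assuming the default range of 30000-32767 (the function name in_nodeport_range is hypothetical; pass other bounds if the apiserver uses a custom range):

```shell
# Succeed if a port lies inside the NodePort range.
# Arguments: port, optional lower bound, optional upper bound.
in_nodeport_range() {
    [ "$1" -ge "${2:-30000}" ] && [ "$1" -le "${3:-32767}" ]
}

if in_nodeport_range 30649; then
    echo "30649 is a valid NodePort"
fi
```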

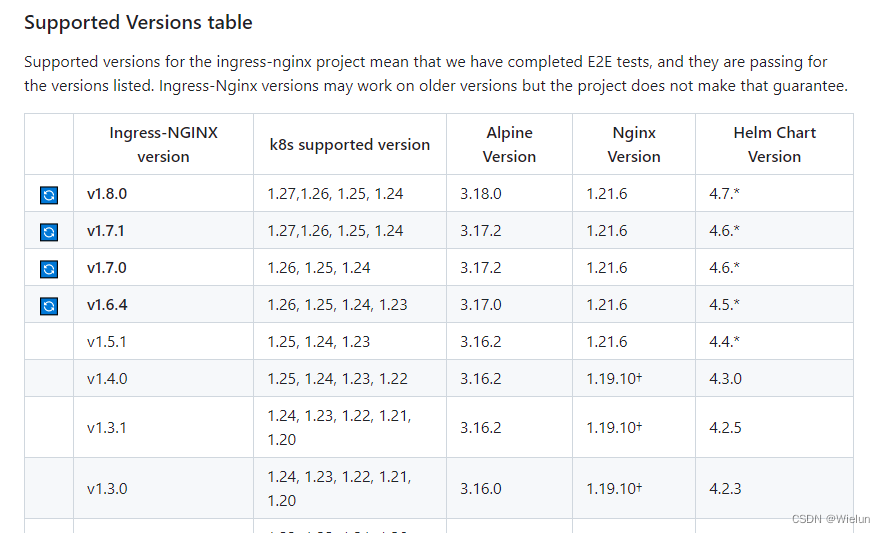

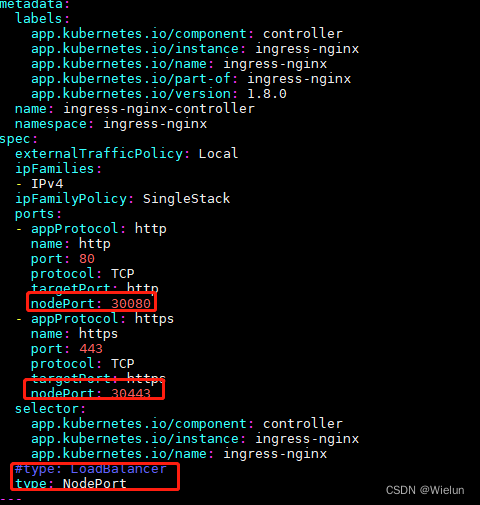

3. Ingress operation

1. Ingress installation

https://github.com/kubernetes/ingress-nginx

https://github.com/kubernetes/ingress-nginx/blob/main/deploy/static/provider/cloud/deploy.yaml

To update an existing installation, use kubectl replace or kubectl edit rather than kubectl apply.

[root@master01 ~]# cat deploy.yaml

apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-nginx-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - discovery.k8s.io
  resources:
  - endpointslices
  verbs:
  - list
  - watch
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.8.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  externalTrafficPolicy: Local
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

nodePort: 30080

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

nodePort: 30443

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

#type: LoadBalancer

type: NodePort

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

spec:

# hostNetwork: true

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-nginx-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: bitnami/nginx-ingress-controller:1.8.0

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.8.0

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

[root@master01 ~]# kubectl apply -f deploy.yaml

2. Test case

(1) nginx environment

# Run on every node, replacing "master01" with that node's hostname

[root@master01 ~]# mkdir -p /www/nginx && echo "master01" >/www/nginx/index.html

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-cluster
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: www
        hostPath:
          path: /www/nginx
---
apiVersion: v1
kind: Service
metadata:
  name: nginxsvc
spec:
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    targetPort: 80

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5h17m

my-service ClusterIP 10.109.96.132 <none> 80/TCP 155m

nginxsvc ClusterIP 10.102.155.12 <none> 80/TCP 2m6s

[root@master01 ~]# curl 10.102.155.12

master01

(2) Create the Ingress

[root@master01 ~]# cat ingress-single.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
spec:
  ingressClassName: nginx
  defaultBackend:
    service:
      name: nginxsvc
      port:
        number: 80

[root@master01 ~]# kubectl apply -f ingress-single.yaml

[root@master01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

test-ingress nginx * 10.104.238.113 80 107s

[root@master01 ~]# kubectl describe ingress test-ingress

Name: test-ingress

Labels: <none>

Namespace: default

Address: 10.104.238.113

Ingress Class: nginx

Default backend: nginxsvc:80 (10.0.1.120:80,10.0.2.66:80,10.0.3.217:80)

Rules:

Host Path Backends

---- ---- --------

* * nginxsvc:80 (10.0.1.120:80,10.0.2.66:80,10.0.3.217:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 110s (x2 over 2m21s) nginx-ingress-controller Scheduled for sync

[root@master01 ~]# kubectl get pod -owide -n ingress-nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-rdnrn 0/1 Completed 0 29m 10.0.3.128 master02 <none> <none>

ingress-nginx-admission-patch-vtgr4 0/1 Completed 0 29m 10.0.2.60 node02 <none> <none>

ingress-nginx-controller-59b655fb7b-b4j9q 1/1 Running 0 29m 10.0.0.50 node01 <none> <none>

[root@master01 ~]# curl 10.0.0.50

master02

[root@master01 ~]# curl 10.0.0.50

node02

[root@master01 ~]# curl 10.0.0.50

master01

(3) Create httpd

# Run on every node, replacing "master01" with that node's hostname

[root@master01 ~]# mkdir -p /www/httpd && echo "master01 httpd" >/www/httpd/index.html

[root@master01 ~]# cat httpd.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpd-cluster
spec:
  replicas: 3
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/local/apache2/htdocs
      volumes:
      - name: www
        hostPath:
          path: /www/httpd
---
apiVersion: v1
kind: Service
metadata:
  name: httpdsvc
spec:
  selector:
    app: httpd
  ports:
  - name: httpd
    port: 80
    targetPort: 80

[root@master01 ~]# kubectl apply -f httpd.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

httpdsvc ClusterIP 10.105.174.147 <none> 80/TCP 32s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5h38m

my-service ClusterIP 10.109.96.132 <none> 80/TCP 177m

nginxsvc ClusterIP 10.102.155.12 <none> 80/TCP 23m

[root@master01 ~]# cat ingress-httpd.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: "nginx.wielun.com"
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: nginxsvc
            port:
              number: 80
  - host: "httpd.wielun.com"
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: httpdsvc
            port:
              number: 80

[root@master01 ~]# kubectl apply -f ingress-httpd.yaml

(4) Test results

[root@master01 ~]# vim /etc/hosts

10.104.238.113 nginx.wielun.com httpd.wielun.com

[root@master01 ~]# curl -H "Host: httpd.wielun.com" 10.104.238.113

master01 httpd

[root@master01 ~]# curl -H "Host: nginx.wielun.com" 10.104.238.113

master01

[root@master01 ~]# kubectl describe ingressclasses.networking.k8s.io nginx

Name: nginx

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

app.kubernetes.io/version=1.8.0

Annotations: <none>

Controller: k8s.io/ingress-nginx

Events: <none>

[root@master01 ~]# kubectl get ingressclasses.networking.k8s.io

NAME CONTROLLER PARAMETERS AGE

nginx k8s.io/ingress-nginx <none> 73m

[root@master01 ~]# kubectl describe ingress http-ingress

Name: http-ingress

Labels: <none>

Namespace: default

Address: 10.104.238.113

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

nginx.wielun.com

/ nginxsvc:80 (10.0.1.120:80,10.0.2.66:80,10.0.3.217:80)

httpd.wielun.com

/ httpdsvc:80 (10.0.0.132:80,10.0.1.114:80,10.0.3.164:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 28m (x2 over 29m) nginx-ingress-controller Scheduled for sync

3. Matching by path

(1) Create the paths

[root@master01 ~]# mkdir -p /www/httpd/test && cp /www/httpd/index.html /www/httpd/test

[root@master01 ~]# mkdir -p /www/nginx/prod && cp /www/nginx/index.html /www/nginx/prod

(2) Create service and ingress

[root@master01 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-cluster
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: www
        hostPath:
          path: /www/nginx
---
apiVersion: v1
kind: Service
metadata:
  name: nginxsvc
spec:
  selector:
    app: nginx
  ports:
  - name: http
    port: 8080
    targetPort: 80

[root@master01 ~]# cat httpd.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpd-cluster
spec:
  replicas: 3
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: httpd:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/local/apache2/htdocs
      volumes:
      - name: www
        hostPath:
          path: /www/httpd
---
apiVersion: v1
kind: Service
metadata:
  name: httpdsvc
spec:
  selector:
    app: httpd
  ports:
  - name: httpd
    port: 8081
    targetPort: 80

[root@master01 ~]# cat ingress-httpd.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: "nginx.wielun.com"
    http:
      paths:
      - pathType: Prefix
        path: /prod
        backend:
          service:
            name: nginxsvc
            port:
              number: 8080
      - pathType: Prefix
        path: /test
        backend:
          service:
            name: httpdsvc
            port:
              number: 8081

[root@master01 ~]# kubectl apply -f httpd.yaml

[root@master01 ~]# kubectl apply -f nginx.yaml

[root@master01 ~]# kubectl apply -f ingress-httpd.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

httpdsvc ClusterIP 10.110.116.208 <none> 8081/TCP 31s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h

my-service ClusterIP 10.109.96.132 <none> 80/TCP 22h

nginxsvc ClusterIP 10.110.201.244 <none> 8080/TCP 28s

(3) Test

[root@master01 ~]# curl nginx.wielun.com/prod/

<html>

<head><title>403 Forbidden</title></head>

<body>

<center><h1>403 Forbidden</h1></center>

<hr><center>nginx/1.25.0</center>

</body>

</html>

[root@master01 ~]# curl nginx.wielun.com/test

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">

<html><head>

<title>301 Moved Permanently</title>

</head><body>

<h1>Moved Permanently</h1>

<p>The document has moved <a href="http://nginx.wielun.com/test/">here</a>.</p>

</body></html>

[root@master01 ~]# curl nginx.wielun.com/test/

master01 httpd

[root@master01 ~]# kubectl describe ingress http-ingress

Name: http-ingress

Labels: <none>

Namespace: default

Address: 10.104.238.113

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

nginx.wielun.com

/prod nginxsvc:8080 (10.0.0.108:80,10.0.1.159:80,10.0.3.47:80)

/test httpdsvc:8081 (10.0.1.204:80,10.0.2.47:80,10.0.3.242:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 19h (x2 over 19h) nginx-ingress-controller Scheduled for sync

Normal Sync 5m41s (x2 over 10m) nginx-ingress-controller Scheduled for sync
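The /prod and /test rules above pass the full request path to the backend, which is why matching directories had to be created under /www/nginx and /www/httpd. A possible alternative, sketched here on the assumption that stripping the prefix is acceptable, combines the ingress-nginx rewrite-target annotation with a regex capture group. The Ingress name strip-prefix-ingress is hypothetical; the host, Services, and ports are the ones used above. It is meant to replace http-ingress, not to be applied alongside it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: strip-prefix-ingress   # hypothetical name, not from the original
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 refers to the second capture group of each path below
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
  - host: "nginx.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /prod(/|$)(.*)
        backend:
          service:
            name: nginxsvc
            port:
              number: 8080
      - pathType: ImplementationSpecific
        path: /test(/|$)(.*)
        backend:
          service:
            name: httpdsvc
            port:
              number: 8081
```

With such a rule, a request for /prod/index.html would be expected to reach nginxsvc as /index.html, so the extra prod and test directories would not be needed.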

4. Redirecting with rewrite

[root@master01 ~]# cat ingress-rewrite.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: https://www.baidu.com
spec:
  ingressClassName: nginx
  rules:
  - host: "nginx.wielun.com"
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: nginxsvc
            port:
              number: 80

[root@master01 ~]# kubectl delete -f ingress-httpd.yaml

[root@master01 ~]# kubectl apply -f ingress-rewrite.yaml
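When the goal is only to send clients to another site, ingress-nginx also offers a dedicated permanent-redirect annotation. The fragment below is a minimal sketch of that approach, not the method used in this walkthrough. The Ingress name redirect-ingress is hypothetical, and the backend port must match whatever port nginxsvc currently exposes.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: redirect-ingress   # hypothetical name, not from the original
  annotations:
    # Answers every matching request with a redirect to this URL
    nginx.ingress.kubernetes.io/permanent-redirect: https://www.baidu.com
spec:
  ingressClassName: nginx
  rules:
  - host: "nginx.wielun.com"
    http:
      paths:
      - pathType: Prefix
        path: /
        backend:
          service:
            name: nginxsvc   # still required by the Ingress schema
            port:
              number: 80     # assumption: adjust to the Service's actual port
```

A quick check would be curl -I nginx.wielun.com, then looking for a Location header that points at the target URL.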

4. Grayscale release

1. Grayscale release methods

- canary: blue-green and grayscale releases, community version

- service: blue-green and grayscale releases, cloud version

Traffic-splitting rules, in order of precedence:

canary-by-header -> canary-by-cookie -> canary-weight

- Splitting by request header: suitable for grayscale releases and A/B testing

- Splitting by cookie: suitable for grayscale releases and A/B testing

- Splitting by weight: suitable for blue-green releases

2. Environment preparation

[root@master01 ~]# mkdir -p /www/{old,new}

[root@master01 ~]# echo "old version" >> /www/old/index.html && echo "new version" >> /www/new/index.html

3. Create an old environment

(1) Configure canary-nginx.yaml

sessionAffinity: should be enabled in production; it is left as None in this example

[root@master01 ~]# cat canary-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: old-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: old-nginx
  template:
    metadata:
      labels:
        app: old-nginx
    spec:
      containers:
      - name: old-nginx
        image: nginx:1.19
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: old
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: old
        hostPath:
          path: /www/old
---
apiVersion: v1
kind: Service
metadata:
  name: old-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: old-nginx
  sessionAffinity: None

[root@master01 ~]# kubectl apply -f canary-nginx.yaml

[root@master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 27h

my-service ClusterIP 10.109.96.132 <none> 80/TCP 24h

old-nginx ClusterIP 10.101.60.205 <none> 80/TCP 4s

[root@master01 ~]# kubectl get deployment -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

old-nginx 3/3 3 3 94s old-nginx nginx:1.19 app=old-nginx
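The sessionAffinity field of the old-nginx Service is set to None in this walkthrough. As a rough sketch of what enabling it could look like, the fragment below switches the same Service to ClientIP affinity. The timeout shown is the Kubernetes default and is an assumption, not a recommendation from the original.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: old-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: old-nginx
  # Requests from the same client IP are sent to the same Pod
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # default value (3 hours); tune as needed
```

This setting affects traffic sent to the Service's ClusterIP. ingress-nginx normally balances across Pod endpoints directly, so sticky sessions through the Ingress are usually configured with its own affinity annotations instead.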

(2) Configure canary-ingress.yaml

[root@master01 ~]# cat canary-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: old-release
spec:
  ingressClassName: nginx
  rules:
  - host: "www.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /
        backend:
          service:
            name: old-nginx
            port:
              number: 80

[root@master01 ~]# kubectl apply -f canary-ingress.yaml

[root@master01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

old-release nginx www.wielun.com 10.104.238.113 80 1m

[root@master01 ~]# vim /etc/hosts

10.104.238.113 www.wielun.com

[root@master01 ~]# curl www.wielun.com

old version

4. Request header method

(1) Configure canary-nginx-new.yaml

[root@master01 ~]# cat canary-nginx-new.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: new-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: new-nginx
  template:
    metadata:
      labels:
        app: new-nginx
    spec:
      containers:
      - name: new-nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: new
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: new
        hostPath:
          path: /www/new
---
apiVersion: v1
kind: Service
metadata:
  name: new-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: new-nginx
  sessionAffinity: None

[root@master01 ~]# kubectl apply -f canary-nginx-new.yaml

(2) Configure canary-ingress-new.yaml

[root@master01 ~]# cat canary-ingress-new.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: new-release
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "foo"
    nginx.ingress.kubernetes.io/canary-by-header-value: "bar"
spec:
  ingressClassName: nginx
  rules:
  - host: "www.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /
        backend:
          service:
            name: new-nginx
            port:
              number: 80

[root@master01 ~]# kubectl apply -f canary-ingress-new.yaml

[root@master01 ~]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

new-release nginx www.wielun.com 10.104.238.113 80 5m

old-release nginx www.wielun.com 10.104.238.113 80 11m

(3) Test

[root@master01 ~]# kubectl describe ingress old-release

Name: old-release

Labels: <none>

Namespace: default

Address: 10.104.238.113

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

www.wielun.com

/ old-nginx:80 (10.0.1.50:80,10.0.2.213:80,10.0.3.160:80)

Annotations: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 13m (x2 over 14m) nginx-ingress-controller Scheduled for sync

[root@master01 ~]# kubectl describe ingress new-release

Name: new-release

Labels: <none>

Namespace: default

Address: 10.104.238.113

Ingress Class: nginx

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

www.wielun.com

/ new-nginx:80 (10.0.0.143:80,10.0.1.245:80,10.0.3.8:80)

Annotations: nginx.ingress.kubernetes.io/canary: true

nginx.ingress.kubernetes.io/canary-by-header: foo

nginx.ingress.kubernetes.io/canary-by-header-value: bar

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 2m59s (x2 over 3m36s) nginx-ingress-controller Scheduled for sync

[root@master01 ~]# curl www.wielun.com

old version

[root@master01 ~]# curl -H "foo:bar" www.wielun.com

new version
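The cookie-based method follows the same pattern as the header-based one. The fragment below is a minimal sketch of it; the cookie name canary_user is a hypothetical choice, while the host and the new-nginx Service are taken from the example above.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: new-release
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    # Hypothetical cookie name; a value of "always" selects the canary,
    # "never" keeps the request on the stable backend
    nginx.ingress.kubernetes.io/canary-by-cookie: "canary_user"
spec:
  ingressClassName: nginx
  rules:
  - host: "www.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /
        backend:
          service:
            name: new-nginx
            port:
              number: 80
```

Under these assumptions, a request such as curl --cookie "canary_user=always" www.wielun.com would be expected to reach the new version, while requests without the cookie stay on the old one.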

5. Weight

[root@master01 ~]# cat canary-ingress-new.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: new-release
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "50"
spec:
  ingressClassName: nginx
  rules:
  - host: "www.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /
        backend:
          service:
            name: new-nginx
            port:
              number: 80

[root@master01 ~]# kubectl apply -f canary-ingress-new.yaml

[root@master01 ~]# for i in {0..10};do curl www.wielun.com;done

new version

new version

new version

old version

new version

old version

old version

old version

new version

old version

new version

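6. Switch all to the new version

Change the selector of the old-nginx Service to app: new-nginx so that all traffic for www.wielun.com reaches the new Pods.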

[root@master01 ~]# cat canary-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: old-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: old-nginx
  template:
    metadata:
      labels:
        app: old-nginx
    spec:
      containers:
      - name: old-nginx
        image: nginx:1.19
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
        volumeMounts:
        - name: old
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: old
        hostPath:
          path: /www/old
---
apiVersion: v1
kind: Service
metadata:
  name: old-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: new-nginx
  sessionAffinity: None

[root@master01 ~]# kubectl apply -f canary-nginx.yaml

[root@master01 ~]# for i in {0..10};do curl www.wielun.com;done

new version

new version

new version

new version

new version

new version

new version

new version

new version

new version

new version
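An alternative to re-pointing the old-nginx Service, offered here as an assumption and not as the author's method, is to change the backend of the old-release Ingress itself and then remove the canary Ingress. The fragment reuses the names from the examples above.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: old-release
spec:
  ingressClassName: nginx
  rules:
  - host: "www.wielun.com"
    http:
      paths:
      - pathType: ImplementationSpecific
        path: /
        backend:
          service:
            name: new-nginx   # previously old-nginx
            port:
              number: 80
```

After applying it, the canary Ingress could be removed with kubectl delete ingress new-release. This keeps each Service name aligned with the Pods it actually selects.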