1. Inter-thread communication

Two key issues between threads

- How do threads communicate? That is: what mechanism is used to exchange information between threads?

- How do threads synchronize? That is: what mechanism does a program use to control the relative order in which operations in different threads occur?

There are two concurrency models that solve these two problems:

- Message-passing concurrency model

- Shared-memory concurrency model

The differences between these two models are shown in the table below:

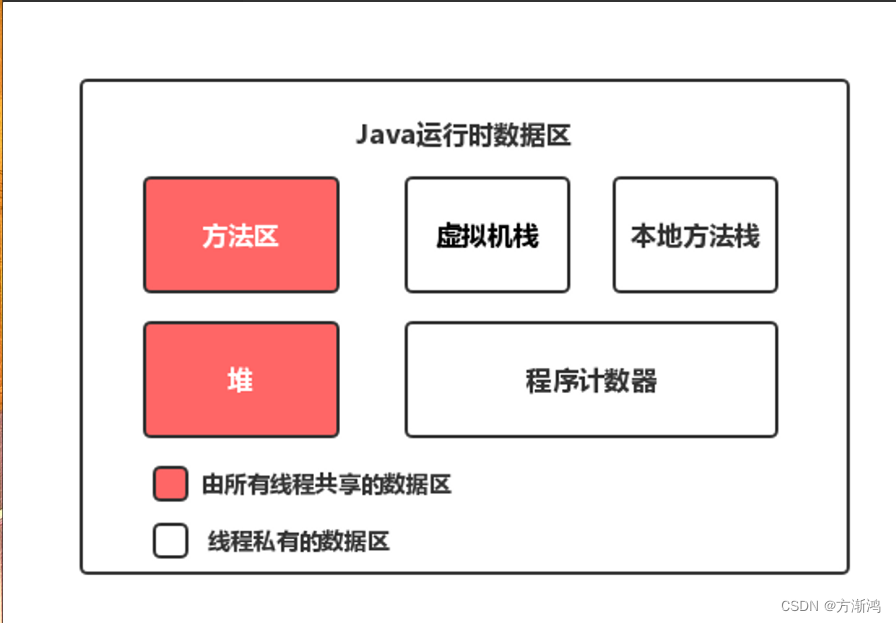

Abstract structure of the Java memory model

Partitioning of runtime memory

For each thread, the stack is private and the heap is shared.

That is to say, variables on the stack (local variables, method parameters, exception handler parameters) are not shared between threads, so they have no memory visibility issues (discussed below) and are not affected by the memory model. Variables in the heap are shared, and are called shared variables in this article.

So memory visibility concerns shared variables.

Since the heap is shared, why can visibility problems arise for data in the heap?

Because modern computers, for efficiency, tend to keep copies of shared variables in CPU caches: the CPU accesses its cache much faster than it accesses main memory.

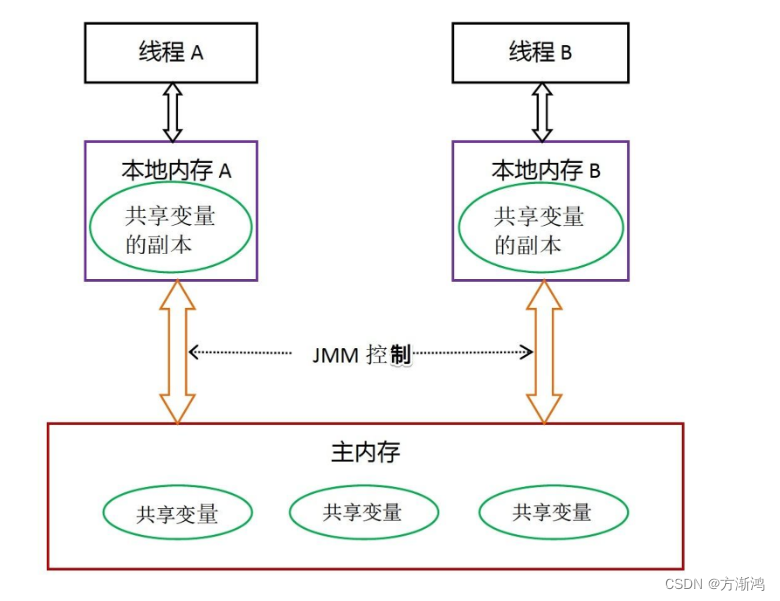

Shared variables between threads are stored in main memory, and each thread has a private local memory that stores a copy of the shared variables that the thread can read and write. Local memory is an abstract concept of the Java memory model and does not really exist. It covers caches, write buffers, registers, etc.

It can be seen from the figure:

- All shared variables are stored in main memory.

- Each thread keeps a copy of the shared variables used by that thread.

- If thread A and thread B want to communicate, they must go through the following two steps:

  - Thread A flushes the shared variables it has updated in local memory A to main memory.

  - Thread B reads from main memory the shared variables that thread A has updated.

Therefore, thread A cannot directly access the local memory of thread B, and inter-thread communication must go through main memory.

Note that, under the JMM, a thread performs all operations on shared variables in its own local memory; it cannot read or write main memory directly.

So thread B does not read the value of the shared variable straight from main memory. In this abstract model, thread B first looks up the variable in local memory B and finds that it has been updated; local memory B then reads the variable from main memory and copies the new value in, and finally thread B reads the new value from local memory B.

So how does a thread know that a shared variable has been updated by another thread? This is the contribution of the JMM, and one of the reasons it exists: the JMM provides memory visibility guarantees by controlling the interaction between main memory and each thread's local memory.

The volatile keyword in Java guarantees the visibility of a shared variable across threads and prohibits instruction reordering around accesses to it. The synchronized keyword guarantees not only visibility but also atomicity (mutual exclusion). At a lower level, the JMM implements memory visibility and prohibits reordering through memory barriers. To make this easier to reason about, the JMM defines the happens-before relation, which is simpler to understand and spares programmers from learning the complex reordering rules and how those rules are implemented.
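To make the visibility guarantee concrete, here is a minimal sketch of a volatile flag used to publish a value from one thread to another. The class and field names (`VolatileFlagDemo`, `ready`, `payload`) are made up for illustration and do not come from any library. The sketch relies on the happens-before rule that a write to a volatile field is visible to a later read of that field, along with everything written before it.

```java
// Hypothetical demo class; all names here are illustrative assumptions.
final class VolatileFlagDemo {
    // volatile: a write by one thread is visible to subsequent reads by other threads.
    private static volatile boolean ready;
    // Plain field: it is written before the volatile write, so it is published with it.
    private static int payload;

    static int runOnce() {
        ready = false;
        payload = 0;
        int[] seen = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {
                // Spin until the writer's volatile write becomes visible.
            }
            seen[0] = payload; // ordered after the volatile read that saw ready == true
        });
        reader.start();

        payload = 42;  // ordinary write
        ready = true;  // volatile write: also makes the payload write visible

        try {
            reader.join(); // join() makes the reader's write to seen[0] visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // prints 42
    }
}
```

If `ready` were not volatile, the JMM would not require the reader ever to observe the update, so the loop could in principle spin forever.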

2. Thread communication classes

These classes live in the java.util.concurrent package (AtomicBoolean is in its subpackage java.util.concurrent.atomic).

| Class | Purpose |
|---|---|
| Semaphore | Limits the number of threads that can proceed at the same time |
| Exchanger | Lets two threads exchange data |
| CountDownLatch | Threads wait until the counter reaches 0 before starting work |
| CyclicBarrier | Similar to CountDownLatch, but can be reused |
| Phaser | An enhanced CyclicBarrier |
| AtomicBoolean | Guarantees atomicity in a multi-threaded environment: only one thread changes the state at a time |
| CountDownLatch | A countdown counter for multi-threaded code; waiting stops when the count reaches 0 |

1、Semaphore

Semaphore literally means "signal". As the name suggests, this utility class lets multiple threads "signal" each other. The "signal" is an int value, which can also be thought of as a count of available "resources" (permits).

The most important methods are acquire() and release(). acquire() requests one permit and release() returns one. You can also request or return several permits at once with acquire(int permits) and release(int permits).

Each acquire reduces the number of available permits by one or more. If the count has dropped to 0 and another thread calls acquire, that thread blocks until some other thread releases a permit.
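The acquire/release behavior described above can be sketched as follows. This is only an illustration under assumed names (`SemaphoreDemo`, `maxConcurrent`), with a short sleep standing in for real work. It starts several workers and records the highest number that were ever inside the guarded section at once, which should never exceed the number of permits.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; names and the 20 ms sleep are illustrative assumptions.
final class SemaphoreDemo {
    /** Runs `workers` threads and returns the peak number holding a permit at once. */
    static int maxConcurrent(int permits, int workers) {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger active = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();

        Thread[] threads = new Thread[workers];
        for (int i = 0; i < workers; i++) {
            threads[i] = new Thread(() -> {
                try {
                    semaphore.acquire(); // blocks while no permit is available
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                try {
                    int now = active.incrementAndGet();
                    peak.accumulateAndGet(now, Math::max); // remember the highest value seen
                    Thread.sleep(20); // simulated work while holding the permit
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    active.decrementAndGet();
                    semaphore.release(); // always return the permit
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return peak.get();
    }

    public static void main(String[] args) {
        System.out.println("peak concurrency: " + maxConcurrent(2, 6));
    }
}
```

Releasing in a finally block is a common precaution so that a permit is not lost if the guarded work throws.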

2、Exchanger

**The Exchanger class is used to exchange data between two threads.** It supports generics, which means you can transfer any kind of data between the two threads.

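```java
import java.util.concurrent.Exchanger;

public class ExchangerDemo {
    public static void main(String[] args) throws InterruptedException {
        Exchanger<String> exchanger = new Exchanger<>();

        // Thread A calls exchange() first and blocks until a partner arrives.
        new Thread(() -> {
            try {
                System.out.println("This is Thread A, getting data from another thread: "
                        + exchanger.exchange("This is the data from Thread A"));
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }).start();

        System.out.println("At this time, thread A is blocked and waiting for data from thread B.");
        Thread.sleep(1000);

        // Thread B arrives one second later; the two threads swap values and continue.
        new Thread(() -> {
            try {
                System.out.println("This is Thread B, getting data from another thread: "
                        + exchanger.exchange("This is the data from Thread B"));
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }).start();
    }
}
```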

Output:

At this time, thread A is blocked and waiting for data from thread B.

This is Thread B, getting data from another thread: This is the data from Thread A

This is Thread A, getting data from another thread: This is the data from Thread B

As you can see, a thread that calls exchange() blocks, and continues only when another thread also calls exchange(). The source code shows that it uses park/unpark to switch the waiting state, but performs a CAS check before calling park/unpark, presumably to improve performance.

Exchanger is generally used to exchange in-memory data conveniently between two threads. Because it supports generics, any kind of data can be transferred, such as I/O streams or I/O buffers. Based on the comments in the JDK, its features can be summarized as follows:

- From the caller's point of view, its operations are synchronous;

- It exchanges data between threads that come in pairs;

- It can be regarded as a bidirectional synchronous queue;

- It can be applied to scenarios such as genetic algorithms and pipeline designs.

The Exchanger class also has an overload that takes a timeout. If no other thread calls exchange within the specified time, a TimeoutException is thrown.

public V exchange(V x, long timeout, TimeUnit unit)
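As a small sketch of the timed overload, the helper below (a hypothetical `exchangeOrFallback` method, not part of the JDK) waits for a partner for a bounded time and returns a fallback string if none arrives. The fallback strings are assumptions chosen for this illustration.

```java
import java.util.concurrent.Exchanger;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical demo class; the helper name and fallback values are illustrative assumptions.
final class ExchangerTimeoutDemo {
    /** Offers a value and returns the partner's value, or a fallback if no partner arrives in time. */
    static String exchangeOrFallback(Exchanger<String> exchanger, String offer, long timeoutMillis) {
        try {
            return exchanger.exchange(offer, timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            return "timed out"; // no other thread called exchange() within the timeout
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt flag for callers
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        // No second thread ever calls exchange(), so the call gives up after 100 ms.
        System.out.println(exchangeOrFallback(new Exchanger<>(), "hello", 100)); // prints "timed out"
    }
}
```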

So can Exchanger only exchange data between two threads? What happens when three threads call exchange() on the same instance? The answer is that only the first two threads exchange data; the third blocks until another thread arrives to pair with it.

Note that an Exchanger can be reused: two threads can use the same Exchanger to exchange data repeatedly.

3、CountDownLatch

In fact, the principle behind CountDownLatch is quite simple. Internally it uses Sync, an implementation class that extends AQS (AbstractQueuedSynchronizer), and it may be the simplest of the AQS subclasses in the JDK. Interested readers can look at the source code of this inner class.

Note that the count passed to the constructor is the number of countDown() calls that must happen before waiting threads are released (typically the number of threads or tasks being waited for). This value can be set only once; CountDownLatch provides no mechanism to reset it.

4、CyclicBarrier

Unlike CountDownLatch, CyclicBarrier is not split into await() and countDown(); it has only a single await() method.
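A minimal sketch of the single await() method, and of the barrier being reused across rounds, might look like this. The class and method names (`CyclicBarrierDemo`, `runRounds`) are assumptions for illustration. The barrier action counts how many times the barrier has tripped.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; all names here are illustrative assumptions.
final class CyclicBarrierDemo {
    /** Has `parties` threads meet at the barrier `rounds` times; returns how often it tripped. */
    static int runRounds(int parties, int rounds) {
        AtomicInteger trips = new AtomicInteger();
        // The barrier action runs once each time all parties have arrived.
        CyclicBarrier barrier = new CyclicBarrier(parties, trips::incrementAndGet);

        Thread[] threads = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            threads[i] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // blocks until every party has called await()
                    }
                } catch (InterruptedException | BrokenBarrierException e) {
                    Thread.currentThread().interrupt();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return trips.get();
    }

    public static void main(String[] args) {
        System.out.println(runRounds(3, 2)); // prints 2: the same barrier was used for two rounds
    }
}
```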

5、Phaser

The word Phaser literally means "phase shifter" (I am not sure what that is; the information below comes from Baidu Encyclopedia). The class was introduced in JDK 1.7.

Glossary:

- party: corresponds to a thread; the number of parties can be set through register() or the constructor argument;

- arrive: the state of a party. A party starts as unarrived and enters the arrived state when it calls arriveAndAwaitAdvance() or arriveAndDeregister(); getUnarrivedParties() returns the current number of unarrived parties;

- register: registers a party. In each phase, all registered parties must arrive before the phaser moves on to the next phase;

- deregister: removes one party;

- phase: a stage. When all registered parties have arrived, the Phaser's onAdvance() method is called to decide whether to enter the next phase.
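The glossary terms can be tied together with a short sketch. The names (`PhaserDemo`, `runPhases`) are hypothetical, and overriding onAdvance() to stop after a fixed number of phases is just one possible choice. Each thread is one party, and each call to arriveAndAwaitAdvance() marks that party as arrived and waits for the rest.

```java
import java.util.concurrent.Phaser;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; all names here are illustrative assumptions.
final class PhaserDemo {
    /** Runs `parties` threads through `phases` phases; returns how many times onAdvance() ran. */
    static int runPhases(int parties, int phases) {
        AtomicInteger advances = new AtomicInteger();
        Phaser phaser = new Phaser(parties) { // register the parties via the constructor
            @Override
            protected boolean onAdvance(int phase, int registeredParties) {
                advances.incrementAndGet();
                // Returning true terminates the phaser; here that happens after the last phase.
                return phase >= phases - 1 || registeredParties == 0;
            }
        };

        Thread[] threads = new Thread[parties];
        for (int i = 0; i < parties; i++) {
            threads[i] = new Thread(() -> {
                for (int p = 0; p < phases; p++) {
                    phaser.arriveAndAwaitAdvance(); // arrive, then wait for all other parties
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return advances.get();
    }

    public static void main(String[] args) {
        System.out.println(runPhases(3, 2)); // prints 2: one onAdvance() call per completed phase
    }
}
```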

6、AtomicBoolean

AtomicBoolean is an atomic variable in the java.util.concurrent.atomic package, which provides a set of atomic classes. Their basic property is that, when multiple threads call a method on the same instance at the same time, each call behaves as if it were exclusive: once a thread starts executing the method, it is not interrupted by other threads, and the other threads behave like spin locks, waiting until the operation completes before one of them gets its turn. This is only a logical picture. In practice the classes are implemented with hardware-level atomic instructions (such as compare-and-swap), so threads are not blocked (or are only stalled at the hardware level).

- create

```java
AtomicBoolean LOGIN = new AtomicBoolean(false);
```

- use

```java
LOGIN.set(true);
```
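Beyond a plain set(), the "only one thread changes the state" property is usually obtained with compareAndSet(). The sketch below uses assumed names (`AtomicBooleanDemo`, `countWinners`) and has several threads race to flip the same flag from false to true, counting how many succeed.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; all names here are illustrative assumptions.
final class AtomicBooleanDemo {
    /** Lets `threadCount` threads race to flip one flag; returns how many of them succeeded. */
    static int countWinners(int threadCount) {
        AtomicBoolean loggedIn = new AtomicBoolean(false);
        AtomicInteger winners = new AtomicInteger();

        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> {
                // Atomically: if the value is false, set it to true and report success.
                if (loggedIn.compareAndSet(false, true)) {
                    winners.incrementAndGet();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return winners.get();
    }

    public static void main(String[] args) {
        System.out.println(countWinners(8)); // prints 1: exactly one thread wins the race
    }
}
```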

7、CountDownLatch

CountDownLatch can make one thread wait for other threads to complete their execution before executing.

CountDownLatch maintains a counter and a wait queue. While the counter is greater than 0, threads that call await() are suspended in the queue; when the counter reaches 0, all threads in the queue are woken up. The counter is just a flag: it can stand for a task, a thread, or a countdown. CountDownLatch fits scenarios where one or more threads must wait for certain prerequisite services to finish before they run.

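- create

```java
CountDownLatch WAIT = new CountDownLatch(1);
```

- use

```java
CountDownLatch(int count);           // Constructor: creates a counter with the initial value count.
await();                             // Blocks the current thread and adds it to the wait queue.
await(long timeout, TimeUnit unit);  // Blocks the current thread for at most timeout; once the time has passed, the thread can continue.
countDown();                         // Decrements the counter by 1; when it reaches 0, the current thread wakes all threads in the wait queue.
```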
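Putting these methods together, a minimal sketch of one thread waiting for several workers could look like this. The names (`CountDownLatchDemo`, `awaitWorkers`) are assumptions for illustration, and the worker body is a placeholder for real work.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; all names here are illustrative assumptions.
final class CountDownLatchDemo {
    /** Starts `workers` threads and waits for all of them; returns how many had finished. */
    static int awaitWorkers(int workers) {
        CountDownLatch done = new CountDownLatch(workers); // one count per worker
        AtomicInteger finished = new AtomicInteger();

        for (int i = 0; i < workers; i++) {
            new Thread(() -> {
                finished.incrementAndGet(); // placeholder for the worker's real task
                done.countDown();           // signal that this worker is finished
            }).start();
        }

        try {
            done.await(); // blocks until countDown() has been called `workers` times
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return finished.get();
    }

    public static void main(String[] args) {
        System.out.println(awaitWorkers(4)); // prints 4: await() returned only after all workers counted down
    }
}
```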