Table of contents

1--Dynamic input and static input

2--Pytorch API

3--Complete Code Demonstration

4--Model Visualization

5--Test the dynamically exported Onnx model

1--Dynamic input and static input

When PyTorch exports a network to the ONNX format, the model can be exported with either dynamic input or static input. With dynamic input, some dimensions of the model's input are dynamic, so the user can choose their sizes when running the model. With static input, every dimension of the input is fixed, so at inference time the model only accepts data whose shape exactly matches the one specified at export.

Dynamic input is therefore more flexible than static input: a single exported model can serve different batch sizes and image resolutions.
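To make the distinction concrete, here is a small pure-Python sketch. It is not part of PyTorch or ONNX Runtime, and shape_matches is a hypothetical helper name. A declared input shape is modelled as a list whose entries are either fixed integers (static dimensions) or names (dynamic dimensions), and the function checks whether a concrete input shape would be acceptable.

```python
from typing import Sequence, Union

# A declared dimension is either a fixed size (int, static) or a symbolic name (str, dynamic).
Dim = Union[int, str]


def shape_matches(declared: Sequence[Dim], actual: Sequence[int]) -> bool:
    """Hypothetical helper: is a concrete input shape compatible with a declared shape?

    A static (int) dimension must match exactly; a dynamic (str) dimension
    accepts any positive size. The number of dimensions is fixed in this sketch.
    """
    if len(declared) != len(actual):
        return False
    for expected, got in zip(declared, actual):
        if isinstance(expected, int):
            if expected != got:
                return False
        elif got <= 0:
            return False
    return True


static_shape = [8, 3, 256, 256]
dynamic_shape = ["batch_size", 3, "input_height", "input_width"]

print(shape_matches(static_shape, (8, 3, 256, 256)))   # True
print(shape_matches(static_shape, (4, 3, 512, 512)))   # False
print(shape_matches(dynamic_shape, (4, 3, 512, 512)))  # True
print(shape_matches(dynamic_shape, (4, 1, 512, 512)))  # False: the channel dimension is still static
```

Only the dimensions declared as dynamic are free; any dimension left static still has to match exactly.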

2--Pytorch API

In PyTorch, whether the input is dynamic or static is controlled by the dynamic_axes parameter of torch.onnx.export(). Its default value is None, so models are exported with static input by default.

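The following snippet shows a dynamic export configured through the dynamic_axes parameter. dynamic_axes maps each input or output name to a dictionary whose keys are dimension indices and whose values are names for those dimensions. Here the keys 0, 2 and 3 mark the batch, height and width dimensions of the NCHW tensor as dynamic, while dimension 1 (channels) stays fixed.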

```python
# Export with dynamic input
# (model and input_data are defined as in the complete example in section 3)
input_name = 'input'
output_name = 'output'
torch.onnx.export(model,
                  input_data,
                  "Dynamics_InputNet.onnx",
                  opset_version=11,
                  input_names=[input_name],
                  output_names=[output_name],
                  dynamic_axes={
                      input_name: {0: 'batch_size', 2: 'input_height', 3: 'input_width'},
                      output_name: {0: 'batch_size', 2: 'output_height', 3: 'output_width'}})
```

3--Complete Code Demonstration

The following code defines a network and exports it to the ONNX format twice: once with static input and once with dynamic input.

```python
import torch
import torch.nn as nn


class Model_Net(nn.Module):
    def __init__(self):
        super(Model_Net, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.Conv2d(in_channels=64, out_channels=256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
        )

    def forward(self, data):
        data = self.layer1(data)
        return data


if __name__ == "__main__":
    # Set the input parameters
    Batch_size = 8
    Channel = 3
    Height = 256
    Width = 256
    input_data = torch.rand((Batch_size, Channel, Height, Width))

    # Instantiate the model and switch to inference mode (BatchNorm uses running statistics)
    model = Model_Net()
    model.eval()

    # Export with static input
    input_name = 'input'
    output_name = 'output'
    torch.onnx.export(model,
                      input_data,
                      "Static_InputNet.onnx",
                      verbose=True,
                      input_names=[input_name],
                      output_names=[output_name])

    # Export with dynamic input
    torch.onnx.export(model,
                      input_data,
                      "Dynamics_InputNet.onnx",
                      opset_version=11,
                      input_names=[input_name],
                      output_names=[output_name],
                      dynamic_axes={
                          input_name: {0: 'batch_size', 2: 'input_height', 3: 'input_width'},
                          output_name: {0: 'batch_size', 2: 'output_height', 3: 'output_width'}})
```
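With the TorchScript-based exporter that has long been behind torch.onnx.export(), the shape recorded for an input comes from the example input_data. dynamic_axes only replaces the listed dimensions with symbolic names, and every other dimension keeps the size seen during export. The pure-Python sketch below mimics that bookkeeping. declared_shape is a hypothetical helper for illustration, not a PyTorch function.

```python
from typing import Dict, List, Sequence, Union


def declared_shape(example_shape: Sequence[int], axes: Dict[int, str]) -> List[Union[int, str]]:
    """Hypothetical helper: approximate the input shape recorded in the ONNX graph.

    Axes listed in `axes` (the inner dict of dynamic_axes) become named symbolic
    dimensions; every other axis keeps the size of the example input used for export.
    """
    for axis in axes:
        if not 0 <= axis < len(example_shape):
            raise ValueError(f"axis {axis} is out of range for a rank-{len(example_shape)} input")
    return [axes.get(i, size) for i, size in enumerate(example_shape)]


example = (8, 3, 256, 256)  # Batch_size, Channel, Height, Width from the script above
print(declared_shape(example, {}))
# [8, 3, 256, 256]  (static export)
print(declared_shape(example, {0: "batch_size", 2: "input_height", 3: "input_width"}))
# ['batch_size', 3, 'input_height', 'input_width']  (dynamic export)
```

This is why the channel dimension (3) stays fixed even in Dynamics_InputNet.onnx: it is not listed in dynamic_axes.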

4--Model Visualization

The exported static and dynamic models can be visualized with the netron library. The code is as follows:

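```python
import netron

# Opens the model in the Netron viewer; pass "./Static_InputNet.onnx" to view the static model instead
netron.start("./Dynamics_InputNet.onnx")
```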

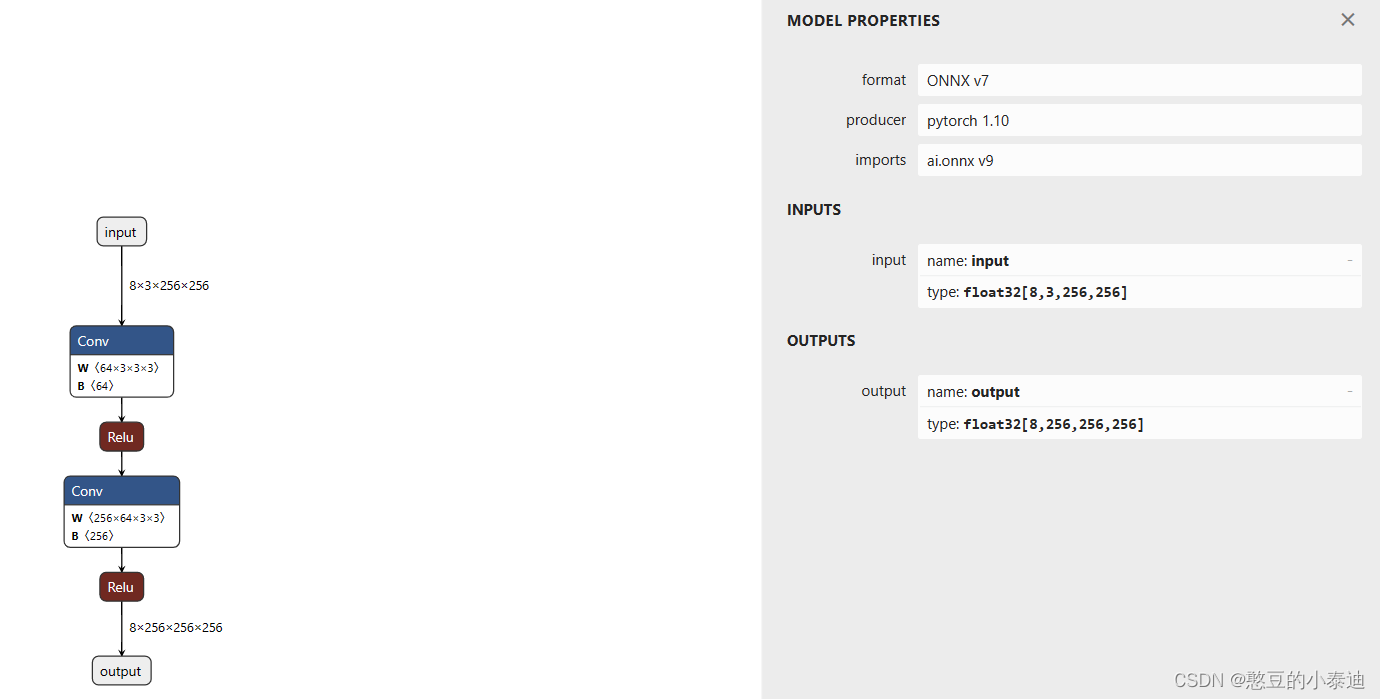

Static model visualization:

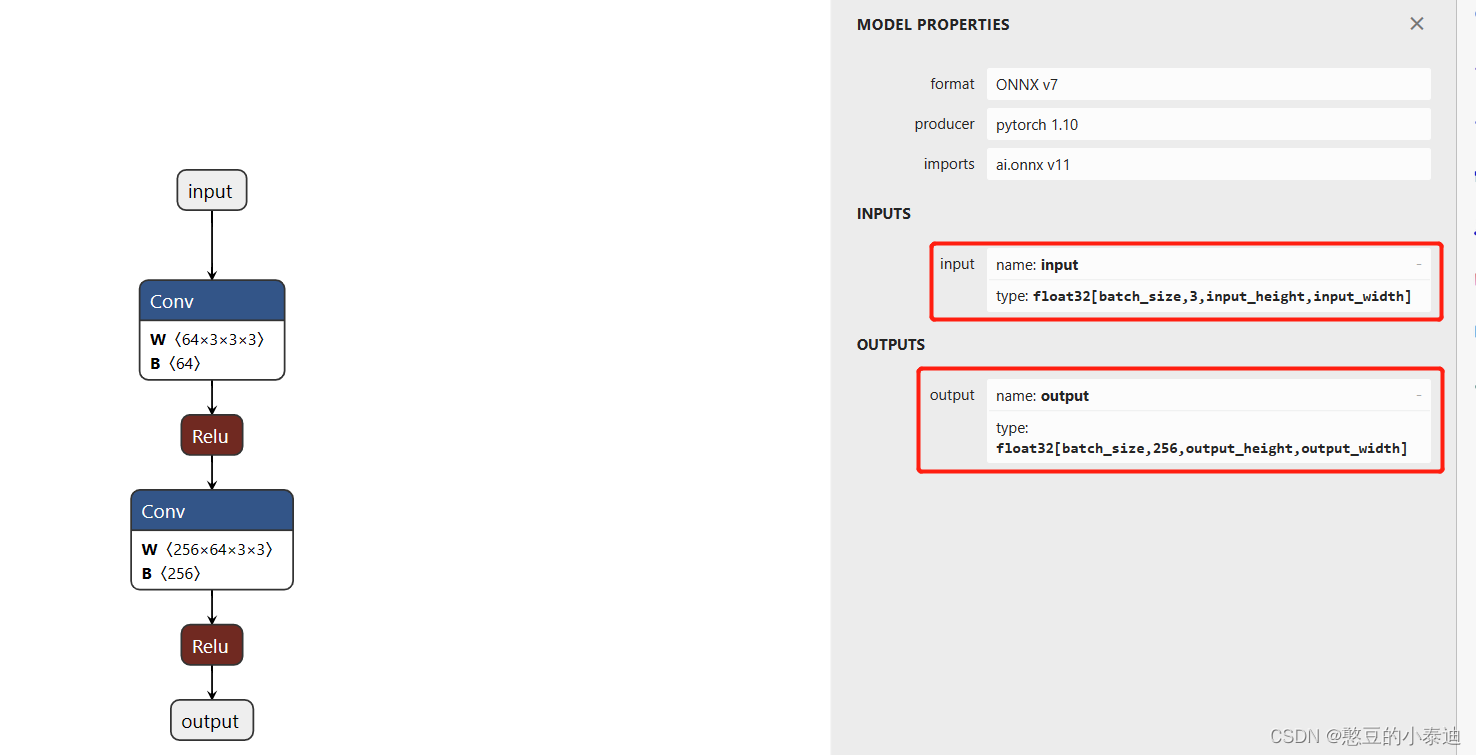

Dynamic model visualization:

5--Test the dynamically exported Onnx model

```python
import numpy as np
import onnx
import onnxruntime

if __name__ == "__main__":
    input_data1 = np.random.rand(4, 3, 256, 256).astype(np.float32)
    input_data2 = np.random.rand(8, 3, 512, 512).astype(np.float32)

    # Load the ONNX model
    Onnx_file = "./Dynamics_InputNet.onnx"
    Model = onnx.load(Onnx_file)
    onnx.checker.check_model(Model)  # Check that the ONNX model is well-formed

    # Run inference with onnxruntime; providers are listed in priority order, with CPU as the fallback
    model = onnxruntime.InferenceSession(Onnx_file, providers=['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider'])
    input_name = model.get_inputs()[0].name
    output_name = model.get_outputs()[0].name

    # run() returns a list with one array per requested output
    output1 = model.run([output_name], {input_name: input_data1})
    output2 = model.run([output_name], {input_name: input_data2})

    print('output1.shape: ', np.squeeze(np.array(output1), 0).shape)
    print('output2.shape: ', np.squeeze(np.array(output2), 0).shape)
```

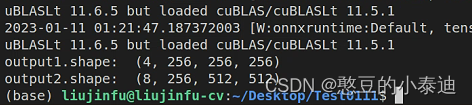

The output shows that the ONNX model exported with dynamic input also produces output with dynamic dimensions that track the input. The (4, 3, 256, 256) input yields a (4, 256, 256, 256) output, and the (8, 3, 512, 512) input yields an (8, 256, 512, 512) output. This is consistent with a correct export.