Deep Learning (28) - YOLO Series (7)

Just saying: if you need the source code, please visit Jane's GitHub: Here

I didn't finish this in the morning, so I'm continuing in the afternoon with the small remaining piece. The key parts of training and of the data pipeline were already written up this morning; now I'll cover the inference part.

To make the inference process more efficient, that is, faster:

The overall detect process

1.1 attempt_load(weights)

- weights: the previously trained YOLOv7 weights to be loaded

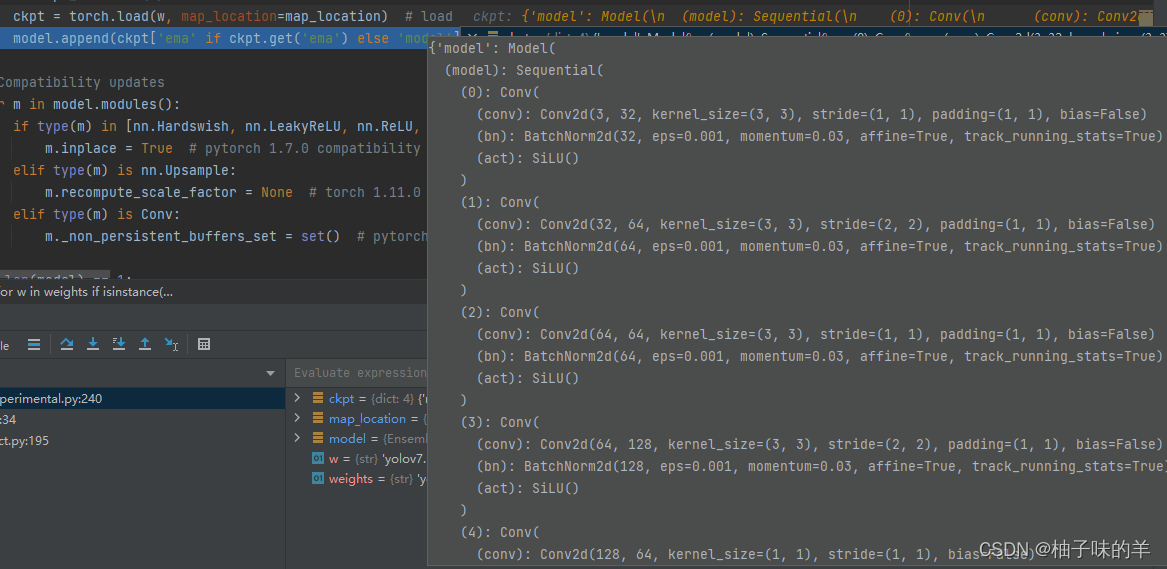

- Right after loading, the BN layers are still there; nothing has been merged yet

- The key step is the fuse() call that follows

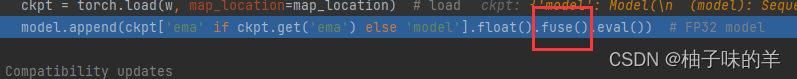
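A quick way to confirm that the BN layers are still present right after loading is to count them. The helper count_bn_layers below is hypothetical (it is not part of YOLOv7), and the toy nn.Sequential only stands in for a freshly loaded, unfused model; with a real model you would pass in the object returned by attempt_load.

```python
import torch.nn as nn


def count_bn_layers(model: nn.Module) -> int:
    """Count the BatchNorm2d modules still present in a model (hypothetical helper)."""
    return sum(isinstance(m, nn.BatchNorm2d) for m in model.modules())


# Toy stand-in for a loaded but not yet fused network: (conv -> BN -> SiLU) x 2
toy = nn.Sequential(
    nn.Conv2d(3, 8, 3, padding=1, bias=False),
    nn.BatchNorm2d(8),
    nn.SiLU(),
    nn.Conv2d(8, 8, 3, padding=1, bias=False),
    nn.BatchNorm2d(8),
    nn.SiLU(),
)
print(count_bn_layers(toy))  # 2
```

Running the same count after fusion should give 0 for the plain conv+BN blocks.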

1.2 model.fuse()

```python
# Well hidden: at first I didn't expect the entry point to be here
# (method of the detection Model class; RepConv, RepConv_OREPA, Conv, IDetect,
#  IAuxDetect and fuse_conv_and_bn all come from the YOLOv7 codebase)
def fuse(self):  # fuse model Conv2d() + BatchNorm2d() layers
    print('Fusing layers... ')
    for m in self.model.modules():
        if isinstance(m, RepConv):
            # RepVGG-style block: merge its branches
            #print(f" fuse_repvgg_block")
            m.fuse_repvgg_block()
        elif isinstance(m, RepConv_OREPA):
            #print(f" switch_to_deploy")
            m.switch_to_deploy()
        elif type(m) is Conv and hasattr(m, 'bn'):
            m.conv = fuse_conv_and_bn(m.conv, m.bn)  # update conv
            delattr(m, 'bn')  # remove batchnorm
            m.forward = m.fuseforward  # update forward
        elif isinstance(m, (IDetect, IAuxDetect)):
            # detection heads have their own fuse()
            m.fuse()
            m.forward = m.fuseforward
    self.info()
    return self
```

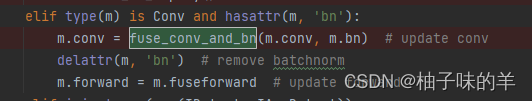

When a plain Conv module is encountered and it still has a BN layer (hasattr(m, 'bn')), its conv and BN are merged by fuse_conv_and_bn:

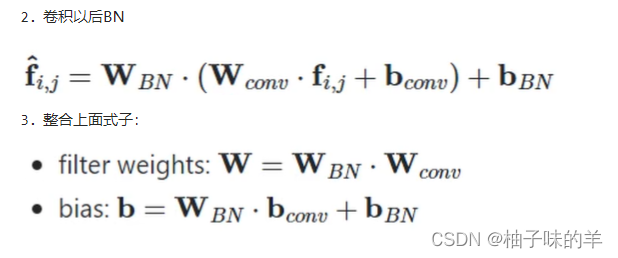
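The pattern in fuse() (walk every module, replace the conv with a fused one, delete the BN, swap forward for fuseforward) can be reproduced on a toy model. This is a sketch, not the YOLOv7 code: ConvBNAct and fuse_model are hypothetical names, and the folding itself uses PyTorch's built-in torch.nn.utils.fusion.fuse_conv_bn_eval instead of YOLOv7's fuse_conv_and_bn.

```python
import torch
import torch.nn as nn
from torch.nn.utils.fusion import fuse_conv_bn_eval


class ConvBNAct(nn.Module):
    """Hypothetical stand-in for YOLOv7's Conv block: conv -> BN -> SiLU."""

    def __init__(self, c1: int, c2: int, k: int = 3):
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, padding=k // 2, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.SiLU()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        # Used after fusion: BN has already been folded into self.conv
        return self.act(self.conv(x))


def fuse_model(model: nn.Module) -> nn.Module:
    """Fold every conv+BN pair in place, following the same pattern as fuse()."""
    model.eval()  # the fusion is only exact when BN uses its running statistics
    for m in model.modules():
        if type(m) is ConvBNAct and hasattr(m, "bn"):
            m.conv = fuse_conv_bn_eval(m.conv, m.bn)  # PyTorch's built-in conv+BN folding
            delattr(m, "bn")                          # drop the BN submodule
            m.forward = m.fuseforward                 # switch to the BN-free forward
    return model


torch.manual_seed(0)
net = nn.Sequential(ConvBNAct(3, 8), ConvBNAct(8, 8))
with torch.no_grad():
    # Give every BN non-trivial statistics so the comparison is meaningful
    for bn in (m for m in net.modules() if isinstance(m, nn.BatchNorm2d)):
        bn.running_mean.uniform_(-1, 1)
        bn.running_var.uniform_(0.5, 2.0)
        bn.weight.uniform_(0.5, 1.5)
        bn.bias.uniform_(-0.5, 0.5)

    x = torch.randn(1, 3, 16, 16)
    ref = net.eval()(x)   # output before fusion
    fused = fuse_model(net)
    out = fused(x)        # output after fusion

print(torch.allclose(ref, out, atol=1e-4))  # True
```

Assigning m.forward on the instance works because nn.Module calls self.forward, so the instance attribute shadows the class method.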

1.3 fuse_conv_and_bn(conv,bn)

- First define a new conv [the inputsize, outputsize and kernel are the same as the original input]

- First get w_conv:

w_conv = conv.weight.clone().view(conv.out_channels, -1) - Get w_bn:

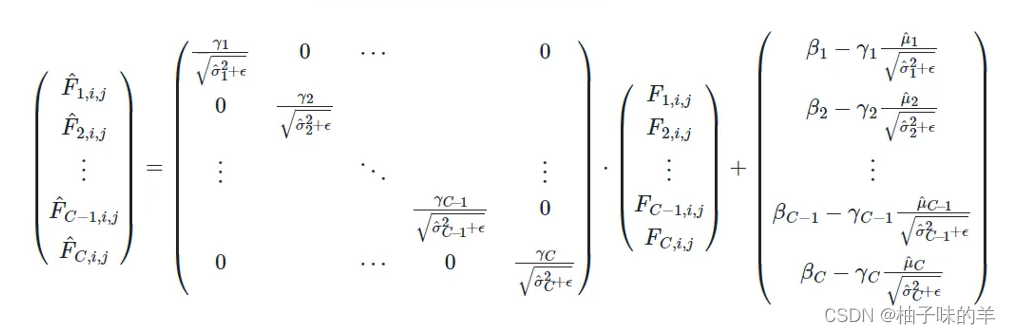

w_bn = torch.diag(bn.weight.div(torch.sqrt(bn.eps + bn.running_var)))【bn.weight 就是以下公式中的gamma,sigma平方是方差bn.running_var】

- get w_fuse:

fusedconv.weight.copy_(torch.mm(w_bn, w_conv).view(fusedconv.weight.shape)) - Get b_conv, because we set the bias to 0 during the learning process, so:

b_conv = torch.zeros(conv.weight.size(0), device=conv.weight.device) if conv.bias is None else conv.bias - Get b_bn:

b_bn = bn.bias - bn.weight.mul(bn.running_mean).div(torch.sqrt(bn.running_var + bn.eps))[bn.bias is β in the above formula, μ is the mean value bn.running_mean] - Calculate b_fuse

fusedconv.bias.copy_(torch.mm(w_bn, b_conv.reshape(-1, 1)).reshape(-1) + b_bn)
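The steps above follow from writing BN in eval mode as a per-channel affine transform of the conv output. A short derivation, using the same names as the code (γ = bn.weight, β = bn.bias, μ = bn.running_mean, σ² = bn.running_var, ε = bn.eps, and W_conv read as the flattened matrix w_conv):

$$
\begin{aligned}
\text{conv:}\quad & x = W_{conv} * z + b_{conv} \\
\text{BN (eval):}\quad & y = \gamma\,\frac{x - \mu}{\sqrt{\sigma^2 + \epsilon}} + \beta \\
\Rightarrow\quad & y = \underbrace{\left(W_{bn} W_{conv}\right)}_{W_{fuse}} * z \;+\; \underbrace{W_{bn}\, b_{conv} + b_{bn}}_{b_{fuse}} \\
\text{where}\quad & W_{bn} = \operatorname{diag}\!\left(\frac{\gamma}{\sqrt{\sigma^2 + \epsilon}}\right), \qquad b_{bn} = \beta - \frac{\gamma\,\mu}{\sqrt{\sigma^2 + \epsilon}}
\end{aligned}
$$

W_bn is exactly w_bn, b_bn is exactly b_bn, and the two braced terms are what the code copies into fusedconv.weight and fusedconv.bias.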

```python
import torch
import torch.nn as nn


def fuse_conv_and_bn(conv, bn):
    # Fuse convolution and batchnorm layers https://tehnokv.com/posts/fusing-batchnorm-and-conv/
    fusedconv = nn.Conv2d(conv.in_channels,
                          conv.out_channels,
                          kernel_size=conv.kernel_size,
                          stride=conv.stride,
                          padding=conv.padding,
                          groups=conv.groups,
                          bias=True).requires_grad_(False).to(conv.weight.device)

    # prepare filters
    # bn.weight is gamma in the paper and bn.bias is beta; bn.running_mean and
    # bn.running_var are the running (moving-average) mean and variance accumulated during training
    w_conv = conv.weight.clone().view(conv.out_channels, -1)
    w_bn = torch.diag(bn.weight.div(torch.sqrt(bn.eps + bn.running_var)))
    fusedconv.weight.copy_(torch.mm(w_bn, w_conv).view(fusedconv.weight.shape))

    # prepare spatial bias
    b_conv = torch.zeros(conv.weight.size(0), device=conv.weight.device) if conv.bias is None else conv.bias
    b_bn = bn.bias - bn.weight.mul(bn.running_mean).div(torch.sqrt(bn.running_var + bn.eps))
    fusedconv.bias.copy_(torch.mm(w_bn, b_conv.reshape(-1, 1)).reshape(-1) + b_bn)

    return fusedconv
```

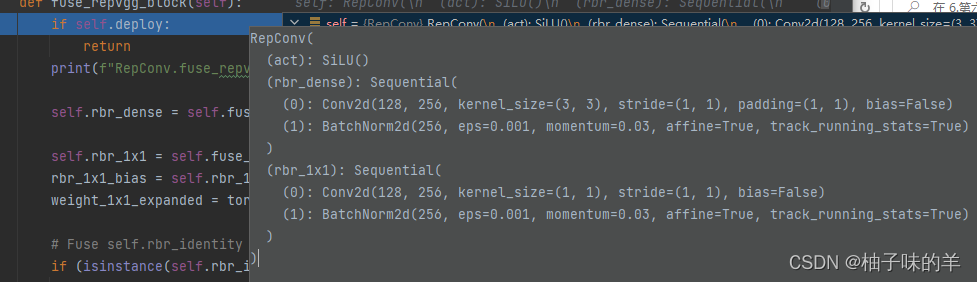

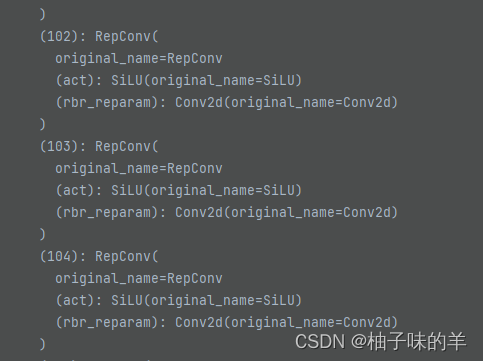
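The torch.diag / torch.mm formulation builds an out_channels × out_channels matrix that is almost all zeros. The same math can be expressed by scaling each output channel with broadcasting. The sketch below is an alternative formulation, not the YOLOv7 code: fuse_conv_bn_broadcast is a hypothetical name, it additionally copies conv.dilation, and the check at the end compares it against conv followed by BN in eval mode.

```python
import torch
import torch.nn as nn


def fuse_conv_bn_broadcast(conv: nn.Conv2d, bn: nn.BatchNorm2d) -> nn.Conv2d:
    """Fold an eval-mode BN into the preceding conv by per-channel scaling (hypothetical helper)."""
    fused = nn.Conv2d(conv.in_channels, conv.out_channels, conv.kernel_size,
                      stride=conv.stride, padding=conv.padding, dilation=conv.dilation,
                      groups=conv.groups, bias=True).to(conv.weight.device)
    with torch.no_grad():
        scale = bn.weight / torch.sqrt(bn.running_var + bn.eps)  # gamma / sqrt(var + eps), one per output channel
        fused.weight.copy_(conv.weight * scale.view(-1, 1, 1, 1))  # same result as diag(scale) @ w_conv
        b_conv = conv.bias if conv.bias is not None else torch.zeros_like(bn.running_mean)
        fused.bias.copy_((b_conv - bn.running_mean) * scale + bn.bias)  # = w_bn @ b_conv + b_bn
    return fused.requires_grad_(False)


torch.manual_seed(0)
conv = nn.Conv2d(4, 6, 3, padding=1, bias=False)
bn = nn.BatchNorm2d(6)
with torch.no_grad():  # give BN non-trivial statistics and affine parameters
    bn.running_mean.uniform_(-1, 1)
    bn.running_var.uniform_(0.5, 2.0)
    bn.weight.uniform_(0.5, 1.5)
    bn.bias.uniform_(-0.5, 0.5)
bn.eval()  # use running statistics, as at inference time

x = torch.randn(2, 4, 8, 8)
with torch.no_grad():
    ref = bn(conv(x))
    out = fuse_conv_bn_broadcast(conv, bn)(x)
print(torch.allclose(ref, out, atol=1e-5))  # True
```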

1.4 RepVGG block (fuse_repvgg_block)

For a RepConv (RepVGG-style) block, fuse_repvgg_block first merges each branch's convolution with its BN:

- The original block:

- After fusing rbr_dense:

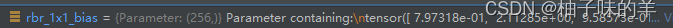

- After fusing rbr_1x1:

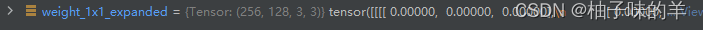
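Each branch is folded exactly as in 1.3, so afterwards every branch is just a kernel plus a bias. A minimal sketch under these assumptions: fuse_branch is a hypothetical helper that returns (kernel, bias) tensors instead of a module, the branch convs have no bias of their own, and the toy branches only mirror rbr_dense (3×3 conv + BN) and rbr_1x1 (1×1 conv + BN).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fuse_branch(conv: nn.Conv2d, bn: nn.BatchNorm2d):
    """Return (kernel, bias) of one conv equivalent to conv -> BN in eval mode.

    Assumes the branch conv has no bias of its own (bias=False).
    """
    with torch.no_grad():
        std = torch.sqrt(bn.running_var + bn.eps)
        kernel = conv.weight * (bn.weight / std).view(-1, 1, 1, 1)
        bias = bn.bias - bn.running_mean * bn.weight / std
    return kernel, bias


torch.manual_seed(0)
c = 4
# Toy branches: 3x3 conv+BN (like rbr_dense) and 1x1 conv+BN (like rbr_1x1)
dense_conv, dense_bn = nn.Conv2d(c, c, 3, padding=1, bias=False), nn.BatchNorm2d(c)
one_conv, one_bn = nn.Conv2d(c, c, 1, padding=0, bias=False), nn.BatchNorm2d(c)
with torch.no_grad():
    for bn in (dense_bn, one_bn):
        bn.running_mean.uniform_(-1, 1)
        bn.running_var.uniform_(0.5, 2.0)
dense_bn.eval()
one_bn.eval()

k3, b3 = fuse_branch(dense_conv, dense_bn)
k1, b1 = fuse_branch(one_conv, one_bn)

x = torch.randn(1, c, 8, 8)
with torch.no_grad():
    ok3 = torch.allclose(dense_bn(dense_conv(x)), F.conv2d(x, k3, b3, padding=1), atol=1e-5)
    ok1 = torch.allclose(one_bn(one_conv(x)), F.conv2d(x, k1, b1, padding=0), atol=1e-5)
print(ok3, ok1)  # True True
```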

1.5 Padding the 1×1 convolution into a 3×3

The 1×1 kernel is zero-padded to 3×3 so that it can be added to the 3×3 branch. After padding:

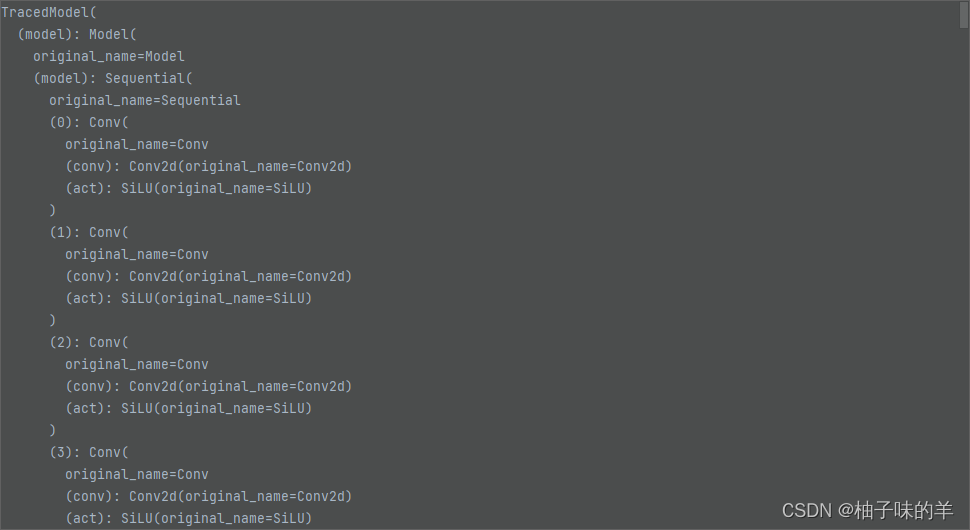
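Padding works because a 1×1 kernel placed in the centre of an otherwise zero 3×3 kernel, run with padding=1, produces exactly the same output as the original 1×1 conv with padding=0. Since convolution is linear in the kernel, the two branches then collapse into one 3×3 conv by adding kernels and biases. This is a sketch of the idea with random, already-fused branch parameters; the variable names are illustrative only.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
c = 4
# Already-fused branch parameters: a 3x3 branch and a 1x1 branch
k3, b3 = torch.randn(c, c, 3, 3), torch.randn(c)
k1, b1 = torch.randn(c, c, 1, 1), torch.randn(c)

# Pad the last two dims (W, then H) by 1 on each side: the 1x1 weight ends up in the centre
k1_as_3 = F.pad(k1, [1, 1, 1, 1])

# Convolution is linear in the kernel, so the two branches merge into a single 3x3 conv
k_merged, b_merged = k3 + k1_as_3, b3 + b1

x = torch.randn(1, c, 8, 8)
two_branch = F.conv2d(x, k3, b3, padding=1) + F.conv2d(x, k1, b1, padding=0)
single = F.conv2d(x, k_merged, b_merged, padding=1)
print(k1_as_3.shape)                                  # torch.Size([4, 4, 3, 3])
print(torch.allclose(two_branch, single, atol=1e-4))  # True
```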

With that, everything has been merged, and the model now looks like this:

OK, this time it really is the end. Bye-bye~~~~