Two paralyzed patients have been able to communicate with unprecedented accuracy and speed through the use of artificial intelligence (AI)-enhanced brain-machine implants.

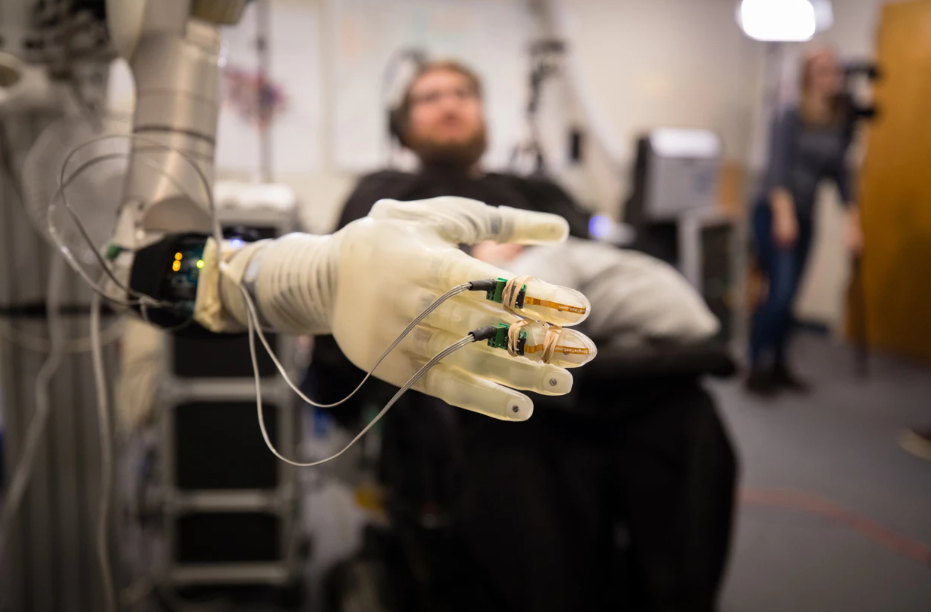

A brain-computer interface converts participants' brain signals into animated speech and facial movements. Source: Noah Berger

In papers published separately in the journal Nature (IF2022=64.8) on August 23, two research teams describe brain-computer interfaces (BCIs) that convert brain signals into text or synthesized speech. The BCIs decode speech at 62 words per minute and 78 words per minute, respectively. Both are faster than any previous attempt, although still well short of natural conversation, which runs at about 160 words per minute.

"It is now conceivable that in the future it might be possible to restore fluent communication to paralyzed patients, allowing them to say whatever they want with accuracy high enough to be reliably understood," neuroscientist Francis Willett of Stanford University in California said at an August 22 news conference.

Such devices "could become products in the near future," says Christian Herff, a computational neuroscientist at Maastricht University in the Netherlands.

Electrodes and Algorithms

Willett and colleagues developed a BCI that interprets neural activity at the cellular level and translates it into text [1]. They collaborated on the study with Pat Bennett, a 67-year-old patient with motor neurone disease, also known as amyotrophic lateral sclerosis, a condition in which the gradual loss of muscle control leads to difficulty moving and speaking.


First, the researchers performed surgery on Bennett, implanting a set of small silicon electrodes into language-related brain regions, a few millimeters below the surface. They then trained a deep-learning algorithm to identify unique signals in Bennett's brain as she tried to utter various phrases, using either a large vocabulary of 125,000 words or a small vocabulary of 50 words. The AI decodes words from phonemes, the subunits of speech that form spoken language. For the 50-word vocabulary, the BCI works 2.7 times faster than the earlier state-of-the-art BCI [3], with a word error rate of 9.1%. For the 125,000-word vocabulary, the error rate rises to 23.8%. "About three-quarters of the words were correctly decoded," Willett said in a news release.

"For those who cannot speak, it means they can stay in touch with the larger world, perhaps continue to work and maintain relationships with friends and family," Bennett said in a statement to reporters.

Reading brain activity

In another study [2], neurosurgeon Edward Chang of the University of California, San Francisco, and colleagues worked with Ann, a 47-year-old woman who lost her ability to speak after a brainstem stroke 18 years earlier.

They took a different approach from Willett's team, placing a thin rectangle containing 253 electrodes on the surface of the cortex to record neural activity. The technique, called electrocorticography (ECoG), is considered less invasive and can record the combined activity of thousands of neurons simultaneously. The team trained the AI algorithm to recognize patterns in the brain activity associated with Ann's attempts to speak 249 sentences, using a vocabulary of 1,024 words. The device ended up producing 78 words per minute with a median word error rate of 25.5%.
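The word error rates quoted for both devices can be made concrete with a short calculation. The sketch below uses the standard definition of word error rate (word-level substitutions, deletions, and insertions divided by the number of words in the reference sentence) and assumes that a median figure is taken over per-sentence rates; the article does not say exactly how either team computed its numbers. The function name `word_error_rate` and the example sentences are hypothetical and are not taken from either study.

```python
from statistics import median


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical (intended sentence, decoded sentence) pairs for illustration.
pairs = [
    ("i would like a glass of water please", "i would like a class of water"),
    ("how are you today", "how are you today"),
    ("it is nice to see you", "it is nice to see"),
]

rates = [word_error_rate(ref, hyp) for ref, hyp in pairs]
median_wer = median(rates)
print("per-sentence WER:", [f"{r:.1%}" for r in rates])
print(f"median WER: {median_wer:.1%}")
```

Because insertions also count as errors, a word error rate is not exactly the complement of the fraction of words decoded correctly, but for low rates the two are close.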

Although the implant used by Willett's team captures neural activity more precisely and performs better on larger vocabularies, Blaise Yvert, a neurotechnology researcher at the Grenoble Institute for Neuroscience, said it is "a good thing to see that ECoG can achieve low error rates."

Chang and his team also created custom algorithms to convert Ann's brain signals into an animated avatar with a synthetic voice and simulated facial expressions. Using recordings from Ann's wedding footage for training, they created a personalized voice that sounded like Ann did before her injury.

In a feedback session after the study, Ann told the researchers: "The simple fact of hearing a voice similar to my own is emotionally charged. When I can speak for myself, it means a lot to me!"

"Voice is a very important part of our identity," Chang said. "It's not just about communication, it's about who we are."

Clinical application


Many improvements are needed before BCIs can be used clinically. "The ideal would be a wireless connection," Ann told the researchers. A BCI suitable for everyday use would have to be a fully implantable system with no visible connectors or cables, Yvert added. Both teams hope to continue improving the speed and accuracy of their devices with more powerful decoding algorithms.

Participants in both studies were still able to use facial muscles when thinking about speaking, and brain regions associated with language were intact, Herff said. "It doesn't necessarily work for every patient."

"We see it as a proof of concept, just to provide momentum for people in the field to turn it into a usable product," Willett said.

The devices must also be tested in larger groups of people to prove their reliability. "No matter how elegant the data and how sophisticated the technology, we have to understand them in a very careful way," says Judy Illes, a neuroethics researcher at the University of British Columbia in Vancouver, Canada. "We have to be careful not to overpromise broad applicability to large populations," she adds. "I'm not sure we're there yet."

References:

[1]. Willett, F. R. et al. Nature https://doi.org/10.1038/s41586-023-06377-x (2023).

[2]. Metzger, S. L. et al. Nature https://doi.org/10.1038/s41586-023-06443-4 (2023).

[3]. Moses, D. A. et al. N. Engl. J. Med. 385, 217–227 (2021).

Read the original content:

https://doi.org/10.1038/d41586-023-02682-7
