Find the real interface of the data

Open the Jingdong product website to view product reviews. We clicked on the comment to turn the page, and found that the URL did not change, indicating that the webpage is a dynamic webpage.

API name: item_review - get JD product reviews

public parameters

| name | type | must | describe |

|---|---|---|---|

| key | String | yes | Call key (must be spliced in the URL in GET mode) |

| secret | String | yes | call key |

| api_name | String | yes | API interface name (included in the request address) [item_search, item_get, item_search_shop, etc.] |

| cache | String | no | [yes, no] The default is yes, the cached data will be called, and the speed is relatively fast |

| result_type | String | no | [json,jsonu,xml,serialize,var_export] returns the data format, the default is json, and the content output by jsonu can be read directly in Chinese |

| lang | String | no | [cn,en,ru] translation language, default cn Simplified Chinese |

| version | String | no | API version |

request parameters

Request parameter: num_iid=71619129750&page=1

Parameter description: item_id: product ID

page: number of pages

response parameters

Version: Date:

| name | type | must | example value | describe |

|---|---|---|---|---|

| items |

items[] | 0 | Get JD Product Reviews | |

| rate_content |

String | 0 | The style of this canvas shoe is quite good, it is also very versatile to wear, and the workmanship is very fine. ! | comments |

| rate_date |

Date | 0 | 2020-07-16 17:04:45 | comment date |

| pics |

MIX | 0 | ["//img30.360buyimg.com/n0/s128x96_jfs/t1/143538/26/2997/98915/5f10182dE075cf6f4/3893a6ebd54bf20b.jpg"] | comment pictures |

| display_user_nick |

String | 0 | j***X | Buyer Nickname |

| auction_sku |

String | 0 | Color: White (velvet); Size: 2XL | Review Product Attributes |

| add_feedback |

String | 0 | The fabric of the clothes is very good and it is very comfortable to wear. The clothes fit well! | Review content |

Through the loop, crawl the comment data of all pages

The key to page-turning crawling is to find the "page-turning" rule of the real address. We clicked on page 1, page 2, and page 3 respectively, and found that the different page numbers were the same except that the page parameters were inconsistent. The "page" of page 1 is 1, the "page" of page 2 is 2, the "page" of page 2 is 2, and so on. We nest a For loop and store the data through pandas. Run the code to automatically crawl the comment information of other pages and store it in the t.xlsx file. All codes are as follows:

import requests

import pandas as pd

items=[]

for i in range(1,20):

header = {'User-agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36 SLBrowser/8.0.1.4031 SLBChan/105'}

url=f'https://api.m.jd.com/?appid=item-v3&functionId=pc_club_productPageComments&client=pc&clientVersion=1.0.0&t=1684832645932&loginType=3&uuid=122270672.2081861737.1683857907.1684829964.1684832583.3&productId=100009464799&score=0&sortType=5&page={i}&pageSize=10&isShadowSku=0&rid=0&fold=1&bbtf=1&shield='

response= requests.get(url=url,headers=header)

json=response.json()

data=json['comments']

for t in data:

content =t['content']

time =t['creationTime']

item=[content,time]

items.append(item)

df = pd.DataFrame(items,columns=['评论内容','发布时间'])

df.to_excel(r'C:\Users\蓝胖子\Desktop\t.xlsx',encoding='utf_8_sig')

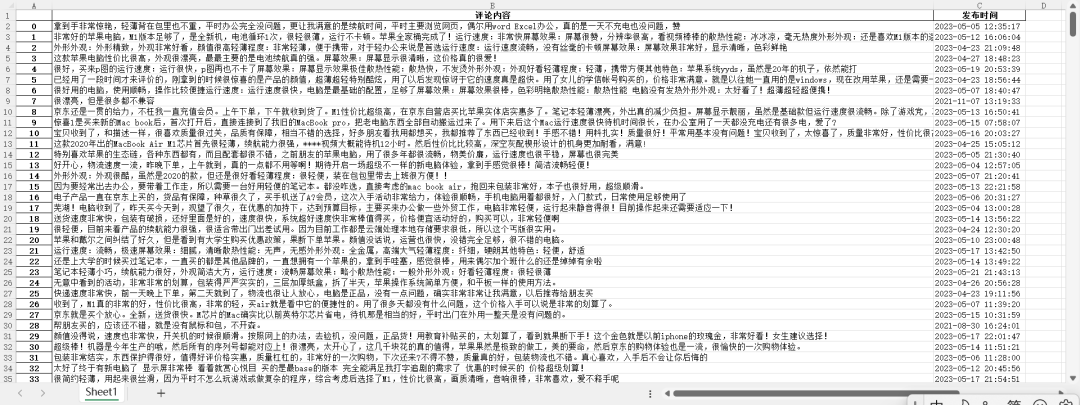

Finally, the crawled data results are as follows: