这是张老师的二作文章,可得好好读。

Summary

Traditional federated learning algorithms have strict requirements on the participation rate of devices, which limits the potential coverage of federated learning. This paper extends the current learning paradigm to include devices that may become inactive, compute incomplete updates, and leave or arrive during training. We draw analytical results to illustrate that allowing more flexible device participation affects learning convergence when the data is non-IID.

We then propose a new federated aggregation scheme that converges even though devices may be inactive or return incomplete updates. We also investigate how the learning process accommodates early departure or late arrival and analyze their impact on convergence.

1 Introduction

Considering that federated learning typically requires thousands of communication rounds to converge, it is difficult in practice to ensure that all devices are available throughout the training process. Additionally, there are often multiple applications running concurrently on user devices, competing for already highly constrained hardware resources. Therefore, there is no guarantee that the device can complete the specified training tasks as expected in each round of training.

While many methods have been proposed to offload the workload of individual devices, such as weight compression and federated dropout, they cannot completely eliminate the possibility of a device being unable to perform its training duties. Therefore, in large-scale federated learning, many resource-constrained devices must first be excluded from joining federated learning, which limits the potential availability of training datasets and weakens the applicability of federated learning. Furthermore, existing work does not specify how to react when encountering unexpected device behaviors, nor does it analyze the (negative) impact of these behaviors on training progress.

In this paper, we relax these restrictions and allow devices to follow a more flexible participation model :

- Incompleteness : A device may submit only partially completed work in a round.

- Inactive : Additionally, the device may not complete any updates, or respond to the coordinator at all.

- Early exit : In extreme cases, existing equipment may exit training before completing all training epochs.

- Late Arrivals : In addition to existing equipment, new equipment may join after training has started.

Our approach to increasing the flexibility of device participation includes the following components, which complement the existing FedAvg algorithm and address the challenges posed by flexible device participation:

- Debiasing of Partial Model Updates

- Fast reboot on device arrival

- Redefining Model Suitability for Device Deviation

2 related work

(Some work on asynchronous training) Asynchronous aggregation in the algorithm can be naturally applied to random inactive devices, but the authors do not analyze how the convergence of their algorithm is affected by inactive or incomplete devices and data heterogeneity.

(some work that relaxes the device requirements for participation) These works do not show how changes in devices affect the convergence of training, nor do they incorporate user data heterogeneity into the algorithm design.

Wait for related work research.

3 Convergence analysis

3.1 Algorithm description

Suppose there is NN hereN devices, we kkfor each devicek defines a local objective functionF k ( w ) F_k(w)Fk( w ) . in thatwww is obviously the weight parameter of machine learning,F k ( w ) F_k(w)Fk( w ) can be devicekkAverage experience loss over all points over k . Our global goal is to minimize the following function:

F ( w ) = ∑ k = 1 N p k F k ( w ) F(w)=\sum_{k=1}^Np_kF_k(w) F(w)=k=1∑NpkFk(w)

where pk = nknp^k=\frac{n_k}{n}pk=nnk, n k n_k nkis the device kkThe number of data owned by k , and n = ∑ k = 1 N nkn=\sum_{k=1}^Nn_kn=∑k=1Nnk. Order w ∗ w^*w∗ is the functionF ( w ) F(w)F ( w ) takes the weight parameter of the minimum value. We useF k ∗ F_k^*Fk∗represents F k F_kFkminimum value.

To describe the device kkHow different the data distribution of k is from the data distribution of other devices, we quantify as Γ k = F k ( w ∗ ) − F k ∗ \Gamma_k=F_k(w^*)-F_k^*Ck=Fk(w∗)−Fk∗, at the same time Γ = ∑ k = 1 N pk Γ k \Gamma=\sum_{k=1}^Np_k\Gamma_kC=∑k=1NpkCk.

Consider discrete time steps t = 0 , 1 , ⋯ t=0,1,\cdotst=0,1,⋯ .whenttt isEEWhen the multiple of E , the model weights are synchronized. Suppose there are at mostTTFor T rounds, for each round (eg atτ \tauτ round), we perform the following three steps:

- Synchronization : The server broadcasts the latest weight w τ EG w_{\tau E}^\mathcal{G}wτEGto all clients. Each client updates its own weight parameter: w τ E k = w τ EG w_{\tau E}^k=w_{\tau E}^\mathcal{G}wτEk=wτEG

- Local training : when i = 0 , ⋯ , s τ k − 1 i=0,\cdots,s_\tau^k-1i=0,⋯,stk−When 1 , each device has its own loss functionF k F_kFkTransportation SGD calculation: w τ E + i + 1 k = w τ E + ik − η τ g τ E + ik w_{\tau E+i+1}^k=w_{\tau E+i}^k- \eta_\tau g_{\tau E+i}^kwτE+i+1k=wτE+ik−thetgτE+ikHere η τ \eta_\tauthetis with τ \tauτ decaylearning rate,0 ≤ s τ k ≤ E 0\le s_\tau^k\le E0≤stk≤E represents the number of time steps of local updates done in this round. gtk = ∇ F k ( wtk , ξ tk ) g_t^k=\nabla F_k(w_t^k,\xi_t^k)gtk=∇Fk(wtk,Xtk) is the devicekkThe stochastic gradient of k , whereξ tk \xi_t^kXtkRepresents the data of the local mini-batch. We also define g ˉ tk = ∇ F k ( wtk ) \bar g_t^k=\nabla F_k(w_t^k)gˉtk=∇Fk(wtk) means the devicekkThe full batch gradient of k , so g ˉ tk = E ξ tk [ gtk ] \bar g_t^k=\mathbb E_{\xi_t^k}[g_t^k]gˉtk=EXtk[gtk]

- 码线:电视器砖线梯度可生活在于安全权重parameters:w ( τ + 1 ) EG = w τ EG + ∑ k = 1 N p τ k ( w τ E + s τ k − w τ EG ) w ( τ + 1 ) EG = w τ EG − ∑ k = 1 N p τ k ∑ i = 0 s τ k η τ g τ E + ik w_{(\tau+1) E}^\mathcal{G}=w_{ \tau E}^\mathcal{G}+\sum_{k=1}^Np_\tau^k(w_{\tau E+s_{\tau}^k}-w_{\tau E}^\mathcal{ G})\\w_{(\tau+1) E}^\mathcal{G}=w_{\tau E}^\mathcal{G}-\sum_{k=1}^Np_\tau^k\sum_ {i=0}^{s_\tau^k}\eta_\tau g_{\tau E+i}^kw( τ + 1 ) EG=wτEG+k=1∑Nptk(wτE+stk−wτEG)w( τ + 1 ) EG=wτEG−k=1∑Nptki=0∑stkthetgτE+ikIf s τ k = 0 s_\tau^k=0stk=0 (that is, devicekkk at theτ \tauτ round without any update), then we say devicekkk at theτ \tauThe tau round isinactive. If0 < s τ k < E 0<s_\tau^k<E0<stk<E , then we say the devicekkk isincomplete. We will eachs τ k s_\tau^kstkAs a random variable following any distribution, if s τ k s_\tau^k of different devicesstkhave different distributions, then they are heterogeneous, otherwise they are homogeneous. At the same time, we allow the aggregated weight coefficient p τ k p_\tau^kptkWith time steps τ \tauτ changes. (Generallyp τ k p_\tau^kptkis s τ k s_\tau^kstkThe function)

As a special case, traditional FedAvg assumes that all devices complete all EEs per roundE time step training, sos τ k ≡ E s_\tau^k\equiv Estk≡E. _ And the aggregation coefficient p τ k ≡ pk p_\tau^k\equiv p^kused by FedAvg participated by all devicesptk≡pk , so the right side of the previous formula can be written as:w ( τ + 1 ) EG = ∑ k = 1 N p τ kw τ E k w_{(\tau+1) E}^\mathcal{G}=\sum_ {k=1}^Np_\tau^kw_{\tau E}^kw( τ + 1 ) EG=k=1∑NptkwτEkThis is because gradient aggregation is equivalent to directly aggregating model parameters .

3.2 General convergence bound

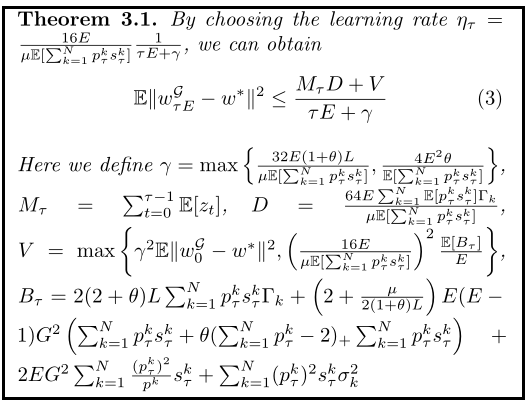

This part proves the following convergence bounds through various assumptions (including Lipschitz gradient, etc.):

3.3 Global Goal Transfer

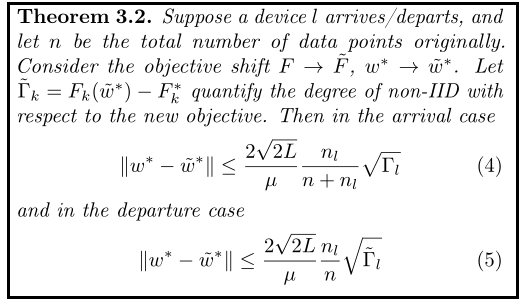

This chapter describes the phenomenon that the global loss function is shifted towards the device due to the acceptance of the weights of the specific device. The article has the following theorem:

The article then derives a new convergence bound in the case of global target shifts.