Why not just call the `from_pretrained` method and let it download the model directly? That works, but only when the machine is online, and it has several drawbacks:

- If the network is slow, the download can take a very long time; it is common for even a small model to take several hours.

- If you switch to a different training server, you have to download the model again.

- The download is prone to failing with an error.

How to call a model from Hugging Face, such as ViT:

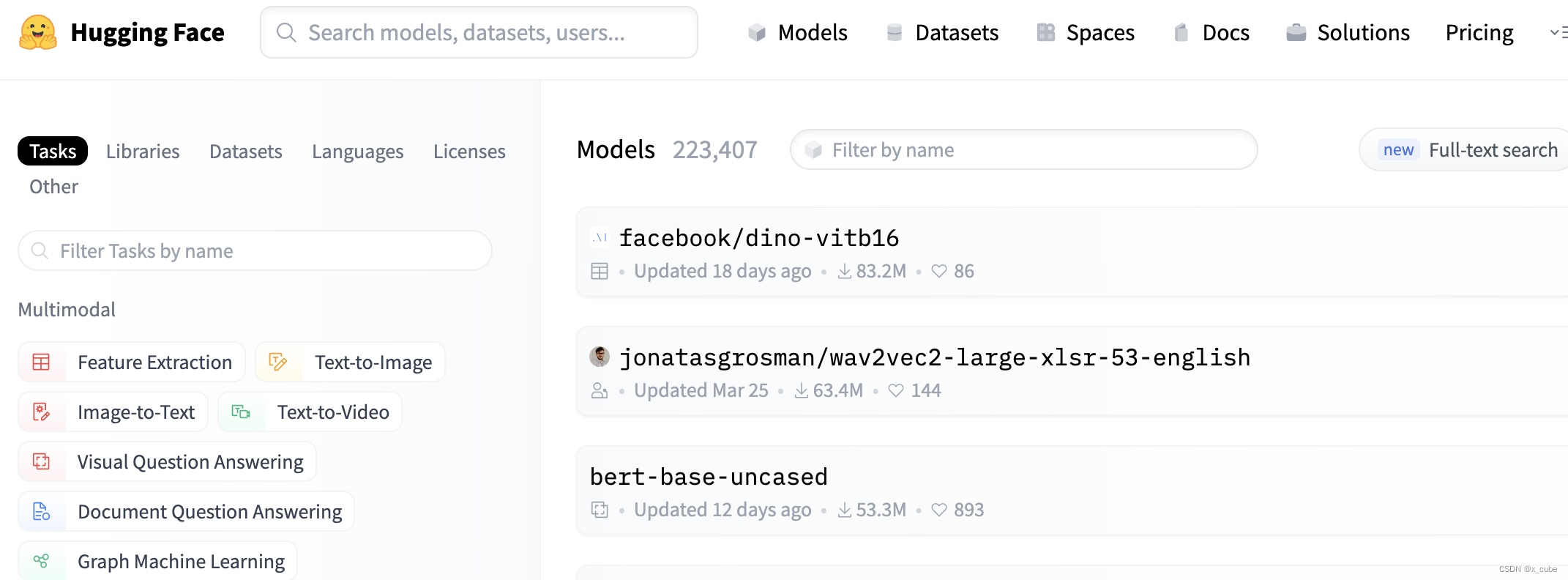

To work locally, find the model you need on the Hugging Face Models page, download it, and load it from the local path. For example:

```python
from transformers import ViTImageProcessor, ViTModel
from PIL import Image
import requests

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# This can be either a model name or a local path; a local path is less likely to raise errors.
image_model = 'google/vit-base-patch16-224-in21k'
# image_model = './models/vit-base-patch16-224-in21k'

processor = ViTImageProcessor.from_pretrained(image_model)
model = ViTModel.from_pretrained(image_model)

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)
last_hidden_states = outputs.last_hidden_state
```
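The choice between the model name and the local path can also be made automatically. The following is only a minimal sketch: the helper names `resolve_model_source` and `load_vit` are made up for illustration, and it assumes that a usable local copy is a directory containing `config.json`. It passes `local_files_only=True` to `from_pretrained` when loading from a directory, so that no download is attempted.

```python
from pathlib import Path


def resolve_model_source(local_dir: str, hub_name: str) -> str:
    """Prefer a local copy of the model; fall back to the Hub model name.

    Assumption: a directory counts as a local copy if it holds config.json.
    """
    if (Path(local_dir) / "config.json").is_file():
        return local_dir
    return hub_name


def load_vit(source: str):
    """Load the ViT processor and model from a model name or a local path."""
    # Imported lazily so the helper above works without transformers installed.
    from transformers import ViTImageProcessor, ViTModel

    # For a local directory, forbid any network access during loading.
    offline = Path(source).is_dir()
    processor = ViTImageProcessor.from_pretrained(source, local_files_only=offline)
    model = ViTModel.from_pretrained(source, local_files_only=offline)
    return processor, model
```

For example, `load_vit(resolve_model_source('./models/vit-base-patch16-224-in21k', 'google/vit-base-patch16-224-in21k'))` would use the local directory if it exists and download from the Hub otherwise.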

Download steps:

1 Open the model page and enter the model you want.

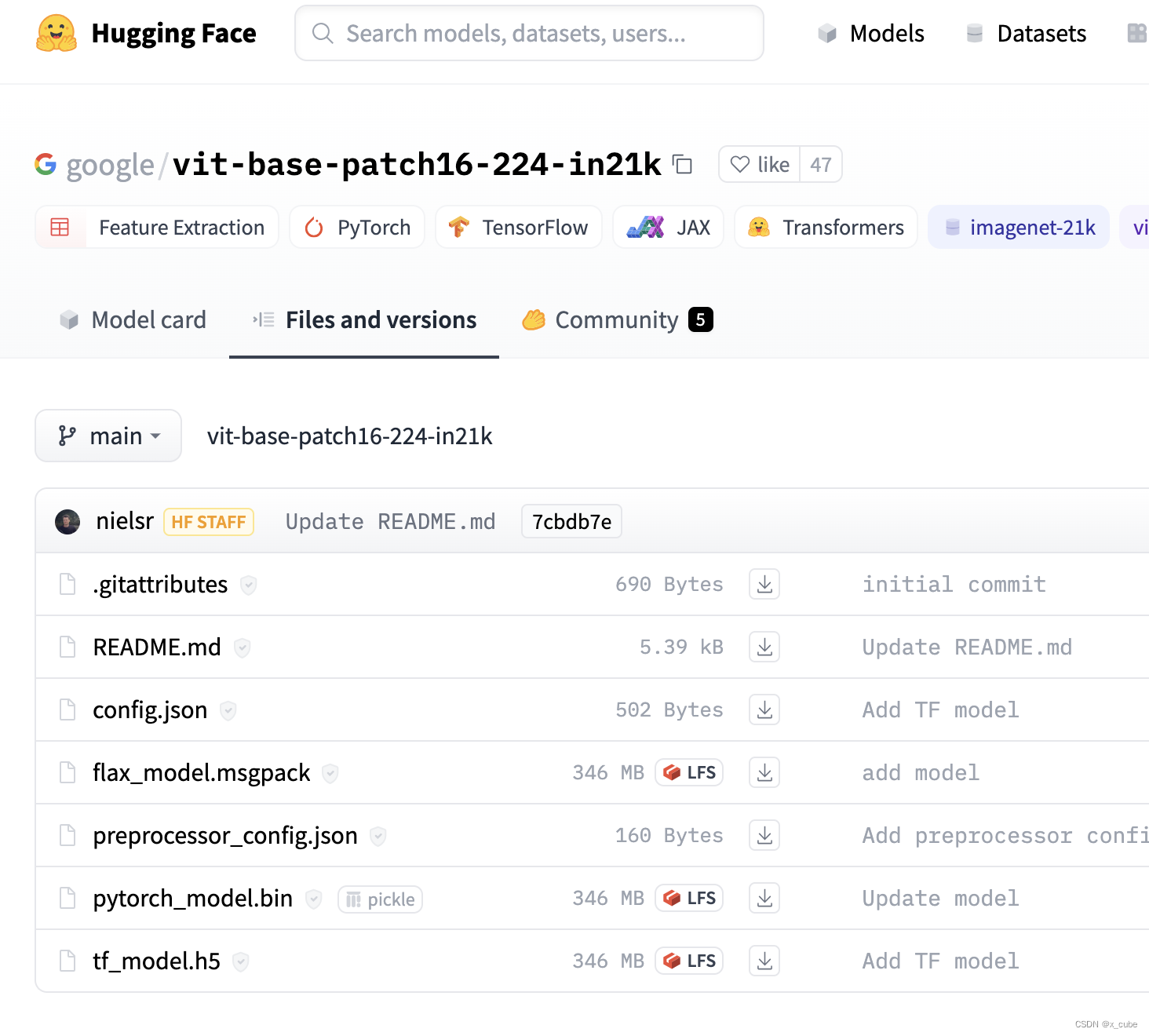

Click on the page to be selected and select the middle option:

Download what you need, choose one for LFS, choose pytorch_model.bin for pytorch, and choose tf_model.h5 for tensorflow.
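The same file selection can be scripted instead of clicked. This is a hedged sketch, not the only way to do it: the helper names and the list of configuration files are assumptions chosen for a ViT checkpoint (other models may need tokenizer files too), and `download_model` relies on `snapshot_download` from the `huggingface_hub` package, which needs network access. `missing_files` can be used on its own to check a manually downloaded folder before calling `from_pretrained`.

```python
from __future__ import annotations

from pathlib import Path

# Small files read alongside the weights (assumed list for a ViT checkpoint).
CONFIG_FILES = ["config.json", "preprocessor_config.json"]

# One weight file per framework, as described in the steps above.
WEIGHT_FILES = {"pytorch": "pytorch_model.bin", "tensorflow": "tf_model.h5"}


def files_needed(framework: str) -> list[str]:
    """Return the file names to download for the given framework."""
    return CONFIG_FILES + [WEIGHT_FILES[framework]]


def missing_files(model_dir: str, framework: str) -> list[str]:
    """Return the needed files that are not present in model_dir."""
    folder = Path(model_dir)
    return [name for name in files_needed(framework) if not (folder / name).is_file()]


def download_model(repo_id: str, model_dir: str, framework: str = "pytorch") -> None:
    """Fetch only the needed files into model_dir (requires network access)."""
    # Imported lazily so the checks above work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    snapshot_download(
        repo_id=repo_id,
        local_dir=model_dir,
        allow_patterns=files_needed(framework),
    )
```

A call such as `missing_files('./models/vit-base-patch16-224-in21k', 'pytorch')` returns an empty list when the folder is complete, which makes it a quick check after copying the model to a new server.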
