Clusters and distributed systems

Ways to scale system performance:

- Scale Up: vertical scaling. Improve the performance of a single server, for example by adding CPU or memory. A single server has an upper performance limit, so vertical scaling cannot continue indefinitely.

- Scale Out: horizontal scaling. Add machines and run multiple instances of the service in parallel, that is, build a cluster.

Cluster

Cluster: a single system formed by combining multiple computers to solve a specific problem.

Three types

LB

Load Balancing (LB): the cluster consists of multiple hosts, and each host handles only part of the access requests.

Product categories

Software load balancing

LVS: layer 4 scheduling. Requests are distributed to different hosts according to the destination IP and port number of the user's request.

HAProxy: supports layer 7 scheduling, mainly load balancing for HTTP.

Nginx: supports layer 7 scheduling, mainly load balancing for HTTP, SMTP, POP3, IMAP and similar protocols; it parses only a limited set of layer 7 protocols.

Hardware load balancing

F5 BIG-IP

Citrix Netscaler

A10

HA

High Availability (HA): aims to keep the service continuously available, so that the failure of a single node does not make the service unavailable.

Measuring availability: A = Uptime / (Uptime + Downtime)

1 year = 365 days = 8760 hours

99% means 8760 * 1% = 87.6 hours of downtime per year

99.9% means 8760 * 0.1% = 8.76 hours of downtime per year

99.99% means 8760 * 0.01% = 0.876 hours = 52.6 minutes of downtime per year

99.999% means 8760 * 0.001% = 0.0876 hours = 5.26 minutes of downtime per year

99.9999% means 8760 * 0.0001% = 0.00876 hours = 31.5 seconds of downtime per year
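The downtime figures above follow directly from the availability formula. A minimal Python sketch of the arithmetic, assuming a 365-day year as in the text (the function names are illustrative, not part of any tool):

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, the same year length used in the text


def downtime_hours_per_year(availability_percent: float) -> float:
    """Allowed downtime per year, in hours, for a given availability percentage."""
    return HOURS_PER_YEAR * (100.0 - availability_percent) / 100.0


def availability(uptime: float, downtime: float) -> float:
    """Availability = Uptime / (Uptime + Downtime), as a fraction between 0 and 1."""
    return uptime / (uptime + downtime)


if __name__ == "__main__":
    # Print the downtime budget for each "number of nines" in hours, minutes and seconds.
    for pct in (99.0, 99.9, 99.99, 99.999, 99.9999):
        hours = downtime_hours_per_year(pct)
        print(f"{pct}% -> {hours:.4f} h = {hours * 60:.2f} min = {hours * 3600:.1f} s")
```

Each additional nine divides the yearly downtime budget by ten, which is why the step from four to five nines usually requires automated failover rather than manual recovery.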

product

Keepalived

HPC

High-Performance Computing (HPC): high-performance computing clusters process massive amounts of data and solve complex computational problems.

distributed

Distributed storage: Ceph, GlusterFS, FastDFS, MogileFS

Distributed computing: Hadoop, Spark

Common distributed use cases:

- Distributed applications: services are split by function, using microservices

- Distributed static resources: static resources are placed on different storage clusters

- Distributed data and storage: using a key-value cache system

- Distributed computing: use distributed computing for specific workloads, such as a Hadoop cluster

Comparison

Cluster: the same business system is deployed on multiple servers. In a cluster, every server implements the same functions, and the data and code are identical.

Distributed: a business is split into multiple sub-services, or consists of different services to begin with, deployed on multiple servers. In a distributed system, each server implements different functions, and the data and code differ as well. The functions of all the servers add up to the complete business.

A distributed system improves efficiency by shortening the execution time of a single task, while a cluster improves efficiency by increasing the number of tasks executed per unit of time.

LVS

The VS schedules and forwards each request to an RS according to the request's destination IP, protocol and port, choosing the RS with the configured scheduling algorithm. LVS is built into the kernel; it hooks into the INPUT chain and processes the traffic delivered to INPUT.

Related terms:

VS: Virtual Server, responsible for scheduling

RS: Real Server, the server that really provides services

CIP: Client IP

VIP: Virtual Server IP, the external IP of the VS host

DIP: Director IP, the internal IP of the VS host

RIP: Real Server IP

Operating mode

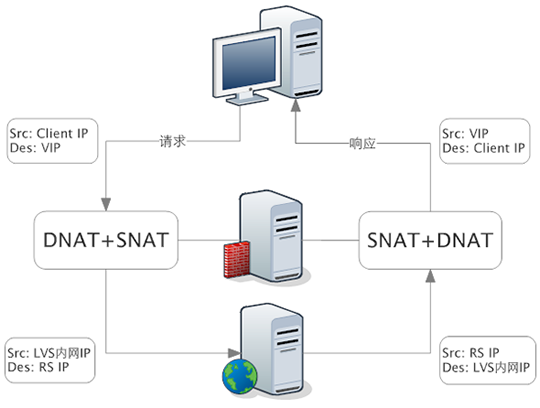

1. LVS-NAT address translation mode

In essence this is DNAT with multiple targets: forwarding is done by rewriting the destination address and destination port of the request to the RIP and port of the selected RS.

Working principle

- The client sends a request to LVS (the load balancer). The source address of the request is CIP (client IP) and the destination address is VIP (the external IP of LVS).

- When LVS receives the request and finds that its destination matches a rule, it selects an RS with the scheduling algorithm, rewrites the destination from VIP to RIP (real server IP), and sends the packet to that RS.

- The RS processes the request and returns the response to LVS.

- LVS rewrites the source IP of the response to the VIP and sends it to the client.

Notes

- RIP and DIP should be on the same network and should use private addresses.

- The default gateway of each RS must point to the DIP of LVS; otherwise the response cannot be delivered to the client.

- Both requests and responses are forwarded by the Director, so the Director can easily become the system bottleneck.

- Port mapping is supported: the destination port of the request can be rewritten.

- The VS must run Linux; the RS can run any operating system.
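The NAT rewriting steps can be modelled in a few lines of Python. This is only a sketch of the address translation, not of the kernel implementation: the `Packet` class and both functions are hypothetical, the addresses are borrowed from the NAT experiment later in these notes, and the backend port 8080 is an assumption chosen to show port mapping.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Illustrative addresses; 8080 below is a hypothetical backend port.
CIP, VIP, RIP = "192.168.10.11", "192.168.10.12", "192.168.20.21"


def nat_request(pkt: Packet, rip: str, rport: int) -> Packet:
    """Director, inbound: rewrite destination VIP:port to RIP:port (DNAT)."""
    return replace(pkt, dst_ip=rip, dst_port=rport)


def nat_response(pkt: Packet, vip: str, vport: int) -> Packet:
    """Director, outbound: rewrite source RIP:port back to VIP:port."""
    return replace(pkt, src_ip=vip, src_port=vport)


request = Packet(CIP, 40000, VIP, 80)
to_rs = nat_request(request, RIP, 8080)  # port mapping 80 -> 8080
# The RS answers by swapping source and destination of what it received.
from_rs = Packet(to_rs.dst_ip, to_rs.dst_port, to_rs.src_ip, to_rs.src_port)
to_client = nat_response(from_rs, VIP, 80)
print(to_rs)
print(to_client)
```

The client only ever sees the VIP, which is why the response has to travel back through the Director and why the RS gateway must point to the DIP.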

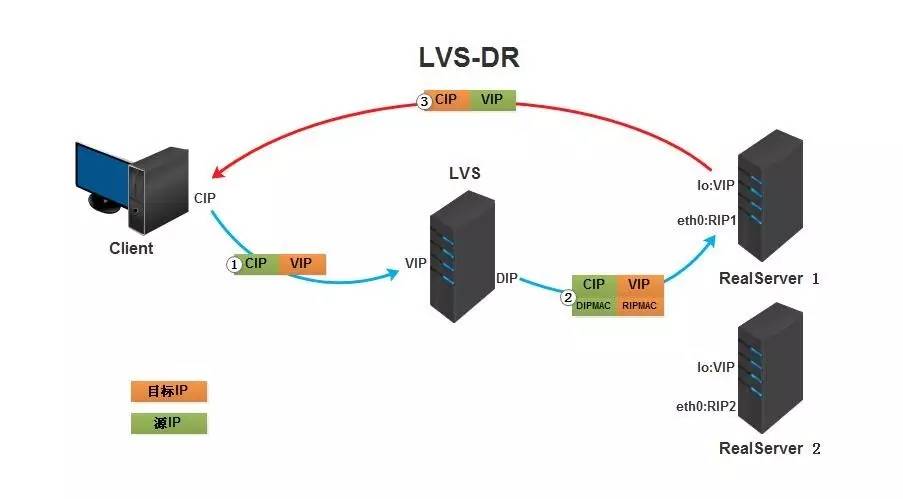

2. LVS-DR direct routing mode

Direct Routing (DR) is the default LVS mode and the most widely used. The request is forwarded by re-encapsulating it with a new MAC header: the source MAC is the MAC of the interface that holds the DIP, and the destination MAC is the MAC of the interface that holds the RIP of the selected RS. The source IP/port and destination IP/port remain unchanged.

Working principle

- The client sends a request to LVS. The source address is CIP and the destination address is VIP.

- When LVS receives the request and finds that its destination matches a rule, it selects an RS with the scheduling algorithm, sets the source MAC to the MAC of its DIP interface and the destination MAC to the MAC of the RIP interface, and sends the frame to that RS.

- The RS accepts the request because the VIP is configured on its lo interface, processes it, and sends the response out through its physical NIC (for example eth0) directly to the client.

Notes

- The Director and every RS are configured with the VIP.

- Make sure the front-end router delivers requests whose destination IP is the VIP to the Director. There are three ways to do this:

  - Statically bind the VIP to the Director's MAC address on the front-end gateway

  - Use the arptables tool on the RS

    - arptables -A IN -d $VIP -j DROP

    - arptables -A OUT -s $VIP -j mangle --mangle-ip-s $RIP

  - Modify kernel parameters on the RS to restrict ARP announcements and ARP responses

    - /proc/sys/net/ipv4/conf/all/arp_ignore

      - 0: the default; reply for any local address configured on any interface

      - 1: reply only if the requested target IP is configured on the interface that received the request

    - /proc/sys/net/ipv4/conf/all/arp_announce

      - 0: the default; any local address configured on any interface may be announced on any network

      - 1: try to avoid announcing addresses that are not in the target's subnet for that interface

      - 2: always use the best local address for the target, so that addresses belonging to other networks are not announced

- The RIP of an RS may be a private or a public address. RIP and DIP are on the same network. The gateway of the RS must not point to the DIP, so that responses do not pass through the Director.

- The RS and the Director must be on the same physical network.

- Requests pass through the Director, but responses do not: the RS sends them directly to the client.

- Port mapping is not supported (ports cannot be modified).

- ip_forward does not need to be enabled.

- Most operating systems can be used on the RS.
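To make the difference from NAT concrete, the following Python sketch models what the Director changes in DR mode. The `Frame` class, the function name and all MAC addresses are hypothetical and exist only for illustration; the IP addresses follow the DR experiment later in these notes.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    src_mac: str
    dst_mac: str
    src_ip: str
    dst_ip: str
    dst_port: int


def dr_forward(frame: Frame, dip_mac: str, rip_mac: str) -> Frame:
    """Director: rewrite only the MAC header; the IP header and port are untouched."""
    return replace(frame, src_mac=dip_mac, dst_mac=rip_mac)


# Hypothetical MAC addresses, for illustration only.
ROUTER_MAC = "aa:aa:aa:aa:aa:01"
DIP_MAC = "aa:aa:aa:aa:aa:02"
RS1_MAC = "aa:aa:aa:aa:aa:03"
CIP, VIP = "192.168.10.11", "192.168.29.100"

incoming = Frame(ROUTER_MAC, DIP_MAC, CIP, VIP, 80)
forwarded = dr_forward(incoming, DIP_MAC, RS1_MAC)
print(forwarded)
```

Because the destination IP is still the VIP when the frame reaches the RS, the RS must own the VIP locally while staying silent about it in ARP, which is what the arp_ignore and arp_announce settings are for.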

3. LVS-TUN IP tunnel mode

The IP header of the request is not modified (source IP is CIP, destination IP is VIP). Instead, a second IP header is added outside the original IP packet (source IP is DIP, destination IP is RIP), and the packet is sent to the selected RS. The RS responds to the client directly (source IP is VIP, destination IP is CIP).

Working principle

- The client sends a request to LVS. The source address is CIP and the destination address is VIP.

- When LVS receives the request and finds that its destination matches a rule, it wraps the client's packet in an additional IP header with source IP DIP and destination IP RIP, and sends the packet to the RS.

- The RS strips the outer header and finds an inner IP header whose destination address is the VIP configured locally on the RS. It processes the request and sends the response out through its physical NIC (for example eth0) directly to the client.

Notes

- RIP and DIP do not have to be on the same physical network. The gateway of the RS generally must not point to the DIP, and the RIP must be able to reach the public network. In other words, cluster nodes can be spread across the Internet. DIP, VIP and RIP can all be public addresses.

- The tunnel interface of the RS must be configured with the VIP, so that the RS accepts the packets sent by the Director and can use the VIP as the source IP of the response.

- The Director forwards to the RS through a tunnel. The outer IP header has source IP DIP and destination IP RIP. The RS builds the response to the client from the inner IP header, so the response has source IP VIP and destination IP CIP.

- Requests pass through the Director, but responses do not: the RS responds on its own.

- Port mapping is not supported.

- The operating system of the RS must support IP tunneling.
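The encapsulation described above can be sketched in Python as an IP packet carried inside another IP packet. The `IPPacket` class, the helper functions and the addresses are hypothetical; a real tunnel is implemented by the kernel's IPIP support, not by application code.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class IPPacket:
    src_ip: str
    dst_ip: str
    payload: Union[str, "IPPacket"]  # application data or an encapsulated packet


def tun_encapsulate(inner: IPPacket, dip: str, rip: str) -> IPPacket:
    """Director: wrap the untouched client packet in an outer DIP -> RIP header."""
    return IPPacket(dip, rip, inner)


def tun_decapsulate(outer: IPPacket) -> IPPacket:
    """RS: strip the outer header and recover the original CIP -> VIP packet."""
    if not isinstance(outer.payload, IPPacket):
        raise ValueError("packet does not carry an encapsulated IP packet")
    return outer.payload


def rs_reply(request: IPPacket, data: str) -> IPPacket:
    """RS: answer from the inner header, so the reply goes VIP -> CIP directly."""
    return IPPacket(request.dst_ip, request.src_ip, data)


# Hypothetical addresses, for illustration only.
CIP, VIP, DIP, RIP = "198.51.100.7", "203.0.113.10", "203.0.113.11", "192.0.2.21"

client_packet = IPPacket(CIP, VIP, "GET /")
outer = tun_encapsulate(client_packet, DIP, RIP)
inner = tun_decapsulate(outer)
reply = rs_reply(inner, "200 OK")
print(outer)
print(reply)
```

The inner packet survives unchanged, which is why the RS needs the VIP configured locally and why ports cannot be remapped in this mode.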

4. LVS-FULLNAT

Address forwarding is performed by rewriting both the source IP and the destination IP of the request:

CIP -> DIP, VIP -> RIP

This mode solves the problem of LVS and RS being in different VLANs. Once LVS and RS no longer have to share a VLAN, multiple LVS hosts can serve multiple RSs, which makes horizontal scaling possible.

Notes

- VIP is a public address; RIP and DIP are private addresses and usually not on the same IP network, so the gateway of the RS generally does not point to the DIP.

- The source address of the request received by the RS is the DIP, so the RS simply responds to the DIP; the Director then forwards the response to the client.

- Both requests and responses pass through the Director.

- Compared with NAT mode, it handles cross-VLAN communication between LVS and RS better.

- Port mapping is supported.
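Since FULLNAT rewrites both addresses, the Director has to remember which client each rewritten connection belongs to. The Python sketch below shows one way to model this with a small connection table; the class, its local port pool starting at 50000 and the addresses are all assumptions made for illustration and do not describe the actual FULLNAT implementation.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class FullNatDirector:
    """Toy model: rewrite CIP -> DIP and VIP -> RIP, and undo both on the way back."""

    def __init__(self, vip: str, dip: str) -> None:
        self.vip = vip
        self.dip = dip
        self._next_port = 50000  # hypothetical local port pool
        # local port on the DIP -> (client IP, client port, virtual service port)
        self._conns: Dict[int, Tuple[str, int, int]] = {}

    def inbound(self, pkt: Packet, rip: str, rport: int) -> Packet:
        local_port = self._next_port
        self._next_port += 1
        self._conns[local_port] = (pkt.src_ip, pkt.src_port, pkt.dst_port)
        return Packet(self.dip, local_port, rip, rport)

    def outbound(self, pkt: Packet) -> Packet:
        # The RS replied to DIP:local_port; look up the original client.
        cip, cport, vport = self._conns[pkt.dst_port]
        return Packet(self.vip, vport, cip, cport)


director = FullNatDirector(vip="203.0.113.10", dip="10.0.1.1")
to_rs = director.inbound(Packet("198.51.100.7", 40000, "203.0.113.10", 80), "10.0.2.21", 8080)
from_rs = Packet(to_rs.dst_ip, to_rs.dst_port, to_rs.src_ip, to_rs.src_port)
to_client = director.outbound(from_rs)
print(to_rs)
print(to_client)
```

Because the RS sees the DIP as the source, ordinary routing brings the reply back to the Director, and the RS gateway no longer needs to point to it.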

Summary and comparison of various working modes

| | NAT | TUN | DR |
| --- | --- | --- | --- |
| RS operating system | any | must support tunneling | must support non-ARP devices |
| RS network | private network | LAN/WAN | LAN |
| Number of RSs | 10-20 | 100 | 100 |
| RS gateway | load balancer | own router | own router |
| Advantage | port mapping | works across a WAN | best performance |
| Disadvantage | the Director easily becomes a bottleneck | requires tunnel support | cannot cross network segments |
| Efficiency | average | high | highest |

Scheduling algorithms

Depending on whether the current load of each RS is taken into account when scheduling, the algorithms fall into two groups: static and dynamic.

Static algorithms

Scheduling is based only on the algorithm itself.

- RR: Round Robin

- WRR: Weighted Round Robin

- SH: Source Hashing. Hashes the source IP address so that requests from the same IP address are always sent to the RS selected the first time, which gives session stickiness (session binding).

- DH: Destination Hashing. The first request for a destination address is scheduled to an RS by round robin; subsequent requests for the same destination address are always forwarded to that RS. The typical use case is load balancing in front of forward proxy caches, such as web caches.
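The static algorithms are easy to model outside the kernel. The Python sketch below is a simplified illustration, not the IPVS code: `wrr` follows the commonly described weighted round-robin loop based on the greatest common divisor of the weights and assumes at least one positive weight, and `sh` uses a CRC32 modulo as a stand-in for the kernel's hash table. A DH sketch would look the same as `sh`, keyed on the destination address instead.

```python
import itertools
import zlib
from functools import reduce
from math import gcd
from typing import Dict, Iterator, List


def rr(servers: List[str]) -> Iterator[str]:
    """Round robin: hand out the servers in order, forever."""
    return itertools.cycle(servers)


def wrr(weights: Dict[str, int]) -> Iterator[str]:
    """Weighted round robin: a server with weight w is picked w times per cycle."""
    names = list(weights)
    step = reduce(gcd, weights.values())
    max_weight = max(weights.values())
    i, current = -1, 0
    while True:
        i = (i + 1) % len(names)
        if i == 0:
            # Start of a new pass: lower the weight threshold, wrapping to the maximum.
            current -= step
            if current <= 0:
                current = max_weight
        if weights[names[i]] >= current:
            yield names[i]


def sh(servers: List[str], client_ip: str) -> str:
    """Source hashing: the same client IP always maps to the same server."""
    return servers[zlib.crc32(client_ip.encode()) % len(servers)]


if __name__ == "__main__":
    print(list(itertools.islice(rr(["rs1", "rs2"]), 4)))
    print(list(itertools.islice(wrr({"rs1": 3, "rs2": 1}), 8)))
    print(sh(["rs1", "rs2"], "192.168.10.11"))
```

None of these functions looks at connection counts, which is exactly what separates the static group from the dynamic algorithms.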

Dynamic algorithms

Scheduling is based mainly on the current load of each RS: the algorithm computes an Overhead value for each RS, and the RS with the smallest Overhead is selected.

- LC: Least Connections, suitable for long-lived connections

- Overhead=activeconns*256+inactiveconns

- WLC: Weighted LC, the default scheduling method

- Overhead=(activeconns*256+inactiveconns)/weight

- SED: Shortest Expected Delay. Servers with a higher weight are preferred for the initial connections; only active connections are counted, inactive connections are ignored

- Overhead=(activeconns+1)*256/weight

- NQ: Never Queue. In the first round, connections are distributed evenly; after that SED is used

- LBLC: Locality-Based LC, a dynamic version of DH. Use case: forward proxies that take load into account, such as web caches

- LBLCR: LBLC with Replication. It solves the load imbalance of LBLC by replicating from heavily loaded RSs to lightly loaded ones; also used for web caches
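The Overhead formulas above translate directly into code. The following Python sketch evaluates them for a list of servers and picks the one with the smallest value; the `RealServer` class and the function names are hypothetical, servers with weight 0 are skipped as an assumption, and `nq_pick` models "Never Queue" as "take an idle server if there is one, otherwise fall back to SED".

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RealServer:
    name: str
    weight: int
    activeconns: int = 0
    inactiveconns: int = 0


def lc_overhead(rs: RealServer) -> float:
    return rs.activeconns * 256 + rs.inactiveconns


def wlc_overhead(rs: RealServer) -> float:
    return (rs.activeconns * 256 + rs.inactiveconns) / rs.weight


def sed_overhead(rs: RealServer) -> float:
    return (rs.activeconns + 1) * 256 / rs.weight


def pick(servers: List[RealServer], overhead: Callable[[RealServer], float]) -> RealServer:
    """Return the server with the smallest Overhead; ties go to the first in the list."""
    candidates = [rs for rs in servers if rs.weight > 0]
    return min(candidates, key=overhead)


def nq_pick(servers: List[RealServer]) -> RealServer:
    """Never Queue: use an idle server immediately, otherwise schedule by SED."""
    for rs in servers:
        if rs.weight > 0 and rs.activeconns == 0:
            return rs
    return pick(servers, sed_overhead)


if __name__ == "__main__":
    idle = [RealServer("rs1", weight=1), RealServer("rs2", weight=3)]
    print("WLC picks", pick(idle, wlc_overhead).name)
    print("SED picks", pick(idle, sed_overhead).name)
```

With no connections at all, WLC sees an Overhead of 0 for every server and simply takes the first one, while SED already prefers the higher weight. That is the "high initial connection weight priority" mentioned for SED.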

Scheduling algorithms available in kernel 4.15 and later

FO (Weighted Fail Over): traverses the list of real servers associated with the virtual service and schedules to the real server with the highest weight that is not overloaded (the IP_VS_DEST_F_OVERLOAD flag is not set).

OVF (Overflow-connection): based on the number of active connections and the weight of each real server. New connections are scheduled to the real server with the highest weight until its number of active connections exceeds its weight; after that they are scheduled to the real server with the next highest weight. The algorithm traverses the list of real servers associated with the virtual service and picks the available real server with the highest weight.

An available real server must meet all of the following conditions:

- It is not overloaded (the IP_VS_DEST_F_OVERLOAD flag is not set)

- Its current number of active connections is less than its weight

- Its weight is not zero
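The selection rules of FO and OVF can be written down as two small filters. This Python sketch follows the conditions exactly as listed above; the `RealServer` class and the boolean `overloaded` field (standing in for the IP_VS_DEST_F_OVERLOAD flag) are hypothetical, and the kernel code may differ in details.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RealServer:
    name: str
    weight: int
    activeconns: int = 0
    overloaded: bool = False  # stands in for the IP_VS_DEST_F_OVERLOAD flag


def fo_pick(servers: List[RealServer]) -> Optional[RealServer]:
    """FO: the highest-weight server that is not overloaded."""
    candidates = [rs for rs in servers if not rs.overloaded and rs.weight > 0]
    return max(candidates, key=lambda rs: rs.weight, default=None)


def ovf_pick(servers: List[RealServer]) -> Optional[RealServer]:
    """OVF: the highest-weight server that still has room below its weight."""
    candidates = [
        rs for rs in servers
        if not rs.overloaded and rs.weight > 0 and rs.activeconns < rs.weight
    ]
    return max(candidates, key=lambda rs: rs.weight, default=None)


if __name__ == "__main__":
    primary = RealServer("primary", weight=10, activeconns=10)
    backup = RealServer("backup", weight=5)
    print("FO picks", fo_pick([primary, backup]).name)
    print("OVF picks", ovf_pick([primary, backup]).name)
```

FO therefore behaves like an active/standby setup driven by weights, while OVF fills the servers one after another in order of weight.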

related software

Package: ipvsadm

Unit File: ipvsadm.service

Main program: /usr/sbin/ipvsadm

Rule saving tool: /usr/sbin/ipvsadm-save

Rule reload tool: /usr/sbin/ipvsadm-restore

Configuration file: /etc/sysconfig/ipvsadm-config

ipvs scheduling rule file: /etc/sysconfig/ipvsadm

ipvsadm command

Manage cluster services

Add, modify

ipvsadm -A|E -t|u|f service-address [-s scheduler] [-p [timeout]]

-A: add a cluster service

-E: edit a cluster service

-t: TCP service, VIP:TCP_PORT

-u: UDP service, VIP:UDP_PORT

-f: firewall mark (fwmark), an integer

-s scheduler: the scheduling algorithm of the cluster service; the default is wlc

-p [timeout]: enable persistent connections. Within the timeout, requests from the same client IP are forwarded to the same real server.

Delete

ipvsadm -D -t|u|f service-address

Manage the RSs of a cluster service

Add, modify

ipvsadm -a|e -t|u|f service-address -r server-address [-g|i|m] [-w weight]

-a: add a real server

-e: edit a real server

-g: gateway, DR mode (the default)

-i: ipip, TUN mode

-m: masquerade, NAT mode

-w weight: the weight of the real server

Delete

ipvsadm -d -t|u|f service-address -r server-address

Clear all defined rules

ipvsadm -C

Zero the counters

ipvsadm -Z [-t|u|f service-address]

View

ipvsadm -L|l [options]

options:

--numeric, -n: print addresses and ports as numbers

--exact: print exact counter values instead of rounded ones

--connection, -c: print the current IPVS connections

--stats: print statistics

--rate: print rate information

ipvs rules

/proc/net/ip_vs

ipvs connection

/proc/net/ip_vs_conn

Save: saving to /etc/sysconfig/ipvsadm is recommended

ipvsadm-save > path

ipvsadm -S > path

systemctl stop ipvsadm.service # the rules are saved automatically to /etc/sysconfig/ipvsadm

Reload

ipvsadm-restore < path

systemctl start ipvsadm.service # the rules in /etc/sysconfig/ipvsadm are loaded automatically

# View the kernel's LVS support

[root@wenzi ~]#grep -i -C 10 ipvs /boot/config-4.18.0-193.el8.x86_64

...

# IPVS transport protocol load balancing support

# Supported protocols

CONFIG_IP_VS_PROTO_TCP=y

CONFIG_IP_VS_PROTO_UDP=y

CONFIG_IP_VS_PROTO_AH_ESP=y

CONFIG_IP_VS_PROTO_ESP=y

CONFIG_IP_VS_PROTO_AH=y

CONFIG_IP_VS_PROTO_SCTP=y

# IPVS scheduler

# Scheduling algorithms

CONFIG_IP_VS_RR=m

CONFIG_IP_VS_WRR=m

CONFIG_IP_VS_LC=m

CONFIG_IP_VS_WLC=m

CONFIG_IP_VS_FO=m

CONFIG_IP_VS_OVF=m

CONFIG_IP_VS_LBLC=m

CONFIG_IP_VS_LBLCR=m

CONFIG_IP_VS_DH=m

CONFIG_IP_VS_SH=m

# CONFIG_IP_VS_MH is not set

CONFIG_IP_VS_SED=m

CONFIG_IP_VS_NQ=m

# IPVS SH scheduler

CONFIG_IP_VS_SH_TAB_BITS=8

# IPVS MH scheduler

CONFIG_IP_VS_MH_TAB_INDEX=12

...

NAT experiment

| Host | IP | GW |
| --- | --- | --- |
| Client | 192.168.10.11/24 | none |
| LVS | 192.168.10.12/24 (VIP), 192.168.20.1/24 (DIP) | none |
| RS1 | 192.168.20.21/24 | 192.168.20.1 |
| RS2 | 192.168.20.22/24 | 192.168.20.1 |

Install httpd on RS1 and RS2 to test the effect of LVS.

Install ipvsadm on the LVS host.

# Install ipvsadm on the LVS host

yum -y install ipvsadm

# Check whether IPv4 forwarding is enabled: 0 means disabled, 1 means enabled

sysctl net.ipv4.ip_forward

# Enable IPv4 forwarding

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

# Apply the configuration

sysctl -p

# Configure the LVS load balancing policy

# -A adds a virtual service, -t specifies a TCP service (VIP:port), -s specifies the scheduling algorithm (rr)

ipvsadm -A -t 192.168.10.12:80 -s rr

# -a adds a real server, -t specifies the TCP service, -r specifies the real server, -m selects NAT mode

ipvsadm -a -t 192.168.10.12:80 -r 192.168.20.21:80 -m

ipvsadm -a -t 192.168.10.12:80 -r 192.168.20.22:80 -m

# View the configured policy

ipvsadm -Ln

Access LVS from the Client host

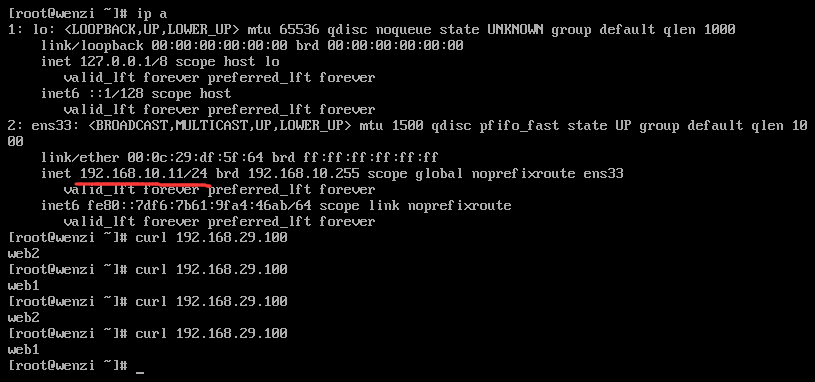

DR experiment

| Host | IP | GW |
| --- | --- | --- |
| Client | 192.168.10.11/24 | 192.168.10.1 |
| Router | 192.168.10.1/24, 192.168.29.141/24 | |
| LVS | lo: VIP 192.168.29.100/32; 192.168.29.142/24 | 192.168.29.141 |
| RS1 | lo: VIP 192.168.29.100/32; 192.168.29.143/24 | 192.168.29.141 |
| RS2 | lo: VIP 192.168.29.100/32; 192.168.29.149/24 | 192.168.29.141 |

Router

# Enable IPv4 forwarding (the key needs the value 1, and sysctl -p applies it)

[root@wenzi ~]#echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

[root@wenzi ~]#sysctl -p

RS1

[root@RS1 ~]#yum -y install httpd

[root@RS1 ~]#echo "web1" > /var/www/html/index.html

[root@RS1 ~]#echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@RS1 ~]#echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

[root@RS1 ~]#echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@RS1 ~]#echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

# Configure the VIP on the lo interface

[root@RS1 ~]#ifconfig lo:1 192.168.29.100/32

[root@RS1 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.29.100/0 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:05:13:8b brd ff:ff:ff:ff:ff:ff

inet 192.168.29.143/24 brd 192.168.29.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::5433:5ac9:62bb:cf34/64 scope link noprefixroute

valid_lft forever preferred_lft forever

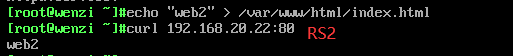

RS2

[root@RS2 ~]#yum -y install httpd

[root@RS2 ~]#echo "web2" > /var/www/html/index.html

[root@RS2 ~]#echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@RS2 ~]#echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

[root@RS2 ~]#echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@RS2 ~]#echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

# Configure the VIP on the lo interface

[root@RS2 ~]#ifconfig lo:1 192.168.29.100/32

[root@RS2 ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.29.100/0 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:2b:af:56 brd ff:ff:ff:ff:ff:ff

inet 192.168.29.149/24 brd 192.168.29.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::10a:4a5a:dfca:7a85/64 scope link noprefixroute

valid_lft forever preferred_lft forever

LVS

# Configure the VIP on the lo interface

[root@LVS ~]#ifconfig lo:1 192.168.29.100/32

[root@LVS ~]#ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.29.100/0 scope global lo:1

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:ea:07:84 brd ff:ff:ff:ff:ff:ff

inet 192.168.29.142/24 brd 192.168.29.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::9648:92b:c378:fddf/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@LVS ~]#ipvsadm -A -t 192.168.29.100:80 -s rr

[root@LVS ~]#ipvsadm -a -t 192.168.29.100:80 -r 192.168.29.143 -g

[root@LVS ~]#ipvsadm -a -t 192.168.29.100:80 -r 192.168.29.149 -g

[root@LVS ~]#ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.29.100:80 rr

-> 192.168.29.143:80 Route 1 0 0

-> 192.168.29.149:80 Route 1 0 0

Client test

LVS high availability

When the Director is unavailable

If the Director fails, the entire system becomes unavailable.

Solution:

Make the Director highly available with Keepalived or heartbeat/corosync.

When an RS is unavailable

If an RS fails, the Director still schedules requests to it.

Solution:

The Director checks the health of each RS, disables an RS when the check fails, and enables it again when the check succeeds.

Tools: Keepalived, heartbeat/corosync, ldirectord.

Detection methods: ICMP (network layer), port probing (transport layer), requesting a key resource (application layer)
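As an illustration of the transport-layer detection method, the Python sketch below probes a TCP port and derives which real servers should stay enabled. It is a minimal sketch, not how Keepalived or ldirectord are implemented; the function names and the one-second timeout are assumptions.

```python
import socket
from typing import Callable, Dict, Iterable, Tuple

Server = Tuple[str, int]  # (RIP, port)


def tcp_check(host: str, port: int, timeout: float = 1.0) -> bool:
    """Transport-layer probe: healthy if a TCP connection can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def refresh_pool(
    servers: Iterable[Server],
    check: Callable[[str, int], bool] = tcp_check,
) -> Dict[Server, bool]:
    """Run the check against every RS and report which ones should stay enabled."""
    return {(host, port): check(host, port) for host, port in servers}


if __name__ == "__main__":
    # RS addresses taken from the DR experiment above.
    pool = [("192.168.29.143", 80), ("192.168.29.149", 80)]
    for server, healthy in refresh_pool(pool).items():
        print(server, "enabled" if healthy else "disabled")
```

A real health checker would run this periodically and remove or re-add the RS in the IPVS table based on the result, typically after several consecutive failures rather than a single one.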