In today's article, we mainly focus on the following topics:

Why does hyper-heterogeneous computing need an open ecosystem?

Should an open ecosystem be defined by hardware or software?

What kind of ecosystem counts as open?

1 Processor type: from CPU to ASIC

1.1 CPU Instruction Set Architecture (ISA)

The ISA (Instruction Set Architecture) is the part of computer architecture that is visible to programming, as distinct from computer organization and implementation. The ISA defines the instruction set, data types, registers, addressing modes, memory management, the I/O model, and so on.

The CPU is Turing complete and runs autonomously: it fetches the instruction stream from instruction memory, decodes each instruction, and then executes it.

Executing an instruction involves loading data (Load), computing on it, and storing the result (Store).
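The fetch, decode, and execute cycle described above can be sketched as a toy interpreter. This is a minimal illustration under stated assumptions: the four opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the register count, and the function name `run` are all hypothetical and do not correspond to any real ISA.

```python
# Toy instruction-stream-driven machine (hypothetical ISA, illustration only).

def run(program, memory):
    """Fetch, decode, and execute instructions until HALT; return memory."""
    regs = [0] * 4   # small register file
    pc = 0           # program counter
    while True:
        op, *args = program[pc]   # fetch the instruction and decode it
        pc += 1
        if op == "LOAD":          # LOAD rd, addr: memory -> register
            rd, addr = args
            regs[rd] = memory[addr]
        elif op == "ADD":         # ADD rd, rs1, rs2: compute in registers
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "STORE":       # STORE rs, addr: register -> memory
            rs, addr = args
            memory[addr] = regs[rs]
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {op}")


program = [
    ("LOAD", 0, 0),    # r0 = memory[0]
    ("LOAD", 1, 1),    # r1 = memory[1]
    ("ADD", 2, 0, 1),  # r2 = r0 + r1
    ("STORE", 2, 2),   # memory[2] = r2
    ("HALT",),
]
result = run(program, [2, 3, 0])
print(result)  # [2, 3, 5]
```

The point of the sketch is that nothing outside the machine drives it: the program counter walks the instruction stream, and every step is a load, a computation, or a store.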

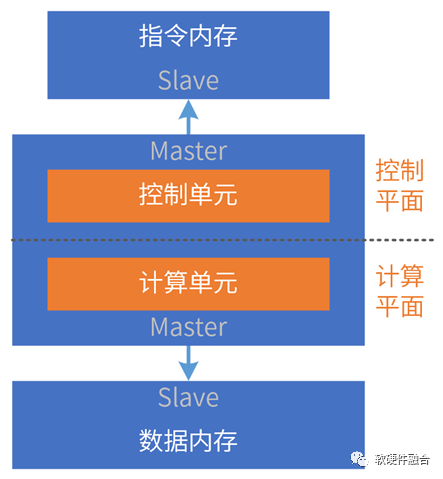

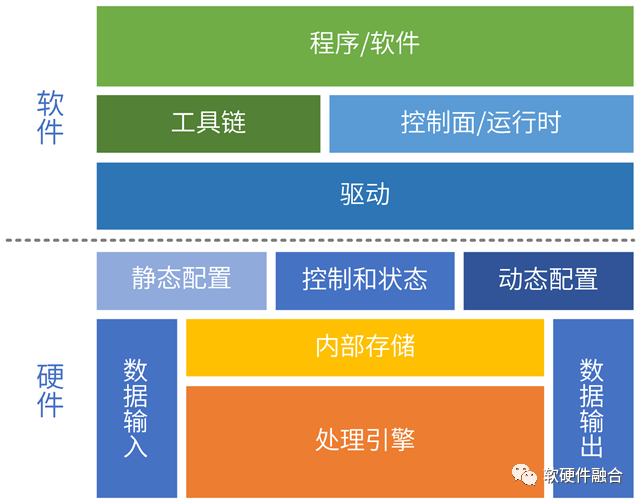

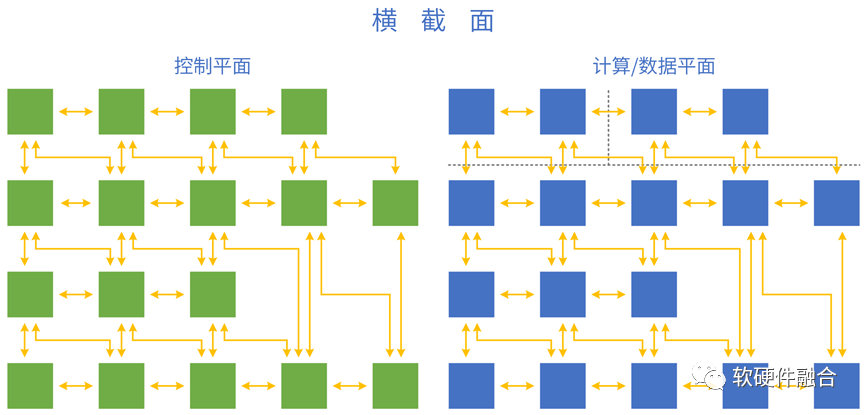

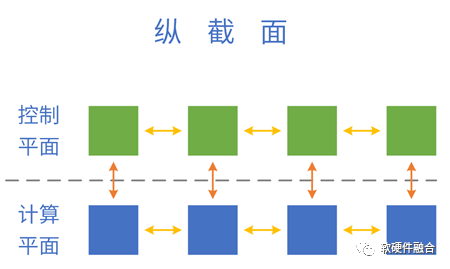

We can roughly divide a processor into two parts: the control plane and the computing plane.

A CPU is a processing engine in which the instruction stream drives computation.

1.2 GPU Architecture (from the CPU's Perspective)

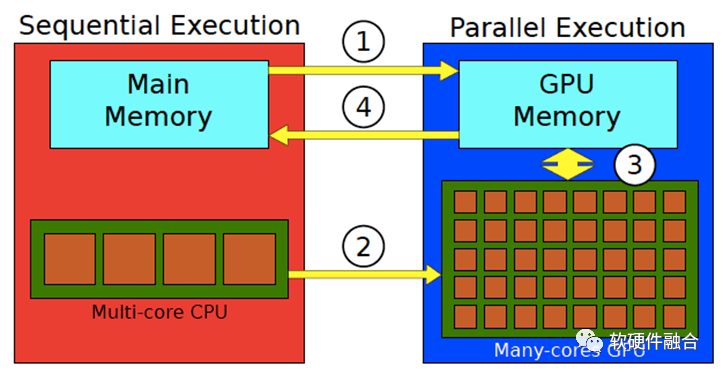

Architecture concerns the "interface" between hardware and software, while microarchitecture concerns the specific implementation. GPU architecture therefore usually refers to the GPU's "interface" as seen from the CPU. From the CPU's perspective, GPU processing proceeds as follows:

The CPU prepares the data and stores it in CPU memory;

The data to be processed is copied from CPU memory to GPU memory (step ① in the figure);

The CPU configures and launches the GPU kernel, instructing the GPU to start work (step ②);

The GPU cores execute in parallel to process the prepared data (step ③);

When processing completes, the result is copied back to CPU memory (step ④);

The CPU performs any subsequent processing on the GPU's results.
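The flow above can be mimicked in plain Python. This is only a simulation under stated assumptions: a thread pool stands in for the GPU cores, ordinary lists stand in for CPU and GPU memory, and the names `kernel` and `offload` are hypothetical. No real GPU API is used.

```python
from concurrent.futures import ThreadPoolExecutor


def kernel(x):
    """Per-element work that each simulated GPU core performs."""
    return x * x


def offload(host_data, num_cores=4):
    """Simulate steps 1 to 4 of the CPU-side view of GPU processing."""
    device_mem = list(host_data)                       # step 1: copy host -> device
    with ThreadPoolExecutor(max_workers=num_cores) as cores:
        # step 2: the host "launches" the kernel;
        # step 3: the workers process the elements in parallel
        device_out = list(cores.map(kernel, device_mem))
    return list(device_out)                            # step 4: copy device -> host


host_data = [1, 2, 3, 4]      # the CPU prepares the data
result = offload(host_data)
total = sum(result)           # the CPU post-processes the result
print(result, total)          # [1, 4, 9, 16] 30
```

Even in this toy form, the two copies and the launch are explicit host-side actions, which is what "GPU architecture as seen from the CPU" refers to.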

1.3 ASIC dedicated processing engine

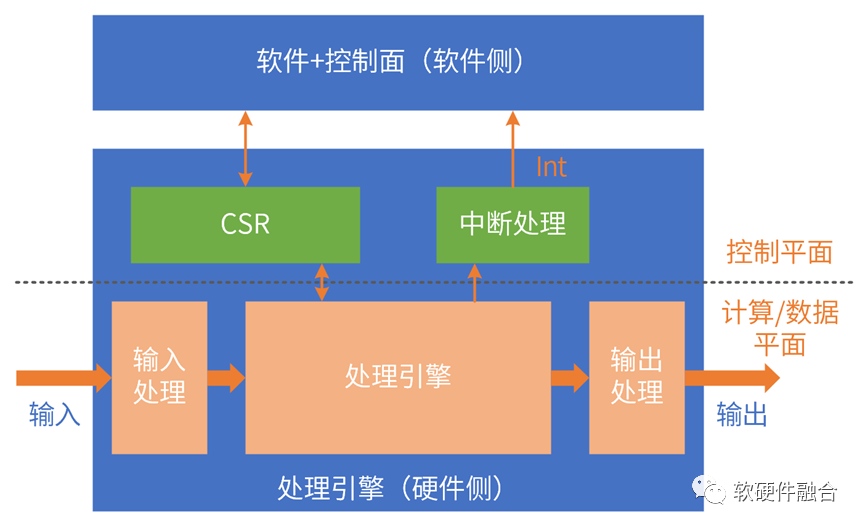

The functional logic of an ASIC is completely fixed: the driver controls the hardware by reading and writing CSRs (control and status registers) and configurable table entries.

The scenarios that ASICs cover are narrow yet numerous; even within the same scenario, implementations differ between vendors. ASIC scenarios are fragmented, and there is essentially no ecosystem.

Like a GPU, an ASIC still needs the CPU in order to operate:

Data input: once the data is ready in memory, the CPU controls the input logic of the ASIC engine and moves the data from memory to the processing engine;

ASIC operation control: the CPU handles CSRs, configurable table entries, interrupts, and so on;

Data output: the CPU controls the output logic of the ASIC engine and moves the data from the engine back to memory, where it waits for subsequent processing.

An ASIC is a processing engine in which the data flow drives computation.
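The three CPU responsibilities above (data input, operation control through CSRs, data output) can be sketched against a simulated fixed-function engine. Everything here is an assumption made for illustration: the `ToyChecksumAsic` class, its register offsets, and the `driver_checksum` function are hypothetical and model no real device.

```python
class ToyChecksumAsic:
    """Simulated fixed-function engine: sums input bytes modulo 256."""

    # Hypothetical CSR offsets
    CSR_CTRL = 0x00    # write 1 to start processing
    CSR_STATUS = 0x04  # reads 1 when processing is done
    CSR_LEN = 0x08     # number of input bytes to process

    def __init__(self):
        self.csr = {self.CSR_CTRL: 0, self.CSR_STATUS: 0, self.CSR_LEN: 0}
        self.in_buf = b""
        self.out_buf = 0

    def write_csr(self, offset, value):
        self.csr[offset] = value
        if offset == self.CSR_CTRL and value == 1:
            self._run()

    def read_csr(self, offset):
        return self.csr[offset]

    def _run(self):
        # The engine's logic is fixed; software can only configure and start it.
        n = self.csr[self.CSR_LEN]
        self.out_buf = sum(self.in_buf[:n]) % 256
        self.csr[self.CSR_STATUS] = 1


def driver_checksum(asic, data):
    """CPU-side driver: feed data in, control via CSRs, read the result out."""
    asic.in_buf = bytes(data)                     # data input
    asic.write_csr(asic.CSR_LEN, len(data))       # operation control: configure
    asic.write_csr(asic.CSR_CTRL, 1)              # operation control: start
    while asic.read_csr(asic.CSR_STATUS) != 1:    # poll (real hardware may interrupt)
        pass
    return asic.out_buf                           # data output


print(driver_checksum(ToyChecksumAsic(), [1, 2, 3]))  # 6
```

Note that the driver never changes what the engine computes; it only moves data and sets registers, which is why each such engine needs its own driver and offers little to build an ecosystem on.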

1.4 DSA field-specific architecture

DSA is a callback based on ASIC. It has a certain programmability, covers more scenarios, and has the same performance as ASIC.

DSA classic case:

-

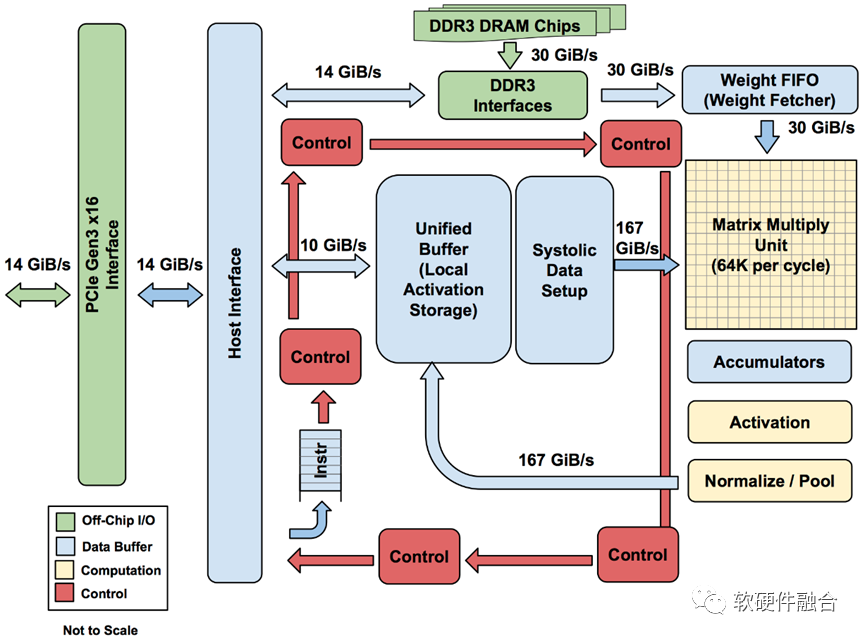

AI-DSA, such as Google TPU;

-

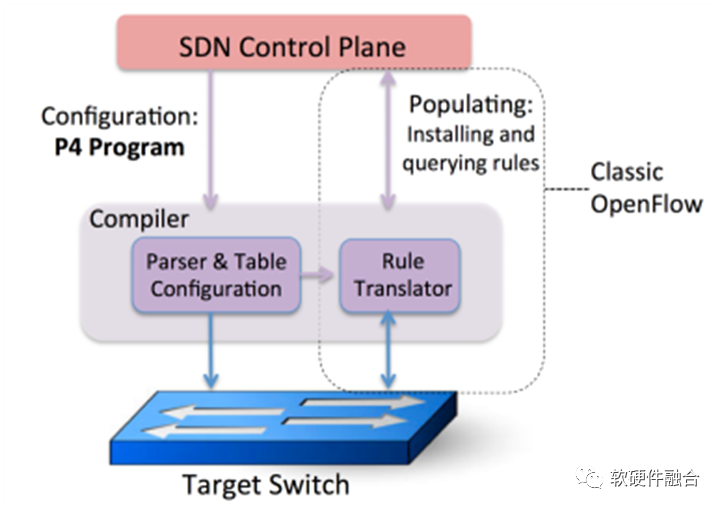

Network DSA, such as Intel Barefoot P4-DSA network switching chip.

1.5 Summary: From CPU to ASIC, the architecture is becoming more and more fragmented

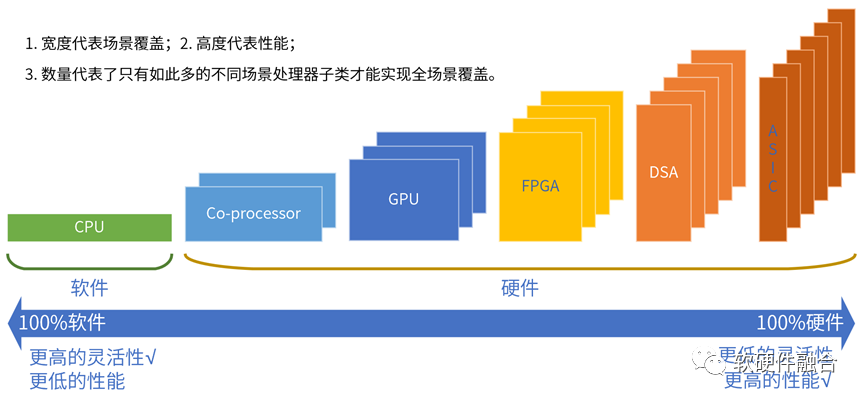

Instructions are the medium between software and hardware, and instruction complexity (the computational density per instruction) determines how decoupled software and hardware are in a system.

By instruction complexity, typical processor platforms can be roughly divided into CPU, coprocessor, GPU, FPGA, DSA, and ASIC.

Everything in the world is composed of elementary particles; likewise, complex processing is composed of basic computations.

The higher the instruction complexity, the narrower the range of scenarios a single processor engine covers, and the more varieties of processor engine there will be.

From CPU to ASIC, processor engines are becoming more and more fragmented, and it is becoming more and more difficult to build an ecosystem.

2 Computing Architecture: From Heterogeneous to Hyper-Heterogeneous

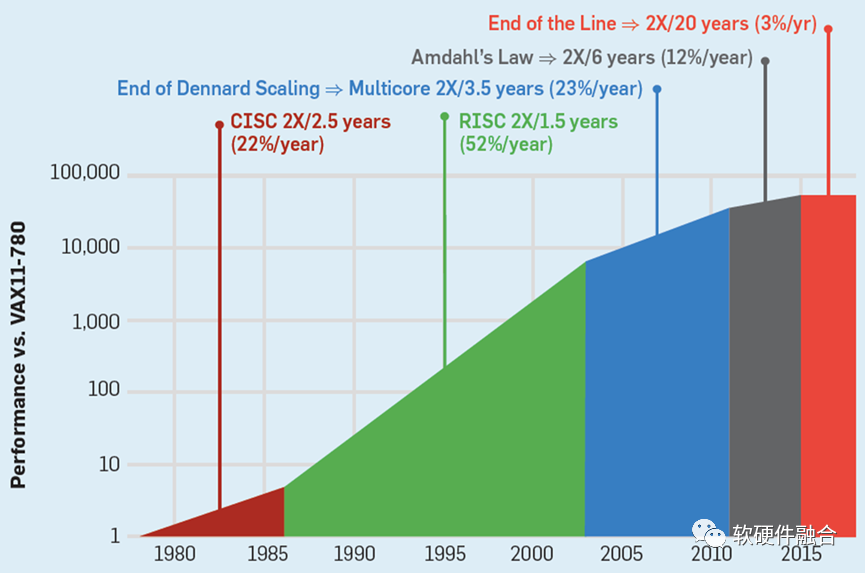

2.1 CPU performance bottleneck, triggering a chain reaction

In complex computing scenarios such as cloud computing, edge computing, and some super terminals, flexibility matters far more than performance.

The CPU offers good general-purpose flexibility. As long as it meets the performance requirements, the CPU remains the best choice for complex computing scenarios.

As demand for computing power keeps rising, various heterogeneous acceleration methods have to be used to improve performance.

Practice has shown that in complex computing scenarios, general-purpose flexibility must not be sacrificed for performance (the implication being that many current technical solutions do sacrifice it).

2.2 Problems Existing in Heterogeneous Computing

The challenge of complex computing: the more complex the system, the more flexible the processor must be; the greater the performance challenge, the more customized the accelerator must be.

The fundamental contradiction is that a single processor cannot deliver both performance and flexibility; however hard we try to balance the two, we only treat the symptoms, not the root cause.

The xPU in CPU+xPU heterogeneous computing determines the performance/flexibility characteristics of the entire system:

GPUs are more flexible, but their performance efficiency is not extreme enough;

DSAs perform well but are inflexible, which makes it hard to meet the flexibility requirements of complex computing scenarios. Example: AI has proven hard to deploy in practice;

FPGAs have high power consumption and cost, require custom development, and have few deployments;

ASIC functions are completely fixed, which makes it hard to adapt to flexible and complex computing scenarios.

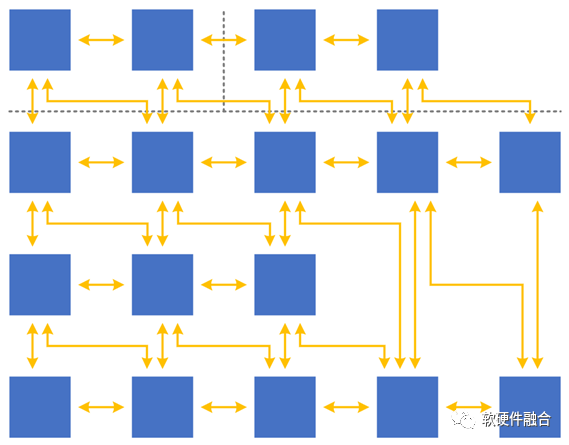

Heterogeneous computing also suffers from the problem of computing islands:

Each heterogeneous computing solution targets one field or scenario, and interaction between fields is difficult;

A server has limited physical space and cannot hold many physical accelerator cards, so these acceleration solutions need to be integrated.

It should be emphasized that integration is not simple stitching together, but architectural restructuring.

2.3 Preconditions for Hyper-Heterogeneity: Complex Systems and Ultra-Large Scale

Basic features:

① Ultra-large-scale computing clusters;

② Complex macro systems composed of layered, partitioned components (subsystems).

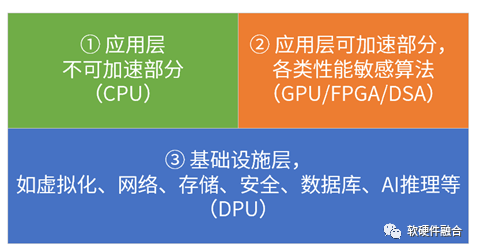

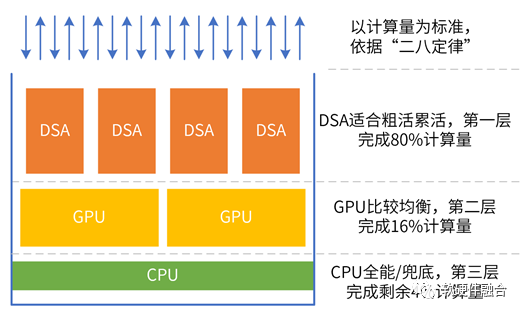

The complexity of a single server's macro system, together with ultra-large-scale cloud and edge computing, makes the 80/20 rule pervasive in these systems. Tasks can therefore settle into layers: relatively fixed tasks sink to the infrastructure layer, relatively elastic tasks go to the elastic acceleration part, and everything else stays on the CPU (the CPU as catch-all).

2.4 From Heterogeneous Parallelism to Hyper-Heterogeneous Parallelism

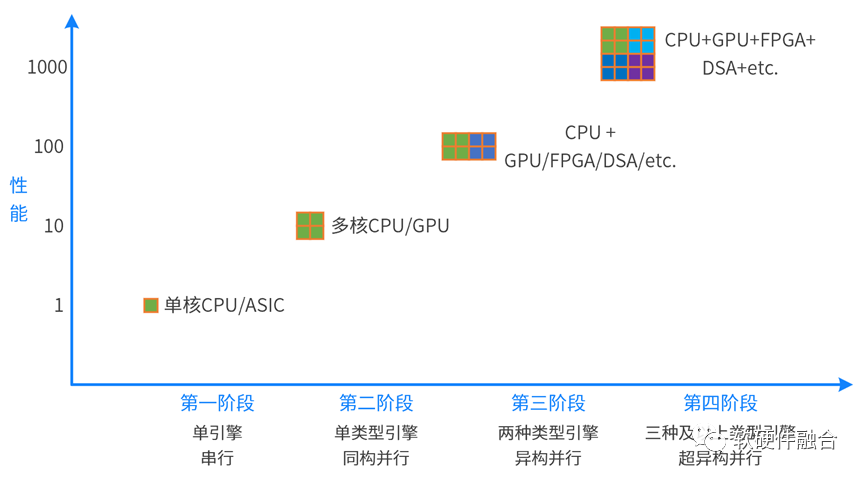

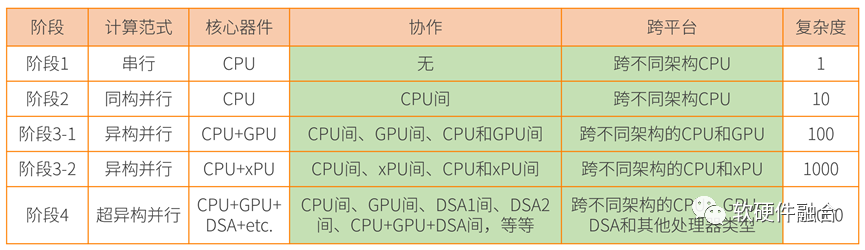

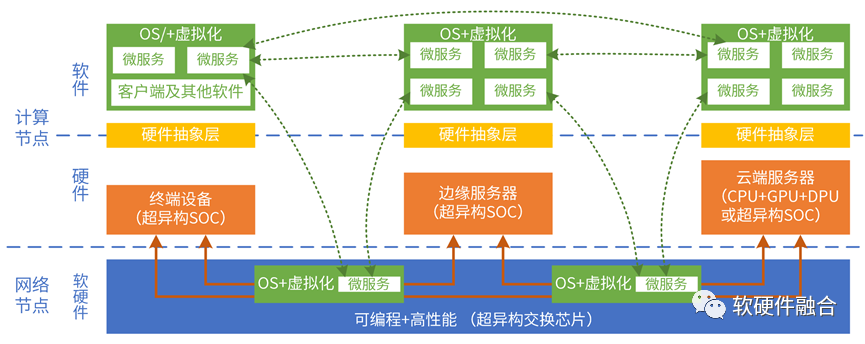

Computing has moved from single-core serial to multi-core parallel, and then from homogeneous parallel to heterogeneous parallel. Next, it needs to move from heterogeneous parallelism to hyper-heterogeneous parallelism.

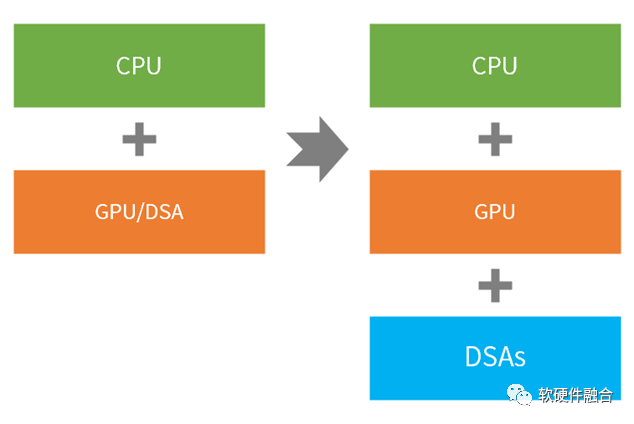

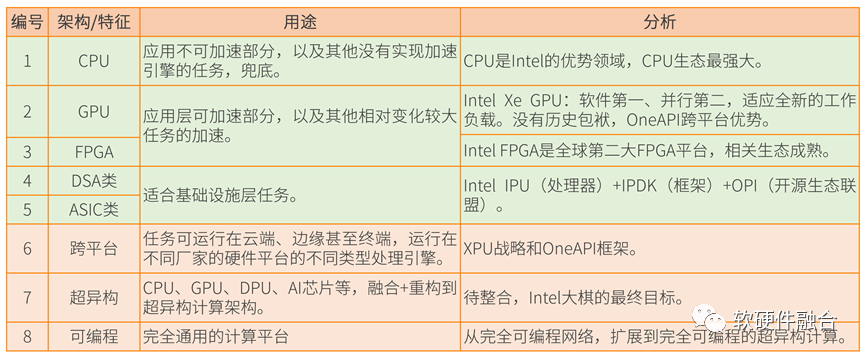

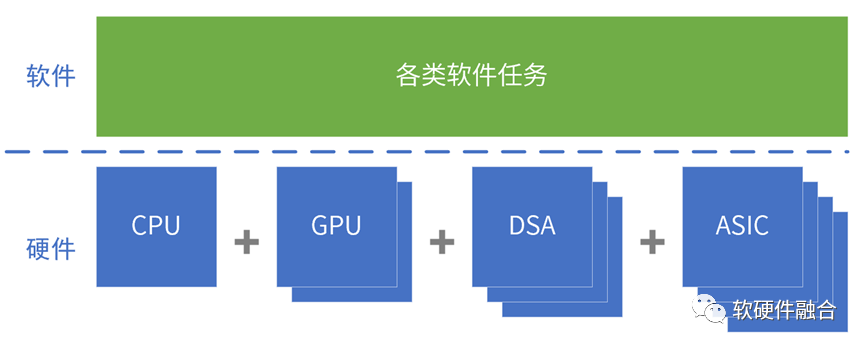

Heterogeneous computing combines two types of processing engine, CPU+xPU, while hyper-heterogeneous computing combines three: CPU+GPU+DSA.

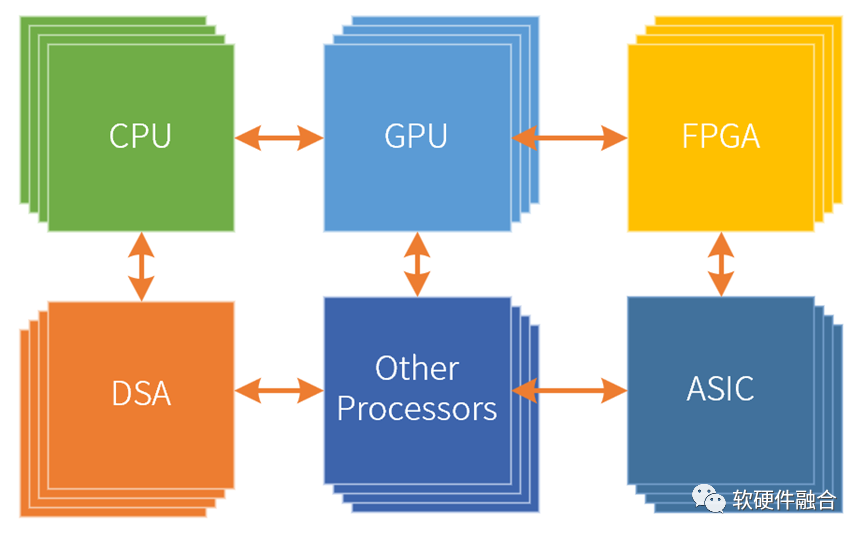

Hyper-heterogeneous computing is not simple integration. It fuses and restructures multiple heterogeneous computing systems so that data flows fully and flexibly between the different processor types, forming a unified hyper-heterogeneous macro system.

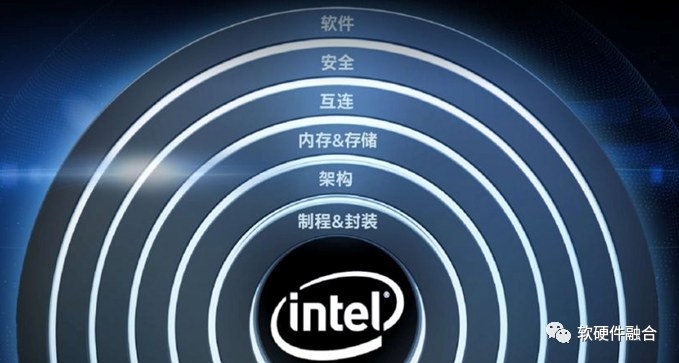

2.5 Intel: Hyper-Heterogeneous Computing, XPU, and oneAPI

In 2019, Intel proposed the concept of hyper-heterogeneous computing; so far, Intel has no product that fully embodies the concept.

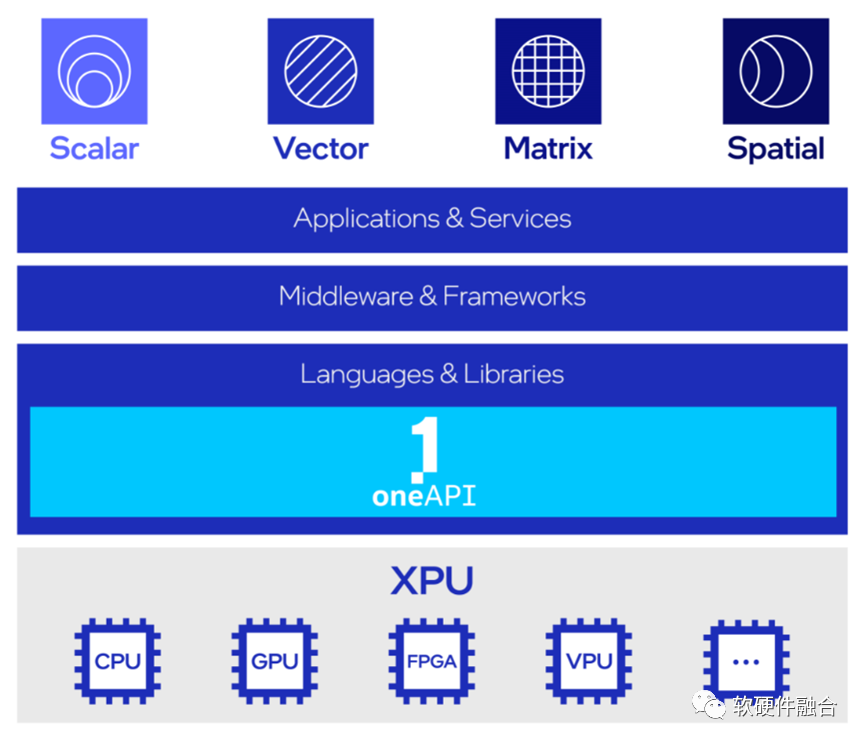

The basic engine of hyper-heterogeneous computing is the XPU, a combination of multiple architectures including CPUs, GPUs, FPGAs, and other accelerators.

oneAPI is an open-source, cross-platform programming framework. It sits on top of the different XPU processors and provides a consistent programming interface so that applications can be reused across platforms.

2.6 Analysis of Intel's Hyper-Heterogeneous Computing

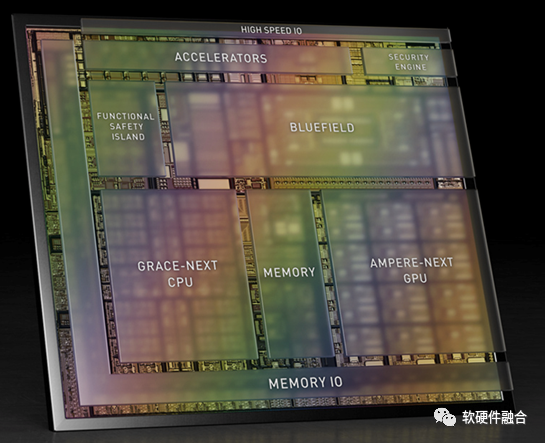

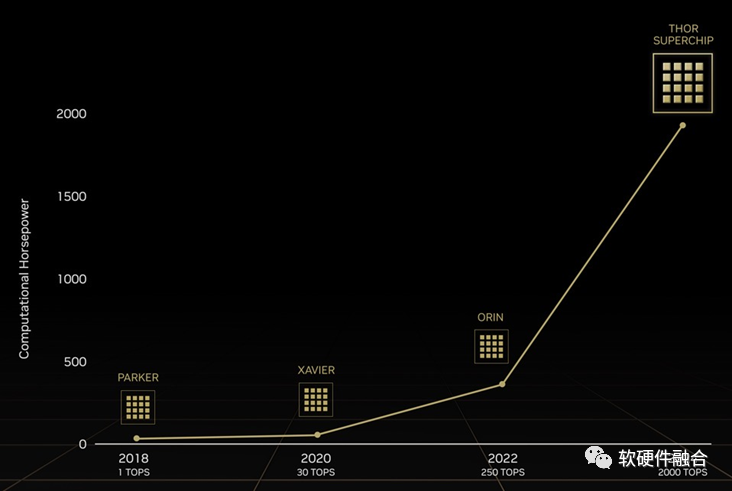

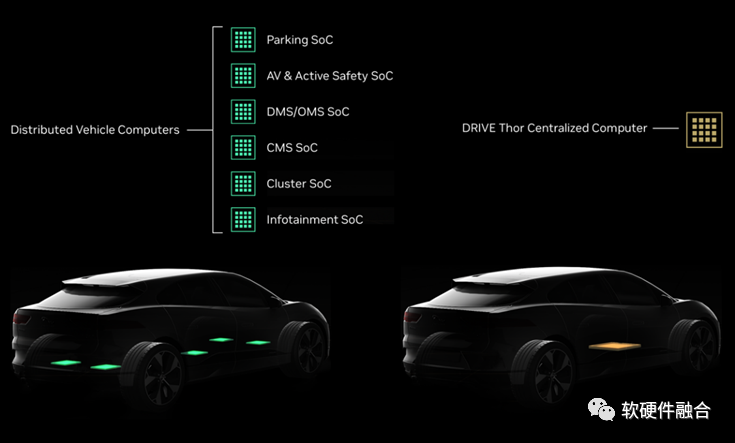

2.7 NVIDIA's Autonomous-Driving Chip: Thor

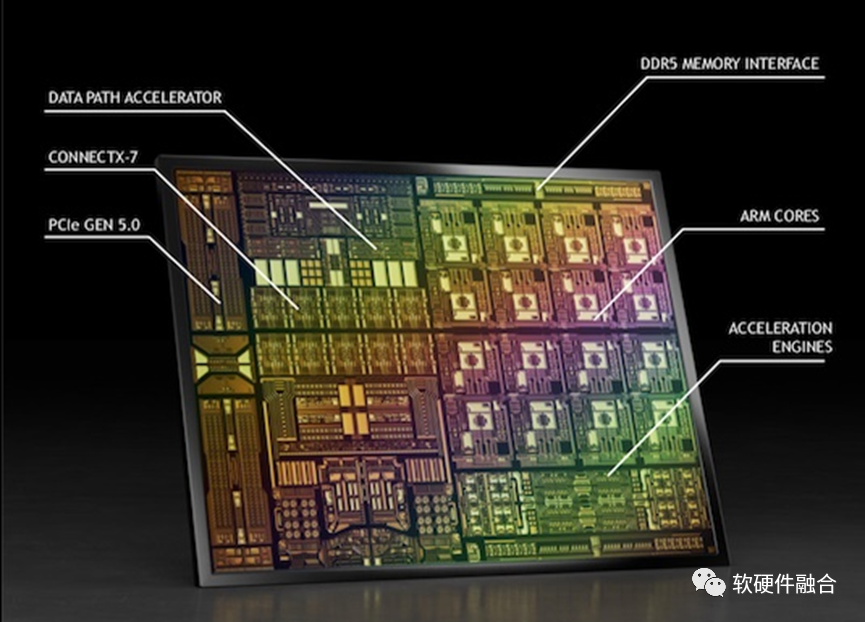

(The picture above is a schematic of the Atlan architecture. Atlan and Thor share the same architecture but differ in performance.)

NVIDIA's autonomous-driving chip Thor consists of three parts, CPU+GPU+DPU, in a data center style architecture; it is a hyper-heterogeneous computing chip with up to 2000 TFLOPS of computing power.

Thor consolidates the functions of what were traditionally multiple DCUs (SoCs) into a single hyper-heterogeneous processing chip.

2.8 Why now?

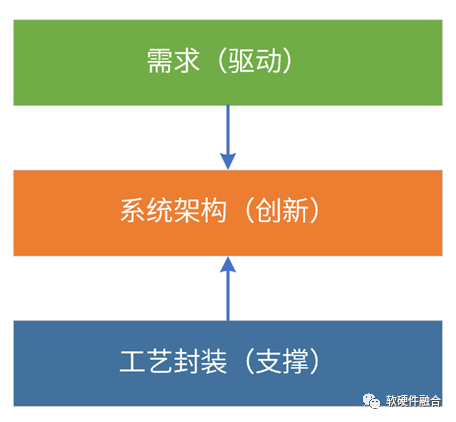

First, demand. New software applications keep emerging, with a new hot spot roughly every two years, and existing hot technologies are still evolving rapidly. The metaverse is seen as the next form of the Internet after the Internet and the mobile Internet; delivering a metaverse-level experience would require a 1000-fold increase in computing power.

Second, process and packaging support. Process and packaging technology keeps improving: process nodes are below 10 nm, and chips are moving from 2D to 3D to 4D. Chiplets make it possible to build very large systems, an order of magnitude larger at the single-chip level. The larger the system, the more pronounced the advantages of hyper-heterogeneity.

Finally, system architecture must keep innovating. Architectural innovation can deliver performance gains of multiple orders of magnitude at the single-chip level. The challenge: heterogeneous programming is hard, and hyper-heterogeneous programming is harder still; taming hyper-heterogeneity is the key to success.

2.9 Summary: The Difficulty of Hyper-Heterogeneous Design and Development Grows Exponentially

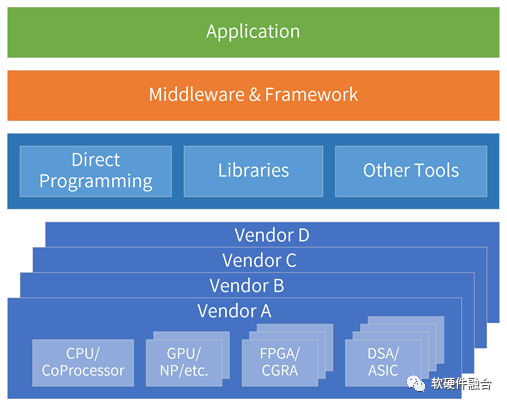

Software needs to be reused across platforms: across ① different architectures, ② different processor types, ③ different vendors' platforms, ④ different locations, and ⑤ different device types.

How can such complex hyper-heterogeneity be managed?

3 Current State of Open Architectures and Ecosystems

3.1 Overview of Open Architectures and Ecosystems

A system must be open at some level:

- User interface: an application must provide a UI for its users.

- Development libraries: code providing functions that developers can call from their own code to handle common tasks, such as the CUDA libraries.

- Operating system: provides a system call interface.

- Hardware: must expose a hardware interface/architecture for system software to call, such as I/O interfaces, the CPU architecture, and the GPU architecture.

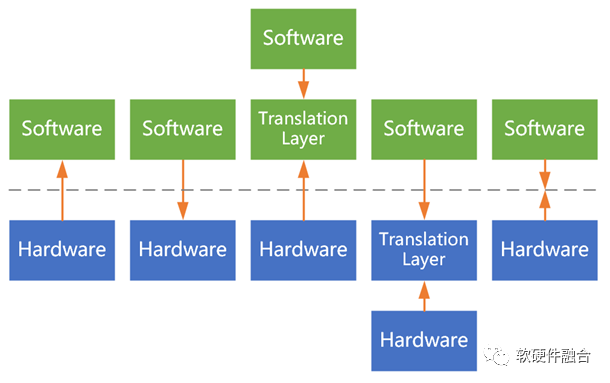

Interoperability: the ability of different systems and modules to work together and share information. Two adjacent layers of the system stack interact through a matching interface protocol; if the interfaces do not match, an interface conversion layer is required.
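As a small illustration of an interface conversion layer, consider an upper layer that expects byte-range reads and a lower layer that only serves fixed-size blocks. The sketch below is an assumption-laden example: `BlockDevice`, `ByteReaderAdapter`, and `BLOCK_SIZE` are hypothetical names invented for this illustration.

```python
BLOCK_SIZE = 4  # hypothetical block size of the lower layer


class BlockDevice:
    """Lower layer: can only read whole fixed-size blocks."""

    def __init__(self, data: bytes):
        self._data = data

    def read_block(self, block_no: int) -> bytes:
        start = block_no * BLOCK_SIZE
        return self._data[start:start + BLOCK_SIZE]


class ByteReaderAdapter:
    """Conversion layer: offers read(offset, size) on top of read_block()."""

    def __init__(self, device: BlockDevice):
        self._device = device

    def read(self, offset: int, size: int) -> bytes:
        if size <= 0:
            return b""
        first = offset // BLOCK_SIZE                 # first block touched
        last = (offset + size - 1) // BLOCK_SIZE     # last block touched
        chunk = b"".join(self._device.read_block(b) for b in range(first, last + 1))
        skip = offset - first * BLOCK_SIZE           # bytes to drop at the front
        return chunk[skip:skip + size]


reader = ByteReaderAdapter(BlockDevice(b"abcdefghijkl"))
print(reader.read(3, 5))  # b'defgh'
```

The adapter makes the two layers interoperate, but it also adds work on every call (here, reading and trimming extra blocks), which is one reason a shared standard interface is preferable to a growing pile of conversion layers.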

Open interfaces/architectures and ecosystems: building on interoperability, a standard, open interface/architecture takes shape; everyone develops products and services against it, and an open ecosystem forms.

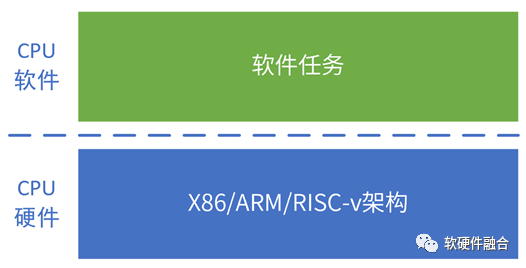

3.2 RISC-V: Open CPU Architecture

The x86 architecture is used mainly in PCs and data centers; the ARM architecture is used mainly in mobile devices and is expanding into PCs and servers.

To provide chips with low-cost CPUs, UC Berkeley developed RISC-V, an open-standard ISA that is now widely adopted across the industry.

In theory, software can run across different CPU architectures through static compilation or dynamic translation. In practice, there are performance losses and stability problems, and porting takes a lot of work.

Porting a program from one platform to another (statically) is hard; running it across platforms at run time (dynamically) is harder still.

Ideally, if an open RISC-V ecosystem takes shape, there will be no cross-platform losses or risks, and everyone can focus on innovating in CPU microarchitecture and upper-layer software.

Advantages of RISC-V:

Free. The instruction set architecture is free to obtain, requires no license, and carries no commercial constraints.

Open. Any vendor can design its own RISC-V CPU, and together they build an open ecosystem of shared prosperity.

Simple and efficient. Free of historical baggage, the ISA is more efficient.

Standardized. This is its most important value: if RISC-V becomes the mainstream architecture, cross-platform costs disappear.

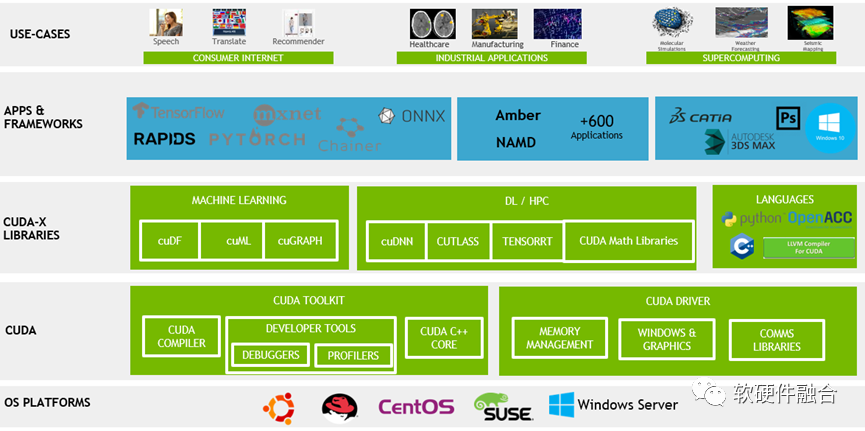

3.3 GPU Architecture Ecology

At present, GPUs have no standardized, open-source ISA comparable to RISC-V for CPUs.

CUDA is NVIDIA's development framework for its own GPUs and is forward compatible.

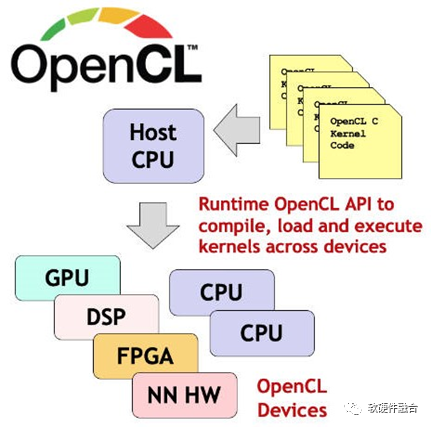

OpenCL is a fully open heterogeneous development framework that spans CPUs, GPUs, FPGAs, and other processing engines.

3.4 DSA: A Free-for-All

① DSAs span many fields; ② even within one field, different vendors' DSA architectures differ; and ③ even different product generations from the same vendor can have different architectures.

AI, the most typical DSA scenario, is currently in a phase where many DSA architectures compete.

To build a DSA ecosystem:

- Software-defined: no need to redevelop applications; compatible with the existing software ecosystem;

- Minimal programming (much DSA programming resembles static configuration scripts), using a standard, low-barrier domain programming language;

- An open architecture, to prevent the market fragmentation caused by too many architectures.

A DSA architecture generally has two parts, static and dynamic:

- Static part. A configuration-like script is mapped by the compiler onto a specific DSA engine, programming that engine's specific functions.

- Dynamic part. The dynamic part of a DSA is usually not programmed directly but adapts to existing software. In effect, the software's data plane/computing plane is offloaded to hardware while the control plane stays in software, which is consistent with the software-defined approach.

3.5 DSA/ASIC integration platform: NVIDIA DPU

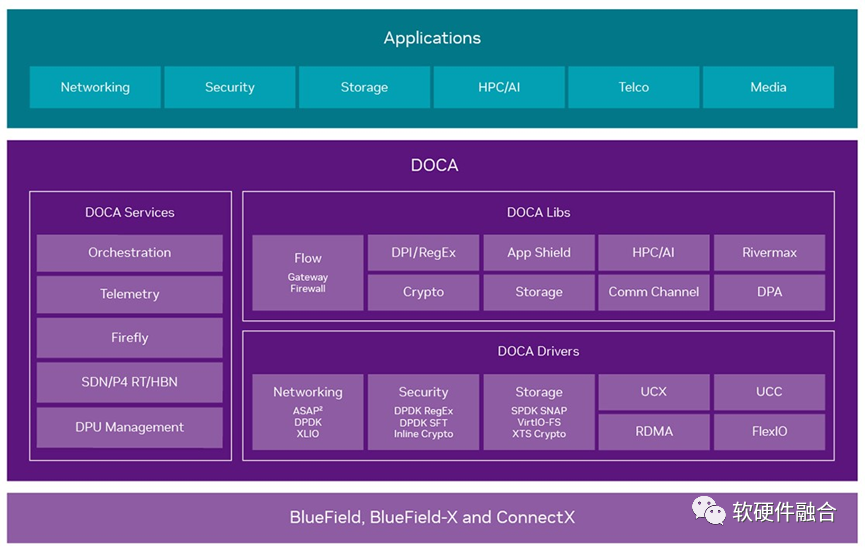

The DPU integrates DSA/ASIC engines for virtualization, networking, storage, security, and more. The DOCA framework comprises libraries, runtimes and services, and drivers.

DPU/DOCA is a closed architecture, platform, and ecosystem: it is neither open source nor open.

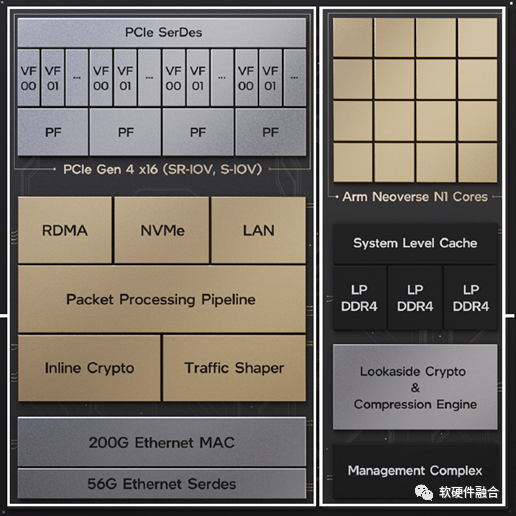

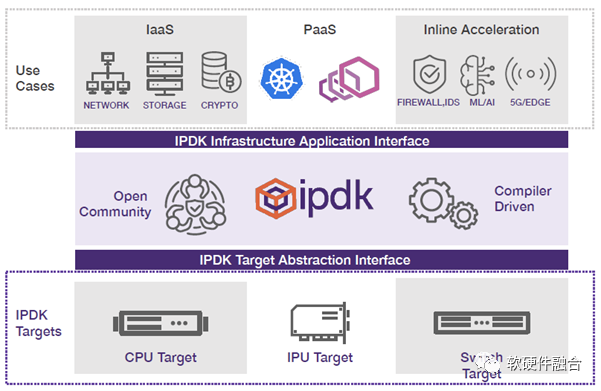

3.6 DSA/ASIC integrated platform: Intel IPU

Like the NVIDIA DPU, the Intel IPU integrates DSA/ASIC engines for virtualization, networking, storage, and security.

IPDK is an open-source infrastructure programming framework that can run on a CPU, IPU, DPU, or switch.

The OPI (Open Programmable Infrastructure) project, from Intel and the Linux Foundation, aims to foster a standard, open ecosystem for next-generation architectures and frameworks based on DPU/IPU-like technologies.

Intel IPU/IPDK/OPI is an open-source, open architecture, platform, and ecosystem.

3.7 Hardware Enablement

As the data center evolved into a combination of virtual machines, bare metal machines, and containers, the OpenStack community expanded into the new OpenInfra community.

It aims to advance open infrastructure technologies, including public, private, and hybrid clouds, as well as AI/ML, CI/CD, containers, and edge computing.

Ubuntu hardware enablement (HWE) releases: to support the latest hardware, the kernel version is updated frequently, which may not suit enterprise users.

OpenInfra hardware enablement: software components need to enable hardware in cloud and edge environments, including GPUs, DPUs, and FPGAs.

In the context of hardware acceleration/software offloading, hardware enablement can be understood as follows:

- Software must support hardware acceleration natively ("hardware-acceleration native");

- If hardware acceleration resources are available, run in hardware-accelerated mode;

- If none are available, fall back to the traditional software mode.
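The three behaviours above amount to "discover, then choose a path". A minimal sketch follows, assuming a hypothetical `registry` dictionary as a stand-in for real device discovery; the function names are invented for illustration, and the "accelerator" here is simulated by an ordinary Python function.

```python
import zlib


def software_checksum(data: bytes) -> int:
    """Traditional software path, always available."""
    return zlib.crc32(data)


def discover_accelerator(registry):
    """Return an accelerated implementation if one is registered, else None.

    `registry` is a hypothetical stand-in for real hardware discovery.
    """
    return registry.get("crc32")


def checksum(data: bytes, registry):
    """Use the hardware path when present; otherwise fall back to software."""
    accel = discover_accelerator(registry)
    if accel is not None:
        return "hardware", accel(data)
    return "software", software_checksum(data)


no_hw = {}                                        # no accelerator present
fake_hw = {"crc32": lambda d: zlib.crc32(d)}      # simulated accelerator

print(checksum(b"hello", no_hw)[0])    # software
print(checksum(b"hello", fake_hw)[0])  # hardware
```

Callers see the same `checksum` interface and the same result on either path; only the execution mode changes, which is what makes the software "hardware-acceleration native".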

4 Hardware defines software, or software defines hardware?

4.1 Control plane and computing plane of the system

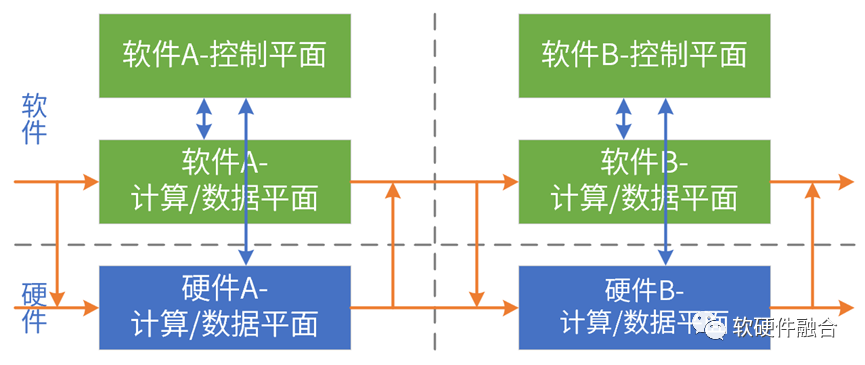

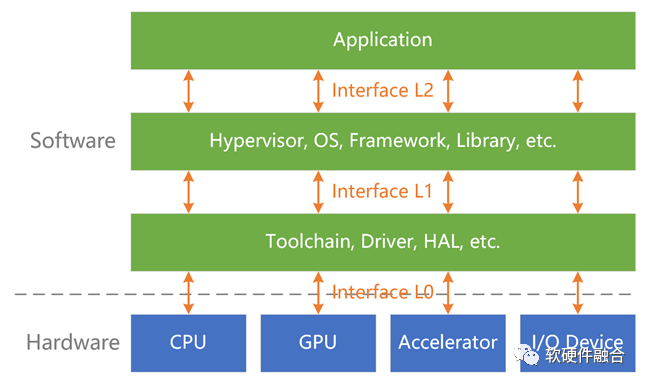

Map the layered, partitioned system onto concrete software and hardware: the control plane runs on the CPU, and the computing/data plane runs on CPUs, GPUs, DSAs, and other processing engines.

4.2 Generalized software and hardware interfaces

Generalized interfaces:

- Interfaces between blocks and between layers;

- Interfaces between software and software, between hardware and hardware, and between software and hardware.

Interfaces between software and hardware:

- Hardware defines the interface; software adapts;

- Software defines the interface; hardware adapts;

- Hardware and software each define an interface; a software interface adaptation layer bridges them;

- Hardware and software each define an interface; the interface adaptation layer is offloaded to hardware;

- Software and hardware designs both follow a standard interface (requirements: standard, efficient, open source, iteratively evolving).

4.3 What is hardware/software definition?

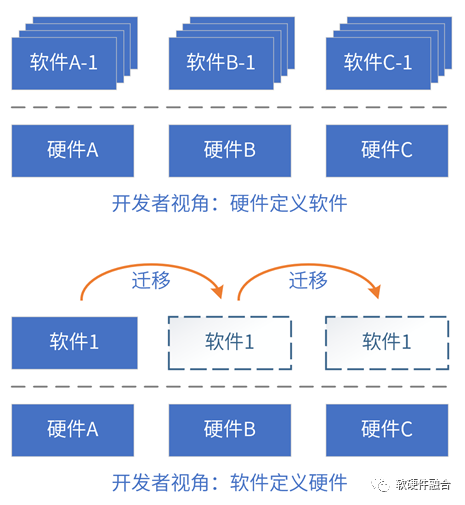

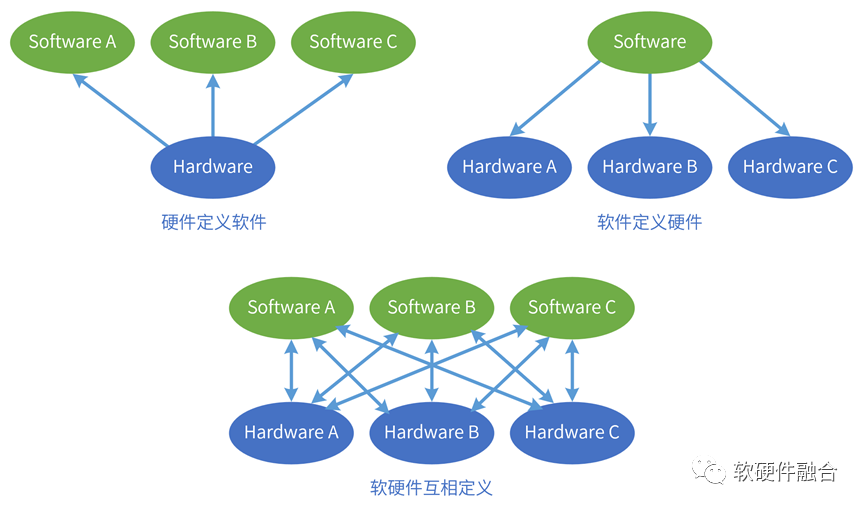

A system is made up of software and hardware working in concert. We explain software definition and hardware definition in terms of how software and hardware relate to and influence each other.

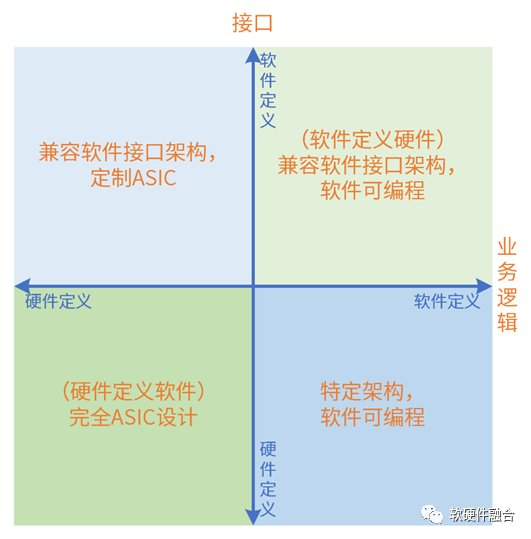

Evaluation criteria: who determines the system's business logic, and who determines the software/hardware interaction interface (architecture).

"Hardware-defined software": the system's business logic is implemented mainly in hardware, supplemented by software; the software is built on the interface the hardware provides.

"Software-defined hardware": the system's business logic is implemented mainly in software, supplemented by hardware; or the hardware engine is software-programmable and operates according to the logic the software programs; the hardware is built on the interface the software defines.

4.4 Basis for Hardware/Software Definition: System Complexity

Whether hardware defines software or software defines hardware is closely related to system complexity.

When system complexity is low and iteration is slow, the hardware/software partition can be designed and optimized quickly: develop the hardware first, then the system-layer and application-layer software.

Quantitative change leads to qualitative change. As system complexity rises and iteration speeds up, directly implementing a fully optimized design becomes very difficult, and system implementation becomes evolutionary:

- Early on, the system is not yet stable and its algorithms and business logic iterate rapidly, so ideas need to be realized quickly. A CPU-based software implementation suits this stage best;

- As the system matures, the algorithms and business logic stabilize and are progressively moved to GPU, DSA, and other hardware acceleration to keep improving performance.

Hardware-defined or software-defined? In essence, system-defined. The system is too complex to get right in one pass, so implementing it becomes a process of continuous optimization and iteration.

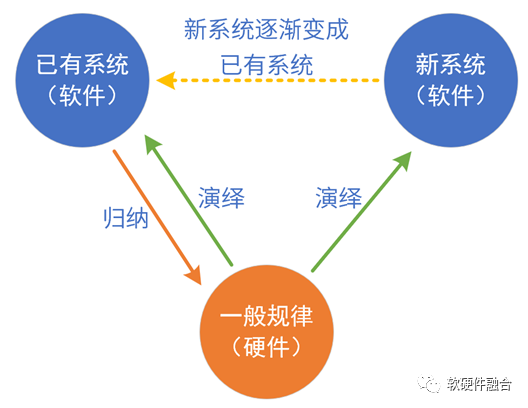

4.5 The Nature of Hardware/Software Definition: Induction and Deduction

The essence of hardware/software definition is to realize and evolve the system through induction and deduction. Over the long term, the system is defined by hardware and software together. We illustrate with typical processing engines:

- CPU: abstracts the commonality across all fields (the most basic instructions), and this commonality is universal. In other words, unlike other platforms, the CPU needs no further induction and only has to keep deducing new fields and scenarios. Software-defined: the CPU is already a mature software platform on which system prototypes can be built quickly; once the algorithms and business logic stabilize, hardware acceleration can be used to optimize performance.

- GPU: abstracts the commonality of many fields; hardware implements the common parts and software implements the specific parts. Once in place, the GPU platform can keep deducing new scenarios in new fields. The GPU is a sufficiently general and mature parallel computing development platform.

- DSA: abstracts the business logic of multiple scenarios within one field; hardware implements the common parts and software implements the specific parts. Once in place, the DSA platform can deduce new scenarios within that field.

- ASIC: the algorithm and business logic of a specific scenario are turned directly into hardware, with software providing only basic control. This is a direct mapping; induction and deduction do not come into it.

5 Summary: Software/Hardware Co-Definition and a Hyper-Heterogeneous Open Ecosystem

5.1 Software Natively Supports Hardware Acceleration

For software to support hardware acceleration natively:

- Adjust the software architecture to separate the control plane from the computing/data plane;

- Standardize the interface between the control plane and the computing/data plane;

- Discover hardware acceleration resources and adaptively choose the software or the hardware computing/data plane;

- Data input can come from software or from hardware;

- Data output can go to software or to hardware.

5.2 Fully Programmable

Fully programmable does not mean CPU-programmable; it means programmable while still delivering the best optimized performance. Full programmability is a layered architecture:

- Infrastructure layer, programmable. The workload of the infrastructure layer does not change often, so a DSA architecture can be adopted.

- Elastic application acceleration, programmable. It supports mainstream GPU programming methods and can also accelerate common applications through DSAs for higher efficiency.

- Application layer, programmable. Applications carry the greatest uncertainty and still run on the CPU. The CPU is also the fallback: every task that is unsuited to acceleration or has no acceleration engine runs on the CPU.
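The layering above can be read as a placement rule with the CPU as the catch-all. The sketch below is a simplification under stated assumptions: the `PREFERENCE` table, the workload class names, and the `place` function are hypothetical, and the elastic layer is mapped only to the GPU for brevity.

```python
# Hypothetical mapping from workload class to preferred engines, in order.
PREFERENCE = {
    "infrastructure": ["DSA", "CPU"],  # stable workload: DSA first
    "elastic": ["GPU", "CPU"],         # elastic acceleration: GPU first
    "application": ["CPU"],            # most uncertain: stays on the CPU
}


def place(task_class, available):
    """Pick the first preferred engine that is available; the CPU is the fallback."""
    for engine in PREFERENCE.get(task_class, []):
        if engine in available:
            return engine
    return "CPU"


available = {"CPU", "GPU"}  # a hypothetical system without a DSA
for cls in ["infrastructure", "elastic", "application", "unknown"]:
    print(cls, "->", place(cls, available))
# infrastructure -> CPU
# elastic -> GPU
# application -> CPU
# unknown -> CPU
```

Because every path ends at the CPU, software written against this rule keeps running on systems that lack a given engine, only more slowly.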

Order-of-magnitude performance gains while remaining fully programmable:

- Across the whole system, most of the workload is accelerated by DSAs, so the system's performance efficiency approaches that of a DSA;

- Chiplets plus hyper-heterogeneity bring an order-of-magnitude increase in system scale and an order-of-magnitude gain in system performance;

- From the user's perspective, the application runs on the CPU, so what the user experiences is 100% CPU programmability.
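To put rough numbers on the first point, an Amdahl's-law-style estimate can be sketched as follows. This considers execution time only (not energy efficiency), and the workload fractions and per-engine speedups are hypothetical values chosen for illustration, not measurements.

```python
def system_speedup(parts):
    """Overall speedup relative to running everything on the CPU.

    parts: list of (fraction_of_cpu_time, speedup) for the offloaded portions;
    whatever is not listed stays on the CPU at 1x.
    """
    offloaded = sum(fraction for fraction, _ in parts)
    if offloaded > 1 + 1e-9:
        raise ValueError("offloaded fractions exceed 100% of the workload")
    cpu_remainder = max(0.0, 1 - offloaded)
    new_time = cpu_remainder + sum(fraction / speedup for fraction, speedup in parts)
    return 1 / new_time


# Hypothetical split: 80% on a DSA at 100x, 15% on a GPU at 10x, 5% left on the CPU.
print(f"{system_speedup([(0.80, 100), (0.15, 10)]):.1f}x")  # about 13.7x

# Hypothetical split: 99% on a DSA at 100x, 1% left on the CPU.
print(f"{system_speedup([(0.99, 100)]):.1f}x")              # about 50.3x
```

Under these assumed numbers, the system only approaches DSA-level performance when the portion left on the CPU is very small, which is why the layering aims to move as much of the stable workload as possible onto DSAs.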

5.3 Open Interface and Architecture

Each ASIC/DSA covers a narrow set of fields and scenarios, so chip coverage of scenarios (compared with CPUs and GPUs) is increasingly fragmented.

Building an ecosystem is getting harder and harder. The shift from hardware-defined to software-defined needs to happen gradually.

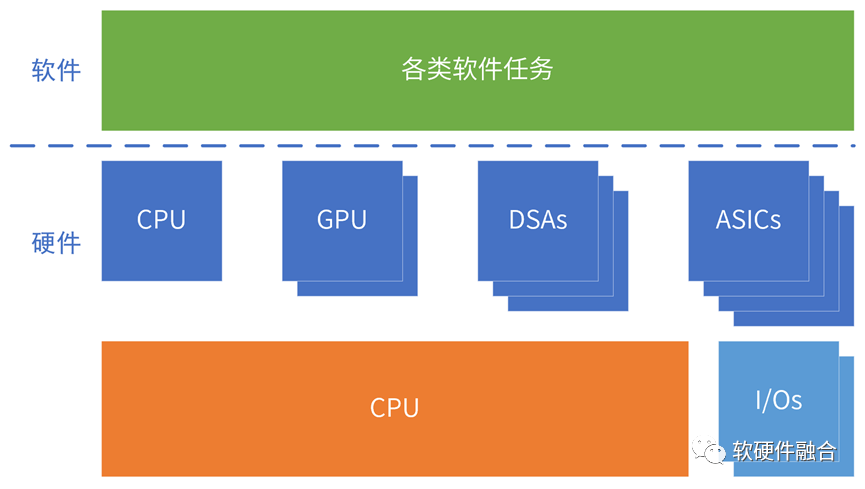

Hyper-heterogeneous computing includes CPUs, GPUs, and various DSA and ASIC processors.

Heterogeneous engine architectures keep multiplying. Efficient, standard, open interfaces and architectures are needed to build a consistent macro-architecture platform (a combination of multiple architectures) and avoid fragmented scenario coverage.

5.4 Computing needs to span different types of processors

Hyper-heterogeneity includes different processor types such as CPUs, GPUs, FPGAs, and DSAs, and computing tasks need to run across them.

oneAPI is Intel's open-source cross-platform programming framework; it provides a consistent programming interface that lets applications run across different types of processors.

More broadly, software must run not only across different processor types but also across chip platforms from different vendors.

5.5 Hyper-Heterogeneous Open Ecosystem

To raise the utilization of computing power, the computing resources of the isolated islands need to be combined into a unified resource pool.

Pooling computing resources requires cross-platform integration along several dimensions:

- Dimension 1: across architectures of the same processor type. For example, software can run across x86, ARM, and RISC-V CPUs.

- Dimension 2: across different processor types. Software needs to run across CPUs, GPUs, FPGAs, and DSAs.

- Dimension 3: across different chip platforms. For example, software can run on chips from different companies such as Intel and NVIDIA.

- Dimension 4: across cloud, network, edge, and end locations. Computing can adaptively run at the most suitable location as conditions change.

- Dimension 5: across different cloud, network, and edge service providers, different end users, and different types of terminal devices.

In the hyper-heterogeneous era, an open ecosystem must form so that computing resources work as a whole and meet the order-of-magnitude increase in computing power demanded by application scenarios such as the metaverse.

5.6 Software/Hardware Co-Definition: A Hyper-Heterogeneous Open Ecosystem

First, a hyper-heterogeneous computing architecture. Hyper-heterogeneous computing combines processing engines of multiple architectures, such as CPU+GPU+FPGA+DSA, to achieve both flexibility close to a CPU and performance efficiency close to an ASIC, with performance improved by multiple orders of magnitude.

Second, it must be a programmable platform. Two "everythings": software defines everything, and hardware accelerates everything. Two "completelys": a hardware acceleration platform that is completely software-programmable, and business logic that is completely determined by software programming. One "general": general enough to serve multiple scenarios and users and to support the long-term evolution of the business.

Finally, it must be standard and open. The architecture/interface is standard, open, and continuously evolving; it embraces an open-source, open ecosystem; it supports cloud native and cloud-network-edge-end integration; and users have no platform dependence (on hardware, frameworks, and so on).