Foreword

iOS offers three main ways to manage threads: NSThread, GCD, and NSOperation (pthread, which is rarely used directly, is left out). This article summarizes their characteristics, their important APIs, and thread safety.

NSThread

NSThread (Thread in Swift) is a lightweight, object-oriented wrapper around a thread. However, you have to manage the thread's life cycle manually, and controlling the execution order between different threads is awkward.


Creation

init(target: Any, selector: Selector, object argument: Any?) // must be started manually with start()

init(block: @escaping () -> Void) // must be started manually with start()

detachNewThread(_ block: @escaping () -> Void) // starts automatically

detachNewThreadSelector(_ selector: Selector, toTarget target: Any, with argument: Any?) // starts automatically

performSelector(inBackground aSelector: Selector, with arg: Any?) // creates a thread implicitly

State control

start() // starts the thread

sleep(until date: Date) // sleeps until the given date

sleep(forTimeInterval ti: TimeInterval) // sleeps for the given interval

exit() // forcibly terminates the current thread immediately, whether or not its work is finished; may leave things in an inconsistent state

cancel() // does not stop the thread immediately; it only sets isCancelled, which the thread's own code must check before returning

Inter-thread communication

performSelector(onMainThread aSelector: Selector, with arg: Any?, waitUntilDone wait: Bool, modes array: [String]?) // runs on the main thread

performSelector(onMainThread aSelector: Selector, with arg: Any?, waitUntilDone wait: Bool) // runs on the main thread

perform(_ aSelector: Selector, on thr: Thread, with arg: Any?, waitUntilDone wait: Bool, modes array: [String]?) // runs on the specified thread

perform(_ aSelector: Selector, on thr: Thread, with arg: Any?, waitUntilDone wait: Bool) // runs on the specified thread

Thread keep-alive

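let thread = Thread(target: self, selector: #selector(threadAction), object: nil)
thread.start() // a thread created with init(target:selector:object:) must be started manually

@objc func threadAction() {
    let runLoop = RunLoop.current
    // Without an input source (port) or timer the run loop would return immediately
    runLoop.add(NSMachPort(), forMode: .default)
    while !Thread.current.isCancelled {
        // Returns after at most 2 seconds, so otherAction() runs roughly every 2 seconds
        runLoop.run(mode: .default, before: Date(timeIntervalSinceNow: 2))
        otherAction() // placeholder for the periodic work
    }
}

A RunLoop needs at least one input source (such as a port) or a timer; otherwise it exits immediately and the thread ends. When the thread is no longer needed, call cancel() manually so the loop can finish; otherwise the run loop keeps the thread alive forever, and the thread (together with the target it retains) leaks.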
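The creation and state-control APIs listed above can be combined into a small sketch (not from the original article): a thread created with Thread(block:) must be started explicitly, and cancel() only sets isCancelled, so the thread's own loop has to check the flag and return. The DispatchSemaphore used to wait for the worker is an addition for this demo; semaphores are covered in the GCD section.

```swift
import Foundation

/// Starts a worker thread that loops until it is cancelled.
/// Returns true once the worker has noticed the cancellation and returned.
func runCancellableWorker() -> Bool {
    let finished = DispatchSemaphore(value: 0)

    let worker = Thread {
        // cancel() only sets isCancelled; this loop must check it and return on its own
        while !Thread.current.isCancelled {
            Thread.sleep(forTimeInterval: 0.01)  // stand-in for a unit of real work
        }
        finished.signal()
    }
    worker.name = "worker"
    worker.start()                       // Thread(block:) does not start by itself

    Thread.sleep(forTimeInterval: 0.1)   // let the worker run for a while
    worker.cancel()                      // request cancellation
    // Wait (at most 2 s) for the worker to leave its loop
    return finished.wait(timeout: .now() + 2) == .success
}

print("worker stopped after cancel(): \(runCancellableWorker())")
```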

GCD

Introduction

Grand Central Dispatch (GCD) is Apple's solution for multicore programming. It is mainly used to optimize applications for multi-core processors and other symmetric multiprocessing systems, executing tasks concurrently on top of a thread pool. It was first introduced in Mac OS X 10.6 Snow Leopard and is available on iOS 4 and later. GCD emphasizes the concept of tasks (blocks) and hides thread management from the developer. It is a lightweight library written in C whose source code (libdispatch) is open; internally it maintains a pool of threads created with pthreads.

Tasks & Queues

The two core concepts in GCD are the queue and the task.

A queue holds tasks waiting to be executed. Tasks are taken from a queue in first-in, first-out order. There are two main kinds of queue:

- Serial queue (Serial Dispatch Queue): executes only one task at a time.

- Concurrent queue (Concurrent Dispatch Queue): can execute multiple tasks at the same time.

let queue = DispatchQueue(label: "name") // creates a serial queue (the default)

let queue = DispatchQueue(label: "name", attributes: .concurrent) // alternatively, creates a concurrent queue

A task is the block of code you submit to GCD. It can be submitted synchronously or asynchronously:

- Synchronous task (sync): sync does not return until the submitted task has finished, and on a serial queue the task also waits for the tasks queued before it. The task normally runs on the current thread, so sync does not start new threads.

- Asynchronous task (async): async returns immediately without waiting for the task to finish. The task can run on another thread, so async is able to start new threads.

queue.sync { // submit a synchronous task
    print(Thread.current)
}
queue.async { // submit an asynchronous task
    print(Thread.current)
}

Main queue: the queue associated with the main thread. It is a serial queue and can be obtained through DispatchQueue.main.

Global queue: a concurrent queue obtained through DispatchQueue.global(). Global queues of different priorities can be obtained by quality of service, e.g. DispatchQueue.global(qos: .background).

Combining the two kinds of task with the three kinds of queue gives six cases:

| | Concurrent queue | Serial queue | Main queue |
|---|---|---|---|
| Synchronous (sync) | No new thread / tasks run serially | No new thread / tasks run serially | Deadlocks when called on the main thread (see below); from another thread: no new thread / tasks run serially |
| Asynchronous (async) | New threads / tasks run concurrently | New thread / tasks run serially | No new thread / tasks run serially on the main thread |
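To make the serial-queue column concrete, here is a minimal sketch (class and label names are illustrative): async submissions to a serial queue return immediately but still run one at a time in FIFO order, and a later sync on the same queue blocks the caller until everything queued before it has run.

```swift
import Foundation

/// A tiny recorder whose storage is only touched on the serial queue that owns it.
/// @unchecked Sendable: thread safety comes from that queue, not from the compiler.
final class SerialRecorder: @unchecked Sendable {
    private let queue = DispatchQueue(label: "demo.serial")   // serial by default
    private var items: [Int] = []

    /// Submits an asynchronous append and returns immediately.
    func appendAsync(_ value: Int) {
        queue.async { self.items.append(value) }
    }

    /// sync blocks the caller until every task queued before it has run (FIFO).
    func snapshot() -> [Int] {
        queue.sync { items }
    }
}

let recorder = SerialRecorder()
for i in 0..<5 { recorder.appendAsync(i) }
print(recorder.snapshot())   // prints [0, 1, 2, 3, 4]
```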

Deadlocks in GCD

Deadlock is a situation in which two or more processes block each other during execution, either while competing for resources or while waiting to communicate with each other; without outside intervention, none of them can proceed. The system is then said to be deadlocked, and the processes waiting for each other are called deadlocked processes.

Deadlock has four necessary conditions: mutual exclusion, hold and wait, no preemption, and circular wait.

In GCD, misusing the API can also cause deadlock. Here the concept is narrower than the definition above: tasks wait for each other, so none of them can run. The most common cases are:

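Calling sync on the main queue from the main thread:

// Running on the main thread (the current task)
DispatchQueue.main.sync {
    print(Thread.current) // synchronous task
}

Calling sync on a serial queue from a task that is already running on that queue:

let queue = DispatchQueue(label: "name") // serial queue
queue.sync { // current task
    queue.sync {
        print(Thread.current) // synchronous task
    }
    print(Thread.current)
}

Reason: the synchronous task cannot start until the current task on the same serial queue has finished, but the current task cannot finish because it is blocked waiting for the synchronous task. Each waits for the other, so neither proceeds.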
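One common way to avoid the second kind of deadlock (a swapped-in technique, not the article's own method) is to tag the queue with a DispatchSpecificKey and run the work directly when the caller is already on that queue. The wrapper class and its method name below are hypothetical.

```swift
import Foundation

/// A serial queue wrapper whose syncSafe(_:) may be called from inside its own tasks.
final class ReentrantSafeQueue: @unchecked Sendable {
    private let queue = DispatchQueue(label: "demo.reentrant")   // serial
    private let key = DispatchSpecificKey<UInt8>()

    init() {
        queue.setSpecific(key: key, value: 1)   // marks code that runs on `queue`
    }

    func syncSafe<T>(_ work: () -> T) -> T {
        if DispatchQueue.getSpecific(key: key) != nil {
            return work()   // already on this queue: a plain sync here would deadlock
        }
        return queue.sync(execute: work)
    }
}

let q = ReentrantSafeQueue()
let value = q.syncSafe { q.syncSafe { 42 } }   // nested call; plain sync would deadlock
print(value)                                    // prints 42
```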

Other important APIs

DispatchGroup

After submitting several asynchronous tasks, you can use a DispatchGroup to be notified once all of them have finished. By adding tasks to the group manually (enter / leave), you can likewise run follow-up work after several network requests have all completed.

// Add tasks to the DispatchGroup with async(group:)
let group = DispatchGroup()
let queue = DispatchQueue(label: "name", attributes: .concurrent)
for i in 1...5 {
    queue.async(group: group) {
        print("------ \(i)")
    }
}
group.notify(queue: queue) {
    print("end") // runs after all five tasks have finished
}

// Add tasks to the DispatchGroup manually with enter()/leave()
let group = DispatchGroup()
let queue = DispatchQueue(label: "name", attributes: .concurrent)
for _ in 1...5 {
    group.enter()
    netwrk.api { // hypothetical asynchronous network call with a completion handler
        group.leave() // every enter() must be balanced by exactly one leave()
    }
}
group.notify(queue: queue) {
    print("end")
}
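Since netwrk.api above is only a placeholder, here is a runnable sketch of the enter/leave pattern in which a hypothetical fakeRequest function stands in for a network call by calling back later on a global queue. group.wait() is used only so the demo can check the result; in an app you would normally use notify(queue:) as shown above.

```swift
import Foundation

/// Thread-safe collector for results that arrive on arbitrary threads.
final class Results: @unchecked Sendable {
    private let lock = NSLock()
    private var values: [Int] = []
    func add(_ v: Int) { lock.lock(); values.append(v); lock.unlock() }
    var sorted: [Int] { lock.lock(); defer { lock.unlock() }; return values.sorted() }
}

/// Hypothetical stand-in for a network request: calls back later on a global queue.
func fakeRequest(_ id: Int, completion: @escaping @Sendable (Int) -> Void) {
    DispatchQueue.global().asyncAfter(deadline: .now() + .milliseconds(10 * id)) {
        completion(id * 10)
    }
}

let group = DispatchGroup()
let results = Results()
for id in 1...5 {
    group.enter()                 // one enter() per outstanding request
    fakeRequest(id) { value in
        results.add(value)
        group.leave()             // balances the enter() above
    }
}
group.wait()                      // blocks until all five leave() calls have happened
print(results.sorted)             // prints [10, 20, 30, 40, 50]
```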

Barrier function (barrier)

A barrier task runs only after all tasks submitted before it have finished, and tasks submitted after it run only after the barrier task has finished. Barriers are only meaningful on a concurrent queue you create yourself; on a serial queue or a global concurrent queue, a barrier task behaves like an ordinary async task. A typical use is the readers-writers problem, where many readers may read at the same time but only one writer may write at a time, such as database reads and writes.
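The readers-writers idea can be sketched as a small counter (names are illustrative, not from the article): reads are plain sync tasks that may overlap, and writes are barrier tasks that run alone on a custom concurrent queue. The bare barrier submission that follows shows the same flag in isolation.

```swift
import Foundation

/// Readers-writers sketch: reads run concurrently, writes are barrier tasks.
/// @unchecked Sendable: `count` is only touched on `queue`.
final class BarrierCounter: @unchecked Sendable {
    // Must be a custom concurrent queue; on a global queue the barrier flag is ignored.
    private let queue = DispatchQueue(label: "demo.readers-writers", attributes: .concurrent)
    private var count = 0

    /// Reader: may run at the same time as other readers, never during a write.
    var value: Int { queue.sync { count } }

    /// Writer: waits for earlier tasks, runs alone, then lets later tasks proceed.
    func increment() {
        queue.async(flags: .barrier) { self.count += 1 }
    }
}

let counter = BarrierCounter()
DispatchQueue.concurrentPerform(iterations: 1000) { _ in
    counter.increment()
}
// This read is queued after all 1000 barrier writes, so it sees every one of them.
print(counter.value)   // prints 1000
```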

queue.async(group: nil, qos: .default, flags: .barrier) {
    print("barrier")
}

Delay execution (asyncAfter)

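asyncAfter submits the task to the queue after the specified delay, so the delay is a minimum rather than an exact execution time. The deadline can be given either as a DispatchTime (asyncAfter(deadline:)) or as a DispatchWallTime (asyncAfter(wallDeadline:)). DispatchTime is based on the system's uptime clock, which the user cannot change and which pauses while the device sleeps; DispatchWallTime is based on the wall clock, i.e. the time shown on the lock screen.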

queue.asyncAfter(deadline: .now() + 1) { // submitted after about 1 second
    print("executed")
}
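A delayed task can also be wrapped in a DispatchWorkItem so it can still be cancelled before its deadline. The sketch below (names are illustrative) schedules one item with a DispatchTime deadline and one with a DispatchWallTime deadline, then cancels the second one before it starts.

```swift
import Foundation

/// Records which work items ran; guarded by a lock because items run on `queue`.
final class RunRecord: @unchecked Sendable {
    private let lock = NSLock()
    private var names: Set<String> = []
    func mark(_ name: String) { lock.lock(); names.insert(name); lock.unlock() }
    var snapshot: Set<String> { lock.lock(); defer { lock.unlock() }; return names }
}

let record = RunRecord()
let queue = DispatchQueue(label: "demo.delay")
let done = DispatchSemaphore(value: 0)

let kept = DispatchWorkItem { record.mark("kept"); done.signal() }
let dropped = DispatchWorkItem { record.mark("dropped") }

// Deadline on the uptime clock (DispatchTime)
queue.asyncAfter(deadline: .now() + .milliseconds(100), execute: kept)
// Deadline on the wall clock (DispatchWallTime)
queue.asyncAfter(wallDeadline: .now() + .milliseconds(50), execute: dropped)
dropped.cancel()   // cancelled before its deadline, so its block never runs

done.wait()
print(record.snapshot)   // prints ["kept"]
```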

Semaphore (DispatchSemaphore)

DispatchSemaphore works like a semaphore in an operating system and can be used to avoid problems such as data races. In iOS it is often used to limit the maximum number of tasks executing concurrently.

- signal(): increments the semaphore by 1, waking a waiting thread if there is one.

- wait(): if the semaphore is greater than 0, decrements it by 1 and returns; otherwise it blocks the current thread until another thread calls signal().

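let semaphore = DispatchSemaphore(value: 3) // limit the number of concurrently executing tasks to 3
let queue = DispatchQueue(label: "name", attributes: .concurrent)
for i in 1...5 {
    semaphore.wait() // blocks the submitting thread while 3 tasks are running
    queue.async {
        print(i)
        semaphore.signal() // frees a slot for the next task
    }
}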
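To check that the semaphore really caps concurrency, this sketch (the tracker class is illustrative) records how many tasks are inside the critical part at once and the highest value observed. A DispatchGroup is used only so the demo can wait for all tasks.

```swift
import Foundation

/// Tracks how many tasks are running at once and the highest value seen.
final class ConcurrencyTracker: @unchecked Sendable {
    private let lock = NSLock()
    private var running = 0
    private(set) var peak = 0   // read only after all tasks have finished
    func enter() { lock.lock(); running += 1; peak = max(peak, running); lock.unlock() }
    func leave() { lock.lock(); running -= 1; lock.unlock() }
}

let semaphore = DispatchSemaphore(value: 3)   // at most 3 tasks in flight
let queue = DispatchQueue(label: "demo.limit", attributes: .concurrent)
let group = DispatchGroup()
let tracker = ConcurrencyTracker()

for _ in 1...10 {
    semaphore.wait()                          // blocks the submitter when 3 tasks are running
    queue.async(group: group) {
        tracker.enter()
        Thread.sleep(forTimeInterval: 0.02)   // simulated work
        tracker.leave()
        semaphore.signal()                    // frees a slot for the next submission
    }
}
group.wait()
print("peak concurrency:", tracker.peak)
```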

Dispatch source (DispatchSource)

DispatchSource monitors low-level system objects and events such as file descriptors, Mach ports, UNIX signals, memory pressure, and timers. The main source types are:

| Source type | Description |
|---|---|
| DispatchSourceUserDataAdd | Application-defined data, merged by addition |
| DispatchSourceUserDataOr | Application-defined data, merged by bitwise OR |
| DispatchSourceMachSend | Mach port send events |
| DispatchSourceMachReceive | Mach port receive events |
| DispatchSourceMemoryPressure | Memory pressure changes |
| DispatchSourceProcess | Process events |
| DispatchSourceRead | Data available to read |
| DispatchSourceSignal | UNIX signals |
| DispatchSourceTimer | Timer |
| DispatchSourceFileSystemObject | File system object changes |
| DispatchSourceWrite | Ready for writing (buffer space available) |
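The simplest type in the table, DispatchSourceUserDataAdd, shows how a source coalesces events. In this sketch (names are illustrative) 100 calls to add(data: 1) may be merged into fewer handler invocations, but the handler's data values always add up to 100.

```swift
import Foundation

/// Running total; only touched on the serial `queue` below until `done` is signalled.
final class Total: @unchecked Sendable {
    var value: UInt = 0
}

let queue = DispatchQueue(label: "demo.user-data")
let total = Total()
let done = DispatchSemaphore(value: 0)

let source = DispatchSource.makeUserDataAddSource(queue: queue)
source.setEventHandler {
    // `data` is the sum of all add(data:) calls merged since the previous callback
    total.value += source.data
    if total.value == 100 { done.signal() }
}
source.activate()

for _ in 1...100 {
    source.add(data: 1)   // cheap; bursts of calls are coalesced into fewer events
}

let finished = done.wait(timeout: .now() + 2) == .success
print("total:", total.value)
```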

For example:

Monitoring memory pressure

let source = DispatchSource.makeMemoryPressureSource(eventMask: .all, queue: .main)
source.setEventHandler {
    // data is a DispatchSource.MemoryPressureEvent: .normal, .warning or .critical (.all selects all of them)
    print(source.data)
}
source.activate()

Timer

var source: DispatchSourceTimer?
source = DispatchSource.makeTimerSource(flags: .strict, queue: .main)
source?.schedule(deadline: .now() + 1, repeating: 1) // first fire after 1 s, then every 1 s
source?.setEventHandler {
    print("Timer fired \(Date())")
}
source?.activate()

Note that a Timer (NSTimer) added to the run loop in the default mode stops firing while the user is scrolling, because the run loop switches to its tracking mode; to avoid this, add the timer to the run loop in the .common modes. A DispatchSourceTimer does not depend on the run loop, so it is not affected.

Also note that when you test the code above, source must not be a local variable; otherwise it is released as soon as it goes out of scope and never fires. Keep it in a property instead.
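A self-contained version of the timer example, assuming a short interval so it finishes quickly (names are illustrative): the timer fires three times, cancels itself from its handler, and is kept alive by a top-level constant rather than a local that would go out of scope.

```swift
import Foundation

/// Tick counter; only touched on the serial `queue`.
final class TickCount: @unchecked Sendable {
    var value = 0
}

let queue = DispatchQueue(label: "demo.timer")
let ticks = TickCount()
let done = DispatchSemaphore(value: 0)

// A top-level constant keeps the timer alive; a local that goes out of scope would not.
let timer = DispatchSource.makeTimerSource(queue: queue)
timer.schedule(deadline: .now() + .milliseconds(20), repeating: .milliseconds(20))
timer.setEventHandler {
    ticks.value += 1
    if ticks.value == 3 {
        timer.cancel()   // no further event-handler calls after cancellation
        done.signal()
    }
}
timer.activate()

done.wait()
print("ticks:", queue.sync { ticks.value })   // prints ticks: 3
```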

DispatchIO

DispatchIO provides a channel for operating on a file descriptor; it performs asynchronous, chunked I/O and can read files efficiently using multiple threads. The main workflow is:

- Create a DispatchIO object, i.e. open a channel

- Perform read / write operations

- Call close() to close the channel

- Handle the final callback in cleanupHandler

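var ioWrite: DispatchIO?
var ioRead: DispatchIO?
let queue = DispatchQueue(label: "com.nihao.serialQueue")
let filePath = NSTemporaryDirectory() + "text.txt"
// Separate descriptors for writing (created if missing, appended to) and reading
let writeDescriptor = open(filePath, O_WRONLY | O_CREAT | O_APPEND, S_IRWXU | S_IRWXG)
let readDescriptor = open(filePath, O_RDONLY)
// The cleanup handler is the final callback: the channel has released its descriptor
ioWrite = DispatchIO(type: .stream, fileDescriptor: writeDescriptor, queue: queue) { errorNumber in
    close(writeDescriptor)
    print("write channel closed, error: \(errorNumber)")
}
ioRead = DispatchIO(type: .stream, fileDescriptor: readDescriptor, queue: queue) { errorNumber in
    close(readDescriptor)
    print("read channel closed, error: \(errorNumber)")
}
let formattedString = "nihao!!!!!"
let data = Array(formattedString.utf8).withUnsafeBytes {
    DispatchData(bytes: $0)
}
ioWrite?.write(offset: 0, data: data, queue: queue) { done, _, error in
    guard done else { return } // the handler can be called several times before done == true
    ioWrite?.close()
    // Start reading only once the write has finished
    ioRead?.read(offset: 0, length: Int.max, queue: queue) { done, data, error in
        if let data = data, !data.isEmpty {
            print(String(decoding: data, as: UTF8.self))
        }
        if done { ioRead?.close() }
    }
}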

NSOperation

NSOperation (Operation in Swift) is an object-oriented wrapper built on top of GCD, so it likewise has two concepts: the task (NSOperation) and the queue (NSOperationQueue). It also adds features such as dependencies between operations, observing operation state through KVO, and cancelling operations.

NSOperation is an abstract class. The system provides two concrete subclasses, NSInvocationOperation and NSBlockOperation. Because NSInvocation is unavailable in Swift, NSInvocationOperation is unavailable in Swift as well. You can also write your own subclasses. If you call start() on an operation directly instead of adding it to a queue, it runs synchronously on the current thread, so operations are usually used together with an NSOperationQueue.

A newly created NSOperationQueue is a concurrent queue; it starts operations according to their priority and readiness. The main queue is available through the class property main. Work is submitted by adding operations to a queue. Note that an operation that is already executing or has already finished cannot be added to a queue again (nor can one that is already in a queue); doing so raises an exception and crashes the app. Here are some usage examples:

let queue = OperationQueue()
queue.maxConcurrentOperationCount = 2 // maximum number of operations running at once
let operationA = BlockOperation {
    print("A - \(Thread.current)")
}
let operationB = BlockOperation {
    print("B - \(Thread.current)")
}
operationA.addDependency(operationB) // A depends on B: A starts only after B has finished
queue.addOperation(operationA)
queue.addOperation(operationB)
queue.addBarrierBlock { // runs after all previously added operations have finished (iOS 13+)
    print("I am the barrier")
}
queue.addOperation {
    print("2 - \(Thread.current)")
}
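To see that the dependency, not the order in which operations are added, decides what runs first, this sketch (the recorder class is illustrative) adds A before B and waits for both. It also adds an operation that is cancelled before it starts, whose block never runs.

```swift
import Foundation

/// Records the order in which operation blocks run.
final class EventRecorder: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [String] = []
    func append(_ s: String) { lock.lock(); entries.append(s); lock.unlock() }
    var snapshot: [String] { lock.lock(); defer { lock.unlock() }; return entries }
}

let events = EventRecorder()
let queue = OperationQueue()
queue.maxConcurrentOperationCount = 2

let operationA = BlockOperation { events.append("A") }
let operationB = BlockOperation { events.append("B") }
operationA.addDependency(operationB)          // A waits for B to finish

// A is added first on purpose: the dependency still forces B to run before A.
queue.addOperations([operationA, operationB], waitUntilFinished: true)

let operationC = BlockOperation { events.append("C") }
operationC.cancel()                           // cancelled before it starts
queue.addOperations([operationC], waitUntilFinished: true)

print(events.snapshot)   // prints ["B", "A"]
```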

Custom NSOperation

Generally speaking, writing a custom non-concurrent NSOperation is not difficult: you only need to override main() and put the work to be performed there. To write a custom concurrent NSOperation, however, you need to implement at least the following methods and properties:

- start()

- isAsynchronous

- isExecuting

- isFinished

In general, the operation has to maintain its own state while it runs, and because these properties are observed through KVO, it must send KVO notifications whenever their values change. For details, see Apple's documentation on customizing NSOperation objects.
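The requirements above usually end up looking like the following sketch of the common pattern (the class names AsyncOperation and DelayedOperation and the execute()/finish() hooks are hypothetical, not a system API): state is kept behind a lock, and every change to isExecuting and isFinished is wrapped in willChangeValue/didChangeValue so the queue learns when the work is really done.

```swift
import Foundation

/// Sketch of an asynchronous (concurrent) Operation subclass.
class AsyncOperation: Operation {
    private let stateLock = NSLock()
    private var _executing = false
    private var _finished = false

    override var isAsynchronous: Bool { true }

    override var isExecuting: Bool {
        stateLock.lock(); defer { stateLock.unlock() }
        return _executing
    }

    override var isFinished: Bool {
        stateLock.lock(); defer { stateLock.unlock() }
        return _finished
    }

    override func start() {
        // A cancelled operation must still move to the finished state.
        if isCancelled { finish(); return }
        willChangeValue(forKey: "isExecuting")
        stateLock.lock(); _executing = true; stateLock.unlock()
        didChangeValue(forKey: "isExecuting")
        execute()
    }

    /// Subclasses start their asynchronous work here and call finish() when it completes.
    func execute() { finish() }

    /// Moves to the finished state and notifies KVO observers (including the queue).
    func finish() {
        willChangeValue(forKey: "isExecuting")
        willChangeValue(forKey: "isFinished")
        stateLock.lock(); _executing = false; _finished = true; stateLock.unlock()
        didChangeValue(forKey: "isFinished")
        didChangeValue(forKey: "isExecuting")
    }
}

/// Hypothetical subclass: finishes after a short asynchronous delay.
final class DelayedOperation: AsyncOperation {
    private(set) var didRun = false
    override func execute() {
        DispatchQueue.global().asyncAfter(deadline: .now() + .milliseconds(20)) {
            self.didRun = true
            self.finish()
        }
    }
}

let op = DelayedOperation()
let queue = OperationQueue()
queue.addOperations([op], waitUntilFinished: true)   // returns only after finish() is called
print(op.didRun, op.isFinished)
```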

Thread safety

In a program where multiple threads run in parallel and share data, thread-safe code uses synchronization mechanisms to ensure that every thread executes correctly, without problems such as data corruption.

Multithreading brings benefits, but shared data must also be kept safe. Thread safety means that correct results are produced during multithreaded execution, i.e. data is not corrupted. There are two broad approaches: with synchronization and without synchronization. Synchronization ensures that, while multiple threads access data concurrently, shared data is used by only one thread at a time, for example by locking. No synchronization is needed in some cases: for example, if a function does not touch shared data at all, there is naturally nothing to synchronize.

Solutions

Without synchronization

Reentrant code: a function that can be interrupted at any point during its execution, while the OS schedules another piece of code, and that still works correctly when control returns. It uses only variables on its own stack and does not depend on any other (shared) variables, so the same input always produces the same result. Such code is thread-safe without any synchronization.
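A minimal sketch of a reentrant function (the checksum formula is arbitrary and only for illustration): because all of its state lives in parameters and locals, many threads can run it at once without a lock and still get the same results as a single thread.

```swift
import Foundation

/// Reentrant: depends only on its argument and local (stack) variables,
/// so any number of threads may run it at the same time without locking.
func checksum(_ bytes: [UInt8]) -> UInt32 {
    var sum: UInt32 = 0                      // local: each call has its own copy
    for byte in bytes {
        sum = sum &* 31 &+ UInt32(byte)      // wrapping arithmetic, no overflow trap
    }
    return sum
}

// For contrast, a NON-reentrant variant would keep `sum` in a global variable:
// two overlapping calls would then overwrite each other's partial result.

let inputs = (0..<8).map { i in [UInt8](repeating: UInt8(i), count: 1_000) }
let expected = inputs.map(checksum)          // computed on a single thread

var concurrent = [UInt32](repeating: 0, count: inputs.count)
concurrent.withUnsafeMutableBufferPointer { out in
    let buffer = out
    DispatchQueue.concurrentPerform(iterations: inputs.count) { i in
        buffer[i] = checksum(inputs[i])      // each iteration writes only its own slot
    }
}
print(concurrent == expected)                // prints true
```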

Thread-local storage: if data seems to need sharing with other threads, first check whether it can instead be confined to a single thread. If so, you can give each thread its own copy of the shared variable, which also guarantees thread safety without synchronization. For example:

class Person: NSCopying {
    var age: Int
    var name: String

    init(_ age: Int, name: String) {
        self.age = age
        self.name = name
    }

    func copy(with zone: NSZone? = nil) -> Any {
        return Person(age, name: name) // copy the current values
    }
}

var person = Person(2, name: "") // global variable
let queue = DispatchQueue(label: "name")
for _ in 0...100 {
    queue.async {
        // Each task works on its own copy instead of the shared object
        let currPerson = person.copy() as! Person
        currPerson.age += 1
    }
}

If each task needs to read person but never has to write its changes back to the original person, working only on the copy in the current thread likewise ensures thread safety.
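Literal per-thread storage is also available through Thread.current.threadDictionary. The sketch below is an alternative to the copying approach above, not the article's code: it caches one DateFormatter per thread (the key string and the date format are arbitrary choices), so concurrent callers never share a formatter and need no lock.

```swift
import Foundation

/// Returns a DateFormatter owned by the calling thread, created on first use and
/// cached in Thread.current.threadDictionary (the key string is arbitrary).
func threadLocalFormatter() -> DateFormatter {
    let key = "demo.threadLocalFormatter"
    let storage = Thread.current.threadDictionary
    if let cached = storage[key] as? DateFormatter {
        return cached
    }
    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(identifier: "UTC")
    formatter.dateFormat = "yyyy-MM-dd"
    storage[key] = formatter       // visible only to this thread
    return formatter
}

let epoch = Date(timeIntervalSince1970: 0)
var outputs = [String](repeating: "", count: 8)
outputs.withUnsafeMutableBufferPointer { out in
    let buffer = out
    DispatchQueue.concurrentPerform(iterations: buffer.count) { i in
        // No lock needed: every thread formats with its own DateFormatter
        buffer[i] = threadLocalFormatter().string(from: epoch)
    }
}
print(Set(outputs))   // prints ["1970-01-01"]
```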

With synchronization

Mutual exclusion synchronization (also called blocking synchronization): if the result cannot be obtained yet, the current thread is suspended and blocks, and it continues only once the result is available. It is a pessimistic synchronization strategy. In iOS it mainly takes the form of a mutex: a thread that fails to acquire the mutex blocks and is woken up when the lock is released.

Non-blocking synchronization: the current thread does not block when it cannot get the result immediately. It is an optimistic synchronization strategy. In iOS it mainly takes the form of a spin lock: a thread that fails to acquire the lock does not block but keeps retrying until the lock is released. Because no thread state switch is involved, it can be more efficient than a mutex when the lock is held only briefly.

Besides locks, there are other synchronization tools, such as atomic operations, memory barriers, and volatile variables. A brief introduction:

- Atomic operations: a simple form of synchronization for simple data types that does not block competing threads. OS X and iOS provide many operations that perform basic arithmetic and logical operations on 32-bit and 64-bit values, such as atomic_fetch_add and atomic_exchange.

- Memory barriers: within a single thread, the hardware does the necessary bookkeeping so that the program's memory operations appear to execute in code order. With multiple threads, however, the compiler (and the CPU) may reorder instructions for optimization, which can cause subtle bugs. A memory barrier is a non-blocking synchronization tool that ensures memory operations happen in the correct order. For example:

// thread1:

while f == 0 {}

print(x)

// thread2:

x = 5

f = 1

5 is not necessarily printed every time. If thread2's instructions are reordered so that f = 1 takes effect before x = 5, thread1 may print an unexpected value. A memory barrier is therefore inserted wherever the execution order must be guaranteed, for example by calling OSMemoryBarrier() between the two writes (OSMemoryBarrier has since been deprecated in favor of atomic_thread_fence from <stdatomic.h>).

- Volatile variables: the compiler does not optimize accesses to variables declared volatile. Normally the compiler may keep a variable in a register and read it from there, which risks reading a stale value; volatile prevents this optimization, so the current value is read from memory every time. Both memory barriers and volatile variables reduce the optimizations the compiler can perform, so use them only where they are needed for correctness. GCD and NSOperation already contain plenty of memory-barrier code internally, so you will rarely need them yourself.

Locks in iOS

In iOS, locks are the usual way to synchronize threads and achieve thread safety. Below is a brief introduction to the main types of lock and a comparison of their performance.

@synchronized

@synchronized (obj) {
    // code that needs to be synchronized
    NSLog(@"nihao");
}

@synchronized is a recursive lock implemented with recursive_mutex_t, so the same thread can acquire it multiple times. It takes a single object as its lock token. Swift does not have this syntax. Rewriting the Objective-C code as C++ (for example with clang -rewrite-objc) shows that it expands to code similar to the following:

objc_sync_enter(_sync_obj);
// code that needs to be synchronized
NSLog(@"nihao");
objc_sync_exit(_sync_obj);

So in Swift, you can use these two functions to emulate @synchronized:

func synchronized(lock: AnyObject, closure: () -> Void) {
    objc_sync_enter(lock)
    defer { objc_sync_exit(lock) } // always release the lock once the closure returns
    closure()
}

NSLock & NSRecursiveLock

let lock = NSLock()
lock.lock()
// code that needs to be synchronized
lock.unlock()

let recursiveLock = NSRecursiveLock()
recursiveLock.lock()
// code that needs to be synchronized
recursiveLock.unlock()

NSLock and NSRecursiveLock are used the same way. NSLock is a plain mutex, while NSRecursiveLock is a recursive lock; both wrap pthread_mutex. When choosing between them, consider whether the same thread may need to acquire the lock more than once (for example in a recursive call): doing that with an NSLock deadlocks.
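A short sketch of both locks (type and function names are illustrative): NSLock guards a counter, with defer ensuring the lock is always released, while NSRecursiveLock lets a recursive function re-acquire the lock it already holds, which would deadlock with NSLock.

```swift
import Foundation

/// A counter guarded by NSLock; defer guarantees the unlock on every path.
final class LockedCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0
    func increment() {
        lock.lock()
        defer { lock.unlock() }
        value += 1
    }
    var current: Int { lock.lock(); defer { lock.unlock() }; return value }
}

/// Re-acquires the same lock at every recursion level.
/// NSRecursiveLock counts the nested acquisitions; an NSLock here would deadlock.
func sumDown(_ n: Int, using lock: NSRecursiveLock) -> Int {
    lock.lock()
    defer { lock.unlock() }
    return n == 0 ? 0 : n + sumDown(n - 1, using: lock)
}

let counter = LockedCounter()
DispatchQueue.concurrentPerform(iterations: 1000) { _ in counter.increment() }
print(counter.current, sumDown(10, using: NSRecursiveLock()))   // prints 1000 55
```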

NSCondition & NSConditionLock

NSCondition is a condition lock built on pthread_mutex and pthread_cond. It bundles a lock with a condition: the lock protects the critical section, and the condition lets a thread wait until some predicate becomes true before it continues.

- wait(): blocks the current thread, releasing the lock while it waits

- signal(): wakes up one waiting thread

- broadcast(): wakes up all waiting threads

A common example is the producer-consumer model:

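let condition = NSCondition() // lock + condition
var money = 5 // shared variable

// thread1: consumer
func consume() {
    condition.lock()
    while money == 0 { // re-check after waking: wait() can return spuriously
        condition.wait() // releases the lock while waiting, re-acquires it before returning
    }
    money -= 1
    condition.unlock()
}

// thread2: producer
func produce() {
    condition.lock()
    money += 1
    condition.signal() // wake up a waiting consumer
    condition.unlock()
}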

NSConditionLock is a lock associated with an integer condition value: a thread can wait to acquire it until the condition has a specific value, and sets a new value when unlocking. Its behavior is somewhat similar to NSCondition, and the code above can be rewritten as:

let condition = NSConditionLock(condition: 0) // 0: no money available, 1: money available
var money = 0

func consume() {
    condition.lock(whenCondition: 1) // blocks until the condition is 1
    money -= 1
    condition.unlock(withCondition: money == 0 ? 0 : 1)
}

func produce() {
    condition.lock() // acquires the lock regardless of the condition
    money += 1
    condition.unlock(withCondition: 1)
}
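Here is a runnable version of the NSCondition producer-consumer pattern, wrapped in a class so the shared balance is private (the class name and the counts are illustrative). Five consumers block until five units have been produced, after which the balance is back to zero.

```swift
import Foundation

/// Producer-consumer balance guarded by NSCondition.
final class MoneyBox: @unchecked Sendable {
    private let condition = NSCondition()
    private var money = 0

    func produce() {
        condition.lock()
        money += 1
        condition.signal()           // wake one consumer waiting in consume()
        condition.unlock()
    }

    func consume() {
        condition.lock()
        while money == 0 {           // loop: wait() can return spuriously
            condition.wait()         // releases the lock while waiting
        }
        money -= 1
        condition.unlock()
    }

    var balance: Int { condition.lock(); defer { condition.unlock() }; return money }
}

let box = MoneyBox()
let group = DispatchGroup()
let consumers = DispatchQueue(label: "demo.consumers", attributes: .concurrent)
for _ in 0..<5 {
    consumers.async(group: group) { box.consume() }   // blocks until money arrives
}
for _ in 0..<5 {
    Thread.sleep(forTimeInterval: 0.01)
    box.produce()
}
group.wait()
print(box.balance)   // prints 0
```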

NSDistributedLock

NSDistributedLock is a distributed lock, typically used by multiple applications (possibly on multiple hosts) to restrict access to a shared resource such as a file. It is implemented with a file-system item (such as a file or directory). Because of the iOS app sandbox, there is no corresponding API on iOS; it is available only on OS X.

DispatchSemaphore

DispatchSemaphore was introduced above, so I won't go into detail here.

OSSpinLock

OSSpinLock is a spin lock, but because threads in iOS can have different priorities, it can suffer from priority inversion. Specifically, if a low-priority thread holds the lock while accessing a shared resource and a high-priority thread then tries to acquire it, the high-priority thread busy-waits and consumes a lot of CPU time. The low-priority thread then cannot get CPU time away from the high-priority thread, so it cannot finish its work and release the lock. For this reason OSSpinLock has been deprecated (since iOS 10) and replaced by os_unfair_lock, which is a mutex: waiting threads block instead of spinning.

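var spinlock = OS_SPINLOCK_INIT

OSSpinLockLock(&spinlock) // acquires the lock; the thread busy-waits until it succeeds, blocking the current thread

OSSpinLockTry(&spinlock) // tries to acquire the lock and returns a Bool; on failure the thread can go on with other work instead of blocking

OSSpinLockUnlock(&spinlock) // releases the lock; the argument is the address of the OSSpinLock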
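A sketch of the replacement, os_unfair_lock, for Apple platforms only (the wrapper and counter classes are illustrative). The lock is placed in manually allocated memory because taking the address of a Swift stored property with & is not guaranteed to give a stable address. On iOS 16 and later, OSAllocatedUnfairLock in the os module packages the same idea.

```swift
import Foundation
import os

/// Wraps os_unfair_lock; waiting threads block rather than spin.
final class UnfairLock: @unchecked Sendable {
    private let pointer: os_unfair_lock_t

    init() {
        pointer = .allocate(capacity: 1)
        pointer.initialize(to: os_unfair_lock())   // zero value == OS_UNFAIR_LOCK_INIT
    }

    deinit {
        pointer.deinitialize(count: 1)
        pointer.deallocate()
    }

    /// Runs `body` while holding the lock.
    func withLock<T>(_ body: () throws -> T) rethrows -> T {
        os_unfair_lock_lock(pointer)
        defer { os_unfair_lock_unlock(pointer) }
        return try body()
    }
}

final class Counter: @unchecked Sendable {
    private let lock = UnfairLock()
    private var value = 0
    func increment() { lock.withLock { value += 1 } }
    var current: Int { lock.withLock { value } }
}

let counter = Counter()
DispatchQueue.concurrentPerform(iterations: 1000) { _ in counter.increment() }
print(counter.current)   // prints 1000
```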

atomic

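atomic is a property attribute keyword in Objective-C. It makes the property's accessors lock around storing and retrieving the value; the implementation is based on os_unfair_lock, which, as mentioned above, is a mutex. It does not make code using the property thread-safe; it only guarantees that each individual get or set is safe. For example, self.count += 1 executed on two threads can still lose updates, because the read and the write are two separate locked steps.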
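Swift has no atomic attribute, but its semantics can be mimicked to show the limitation (the class is illustrative): each get and each set is locked on its own, so a read-modify-write must be done under a single lock acquisition instead.

```swift
import Foundation

/// Mimics an Objective-C `atomic` property: each get and each set is
/// individually locked, but nothing makes get-then-set a single step.
final class AtomicLikeBox: @unchecked Sendable {
    private let lock = NSLock()
    private var _value = 0

    var value: Int {
        get { lock.lock(); defer { lock.unlock() }; return _value }
        set { lock.lock(); _value = newValue; lock.unlock() }
    }

    /// Read-modify-write under one lock acquisition: what `atomic` does NOT give you.
    func increment() { lock.lock(); _value += 1; lock.unlock() }
}

let box = AtomicLikeBox()
DispatchQueue.concurrentPerform(iterations: 1000) { _ in
    box.increment()      // safe
    // box.value += 1    // a locked get followed by a locked set: updates can be lost
}
print(box.value)         // prints 1000
```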

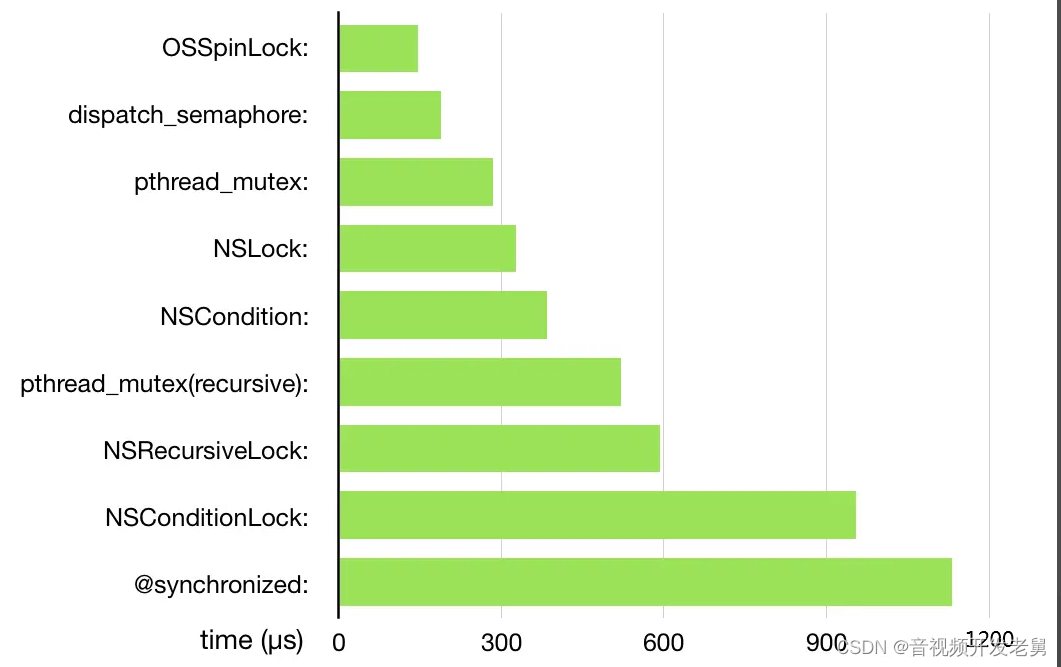

Performance comparison of various locks

The chart is taken from the article "OSSpinLock is no longer safe". Here are some tentative explanations:

Locks are discussed first, then the semaphore.

OSSpinLock ranks first because, as a spin lock, it involves no thread state switching;

The remaining locks are all built on the POSIX thread (pthread) APIs, and their performance varies with how much wrapping they add: for example, a plain mutex is faster than a recursive lock and also faster than a condition lock;

A semaphore actually works much like a lock, whereas pthread_mutex supports several mutex types and therefore performs extra checks, which makes it slightly slower.

However, although these locks differ in performance, the gaps are small. When writing code, choose a lock based on the specific scenario and on code robustness as well.

Summary

This article outlined the usage and characteristics of NSThread, GCD, and NSOperation for multithreading in iOS, and introduced several synchronization approaches and the use of locks for thread safety.
