question:

While training a model, I ran into this error: RuntimeError: Trying to backward through the graph a second time, but the buffers have already been freed. Specify retain_graph=True when calling backward the first time.

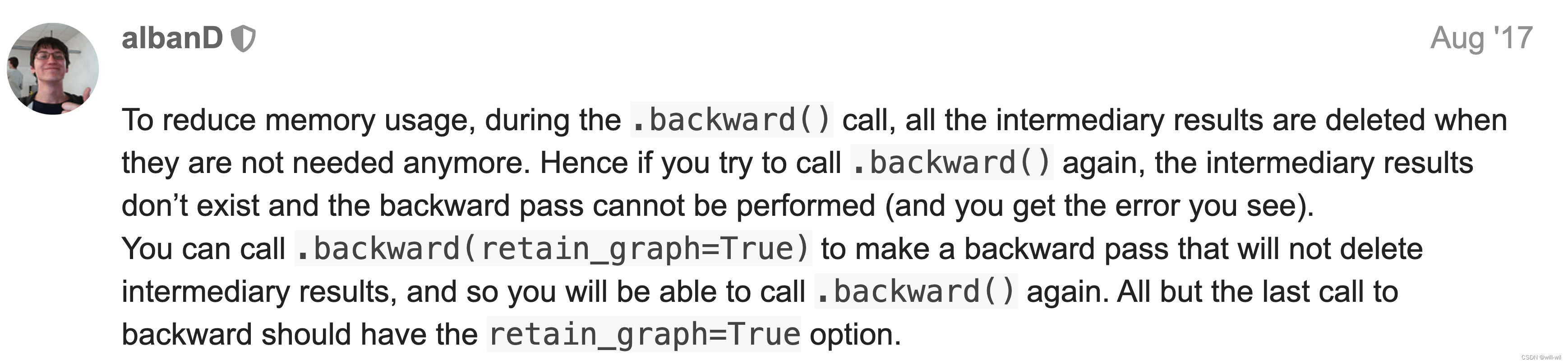

reason:

To reduce GPU memory usage, PyTorch automatically frees the intermediate results saved in the computation graph once backward() has been called. If backward() is then called a second time on the same graph, those intermediate results no longer exist, which causes the error. Passing retain_graph=True to backward() keeps the intermediate results.
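As a minimal sketch of what retain_graph=True does, the snippet below calls backward() twice on the same graph. The tensor names and values are made up for illustration. Note that gradients accumulate in .grad across calls, so the second call doubles the stored gradient.

```python
import torch

x = torch.ones(3, requires_grad=True)
y = (x * x).sum()  # the multiplication saves x for the backward pass

# First backward: keep the saved intermediate results alive.
y.backward(retain_graph=True)
first = x.grad.clone()   # dy/dx = 2 * x

# Second backward on the same graph: works only because the graph was retained.
# Without retain_graph=True above, this call would raise the RuntimeError.
y.backward()
second = x.grad.clone()  # gradients accumulate, so this is 2 * (2 * x)
```

Retaining the graph costs memory, so it is usually worth checking first whether the second backward through the same graph is really intended.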

Wait a minute, this is not intuitive. Isn't a new graph built every time the model runs a forward pass? Someone asked exactly this question in the comment section.

The key point is "perform some computation just before the loop": the computation is done outside the loop, so its part of the graph is built only once and shared by every iteration. The first backward() frees the intermediate results of that shared part, and the forward pass inside the loop does not rebuild it. The second backward() therefore finds those intermediate results missing and raises the error.

import torch

a = torch.rand(3, 3, requires_grad=True)

# b is computed once, outside the loop, so this part of the graph is shared
# by every iteration and makes the second backward fail!
b = a * a

for i in range(10):
    d = b * b
    res = d.sum()
    # The first call here works, but the second raises the RuntimeError.
    res.backward()

### Running this raises an error

RuntimeError: Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.

### Changing the code to the following form solves the problem

import torch

# a and b are now created inside the loop instead of once before it:
# a = torch.rand(3, 3, requires_grad=True)
# b = a * a

for i in range(10):
    a = torch.rand(3, 3, requires_grad=True)
    # The whole graph is rebuilt on every iteration, so nothing is shared.
    b = a * a
    d = b * b
    res = d.sum()
    # Every call works now, because each one runs on a fresh graph.
    res.backward()
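If a has to persist across iterations, as a model parameter would, a variant of the same idea is to keep a outside the loop and move only the computation of b inside it. This is a minimal sketch, not the original author's code. It assumes gradients should not accumulate between iterations, hence the reset of a.grad.

```python
import torch

# The leaf tensor is created once and reused, like a model parameter.
a = torch.rand(3, 3, requires_grad=True)

for i in range(10):
    a.grad = None   # assumption: start each iteration with a clean gradient
    b = a * a       # rebuilt every iteration, so no graph node is shared
    d = b * b
    res = d.sum()
    res.backward()  # succeeds on every iteration
```

Only leaf tensors such as a are safe to share across iterations. Anything computed from them belongs inside the loop if it needs gradients.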

another solution:

If part of the model is not trained by backpropagation, such as the momentum encoder in MoCo, you can try wrapping the code that produces that part's tensors in torch.no_grad(). No graph is recorded for those tensors, which can also solve this problem.
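A rough sketch of this idea follows. The two nn.Linear layers are hypothetical stand-ins for a trained encoder and a non-trained encoder, and the loss is arbitrary. None of this is taken from MoCo itself.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
query_encoder = nn.Linear(4, 2)  # hypothetical: trained by backpropagation
key_encoder = nn.Linear(4, 2)    # hypothetical: not trained by backpropagation

x = torch.rand(8, 4)

# Computed once before the loop. Under no_grad() no graph is recorded,
# so there are no saved intermediate results to be freed later.
with torch.no_grad():
    k = key_encoder(x)

for step in range(3):
    q = query_encoder(x)          # graph for q is rebuilt every iteration
    loss = ((q - k) ** 2).mean()  # k is treated as a constant
    query_encoder.zero_grad()
    loss.backward()               # never reaches key_encoder
```

Calling .detach() on the output of the non-trained part should have a similar effect on the error, although the forward pass would then still record a graph for that part.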