1. Scraping Starbucks menu data with bs4

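```python
import urllib.request

from bs4 import BeautifulSoup

url = 'https://www.starbucks.com.cn/menu/'

# Download the menu page and decode the raw bytes as UTF-8
response = urllib.request.urlopen(url)
content = response.read().decode('utf-8')

# Parse the HTML with the lxml parser (requires `pip install lxml`)
soup = BeautifulSoup(content, 'lxml')

# CSS selector: every <strong> nested inside a <ul> whose class attribute
# is exactly "grid padded-3 product"; these hold the product names
name_list = soup.select('ul[class="grid padded-3 product"] strong')

for name in name_list:
    print(name.get_text())
```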
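The selector does all the work in this script. As a sketch of what it matches, the version below swaps bs4 for the standard library's `html.parser` (only so it runs without third-party packages) and applies the same rule to a made-up fragment. `SelectorSketch` and `SAMPLE` are hypothetical names, and the HTML is not Starbucks' actual markup.

```python
from html.parser import HTMLParser


class SelectorSketch(HTMLParser):
    """Mimic the CSS selector  ul[class="<target>"] strong  with the stdlib parser.

    [class="..."] compares the whole attribute string, and the space before
    `strong` is a descendant combinator, so <strong> may sit at any depth.
    Assumes <strong> elements are not nested inside each other.
    """

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.ul_matches = []   # stack: one bool per currently open <ul>
        self.collecting = False
        self.buffer = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag == 'ul':
            # Exact string comparison, just like [class="..."]
            self.ul_matches.append(dict(attrs).get('class') == self.target_class)
        elif tag == 'strong' and any(self.ul_matches):
            self.collecting = True
            self.buffer = []

    def handle_endtag(self, tag):
        if tag == 'ul' and self.ul_matches:
            self.ul_matches.pop()
        elif tag == 'strong' and self.collecting:
            self.results.append(''.join(self.buffer).strip())
            self.collecting = False

    def handle_data(self, data):
        if self.collecting:
            self.buffer.append(data)


# Hypothetical markup, NOT the real Starbucks page
SAMPLE = """
<ul class="grid padded-3 product">
  <li><a href="#"><strong>Latte</strong></a></li>
  <li><a href="#"><strong>Mocha</strong></a></li>
</ul>
<ul class="grid padded-3 product featured">
  <li><strong>Not matched</strong></li>
</ul>
<strong>Outside any list</strong>
"""

parser = SelectorSketch('grid padded-3 product')
parser.feed(SAMPLE)
print(parser.results)  # ['Latte', 'Mocha']
```

Because `[class="..."]` is an exact string match, the second list is skipped even though it carries all three classes. In CSS, `ul.grid.padded-3.product strong` would match both lists regardless of class order or extra classes.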

2. Downloading images from Chinaz webmaster material (sc.chinaz.com) with lxml and XPath

import urllib.request

from lxml import etree

def create_request(page):

if(page == 1):

url = 'https://sc.chinaz.com/tupian/qinglvtupian.html'

else:

url = 'https://sc.chinaz.com/tupian/qinglvtupian_' + str(page) + '.html'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36',

}

request = urllib.request.Request(url = url, headers = headers)

return request

def get_content(request):

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

return content

def down_load(content):

tree = etree.HTML(content)

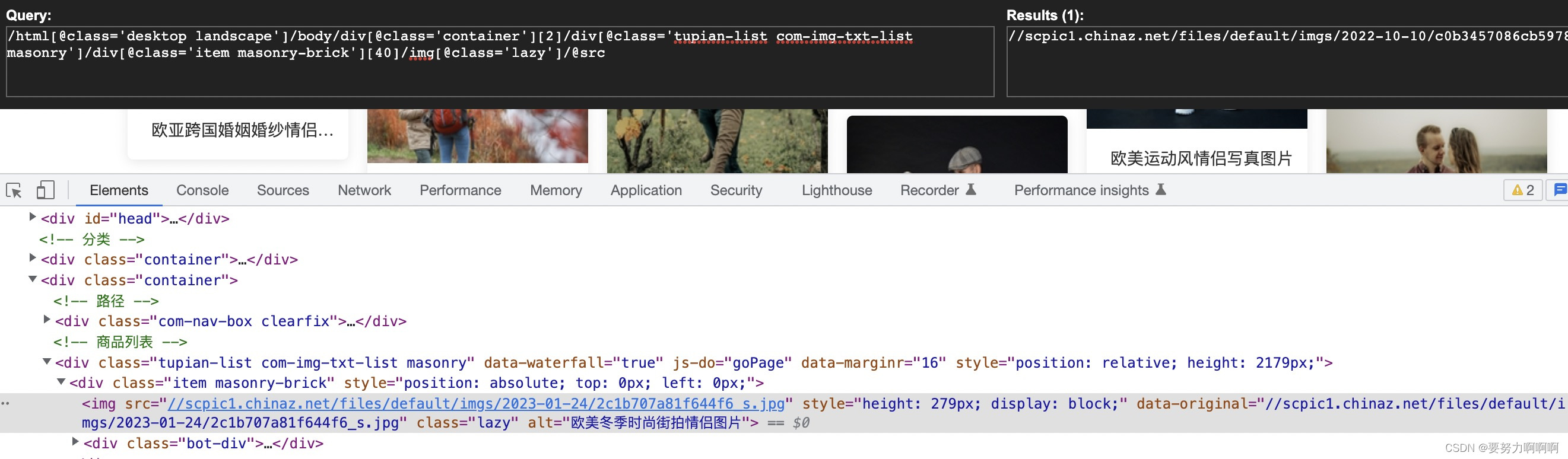

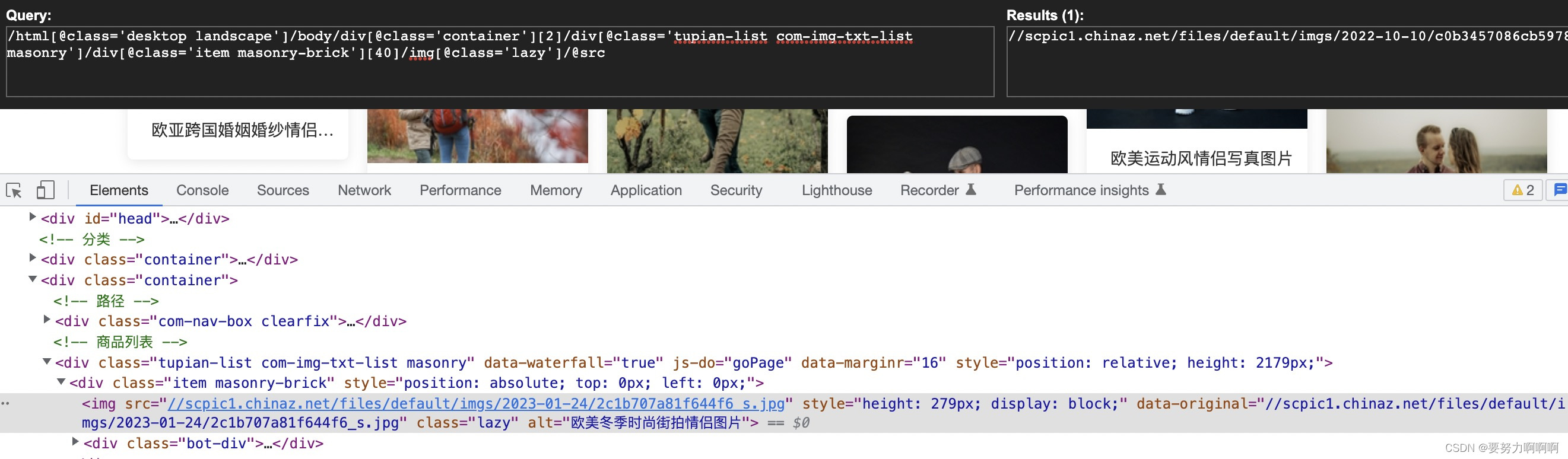

name_list = tree.xpath('//div[@class="container"]//div/img/@alt')

src_list = tree.xpath('//div[@class="container"]//div/img/@data-original')

print(name_list)

print(src_list)

for i in range(len(name_list)):

name = name_list[i]

src = src_list[i]

url = 'https:' + src

urllib.request.urlretrieve(url=url,filename='./loveImg/' + name + '.jpg')

if __name__ == '__main__':

start_page = 1

end_page = 3

for page in range(start_page,end_page+1):

request = create_request(page)

content = get_content(request)

down_load(content)
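The two pieces of logic worth checking without hitting the network are the page-URL rule in `create_request` and the way `down_load` turns each `alt`/`data-original` pair into a URL and a file path. The sketch below isolates both as pure functions. `page_url`, `safe_filename` and `plan_downloads` are hypothetical helpers, and `safe_filename` is an added assumption: it guards against alt texts containing characters such as `/` that would otherwise break the output path.

```python
BASE = 'https://sc.chinaz.com/tupian/qinglvtupian'


def page_url(page):
    # Same rule as create_request: page 1 has no suffix, page n uses _n
    if page == 1:
        return BASE + '.html'
    return BASE + '_' + str(page) + '.html'


def safe_filename(name):
    # Hypothetical helper: replace characters that are illegal in Windows
    # file names (and '/' on Unix) so an alt text cannot break the path
    return ''.join('_' if ch in '\\/:*?"<>|' else ch for ch in name)


def plan_downloads(name_list, src_list, folder='./loveImg'):
    # Pair each alt text with its protocol-relative data-original value and
    # return (absolute URL, local path) tuples without downloading anything
    return [
        ('https:' + src, folder + '/' + safe_filename(name) + '.jpg')
        for name, src in zip(name_list, src_list)
    ]


print(page_url(1))  # https://sc.chinaz.com/tupian/qinglvtupian.html
print(page_url(2))  # https://sc.chinaz.com/tupian/qinglvtupian_2.html
# Placeholder host, not a real image URL from the site
print(plan_downloads(['a/b'], ['//example.com/x.jpg']))
# [('https://example.com/x.jpg', './loveImg/a_b.jpg')]
```

Keeping this logic in pure functions means it can be tested offline, while `get_content` and `urlretrieve` stay the only parts that touch the network.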