
1. Background

When training a neural network, the compile step always asks us to choose three arguments: loss, metrics, and optimizer. What does each of them mean?

Loss function: how the network measures its performance on the training data, and therefore how it knows whether it is moving in the right direction.

Optimizer: the mechanism that updates the network based on the training data and the loss function.

Metrics: the indicators to monitor during training and testing.

2. Loss

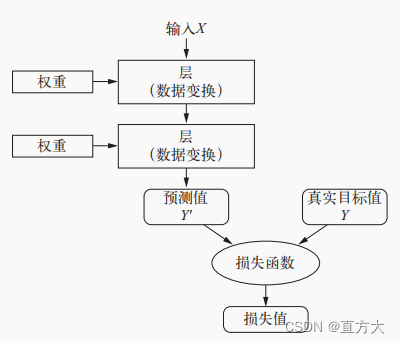

To control something, you first need to be able to observe it. To control the output of a neural network, you need to be able to measure how far that output is from the expected value. This is the job of the network's loss function, also called the objective function.

The loss function takes the network's prediction and the true target (the result you want the network to output) as input, and computes a distance value that measures how well the network did on this example.
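As a concrete illustration of "prediction and target in, distance value out", here is a minimal pure-Python sketch of two common regression losses. The function names `mse` and `mae` and the sample numbers are chosen for this example only; a real framework computes the same quantities on tensors.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: average of the absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


targets = [3.0, 5.0, 7.0]      # what we want the network to output
good_preds = [3.0, 5.0, 6.0]   # close to the targets
bad_preds = [0.0, 9.0, 2.0]    # far from the targets

# The further the predictions are from the targets, the larger the value.
print("mse:", mse(targets, good_preds), mse(targets, bad_preds))
print("mae:", mae(targets, good_preds), mae(targets, bad_preds))
```

Both functions return a single number per batch, and that single number is what training tries to make smaller.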

3. Metrics

Metrics are the indicators monitored during training and testing. They are passed as the metrics argument of the compile step.

For classification tasks:

metrics=['accuracy']

For regression tasks:

metrics=['mae']

metrics=['mse']

4. Comparison

| Loss | Metric |
|---|---|
| The objective the network optimizes. It takes part in the computation that updates the weights W. | Only an indicator for evaluating the network's performance. It makes the effect of the algorithm easy to read and does not take part in the optimization process. |
| Required for training. | Optional; training works without it. |
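The difference in roles can be seen with a small pure-Python sketch. It assumes a binary classification setting, with binary cross-entropy as the loss and a 0.5-threshold accuracy as the metric; both helper functions are written here for illustration and are not taken from any library.

```python
import math


def binary_crossentropy(y_true, y_prob):
    """Average negative log-likelihood of the true labels."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded prediction matches the label."""
    hits = sum(1 for t, p in zip(y_true, y_prob) if (p >= threshold) == (t == 1))
    return hits / len(y_true)


labels = [1, 0, 1, 0]
confident = [0.95, 0.05, 0.90, 0.10]  # correct, with high confidence
hesitant = [0.55, 0.45, 0.60, 0.40]   # correct, but only barely

for name, probs in [("confident", confident), ("hesitant", hesitant)]:
    print(name, accuracy(labels, probs), round(binary_crossentropy(labels, probs), 4))
```

Both sets of predictions reach the same accuracy, yet their loss values differ. The metric is easy for a person to read, while the loss still carries a signal that can be used to improve the weights.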

5. When loss and metrics are both set to mse, why are the displayed values different?

Careful readers will notice that when loss and metrics are both defined as mse, the two displayed values differ. Since both use the same calculation, why are the numbers not identical?

The small difference comes from when each value is computed. The metric is an evaluation of the batch made after the model has finished training on that batch, whereas the displayed loss is the mean of the losses produced by the samples of that batch while training was in progress.

In one sentence: the model changes while it trains on a batch, so the loss is a running mean of the per-sample losses gathered during training, while the metric is the result of evaluating that batch's data after the batch has ended.
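The timing effect described above can be reproduced in a toy setting. The sketch below is not how any framework produces its logs; it assumes a hypothetical one-weight model y = w * x and simply evaluates the same mse formula on the same batch before and after one weight update.

```python
def batch_mse(w, xs, ys):
    """MSE of the one-weight model y = w * x on a batch."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def sgd_step(w, xs, ys, lr=0.05):
    """One gradient-descent update of w using the gradient of the batch MSE."""
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    return w - lr * grad


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # the true relation is y = 2x
w = 0.0

loss_during_training = batch_mse(w, xs, ys)  # computed before the update
w = sgd_step(w, xs, ys)
mse_after_update = batch_mse(w, xs, ys)      # same formula, updated weight

print(loss_during_training, mse_after_update)
```

The formula and the data are identical in both calls; only the moment of evaluation differs, and that alone is enough to produce two different numbers.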

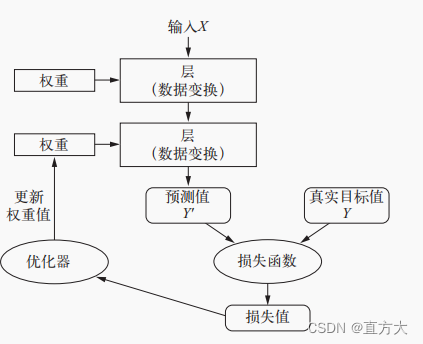

6. Optimizer

The basic technique of deep learning is to use the loss value (the distance computed by the loss function) as a feedback signal and adjust the weights slightly in a direction that lowers the loss for the current example (as shown in the figure). This adjustment is the job of the optimizer, which implements the so-called backpropagation algorithm, the core algorithm of deep learning.
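The feedback loop can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions: a hypothetical model with a single weight, plain gradient descent as the optimizer, and a gradient written out by hand. Real networks have many weights and obtain their gradients through backpropagation.

```python
def loss_and_grad(w, xs, ys):
    """Return the MSE of y = w * x and its derivative with respect to w."""
    n = len(xs)
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
    return loss, grad


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # the true relation is y = 2x
w, learning_rate = 0.0, 0.05
history = []

for step in range(20):
    loss, grad = loss_and_grad(w, xs, ys)  # forward pass and feedback signal
    history.append(loss)
    w -= learning_rate * grad              # the optimizer's weight update

print("final weight:", round(w, 3))
print("first loss:", history[0], "last loss:", history[-1])
```

Each pass through the loop measures the loss, turns it into a gradient, and moves the weight a small step against that gradient, so the recorded loss shrinks as the weight approaches 2.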

7. References

François Chollet (author of Keras), "Deep Learning with Python"