Details of DDE (digital detail enhancement) processing

-

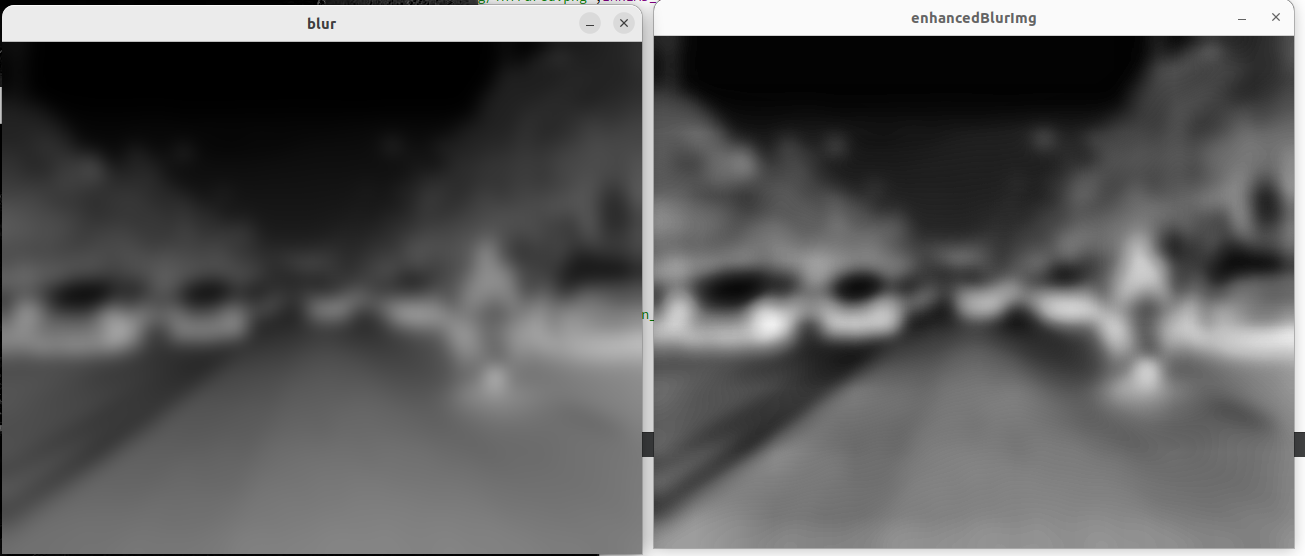

Separation of background and detail layers: Using special filters, the image is separated into background and detail layers. Background layers usually contain low-frequency information, while detail layers contain high-frequency information.

Grayscale enhancement on the background layer: apply a grayscale enhancement algorithm, such as contrast stretching or adaptive histogram equalization, to improve the contrast and visual appearance of the background layer.

Detail enhancement and noise suppression on the detail layer: the detail layer carries the fine structure of the image, so nonlinear processing, such as sharpening or edge enhancement, can amplify real details while suppressing noise.

Dynamic range adjustment: based on the overall dynamic range of the image, adjust and compress the dynamic ranges of the background and detail layers. This fits the high-dynamic-range source data into the range of an 8-bit output image.

Output composition: recombine the enhanced background and detail layers into an 8-bit output image. The result shows both the large temperature differences across the scene and the local detail of targets.

In short, DDE extracts and highlights image detail in three stages: filter-based layer separation, separate processing of the background and detail layers, and dynamic range adjustment. It then fits the result into an 8-bit output image that preserves both large temperature differences and the fine detail of targets.

Separating the background and detail layers:

Grayscale enhancement of the background layer:

Effect of the two methods:

Detail enhancement on the detail layer:

Result comparison:

Adaptive histogram equalization applied directly to the input image:

Which image features does deep learning learn?

Edge features: locations where the grayscale or color changes sharply.

Texture features: repeated local structures in the image.

Shape features: object outlines and the geometric properties of shapes.

Color features: color distributions and color histograms in various color spaces.

Spatial structure features: the spatial relationships between objects, including their relative position, size, and orientation.

Hierarchical features: features ranging from low-level local features to high-level semantic features.

Personal summary:

I used DDE to enhance the data for an infrared target detection task.

Personally, I feel that DDE increases the separation between background and foreground, improves image contrast, and keeps edge and texture features very clear.

Plain histogram equalization, however, looks somewhat overexposed, and its edge and texture features are not clear.

After adaptive histogram equalization, the edge and texture features are also fairly clear.

To the naked eye, the DDE output looks more pleasing than adaptive histogram equalization, but whether DDE actually works better still has to be verified by training a detection model on both!

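Code:

```cpp
#include <opencv2/opencv.hpp>
#include <iostream>

using namespace cv;

int main()
{
    // Read the input image as 8-bit grayscale
    Mat inputImage = imread("/home/jason/work/01-img/infrared.png", IMREAD_GRAYSCALE);
    if (inputImage.empty()) {
        std::cerr << "Could not read the input image" << std::endl;
        return 1;
    }
    imshow("input", inputImage);

    // -------------------------
    // DDE detail enhancement
    // -------------------------

    // Step 1: separate low-frequency (background) and high-frequency (detail) information
    Mat blurImg, detailImg;
    GaussianBlur(inputImage, blurImg, Size(0, 0), 10);
    // Note: both images are CV_8U, so this subtraction saturates negative
    // differences to 0 and keeps only pixels brighter than the background.
    detailImg = inputImage - blurImg;
    imshow("blur", blurImg);
    imshow("detail", detailImg);

    // Step 2: apply a suitable grayscale enhancement algorithm to the low-frequency layer
    Mat enhancedBlurImg, enhancedDetailImg;
    // Alternative: linear contrast stretch
    // double min_val, max_val;
    // cv::minMaxLoc(blurImg, &min_val, &max_val);
    // cv::convertScaleAbs(blurImg, enhancedBlurImg, 255.0 / (max_val - min_val), -255.0 * min_val / (max_val - min_val));
    cv::Ptr<cv::CLAHE> clahe = cv::createCLAHE(2.0, cv::Size(8, 8)); // CLAHE (adaptive histogram equalization) for grayscale enhancement
    clahe->apply(blurImg, enhancedBlurImg);
    imshow("enhancedBlurImg", enhancedBlurImg);

    Mat enhancedBlurImg_blur;
    cv::bilateralFilter(enhancedBlurImg, enhancedBlurImg_blur, 9, 75, 75); // bilateral filter for noise removal
    imshow("enhancedBlurImg-blur", enhancedBlurImg_blur); // displayed only; step 4 uses enhancedBlurImg

    // Step 3: apply detail enhancement and noise suppression to the high-frequency layer
    cv::Ptr<cv::CLAHE> clahe_ = cv::createCLAHE(); // CLAHE (local contrast enhancement) to boost details
    clahe_->setClipLimit(4.0);
    clahe_->apply(detailImg, enhancedDetailImg);
    imshow("enhancedDetailImg", enhancedDetailImg);

    // Optional NL-means (non-local means) denoising to suppress noise.
    // Arguments: h = 10, templateWindowSize = 7, searchWindowSize = 21 (window sizes must be odd)
    // Mat enhancedDetailImg_blur;
    // cv::fastNlMeansDenoising(enhancedDetailImg, enhancedDetailImg_blur, 10, 7, 21);
    // imshow("enhancedDetailImg_blur", enhancedDetailImg_blur);

    // Step 4: compose the final output image
    Mat output;
    cv::addWeighted(enhancedBlurImg, 0.3, enhancedDetailImg, 0.7, 0, output);
    imshow("output", output);

    // -------------------------
    // Compare the DDE result with adaptive and plain histogram equalization
    // -------------------------
    Mat out2;
    cv::Ptr<cv::CLAHE> clahe2 = cv::createCLAHE(2.0, cv::Size(8, 8)); // CLAHE applied to the whole input image
    clahe2->apply(inputImage, out2);
    imshow("Adaptive histogram equalization", out2);

    Mat out3;
    cv::equalizeHist(inputImage, out3);
    imshow("Histogram equalization", out3);

    // Wait for a key press, then exit
    waitKey(0);
    return 0;
}
```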