1. Overview

This document builds on "Apache Doris database construction (1)" and covers the preparation needed to build, upgrade, and expand a Doris cluster.

2. Software and hardware requirements

As an open source MPP-architecture OLAP database, Doris can run on most mainstream commodity servers. To take full advantage of the concurrency of the MPP architecture and the high-availability features of Doris, we recommend that deployments meet the following requirements:

Linux operating system version requirements

| Linux system | Version |
|---|---|
| CentOS | 7.1 and above |
| Ubuntu | 16.04 and above |

Software Requirements

| Software | Version |
|---|---|
| Java | 1.8 and above |
| GCC | 4.8.2 and above |

Operating System Installation Requirements

Raise the maximum number of open file handles by editing /etc/security/limits.conf and adding the two nofile lines shown below:

vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536
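After logging in again, the new limit can be verified from the shell. The following is a minimal sketch; `nofile_ok` is a hypothetical helper name introduced here for illustration, not something shipped with Doris.

```shell
# nofile_ok LIMIT: succeed when LIMIT (as printed by `ulimit -n`) is at
# least the 65536 configured in limits.conf. Hypothetical helper.
nofile_ok() {
    limit="$1"
    if [ "$limit" = "unlimited" ]; then
        return 0
    fi
    [ "$limit" -ge 65536 ]
}

# Check the soft limit of the current shell session.
if nofile_ok "$(ulimit -n)"; then
    echo "open file limit OK"
else
    echo "open file limit too low: $(ulimit -n)"
fi
```

Changes to limits.conf only apply to sessions started after the edit, so run the check in a fresh login.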

Clock synchronization

Doris metadata requires the clock skew between machines to stay under 5000 ms, so all machines in the cluster must synchronize their clocks. Otherwise, clock drift can make metadata inconsistent and cause service anomalies.
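A rough way to compare two machines is to read the Unix time on each and check the difference. The sketch below assumes SSH access between nodes and only has one-second resolution, so it is a coarse sanity check rather than a replacement for a time synchronization service. `clock_skew_ok` is a hypothetical helper name.

```shell
# clock_skew_ok LOCAL_EPOCH REMOTE_EPOCH: succeed when two Unix timestamps
# (in seconds) differ by at most 5 seconds. Hypothetical helper.
clock_skew_ok() {
    diff=$(( $1 - $2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))
    fi
    [ "$diff" -le 5 ]
}

# Example use (assumes passwordless SSH to the other node):
#   clock_skew_ok "$(date +%s)" "$(ssh root@192.168.50.189 date +%s)" \
#       && echo "clock skew within 5 s" || echo "clock skew too large"
```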

Disable the swap partition

The Linux swap partition causes serious performance problems for Doris, so swap must be disabled before installation.
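One common way to do this is `swapoff -a` for the running system, plus commenting out the swap entries in /etc/fstab so that swap stays off after a reboot. The sketch below wraps the fstab edit in a hypothetical helper, `comment_out_swap`. It assumes a sed that supports `-E` and `-i.bak`, and it keeps a `.bak` copy of the file.

```shell
# comment_out_swap FILE: prefix every active swap entry in an fstab-style
# file with '#'. A backup is written to FILE.bak. Hypothetical helper.
comment_out_swap() {
    sed -i.bak -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# On each node, as root:
#   swapoff -a                     # turn swap off immediately
#   comment_out_swap /etc/fstab    # keep it off after reboot
#   free -m                        # the Swap line should now show 0
```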

Linux file system

Both ext4 and xfs file systems are supported.

Development and testing environment

| Module | CPU | Memory | Disk | Network | Number of instances |
|---|---|---|---|---|---|
| Frontend | 8 cores+ | 8GB+ | SSD or SATA, 10GB+ * | Gigabit Ethernet | 1 |
| Backend | 8 cores+ | 16GB+ | SSD or SATA, 50GB+ * | Gigabit Ethernet | 1-3 * |

Production Environment

| Module | CPU | Memory | Disk | Network | Number of instances (minimum requirement) |
|---|---|---|---|---|---|
| Frontend | 16 cores+ | 64GB+ | SSD or RAID card, 100GB+ * | 10 Gigabit NIC | 1-3 * |
| Backend | 16 cores+ | 64GB+ | SSD or SATA, 100GB+ * | 10 Gigabit NIC | 3 * |

Note 1:

- The disk space of FE is mainly used to store metadata, including logs and images. It usually ranges from a few hundred MB to several GB.

- The disk space of BE is mainly used to store user data. The total disk space is calculated as the total amount of user data * 3 (3 replicas), plus an additional 40% reserved for background compaction and some intermediate data.

- Multiple BE instances can be deployed on one machine, but only one FE. If 3 replicas are required, at least 3 machines are needed, each deploying one BE instance (rather than 1 machine deploying 3 BE instances). The clocks of the servers hosting multiple FEs must be consistent (clock deviations of up to 5 seconds are allowed).

- The test environment can also run with only one BE. In a production environment, the number of BE instances directly determines the overall query latency.

- Swap must be disabled on all deployment nodes.
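The BE sizing rule can be turned into a quick estimate. The sketch below reads the rule as "replicated size plus 40% on top"; that reading is an assumption, since the note could also mean that 40% of the final capacity is kept free. `be_disk_gb` is a hypothetical helper name.

```shell
# be_disk_gb DATA_GB [REPLICAS]: estimate the total BE disk space in GB as
# DATA_GB * REPLICAS * 1.4 (integer arithmetic). REPLICAS defaults to 3.
# Hypothetical helper; the 1.4 factor is one reading of the 40% reserve.
be_disk_gb() {
    data_gb="$1"
    replicas="${2:-3}"
    echo $(( data_gb * replicas * 140 / 100 ))
}

be_disk_gb 1000    # 1000 GB of user data, 3 replicas: prints 4200
```

The result is the total across all BE nodes, so divide it by the number of BE machines to size each one.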

Note 2: Number of FE nodes

- FE roles are divided into Follower and Observer. (Leader is a role elected within the Follower group; both are referred to as Follower below.)

- There must be at least 1 FE node (1 Follower). Deploying 1 Follower and 1 Observer provides read high availability. Deploying 3 Followers provides read and write high availability (HA).

- The number of Followers must be odd; the number of Observers is arbitrary.

- Based on past experience, when cluster availability requirements are high (for example, when providing online services), deploy 3 Followers and 1-3 Observers. For offline workloads, 1 Follower and 1-3 Observers are recommended.

- We usually recommend about 10 to 100 machines to get the full performance of Doris (3 of them running FE for HA, the rest running BE).

- The performance of Doris is positively correlated with the number and configuration of nodes. Doris can still run smoothly on as few as 4 machines with a lower configuration (one FE and three BEs, with one BE machine also hosting an Observer FE to provide metadata backup).

- If FE and BE share a machine, watch for resource contention and make sure the metadata directory and the data directory are on different disks.
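The odd-number rule is easier to see with a small calculation. The sketch below assumes, as the rule suggests, that metadata writes need a majority of Followers to be alive. `follower_fault_tolerance` is a hypothetical helper name.

```shell
# follower_fault_tolerance N: number of Followers that can fail while a
# majority of the N Followers is still alive. Assumes majority-based writes.
follower_fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 2 3 4 5; do
    echo "$n Follower(s) tolerate $(follower_fault_tolerance "$n") failure(s)"
done
```

Under this assumption, going from 3 to 4 Followers does not raise the number of tolerated failures, which is why an even count adds cost without adding availability.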

Broker deployment

Broker is a process used to access external data sources such as HDFS. Usually, deploying one Broker instance on each machine is sufficient.

Network requirements

Doris instances communicate directly over the network. The table below lists all required ports.

| Instance name | Port name | Default port | Communication direction | Description |
|---|---|---|---|---|
| BE | be_port | 9060 | FE --> BE | The port of the thrift server on BE, used to receive requests from FE |
| BE | webserver_port | 8040 | BE <--> BE | The port of the http server on BE |
| BE | heartbeat_service_port | 9050 | FE --> BE | Heartbeat service port (thrift) on BE, used to receive heartbeats from FE |
| BE | brpc_port | 8060 | FE <--> BE, BE <--> BE | brpc port on BE, used for communication between BEs |
| FE | http_port | 8030 | FE <--> FE, user <--> FE | http server port on FE |
| FE | rpc_port | 9020 | BE --> FE, FE <--> FE | The thrift server port on FE; the configuration must be the same on every FE |
| FE | query_port | 9030 | user <--> FE | mysql server port on FE |
| FE | edit_log_port | 9010 | FE <--> FE | Port used for bdbje communication between FEs |
| Broker | broker_ipc_port | 8000 | FE --> Broker, BE --> Broker | The thrift server port on Broker, used to receive requests |

Note:

When deploying multiple FE instances, ensure that the http_port configuration is the same on every FE.

Before deployment, make sure each port is reachable in the direction listed in the table.
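Once the instances are running, port reachability can be spot-checked from another node. The sketch below uses the default ports from the table and assumes the `nc` (netcat) utility is installed with support for `-z` and `-w`. `doris_ports` and `check_ports` are hypothetical helper names.

```shell
# doris_ports ROLE: print the default ports for fe, be or broker.
doris_ports() {
    case "$1" in
        fe)     echo "8030 9020 9030 9010" ;;
        be)     echo "9060 8040 9050 8060" ;;
        broker) echo "8000" ;;
        *)      return 1 ;;
    esac
}

# check_ports HOST ROLE: report which default ports accept TCP connections.
# Assumes nc is available; -z probes without sending data, -w 2 waits 2 s.
check_ports() {
    for port in $(doris_ports "$2"); do
        if nc -z -w 2 "$1" "$port" 2>/dev/null; then
            echo "$1:$port open"
        else
            echo "$1:$port closed"
        fi
    done
}

# Example: check_ports 192.168.50.190 fe
```

A port only shows as open while the corresponding process is listening, so a closed result before startup says nothing about the firewall.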

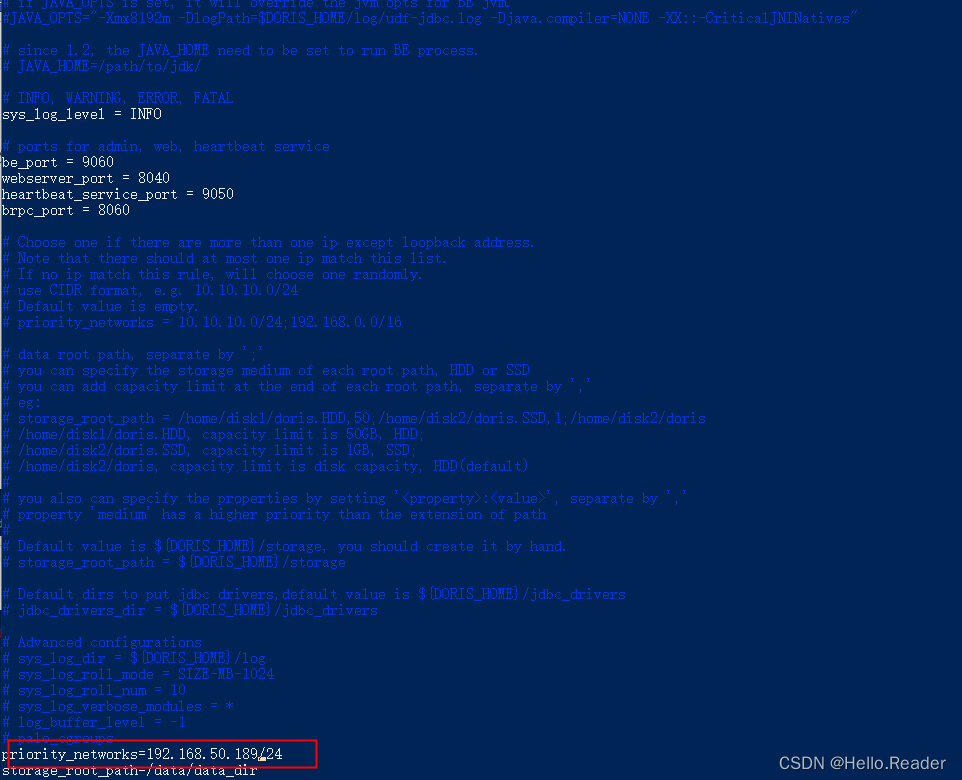

IP binding

A host may have several IPs, either because it has multiple network cards or because tools such as Docker create virtual network cards. Doris currently cannot identify the usable IP automatically. Therefore, when the deployment host has multiple IPs, the priority_networks configuration item must be used to specify the correct one.

priority_networks applies to both FE and BE and is written in fe.conf and be.conf. It tells the process which IP to bind to when FE or BE starts. For example:

priority_networks=192.168.50.190/24

The value is in CIDR notation. FE or BE looks for a local IP that matches this configuration item and uses it as its own address.
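To see which addresses a CIDR value covers, the match can be reproduced with shell arithmetic. This is only an illustration of CIDR matching for IPv4, not the code Doris itself runs. `ip_to_int` and `in_cidr` are hypothetical helper names.

```shell
# ip_to_int A.B.C.D: print an IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs="$IFS"
    IFS=.
    set -- $1          # split the address on dots into $1..$4
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr IP CIDR: succeed when IP lies inside CIDR (e.g. 192.168.50.190/24).
in_cidr() {
    net="${2%/*}"      # part before the slash
    bits="${2#*/}"     # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for ip in 192.168.50.190 192.168.0.1; do
    if in_cidr "$ip" 192.168.50.190/24; then
        echo "$ip matches"
    else
        echo "$ip does not match"
    fi
done
```

With a /24 prefix only the first three octets are compared, so every 192.168.50.x address matches and 192.168.0.1 does not.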

Note: Configuring priority_networks and starting FE or BE only ensures that the process binds to the correct IP. When using the ADD BACKEND or ADD FRONTEND statement, you must also specify an IP that matches the priority_networks configuration; otherwise the cluster cannot be established. Example:

The BE is configured with: priority_networks=192.168.50.190/24

But ADD BACKEND is executed with a different IP:

ALTER SYSTEM ADD BACKEND "192.168.0.1:9050";

FE and BE will then be unable to communicate normally.

In this case, you must DROP the wrongly added BE and execute ADD BACKEND again with the correct IP.

The same applies to FE.

Broker currently neither has nor needs the priority_networks option. The Broker service binds to 0.0.0.0 by default. Just specify a correct, reachable Broker IP when executing ADD BROKER.

Table name case sensitivity setting

Doris table names are case-sensitive by default. If case-insensitive table names are required, this must be configured (the lower_case_table_names item in fe.conf) during cluster initialization. The case sensitivity of table names cannot be changed after cluster initialization is complete.

3. Cluster deployment

Environment planning

| Server name | Server IP | Role |
|---|---|---|
| node0 | 192.168.50.190 | FE, BE, Master |
| node1 | 192.168.50.189 | FE, BE |
| node2 | 192.168.50.188 | FE, BE |

First, distribute the Doris files to the other two servers with a command of the form scp -r <target file> root@<ip>:/data, then modify the IP address in the configuration on each server. The commands are as follows:

vim be.conf # on each of the other two servers, under the doris/be directory; the author's IP here is 192.168.50.189

mkdir -p /data/data_dir/ /data/doris-meta/ # create these directories on each of the other two servers

Adding BE nodes

Note: The other server is configured the same way, so it is not demonstrated here (its IP is 192.168.50.188).

After the modification is complete, use the following command to add the BE node to the cluster:

mysql -uroot -h 192.168.50.190 -P 9030 -p

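# the password is root

# alternatively, log in through a client tool

# after connecting to the FE with the MySQL client, run the following SQL to add the BEs to the cluster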

ALTER SYSTEM ADD BACKEND "192.168.50.188:9050";

ALTER SYSTEM ADD BACKEND "192.168.50.189:9050";

Go to the be directory on node1 and node2 and start the BE service on each with the following command:

./bin/start_be.sh --daemon

Note: If you are prompted with "Please set vm.max_map_count to be 2000000 under root using 'sysctl -w vm.max_map_count=2000000'", run the suggested sysctl -w vm.max_map_count=2000000 command as root and start the BE again.

Run SHOW PROC '/backends'; in the MySQL client: an Alive field of true indicates that the BE is healthy and has joined the cluster.
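With several BEs it can be quicker to count the live ones than to read each row. The sketch below filters the vertical (`\G`) output of the MySQL client and assumes each row prints its status as a line of the form `Alive: true`. `count_alive` is a hypothetical helper name.

```shell
# count_alive: read `SHOW PROC '/backends'\G` output on stdin and print the
# number of rows whose Alive field is true. Hypothetical helper.
count_alive() {
    grep -c -E '^[[:space:]]*Alive:[[:space:]]*true[[:space:]]*$'
}

# Example (prompts for the password):
#   mysql -uroot -h 192.168.50.190 -P 9030 -p \
#       -e "SHOW PROC '/backends'\G" | count_alive
```

For the three-node layout in this section the expected count is 3 once every BE has been started.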

Adding FE nodes

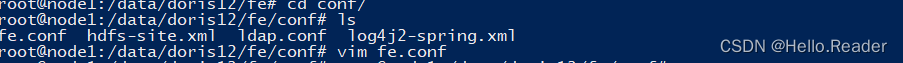

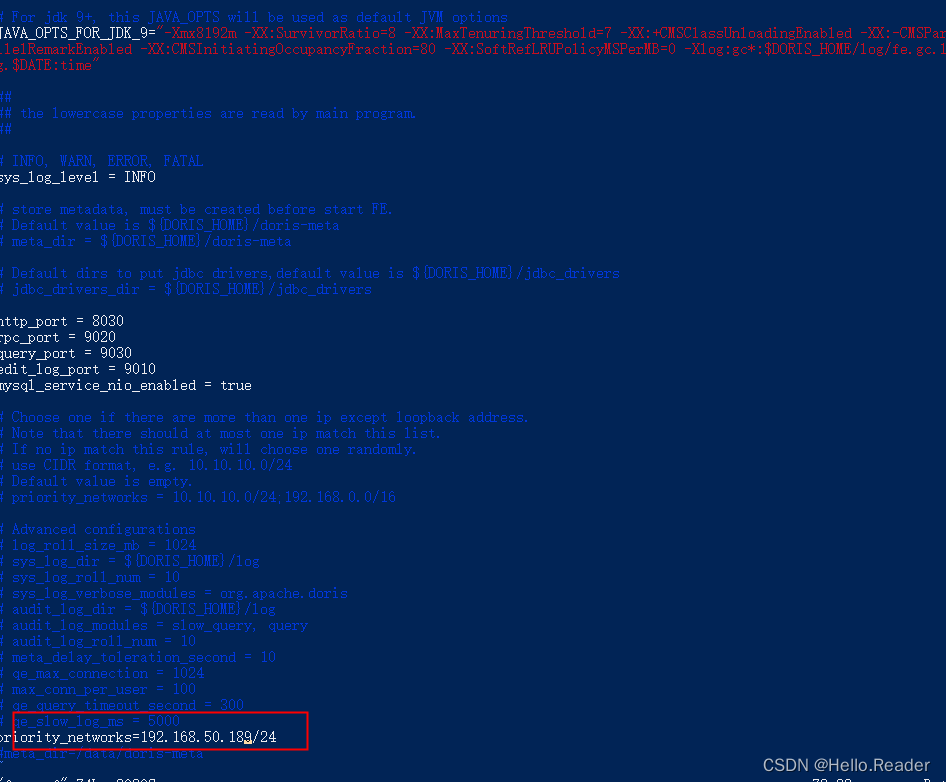

First go to the fe/conf directory of Doris and edit fe.conf with the following command to modify the IP:

vim fe.conf # on each of the other two servers, under the doris/fe directory; the author's IP here is 192.168.50.189

Note: The other server is configured the same way, so it is not demonstrated here (its IP is 192.168.50.188). The original IP, 192.168.50.190, was set up in "Apache Doris database construction (1)".

FE Notes:

1. The number of Follower FEs (including Master) must be an odd number. It is recommended to deploy up to 3 to form a high availability (HA) mode.

2. When FE is in a high-availability deployment (1 Master, 2 Followers), we recommend expanding the read service capability of FE by adding Observer FE. Of course, you can continue to increase Follower FE, but it is almost unnecessary.

3. Usually one FE node can handle 10-20 BE nodes. It is recommended that the total number of FE nodes be less than 10. Usually 3 can meet most needs.

4. The helper cannot point to the FE itself, but must point to one or more existing and running Master/Follower FEs.

Procedure

Start the FE service on node1 and node2 (the FE on the 192.168.50.190 machine was started earlier and holds the leader role). Special note: the --helper parameter is only required the first time a Follower or Observer is started.

# Syntax: ./bin/start_fe.sh --helper leader_fe_host:edit_log_port --daemon

./bin/start_fe.sh --helper 192.168.50.190:9010 --daemon

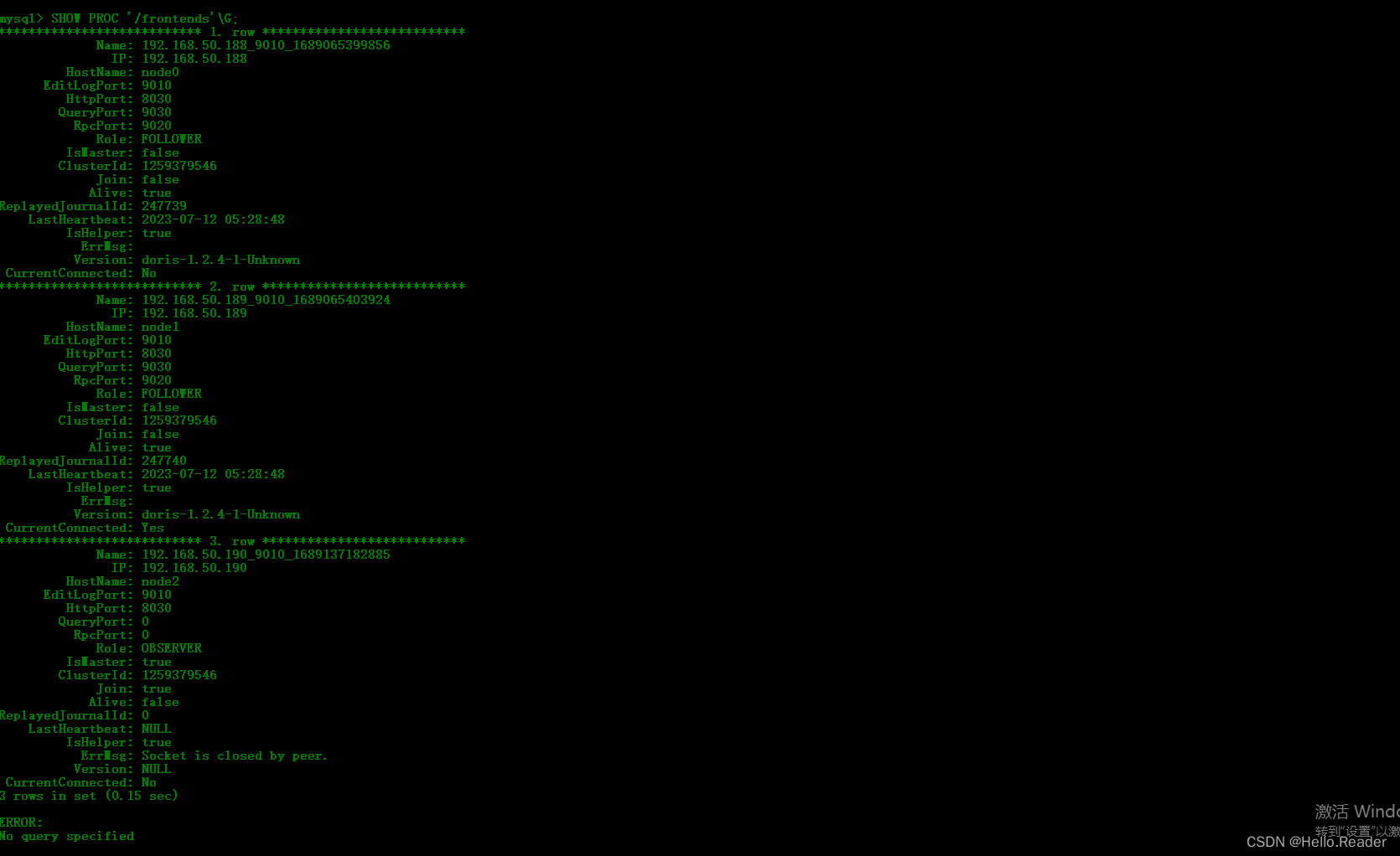

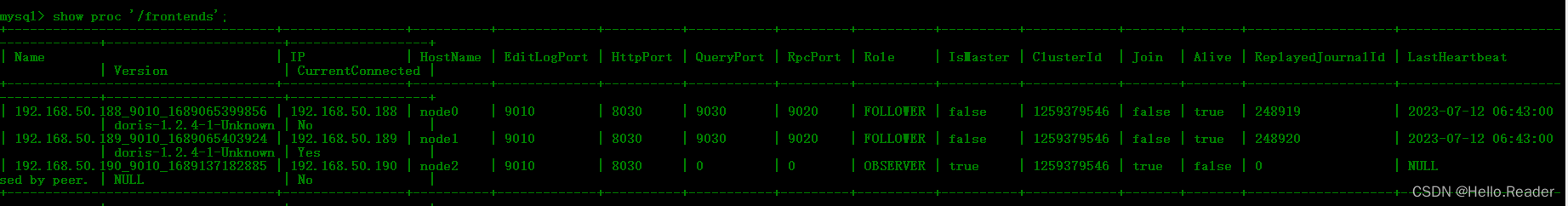

After the addition is complete, view the FE status with SHOW PROC '/frontends'\G; and check the Alive field: if it is true, the FE was added successfully.

Now that all three nodes are running an FE instance, use mysql-client to connect to an FE that is already part of the cluster and execute the SQL that registers the new FEs. Since we expect two Followers, with node2 changed to an OBSERVER, execute the following three commands in the SQL window:

# add the FEs

ALTER SYSTEM ADD FOLLOWER "192.168.50.189:9010";

ALTER SYSTEM ADD FOLLOWER "192.168.50.188:9010";

# before running the next statement, drop node2's existing entry: ALTER SYSTEM DROP FOLLOWER[OBSERVER] "ip:port"

ALTER SYSTEM ADD OBSERVER "192.168.50.188:9010";

View the FE instance again

show proc '/frontends';

Note: To add more FE nodes in the future, use the following SQL command:

ALTER SYSTEM ADD FOLLOWER "ip:port";

and to add more BE nodes, use:

ALTER SYSTEM ADD BACKEND "ip:port";

This concludes the walkthrough of building a Doris database cluster. If you have any questions, please contact the author. This section is based on version 1.2.1.4.