In recent years, driven by companies such as Google and Niantic, visual positioning has become increasingly important for AR applications. This is especially true for indoor navigation, where its accuracy can surpass that of traditional GPS solutions. To further improve the accuracy of visual positioning and visual map construction, Niantic Labs presented ACE (Accelerated Coordinate Encoding) at CVPR 2023 and claims that it makes visual positioning considerably more efficient.

Visual relocalization has in fact existed for decades. Traditional solutions build a map by detecting keypoints (such as corners and contours) in images and reconstructing a sparse 3D point cloud from them. During relocalization, they match keypoints in the query image against the points of the 3D map. They then use these matches to compute the camera's position, which aligns the 3D map with the image of the environment. Today, machine learning and neural networks are widely used in computer vision, often to detect better keypoints and to improve matching between images and maps.

Outperforms traditional DSAC* schemes

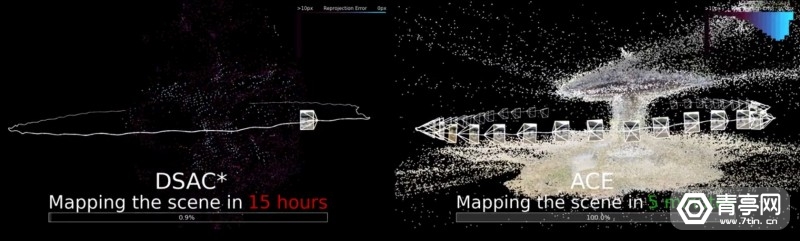

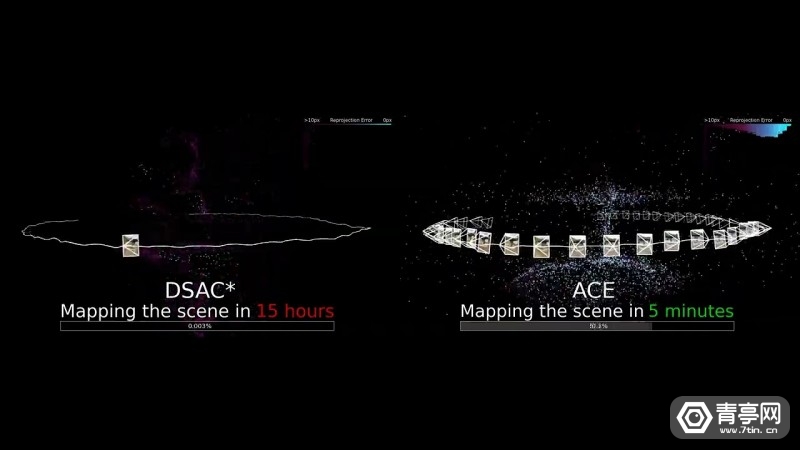

The most commonly used learning-based visual positioning approach is DSAC* (Differentiable Sample Consensus). Its advantage is high accuracy. Its drawback is that training the network takes hours to days. DSAC* can process only one mapping image at a time, which involves a great deal of redundant computation, so mapping a single scene takes about 15 hours.

This is impractical for most AR applications and very costly to scale. In contrast, ACE achieves precise visual positioning after only 5 minutes of training. Niantic describes this as a 300-fold speedup in network training with no loss of accuracy.

In simple terms, Niantic trains a neural network to learn what a scene looks like and then uses it together with the camera image to achieve accurate, low-cost relocalization. According to Niantic, the ACE relocalizer has been used in the Lightship VPS system for more than a year. There are already 200,000 VPS-enabled locations around the world, and it works well alongside traditional positioning technology.

Active Learning Maps

Unlike traditional solutions, ACE does not store an explicit 3D map. Instead, it replaces the 3D map entirely with a neural network that learns the physical scene.

Realistic, believable AR relies on high-precision positioning to determine the position and viewing direction of the user's device. This keeps virtual content fixed in place so that it blends with the physical scene. Even if users return months later, they find the content in the same place.

Under ideal conditions, GPS and IMU sensors are accurate to within a few meters. That is not enough for AR, where the error needs to be reduced to the centimeter level. ACE builds a map in minutes and relocalizes in milliseconds with high accuracy. Relocalization is divided into two phases:

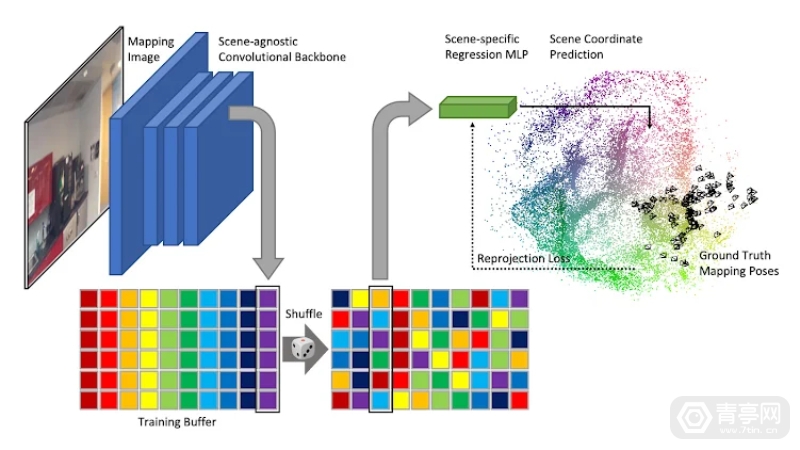

1) The mapping phase: build a 3D map of the environment from a collection of images with known poses;

2) The relocalization phase: match a new query image against the 3D map to determine the camera's exact position and orientation (its pose).

ACE replaces the 3D map entirely with a neural network that is consistent with all mapping images, so no point cloud reconstruction is needed. Given a new query image, the network predicts the corresponding 3D point in scene space for each pixel. The camera pose is then estimated from these 2D-3D correspondences.
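Estimating a pose from 2D-3D correspondences usually means scoring candidate poses by how many pixels they explain. The sketch below shows that scoring step under simple assumptions: a pinhole camera with intrinsics `(fx, fy, cx, cy)`, a candidate pose `(R, t)` mapping scene to camera coordinates, and a hypothetical 10-pixel inlier threshold. The names `project` and `count_inliers` are illustrative and are not Niantic's code. A real relocalizer would generate the candidate poses with a PnP solver inside RANSAC and then refine the best one.

```python
import math

def project(point, R, t, fx, fy, cx, cy):
    """Project a 3D scene point into the image with a pinhole camera model.
    R (3x3 nested lists) and t (length 3) map scene coordinates to camera coordinates."""
    # Camera-space coordinates: X_cam = R @ X_scene + t
    xc = [sum(R[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)]
    if xc[2] <= 0:
        return None  # point lies behind the camera and cannot be observed
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)

def count_inliers(pixels, scene_coords, R, t, intrinsics, threshold_px=10.0):
    """Score one pose hypothesis (R, t): a pixel counts as an inlier when its
    predicted scene coordinate reprojects to within threshold_px of that pixel."""
    inliers = 0
    for (u, v), point in zip(pixels, scene_coords):
        proj = project(point, R, t, *intrinsics)
        if proj is not None and math.hypot(proj[0] - u, proj[1] - v) < threshold_px:
            inliers += 1
    return inliers

if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    pixels = [(320, 240), (370, 240), (100, 100)]
    # Hypothetical network predictions: the third one is an outlier
    coords = [[0, 0, 2], [0.2, 0, 2], [0, 0, 2]]
    print(count_inliers(pixels, coords, identity, [0, 0, 0], (500, 500, 320, 240)))  # prints 2
```

In a RANSAC loop, the hypothesis with the highest inlier count wins. The chunked large-scene setup described later uses the same criterion to choose among models.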

The network used by ACE is lightweight. It represents an entire map in just 4 MB and runs at up to 40 fps on a single GPU and 20 fps on common smartphones.
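As a rough check on the "milliseconds" claim, the quoted frame rates can be converted into per-frame time budgets. This assumes one relocalization per frame:

```latex
t_{\text{frame}}^{\text{GPU}} = \frac{1000\ \text{ms}}{40\ \text{fps}} = 25\ \text{ms},
\qquad
t_{\text{frame}}^{\text{phone}} = \frac{1000\ \text{ms}}{20\ \text{fps}} = 50\ \text{ms}
```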

In addition, building a neural map of a scene from posed RGB images takes only 5 minutes. After that, the camera pose can be estimated from a single RGB frame.

Why is ACE fast?

DSAC* is based on scene coordinate regression, a technique proposed about ten years ago. It requires two stages of training and optimizes the reprojection error one mapping image at a time. A single image provides many pixels for learning, but those pixels are highly correlated, and so are their losses and gradients. As a result, each training step is inefficient.
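The reprojection error can be written out for a single pixel. The notation is assumed for illustration:

- pixel $\mathbf{p}_i$
- the network's predicted scene coordinate $\hat{\mathbf{y}}_i$
- the known mapping pose $(\mathbf{R}, \mathbf{t})$ from scene to camera coordinates
- camera intrinsics $\mathbf{K}$
- a batch of pixels $B$

A plain mean is used for the loss here for simplicity. The actual training losses may be more robust variants.

```latex
e_i = \left\lVert \mathbf{p}_i - \pi\!\left(\mathbf{K}\,(\mathbf{R}\,\hat{\mathbf{y}}_i + \mathbf{t})\right) \right\rVert_2,
\qquad \pi\!\left((x, y, z)^\top\right) = (x/z,\ y/z)^\top,
\qquad \mathcal{L} = \frac{1}{|B|} \sum_{i \in B} e_i
```

When training one image at a time, every pixel $i$ in $B$ comes from the same image and shares the same pose. This is why the terms $e_i$ and their gradients are strongly correlated.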

ACE, in contrast, is trained to minimize a per-pixel reprojection error. It optimizes over pixels drawn from all mapping images at the same time instead of computing losses image by image. Each batch therefore carries far less redundant information, which makes training much more efficient.
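The difference in batch composition can be illustrated with a toy example. This is a simplified sketch, not Niantic's implementation. Pixels are represented by placeholder indices, and all function names are hypothetical. The ACE paper describes buffering precomputed image features rather than raw pixels. The point is only the ordering: per-image batches versus one shuffled buffer that mixes pixels from every mapping image.

```python
import random

def per_image_batches(images):
    """DSAC*-style order: each batch holds every pixel of a single mapping image."""
    for image_id, pixels in images.items():
        yield [(image_id, p) for p in pixels]

def shuffled_buffer_batches(images, batch_size, seed=0):
    """ACE-style order (simplified): pool pixels from all mapping images into one
    buffer, shuffle it once, and cut it into batches that mix many images."""
    buffer = [(image_id, p) for image_id, pixels in images.items() for p in pixels]
    random.Random(seed).shuffle(buffer)
    for start in range(0, len(buffer), batch_size):
        yield buffer[start:start + batch_size]

def images_per_batch(batch):
    """Number of distinct mapping images represented in one batch."""
    return len({image_id for image_id, _ in batch})

if __name__ == "__main__":
    # Toy data: 10 mapping images, each contributing 100 pixel indices
    images = {i: list(range(100)) for i in range(10)}
    print([images_per_batch(b) for b in per_image_batches(images)])  # ten 1s
    print([images_per_batch(b) for b in shuffled_buffer_batches(images, 100)])
```

Both orderings visit exactly the same pixels. Only the shuffled buffer gives every gradient step a view of many images and poses at once.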

The resulting optimization is very stable. Niantic notes that ACE can be trained in under 5 minutes and still give usable results. Training time can even be cut to 10 seconds, not counting 20 seconds of data preparation.

ACE can also be applied to larger-scale outdoor scenes, but its small memory footprint and short mapping time limit the capacity of a single model. Niantic therefore divides large scenes into smaller chunks and trains one ACE model per chunk. During relocalization, each model independently estimates a pose, and the estimate with the highest inlier count is chosen. Because the chunk models are trained independently, training is faster when multiple GPUs are used at once.

Reference: Niantic
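The chunked relocalization step, "each model estimates a pose and the one with the highest inlier count wins", can be sketched as follows. The sketch makes three assumptions:

- Each per-chunk model is treated as a hypothetical callable that returns a `PoseEstimate` or `None` if it fails.
- `pose` is only a placeholder.
- The structure is illustrative rather than Lightship's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

@dataclass
class PoseEstimate:
    chunk_id: str
    pose: tuple          # placeholder, e.g. (rotation, translation)
    inlier_count: int    # RANSAC inliers supporting this pose

# Hypothetical per-chunk relocalizer: query image in, pose estimate (or None) out
ChunkRelocalizer = Callable[[object], Optional[PoseEstimate]]

def relocalize_large_scene(chunk_models: Sequence[ChunkRelocalizer],
                           query_image) -> Optional[PoseEstimate]:
    """Run every chunk's model independently and keep the pose with the
    highest inlier count; return None if no chunk produced a pose."""
    estimates = [model(query_image) for model in chunk_models]
    estimates = [e for e in estimates if e is not None]
    return max(estimates, key=lambda e: e.inlier_count, default=None)

if __name__ == "__main__":
    models = [
        lambda img: PoseEstimate("chunk_a", (), 120),
        lambda img: None,  # this chunk could not relocalize the query
        lambda img: PoseEstimate("chunk_c", (), 340),
    ]
    print(relocalize_large_scene(models, "query.jpg").chunk_id)  # prints chunk_c
```

Since the chunk models do not depend on each other, the same independence lets them be trained in parallel on separate GPUs.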