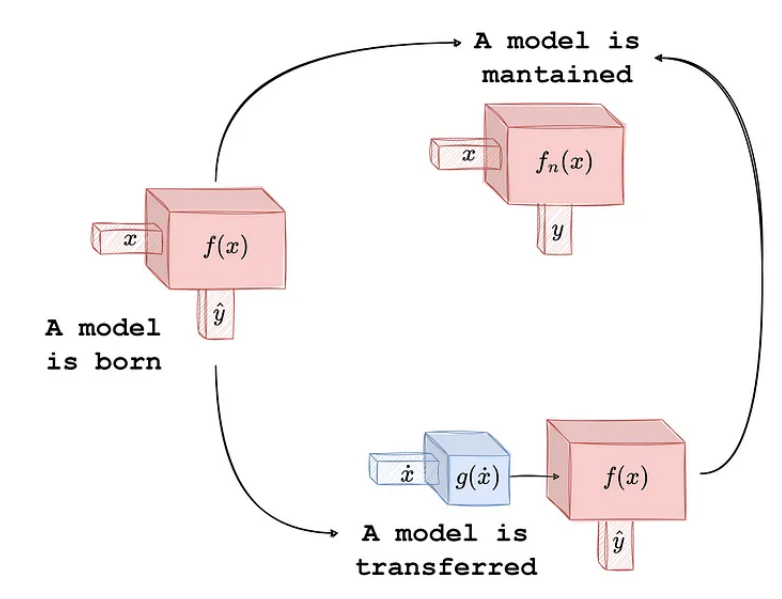

How does your model change? Source [1]

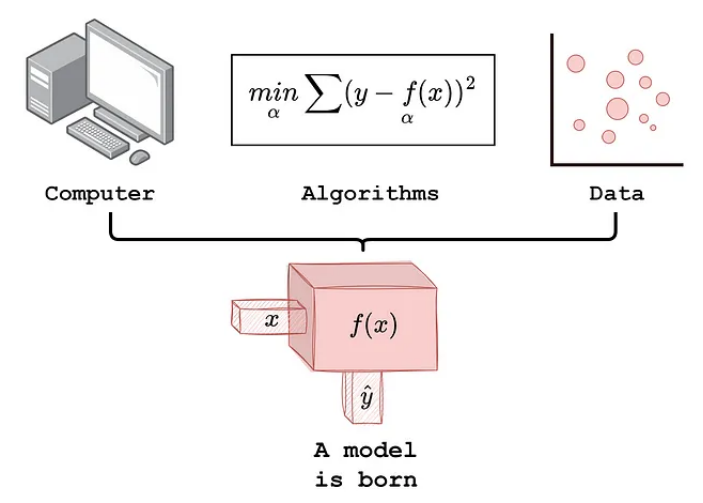

The birth

These digital tools are born when we build, train, fit, or estimate our models. This stage starts with the analysis objectives, data, computers, algorithms, and everything else a data scientist knows well by now. Whatever other tools you gather, never lose sight of your analytical or scientific goals, so that the final model makes sense and meets a specific need. So when is a model born? Its life cycle begins when you finish training and save it for use or deployment.

What future does this newborn have? That depends on the analysis goal, which is why we can't forget it when building the model. The model can serve forecasting tasks, the interpretation of indicators, or what-if scenario simulations, among many other options. Its use may be simple and quick, or complex, time-consuming, and long-term, and that use determines how much life the model has left. If the model is used for a one-off interpretation of its parameters, there is not much life left. But if the model is used for prediction and is meant to serve a system with online data collection, this newborn has a long life ahead. So what's next?

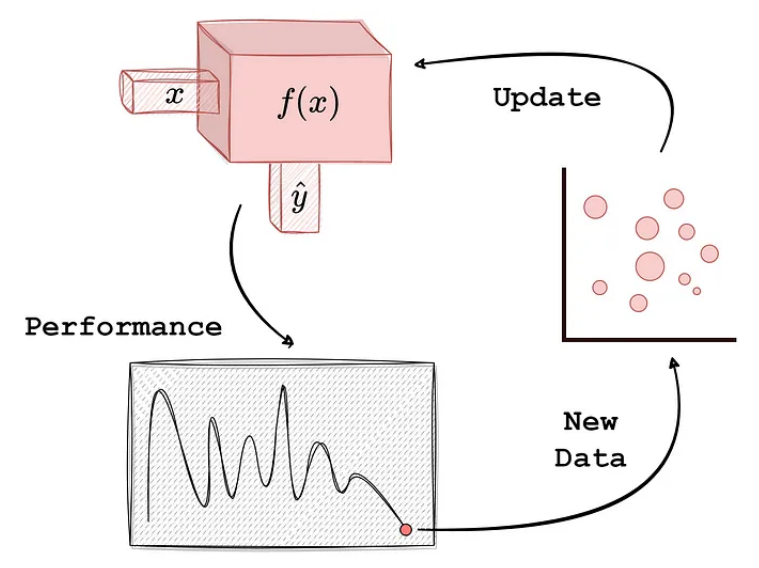

Maintenance

As we keep using the model, the conditions in the data that supported its training will begin to change, and with them the model starts to change too. Suppose we build a churn prediction model that is highly accurate at training time. Sooner or later, the conditions or behaviors of the customers being predicted will start to change. This variation challenges the predictive performance of the learned model. When these changes occur, the model enters a new phase, which we call maintenance.
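One way to make "the model enters maintenance" concrete is to compare accuracy on a recent labeled batch against the accuracy recorded at training time. The sketch below assumes that recent labels are available at all, and the function name `needs_maintenance`, the 0.90 baseline, and the 0.05 tolerance are hypothetical choices for illustration, not values from the article.

```python
from statistics import mean


def needs_maintenance(y_true, y_pred, baseline_accuracy, tolerance=0.05):
    """Return (flag, accuracy) for a recent labeled batch.

    flag is True when accuracy drops more than `tolerance` below the
    accuracy measured at training time (`baseline_accuracy`).
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    accuracy = mean(1.0 if t == p else 0.0 for t, p in zip(y_true, y_pred))
    return accuracy < baseline_accuracy - tolerance, accuracy


# Hypothetical churn labels (1 = churned) and the model's recent predictions.
recent_labels = [1, 0, 0, 1, 1, 0, 1, 0, 1, 1]
recent_predictions = [1, 0, 1, 0, 1, 0, 0, 0, 0, 1]

flag, acc = needs_maintenance(recent_labels, recent_predictions, baseline_accuracy=0.90)
print(f"recent accuracy={acc:.2f}, maintenance needed={flag}")
```

The tolerance is a business decision as much as a statistical one, so in practice it would be agreed with the people who consume the predictions.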

During the maintenance phase, we may need new data. With it, we can update the model's specifications. It is no different from taking any other machine, such as a car, and adjusting its parts when it doesn't work as it should. We will not delve into strategies or solutions for performing model maintenance, but in general our models need to go through a tuning process that brings them back to satisfactory performance.

Maintaining a machine learning model is not quite the same as retraining it. Some models are simple enough that retraining them on newer data is just as simple. This may be the case for linear architectures or networks with few layers and neurons. But when a model is complex, with a large and deep architecture, maintenance needs to be much cheaper than retraining from scratch. This is one of the most important topics in machine learning today, because the tools are very powerful but expensive to maintain in the long run.
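To illustrate the difference between updating and retraining, here is a minimal sketch that continues gradient descent from a model's existing parameters on a small batch of newer data, rather than starting over. It uses a one-feature logistic regression as a stand-in for a real model; the function `fine_tune`, the starting parameters, and the data are all hypothetical, and this is only one of many possible maintenance strategies.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(weights, bias, X, y, eps=1e-12):
    """Average logistic loss of the model (weights, bias) on the data."""
    total = 0.0
    for x, t in zip(X, y):
        p = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
        total -= t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
    return total / len(X)


def fine_tune(weights, bias, X, y, lr=0.1, steps=50):
    """Warm start: take a few full-batch gradient steps from the
    existing parameters instead of refitting from scratch."""
    w, b = list(weights), bias
    n = len(X)
    for _ in range(steps):
        errors = [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - t
                  for x, t in zip(X, y)]
        grad_w = [sum(e * x[j] for e, x in zip(errors, X)) / n
                  for j in range(len(w))]
        grad_b = sum(errors) / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical deployed model: decision boundary near x = 0.5.
old_weights, old_bias = [2.0], -1.0

# Hypothetical newer data: the boundary has moved to between 1 and 2.
X_new = [[0.0], [1.0], [2.0], [3.0]]
y_new = [0, 0, 1, 1]

loss_before = log_loss(old_weights, old_bias, X_new, y_new)
new_weights, new_bias = fine_tune(old_weights, old_bias, X_new, y_new)
loss_after = log_loss(new_weights, new_bias, X_new, y_new)
print(f"loss before update: {loss_before:.3f}, after update: {loss_after:.3f}")
```

For a model this small, a full refit costs about the same as the update. The distinction starts to matter for large, deep architectures, where a few update steps on new data are far cheaper than retraining.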

Once a model has been tweaked or updated, it can be brought back into use, and any process it serves can continue with the updated version. Our machine can keep being used. Still, the machine has changed: it has been used, consumed, and transformed into something slightly different from its original state, if you will. Just like pencils, our models reach a point where we need to sharpen them so we can keep using them.

Transfer

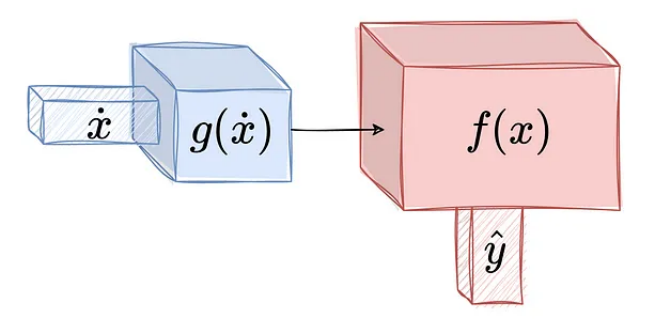

On the road of machine learning, we may need to take an exit: transfer. When I visited amazing Iceland, I saw for the first time someone change their car tires to drive on an icy road. When they got back to the city, they switched back to the stock tires. The concept became clear to me when I started working on transfer learning and remembered the tire changes in Iceland. When a new environment or domain comes into play, our model enters a new phase called transfer.

Just as the same car can be adapted to different terrains by changing tires, without having to buy a separate car, we can add or tweak parts of our model to serve new purposes in new areas without having to build a new model. Transfer learning is a subfield of machine learning research that seeks to optimize the adjustment of a model so that training it in a new environment becomes simpler. A popular example is image recognition models. We train them on certain classes of images, and then others transfer these models to recognize new classes of images. Many businesses now take models such as RegNet, VGG, Inception, or AlexNet and tune them to their own needs.
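The "change the tires, keep the car" idea can be sketched in a few lines: keep a pretrained feature extractor frozen and train only a small new output layer for the new task. Everything below is a toy stand-in. The function `frozen_features` plays the role of a pretrained backbone (in a real image model this would be the convolutional layers), and the data and the names `train_head` and `predict` are hypothetical.

```python
import math


def frozen_features(x):
    """Stand-in for a pretrained backbone: its parameters are fixed
    and are never updated during transfer."""
    return [x[0] + x[1], x[0] - x[1]]


def train_head(X, y, lr=0.1, steps=200):
    """Train only a new logistic output layer on top of the frozen features."""
    feats = [frozen_features(x) for x in X]  # computed once; backbone is fixed
    w, b = [0.0] * len(feats[0]), 0.0
    n = len(feats)
    for _ in range(steps):
        errors = [1.0 / (1.0 + math.exp(-(sum(wi * fi for wi, fi in zip(w, f)) + b))) - t
                  for f, t in zip(feats, y)]
        w = [wi - lr * sum(e * f[j] for e, f in zip(errors, feats)) / n
             for j, wi in enumerate(w)]
        b -= lr * sum(errors) / n
    return w, b


def predict(x, w, b):
    f = frozen_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# Hypothetical new task: label is 1 when the first input exceeds the second.
X_task = [[2, 0], [3, 1], [0, 2], [1, 3]]
y_task = [1, 1, 0, 0]

head_w, head_b = train_head(X_task, y_task)
print([predict(x, head_w, head_b) for x in X_task])
```

Only the head's handful of parameters are fitted here, which is why transfer is usually much cheaper than building a new model for the new domain.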

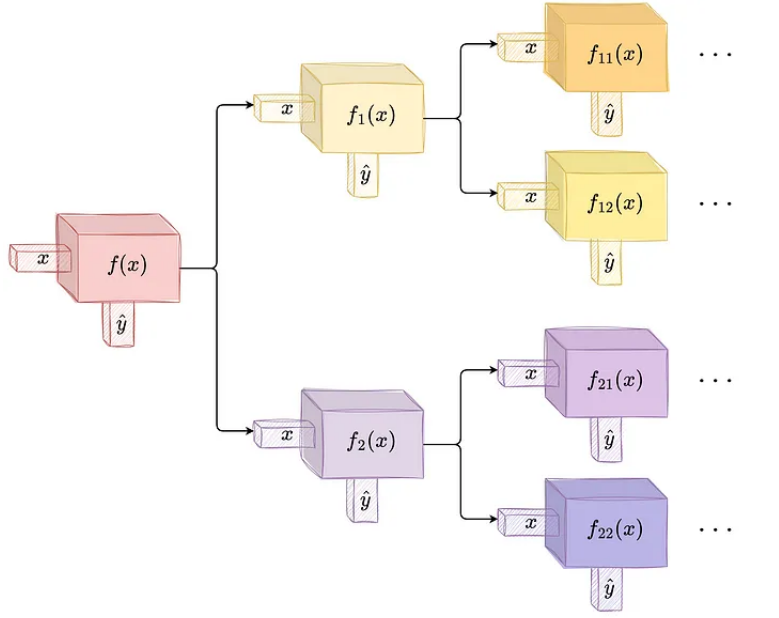

When we transfer a model, in a way, a new model is born, which has its own life cycle, separate from the original model. It will require maintenance just like the original model. With this, we've gone from having an initial entity to potentially creating a whole set of models. There is no doubt that there is indeed a life cycle behind these digital tools.

Will our model die?

The short answer is: yes. Models can indeed cease to exist, for example when their analytical performance systematically falls short, or when they become so large and so diverse that the original model is a thing of the past. As we said at the beginning, rocks, pencils, and cars all cease to exist at some point. In this regard, models are no different from these things.

While a model may become extinct, the question of when it reaches this point is, to this day, the biggest one we want answered in the machine learning research community. Many developments in monitoring machine learning performance and in model maintenance are related to the question of when a model no longer works.

One reason the answer is not trivial is that we constantly need labels to quantify how satisfactory performance is. But the biggest paradox of machine and statistical learning is precisely that labels are not available, which is why we build these tools to predict them. Another reason is that defining acceptance limits for performance changes can be very subjective. While scientists can come up with some limits, businesses may have different tolerances.

Here are some points that data scientists can also consider when answering this (currently open) question:

- Is the training data outdated? (What counts as "too outdated"?)
- How similar is the current version to the original version of the model? (What counts as "similar"?)
- Have the distribution of the input features and their relationship to the target variable drifted completely? (Covariate drift and concept drift are two of the biggest topics in machine learning maintenance research.)
- Is the physical process for deploying the model still in use? If the physical infrastructure can no longer support the deployment of the model, this undoubtedly marks the end of its life cycle.
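The drift question in the list above can be approximated without labels by comparing the distribution of an input feature at training time with its current distribution. The sketch below uses the Population Stability Index (PSI), which is one common choice and not something the article prescribes; the function name `psi`, the bin count, and the data are assumptions. Note that this only looks at covariate drift. Detecting concept drift still requires labels.

```python
import math


def psi(reference, current, bins=5, eps=1e-6):
    """Population Stability Index between two samples of one feature.

    Bins are equal-width over the range of the reference sample;
    values outside that range fall into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values at training time and in production.
training_values = [float(v) for v in range(100)]
production_values = [v + 50.0 for v in training_values]

print(f"PSI, no shift: {psi(training_values, training_values):.3f}")
print(f"PSI, shifted:  {psi(training_values, production_values):.3f}")
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a substantial shift, but, as with performance limits, the acceptable threshold depends on the application.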

No longer living is not necessarily a negative thing for a model; it is more like an evolutionary path. We need to understand this life cycle to keep our physical and digital systems up to date and performing satisfactorily.

Reference

[1] Source: https://towardsdatascience.com/the-lifetime-of-a-machine-learning-model-392e1fadf84a
