———————————————————————————————————————————————————

Learning summary:

(1) Overview of Deep Learning; (2) Understanding Convolutional Neural Networks (CNNs); (3) MNIST Dataset Training and Testing.

————————————————————————— ————————————————————————

Foreword

Deep learning is an emerging research direction in machine learning. It imitates the structure of the human brain to process complex input data efficiently, learn different kinds of knowledge, and effectively solve many types of complex intelligence problems. Practice has shown that deep learning is an efficient feature extraction method: it can extract more abstract features from data and capture more essential characteristics of the data. At the same time, deep models have stronger modeling and generalization capabilities. Deep learning refers to a class of machine learning methods that use deep neural networks to fit data. Typical deep learning models include convolutional neural networks (CNNs), deep belief networks (DBNs), and stacked autoencoders; the next section mainly introduces convolutional neural networks. Deep learning methods can be divided into supervised and unsupervised learning, depending on whether the input data are labeled: learning from labeled data is supervised learning, and learning from unlabeled data is unsupervised learning.

At present, deep learning is applied mainly in speech, images, and information retrieval. In speech, the strong discriminative training and continuous modeling capabilities of deep learning models have given today's speech recognition very good accuracy. In images, the main applications are handwritten character recognition, face recognition, and image recognition and retrieval, all of which have achieved good results within the deep learning framework. Information retrieval has also seen a large amount of research, in which deep learning is used to index documents and then retrieve information from them. Deep learning is expected to find good applications in many more directions in the future, breaking through the bottlenecks of traditional machine learning methods and advancing the field of artificial intelligence.

Convolutional Neural Networks (CNNs)

The convolutional neural network is an efficient recognition method developed in recent years that has attracted widespread attention. CNNs have become a research hotspot in many scientific fields, especially pattern classification, and are widely used because the network avoids complex image preprocessing and can take raw images directly as input.

Generally, the basic structure of a CNN includes two kinds of layers. The first is the feature extraction layer: the input of each neuron is connected to a local receptive field in the previous layer, from which it extracts a local feature. Once a local feature has been extracted, its position relative to other features is also determined. The second is the feature mapping layer: each computational layer of the network is composed of multiple feature maps, each feature map is a plane, and all neurons on that plane share the same weights. The feature mapping structure uses the sigmoid function, which has a small influence-function kernel, as the activation function of the convolutional network, giving the feature maps shift invariance. In addition, because the neurons on a feature map share weights, the number of free parameters in the network is reduced. Each convolutional layer in a CNN is followed by a computational layer that performs local averaging and a second round of feature extraction; this distinctive two-stage feature extraction structure reduces the feature resolution.
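The two ideas in this paragraph, local receptive fields and shared weights, can be sketched in plain Python. This is a minimal illustration, not code from the original experiment: `conv2d_valid` is a hypothetical helper that slides one shared kernel over an image with stride 1 and no padding. Like most deep learning frameworks, it computes cross-correlation (the kernel is not flipped).

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Slide a single shared kernel over a 2-D image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output neuron sees only a kh x kw local receptive field ...
            total = bias
            for u in range(kh):
                for v in range(kw):
                    # ... and every neuron reuses the same kernel weights.
                    total += image[i + u][j + v] * kernel[u][v]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Small hand-checkable case: a 2x2 diagonal kernel on a 3x3 image.
print(conv2d_valid([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, 1]]))
# [[6.0, 8.0], [12.0, 14.0]]

# A 32x32 input and a 5x5 kernel give a 28x28 feature map.
fmap = conv2d_valid([[0.5] * 32 for _ in range(32)], [[0.1] * 5 for _ in range(5)])
print(len(fmap), len(fmap[0]))  # 28 28
```

Because all 28×28 positions reuse the same 25 weights and one bias, this feature map has 26 free parameters rather than a separate set per neuron, which is the parameter reduction described above.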

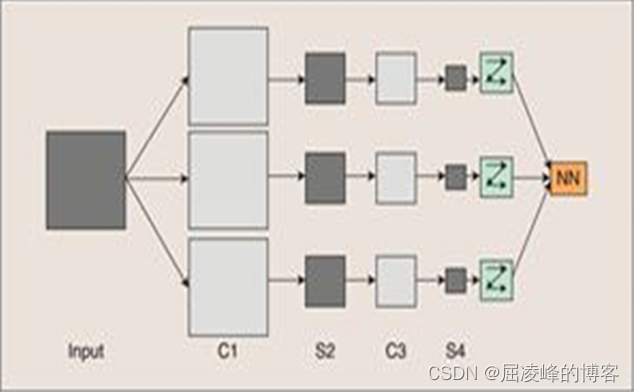

Figure 1 Conceptual demonstration diagram of convolutional neural network

Figure 1 is a conceptual diagram of a CNN. As it shows, a CNN can be divided into four major stages: the input image, several convolution and down-sampling layers, rasterization, and a multi-layer perceptron. The input image is first normalized to a range that depends on the activation function used, then convolved with the weights w of the next layer to produce each convolutional layer, which is in turn down-sampled to produce each down-sampling layer; the outputs of these layers are called feature maps. Next comes rasterization: every pixel of all feature maps is unrolled in turn into a single vector. The final multi-layer perceptron is a fully connected network, and the network's final classifier generally uses the softmax activation function.
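The data flow in Figure 1 can be sketched as three small helpers. This is an illustrative sketch, not the original code: it assumes 8-bit grayscale pixels (0 to 255) and picks the normalization range from the activation's output range, [0, 1] for sigmoid and [-1, 1] for tanh; the function names are hypothetical.

```python
import math


def normalize(pixels, activation="sigmoid"):
    """Scale 8-bit pixel values (0..255) into the output range of the activation."""
    if activation == "sigmoid":  # sigmoid outputs lie in (0, 1)
        return [p / 255.0 for p in pixels]
    if activation == "tanh":  # tanh outputs lie in (-1, 1)
        return [p / 127.5 - 1.0 for p in pixels]
    raise ValueError(f"unsupported activation: {activation}")


def rasterize(feature_maps):
    """Unroll every pixel of every feature map, map by map and row by row, into one vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


print(normalize([0, 255], "tanh"))                      # [-1.0, 1.0]
print(rasterize([[[1, 2], [3, 4]], [[5, 6], [7, 8]]]))  # [1, 2, 3, 4, 5, 6, 7, 8]
probs = softmax([1.0, 2.0, 3.0])
print(max(range(3), key=probs.__getitem__))             # 2 (the predicted class)
```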

Training a CNN begins by initializing the weights with different small random numbers; using different values matters because units that start with identical weights would receive identical updates and never learn different features. Training then proceeds in two stages, forward propagation and backward propagation. The forward stage computes the network's final output; the backward stage compares the actual output with the ideal output and uses the back-propagation algorithm to update each weight, repeating until the difference between the actual and ideal outputs falls within the desired range.
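To make the two stages concrete, here is a deliberately tiny sketch: a single sigmoid neuron stands in for the whole CNN and learns the logical OR function, so back-propagation reduces to one gradient step per weight. The update uses the gradient of the cross-entropy loss, `(actual - ideal) * input`, and training stops once the mean squared difference between actual and ideal outputs drops below a tolerance. The learning rate, tolerance, initialization range, and toy data are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Forward propagation for a single sigmoid unit."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, lr=0.5, tolerance=0.01, max_epochs=10_000, seed=0):
    rng = random.Random(seed)
    # Initialization: a *different* small random number for every weight.
    weights = [rng.uniform(-0.05, 0.05) for _ in samples[0][0]]
    bias = rng.uniform(-0.05, 0.05)
    for epoch in range(1, max_epochs + 1):
        for x, target in samples:
            y = predict(weights, bias, x)  # forward stage: actual output
            delta = y - target             # backward stage: actual minus ideal output
            weights = [w - lr * delta * xi for w, xi in zip(weights, x)]
            bias -= lr * delta
        # Stop once the mean squared difference between actual and ideal outputs is small enough.
        mse = sum((predict(weights, bias, x) - t) ** 2 for x, t in samples) / len(samples)
        if mse < tolerance:
            break
    return weights, bias, epoch, mse


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias, epochs, mse = train(OR_DATA)
print(f"stopped after {epochs} epochs with mse={mse:.4f}")
print([round(predict(weights, bias, x)) for x, _ in OR_DATA])  # [0, 1, 1, 1]
```

A real CNN repeats the same forward/backward cycle, but the backward stage propagates the error signal through every layer with the chain rule.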

MNIST dataset training and testing

Here, the LeNet model is trained and tested on the MNIST dataset. This part has two pieces: first an introduction to LeNet, and then running the training and testing on a computer.

(1) Introduction to the LeNet model.

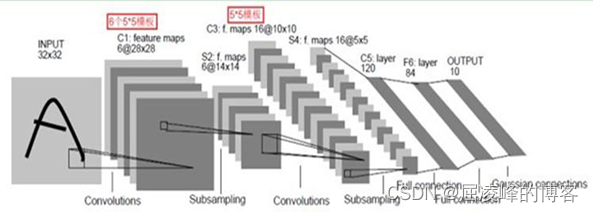

Figure 2 shows the original LeNet model, one of the most representative experimental systems among early convolutional neural networks. Not counting the input, LeNet has 7 layers, and the input image size is 32×32. Each layer is described below.

The C1 layer is a convolutional layer consisting of 6 feature maps. Each feature map extracts one feature of the input through a convolution filter and consists of multiple neurons, each connected to a 5×5 neighborhood of the input. Each feature map is 28×28.
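The C1 numbers follow from simple arithmetic, shown here as a small Python check. `conv_output_size` is a hypothetical helper, and the counts assume one trainable bias per feature map, which gives the 156 parameters and 122,304 connections reported for C1 in LeNet-5.

```python
def conv_output_size(input_size, kernel_size, stride=1):
    """Side length of a convolution output with no padding."""
    return (input_size - kernel_size) // stride + 1


c1_size = conv_output_size(32, 5)          # a 5x5 window fits 28 times along each axis
c1_params = 6 * (5 * 5 + 1)                # 6 kernels of 25 shared weights, plus 6 biases
c1_connections = c1_params * c1_size ** 2  # every parameter is reused at all 28x28 positions
print(c1_size, c1_params, c1_connections)  # 28 156 122304
```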

**The S2 layer is a down-sampling layer.** It sub-samples the image based on local image correlation, which reduces the amount of data to process while retaining useful information. It has 6 feature maps of size 14×14.
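A minimal sketch of the down-sampling step, assuming plain 2×2 averaging with stride 2; `subsample_2x2` is a hypothetical helper. The original LeNet-5 goes slightly further, multiplying each 2×2 sum by a trainable coefficient and adding a trainable bias before the activation.

```python
def subsample_2x2(fmap):
    """Average each non-overlapping 2x2 block, halving both height and width."""
    return [
        [
            (fmap[i][j] + fmap[i][j + 1] + fmap[i + 1][j] + fmap[i + 1][j + 1]) / 4.0
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]


print(subsample_2x2([[1, 3, 0, 0], [5, 7, 0, 4], [2, 2, 9, 9], [2, 2, 9, 9]]))
# [[4.0, 1.0], [2.0, 9.0]]
pooled = subsample_2x2([[0.0] * 28 for _ in range(28)])
print(len(pooled), len(pooled[0]))  # 14 14
```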

Layer C3 is also a convolutional layer. It again convolves layer S2 with 5×5 kernels, so each resulting feature map has only 10×10 neurons; because it uses 16 different convolution kernels, it has 16 feature maps.
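How one C3 kernel combines several S2 maps can be sketched as follows; calling it 16 times with 16 different kernels would produce the 16 C3 feature maps. `conv_multi` is a simplified, hypothetical helper: it connects an output map to every input map it is given, whereas the original LeNet-5 uses a fixed connection table that links each C3 map to only a subset of the S2 maps.

```python
def conv_multi(in_maps, kernels, bias=0.0):
    """Compute one output feature map from several input maps.

    kernels[c] is the k x k slice of the kernel applied to in_maps[c];
    the responses from all input maps are summed and one bias is added.
    """
    k = len(kernels[0])
    out_h = len(in_maps[0]) - k + 1
    out_w = len(in_maps[0][0]) - k + 1
    return [
        [
            bias + sum(
                in_maps[c][i + u][j + v] * kernels[c][u][v]
                for c in range(len(in_maps))
                for u in range(k)
                for v in range(k)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Six 14x14 S2-sized maps and a 6 x 5 x 5 kernel of ones give one 10x10 map.
s2_maps = [[[1.0] * 14 for _ in range(14)] for _ in range(6)]
kernel = [[[1.0] * 5 for _ in range(5)] for _ in range(6)]
c3_map = conv_multi(s2_maps, kernel)
print(len(c3_map), len(c3_map[0]), c3_map[0][0])  # 10 10 150.0
```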

The S4 layer is a downsampling layer consisting of 16 feature maps of size 5×5. Each cell in a feature map is connected to a 2×2 neighborhood of the corresponding feature map in C3, just like the connection between C1 and S2.

Layer C5 is a convolutional layer with 120 feature maps. Each unit is connected to a 5×5 neighborhood in all 16 feature maps of layer S4. Since the S4 feature maps are also 5×5 (the same size as the filter), each C5 feature map is 1×1, which amounts to a full connection between S4 and C5. C5 is still labeled a convolutional layer rather than a fully connected layer because, if the input to LeNet-5 were made larger with everything else unchanged, its feature maps would be larger than 1×1.
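The argument about C5 can be checked by tracing feature-map sizes through the layers described so far. `lenet5_shapes` is a hypothetical helper; it assumes square inputs whose side length keeps every 2×2 subsampling exact, and 36×36 is just an example of a larger input.

```python
def lenet5_shapes(input_size=32):
    """Trace (feature maps, height, width) through C1..C5 for a square input."""
    shapes = {}
    side = input_size - 5 + 1  # C1: 5x5 convolution
    shapes["C1"] = (6, side, side)
    side //= 2  # S2: 2x2 subsampling
    shapes["S2"] = (6, side, side)
    side = side - 5 + 1  # C3: 5x5 convolution
    shapes["C3"] = (16, side, side)
    side //= 2  # S4: 2x2 subsampling
    shapes["S4"] = (16, side, side)
    side = side - 5 + 1  # C5: 5x5 convolution
    shapes["C5"] = (120, side, side)
    return shapes


print(lenet5_shapes(32)["C5"])  # (120, 1, 1): C5 acts as a full connection
print(lenet5_shapes(36)["C5"])  # (120, 2, 2): a larger input gives larger C5 maps
```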

Layer F6 is a fully connected layer with 84 units (this number comes from the design of the output layer), fully connected to C5. As in a classical neural network, F6 computes the dot product between the input vector and the weight vector and adds a bias; the result is then passed through a sigmoid function to produce the state of unit i.
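F6's computation is sketched below for one layer of units. The weights are zero placeholders chosen only to check the shapes, and `fully_connected` is a hypothetical helper. The sketch uses the logistic sigmoid named in the text; for reference, the original LeNet-5 paper uses a scaled hyperbolic tangent as its squashing function.

```python
import math


def fully_connected(inputs, weights, biases):
    """For each unit i: sigmoid(dot(inputs, weights[i]) + biases[i])."""
    states = []
    for w_row, b in zip(weights, biases):
        a = sum(x * w for x, w in zip(inputs, w_row)) + b  # dot product plus bias
        states.append(1.0 / (1.0 + math.exp(-a)))          # sigmoid squashing
    return states


# F6 shape: 120 inputs from C5, 84 units (zero weights, for a quick check).
c5_out = [0.3] * 120
f6 = fully_connected(c5_out, [[0.0] * 120 for _ in range(84)], [0.0] * 84)
print(len(f6), f6[0])  # 84 0.5
```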

The output layer consists of Euclidean radial basis function (RBF) units, which are rarely used today. In multi-class classification tasks, the output layer of a CNN is generally a softmax regression model, which outputs the probability that an image belongs to each class.
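The difference between the two output layers is easiest to see in code. In an RBF output layer, each class i has a prototype vector, and its unit outputs the squared Euclidean distance between the F6 vector and that prototype, so the smallest output marks the predicted class (a softmax layer instead picks the largest probability). In LeNet-5 the 10 prototypes are 84-dimensional vectors of -1 and +1 drawn as 7×12 bitmaps, which is where F6's 84 units come from; the 3-dimensional vectors and function names below are illustrative assumptions.

```python
def rbf_outputs(x, prototypes):
    """Euclidean RBF unit i outputs the squared distance between x and prototype i."""
    return [sum((xj - wj) ** 2 for xj, wj in zip(x, w)) for w in prototypes]


def rbf_predict(x, prototypes):
    """The class whose prototype is closest (smallest output) wins."""
    outputs = rbf_outputs(x, prototypes)
    return min(range(len(outputs)), key=outputs.__getitem__)


# Toy 3-dimensional example with two hypothetical class prototypes.
protos = [[1.0, -1.0, 1.0], [-1.0, 1.0, 1.0]]
print(rbf_outputs([1.0, -1.0, 0.0], protos))  # [1.0, 9.0]
print(rbf_predict([1.0, -1.0, 0.0], protos))  # 0
```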

Figure 2 The original LeNet model diagram

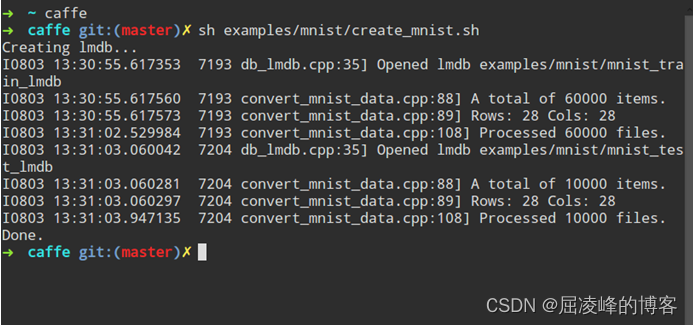

(2) First, convert the data into LMDB format, generating mnist-train-lmdb/ and mnist-test-lmdb/. The terminal output is shown in Figure 3.

Figure 3 Data conversion
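The conversion step starts from the raw MNIST files, which use the IDX format: a big-endian header (magic number 2051 for image files or 2049 for label files, then the item count, and for images the row and column counts) followed by one unsigned byte per pixel or label. A minimal sketch of this reading half is below, using only Python's `struct` module; writing each (image, label) record into an LMDB database, which the conversion tool does next, is not shown. The function names and the tiny in-memory test data are hypothetical.

```python
import struct


def parse_idx_images(data):
    """Split IDX image data into per-image byte strings (rows * cols pixels each)."""
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])  # big-endian header
    if magic != 2051:
        raise ValueError(f"not an IDX image file (magic number {magic})")
    size = rows * cols
    images = [data[16 + i * size:16 + (i + 1) * size] for i in range(count)]
    return images, rows, cols


def parse_idx_labels(data):
    """Return the labels (one unsigned byte each) from IDX label data."""
    magic, count = struct.unpack(">II", data[:8])
    if magic != 2049:
        raise ValueError(f"not an IDX label file (magic number {magic})")
    return list(data[8:8 + count])


# Two fake 2x2 images and their labels, built in memory instead of read from disk.
fake_images = struct.pack(">IIII", 2051, 2, 2, 2) + bytes([0, 255, 255, 0, 10, 20, 30, 40])
fake_labels = struct.pack(">II", 2049, 2) + bytes([7, 3])
images, rows, cols = parse_idx_images(fake_images)
labels = parse_idx_labels(fake_labels)
records = list(zip(images, labels))  # one (image, label) record per sample, ready to be stored
print(rows, cols, records[0])  # 2 2 (b'\x00\xff\xff\x00', 7)
```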

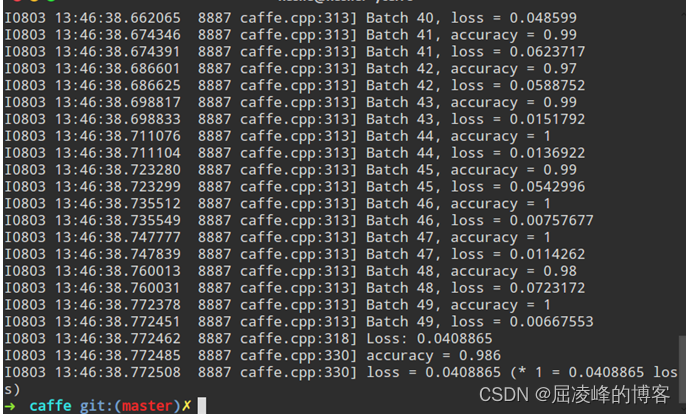

Then the network is trained; Figure 4 shows part of the terminal output during training.

Figure 4 Partial results of training the network

Finally, the trained network is tested; the results are shown in Figure 5.

Figure 5 Test results
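The test stage runs the trained network forward on the test set and compares its predictions with the true labels. A minimal sketch of that comparison, assuming the network outputs one probability per class and that classification accuracy is the reported metric; `accuracy` and the sample numbers are hypothetical, not the values in Figure 5.

```python
def accuracy(probabilities, labels):
    """Fraction of test samples whose highest-probability class equals the true label."""
    correct = 0
    for probs, label in zip(probabilities, labels):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        correct += predicted == label
    return correct / len(labels)


# Three hypothetical test samples with two classes: two are classified correctly.
print(accuracy([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]], [1, 0, 0]))  # 0.6666666666666666
```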

Summary

Through this topic, I have deepened my understanding of deep learning and gained a more detailed picture of its development, especially of how the whole CNN process is carried out. By looking up references, I also came to understand the terminology used in each step, and how the parameters change during the forward and backward propagation stages. Implementing the MNIST experiment deepened my understanding of how the whole CNN process is implemented in code. Next, I will study each step of training and testing on the MNIST database in more detail, especially the training and learning process, study the entire CNN process theoretically, and read the relevant literature to understand how deep learning is implemented in applications.