A summary of the pitfalls of multi-angle template matching

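1. Masks

Multi-angle matching requires masked matching: after rotation, the template sits inside a larger canvas, and the padding around it must not contribute to the score. First, here is the source of OpenCV's built-in matchTemplateMask: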

```cpp
static void matchTemplateMask( InputArray _img, InputArray _templ, OutputArray _result, int method, InputArray _mask )
{
    CV_Assert(_mask.depth() == CV_8U || _mask.depth() == CV_32F);
    CV_Assert(_mask.channels() == _templ.channels() || _mask.channels() == 1);
    CV_Assert(_templ.size() == _mask.size());
    CV_Assert(_img.size().height >= _templ.size().height &&
              _img.size().width >= _templ.size().width);

    Mat img = _img.getMat(), templ = _templ.getMat(), mask = _mask.getMat();

    if (img.depth() == CV_8U)
    {
        img.convertTo(img, CV_32F);
    }
    if (templ.depth() == CV_8U)
    {
        templ.convertTo(templ, CV_32F);
    }
    if (mask.depth() == CV_8U)
    {
        // To keep compatibility to other masks in OpenCV: CV_8U masks are binary masks
        threshold(mask, mask, 0/*threshold*/, 1.0/*maxVal*/, THRESH_BINARY);
        mask.convertTo(mask, CV_32F);
    }

    Size corrSize(img.cols - templ.cols + 1, img.rows - templ.rows + 1);
    _result.create(corrSize, CV_32F);
    Mat result = _result.getMat();

    // If mask has only one channel, we repeat it for every image/template channel
    if (templ.type() != mask.type())
    {
        // Assertions above ensured, that depth is the same and only number of channel differ
        std::vector<Mat> maskChannels(templ.channels(), mask);
        merge(maskChannels.data(), templ.channels(), mask);
    }

    if (method == CV_TM_SQDIFF || method == CV_TM_SQDIFF_NORMED)
    {
        Mat temp_result(corrSize, CV_32F);
        Mat img2 = img.mul(img);
        Mat mask2 = mask.mul(mask);
        // If the mul() is ever unnested, declare MatExpr, *not* Mat, to be more efficient.
        // NORM_L2SQR calculates sum of squares
        double templ2_mask2_sum = norm(templ.mul(mask), NORM_L2SQR);
        crossCorr(img2, mask2, temp_result, Point(0,0), 0, 0);
        crossCorr(img, templ.mul(mask2), result, Point(0,0), 0, 0);
        // result and temp_result should not be switched, because temp_result is potentially needed
        // for normalization.
        result = -2 * result + temp_result + templ2_mask2_sum;

        if (method == CV_TM_SQDIFF_NORMED)
        {
            sqrt(templ2_mask2_sum * temp_result, temp_result);
            result /= temp_result;
        }
    }
    else if (method == CV_TM_CCORR || method == CV_TM_CCORR_NORMED)
    {
        // If the mul() is ever unnested, declare MatExpr, *not* Mat, to be more efficient.
        Mat templ_mask2 = templ.mul(mask.mul(mask));
        crossCorr(img, templ_mask2, result, Point(0,0), 0, 0);

        if (method == CV_TM_CCORR_NORMED)
        {
            Mat temp_result(corrSize, CV_32F);
            Mat img2 = img.mul(img);
            Mat mask2 = mask.mul(mask);
            // NORM_L2SQR calculates sum of squares
            double templ2_mask2_sum = norm(templ.mul(mask), NORM_L2SQR);
            crossCorr( img2, mask2, temp_result, Point(0,0), 0, 0 );
            sqrt(templ2_mask2_sum * temp_result, temp_result);
            result /= temp_result;
        }
    }
    else if (method == CV_TM_CCOEFF || method == CV_TM_CCOEFF_NORMED)
    {
        // Do mul() inline or declare MatExpr where possible, *not* Mat, to be more efficient.
        Scalar mask_sum = sum(mask);
        // T' * M where T' = M * (T - 1/sum(M)*sum(M*T))
        Mat templx_mask = mask.mul(mask.mul(templ - sum(mask.mul(templ)).div(mask_sum)));
        Mat img_mask_corr(corrSize, img.type()); // Needs separate channels

        // CCorr(I, T'*M)
        crossCorr(img, templx_mask, result, Point(0, 0), 0, 0);
        // CCorr(I, M)
        crossCorr(img, mask, img_mask_corr, Point(0, 0), 0, 0);

        // CCorr(I', T') = CCorr(I, T'*M) - sum(T'*M)/sum(M)*CCorr(I, M)
        // It does not matter what to use Mat/MatExpr, it should be evaluated to perform assign subtraction
        Mat temp_res = img_mask_corr.mul(sum(templx_mask).div(mask_sum));
        if (img.channels() == 1)
        {
            result -= temp_res;
        }
        else
        {
            // Sum channels of expression
            temp_res = temp_res.reshape(1, result.rows * result.cols);
            // channels are now columns
            reduce(temp_res, temp_res, 1, REDUCE_SUM);
            // transform back, but now with only one channel
            result -= temp_res.reshape(1, result.rows);
        }

        if (method == CV_TM_CCOEFF_NORMED)
        {
            // norm(T')
            double norm_templx = norm(mask.mul(templ - sum(mask.mul(templ)).div(mask_sum)),
                                      NORM_L2);
            // norm(I') = sqrt{ CCorr(I^2, M^2) - 2*CCorr(I, M^2)/sum(M)*CCorr(I, M)
            //                  + sum(M^2)*CCorr(I, M)^2/sum(M)^2 }
            //          = sqrt{ CCorr(I^2, M^2)
            //                  + CCorr(I, M)/sum(M)*{ sum(M^2) / sum(M) * CCorr(I,M)
            //                  - 2 * CCorr(I, M^2) } }
            Mat norm_imgx(corrSize, CV_32F);
            Mat img2 = img.mul(img);
            Mat mask2 = mask.mul(mask);
            Scalar mask2_sum = sum(mask2);
            Mat img_mask2_corr(corrSize, img.type());
            crossCorr(img2, mask2, norm_imgx, Point(0,0), 0, 0);
            crossCorr(img, mask2, img_mask2_corr, Point(0,0), 0, 0);
            temp_res = img_mask_corr.mul(Scalar(1.0, 1.0, 1.0, 1.0).div(mask_sum))
                           .mul(img_mask_corr.mul(mask2_sum.div(mask_sum)) - 2 * img_mask2_corr);
            if (img.channels() == 1)
            {
                norm_imgx += temp_res;
            }
            else
            {
                // Sum channels of expression
                temp_res = temp_res.reshape(1, result.rows*result.cols);
                // channels are now columns
                // reduce sums columns (= channels)
                reduce(temp_res, temp_res, 1, REDUCE_SUM);
                // transform back, but now with only one channel
                norm_imgx += temp_res.reshape(1, result.rows);
            }
            sqrt(norm_imgx, norm_imgx);
            result /= norm_imgx * norm_templx;
        }
    }
}
```

As you can see, the CCOEFF_NORMED branch calls crossCorr, which is DFT-based, four times. Three of those calls correlate the target image with the mask, to obtain the sum and the sum of squares of the target image inside the template footprint. The last one, CCorr(I, mask2), can be dropped: because the mask is binary, mask2 equals mask, so it gives exactly the same result as CCorr(I, mask).

```cpp
// CCorr(I, T'*M)
crossCorr(img, templx_mask, result, Point(0, 0), 0, 0);
// CCorr(I, M)
crossCorr(img, mask, img_mask_corr, Point(0, 0), 0, 0);
// CCorr(I^2, M^2)
crossCorr(img2, mask2, norm_imgx, Point(0,0), 0, 0);
// CCorr(I, M^2): identical to CCorr(I, M) when the mask is binary
crossCorr(img, mask2, img_mask2_corr, Point(0,0), 0, 0);
```
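Written out, the simplification for a binary mask ($M^2 = M$) looks like this. It is my own rewriting of the comments in the OpenCV source above, with $n = \sum M$, $\bar T = \frac{1}{n}\sum MT$ and $T' = M(T - \bar T)$; since $M^2 = M$, the source's $T' \cdot M$ is just $T'$:

$$
\begin{aligned}
n &= \textstyle\sum M = \sum M^2, \qquad \bar T = \tfrac{1}{n}\textstyle\sum M T, \qquad T' = M\,(T - \bar T) \\
\mathrm{CCorr}(I', T') &= \mathrm{CCorr}(I, T') - \tfrac{\sum T'}{n}\,\mathrm{CCorr}(I, M) = \mathrm{CCorr}(I, T') \quad \text{since } \textstyle\sum T' = 0 \\
\lVert I' \rVert^2 &= \mathrm{CCorr}(I^2, M) - \tfrac{1}{n}\,\mathrm{CCorr}(I, M)^2 \\
R &= \frac{\mathrm{CCorr}(I, T')}{\lVert T' \rVert \,\sqrt{\mathrm{CCorr}(I^2, M) - \mathrm{CCorr}(I, M)^2 / n}}
\end{aligned}
$$

Only $\mathrm{CCorr}(I, T')$, $\mathrm{CCorr}(I, M)$ and $\mathrm{CCorr}(I^2, M)$ depend on the target image; $\lVert T' \rVert$ is computed once per template angle.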

So the time-consuming part is these three correlations, which can be accelerated with SIMD. OpenCV already wraps SIMD instructions (its universal intrinsics); for how to use them, see this blogger's article "OpenCV 4.x / 3.4.x and above also provide powerful universal vector instructions".

In my tests it was faster to use crossCorr for the global search on the top pyramid level, and SIMD to compute the local correlations around the candidate positions on the lower levels.
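A scalar sketch of that local step follows, assuming a binary mask and a plain row-major float image. `ImageF`, `maskedNccAt`, `Match` and `localSearch` are hypothetical names for illustration, not OpenCV API. It evaluates the masked CCOEFF_NORMED score directly, only at offsets within a small window around a position predicted from the coarser pyramid level; the inner accumulation loop is the part you would vectorise with SIMD.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal single-channel float image, row-major.
struct ImageF {
    int width = 0;
    int height = 0;
    std::vector<float> data;
    float at(int x, int y) const { return data[static_cast<std::size_t>(y) * width + x]; }
};

// Masked CCOEFF_NORMED score with the template's top-left corner at (x0, y0).
// Assumes a binary mask (0 or 1), so one image-mask sum serves for both CCorr(I, M) and CCorr(I, M^2).
double maskedNccAt(const ImageF& img, const ImageF& templ, const ImageF& mask, int x0, int y0) {
    assert(mask.width == templ.width && mask.height == templ.height);
    assert(x0 >= 0 && y0 >= 0 && x0 + templ.width <= img.width && y0 + templ.height <= img.height);

    // Template mean over the mask (in practice precomputed once per angle).
    double n = 0.0, sumT = 0.0;
    for (int y = 0; y < templ.height; ++y)
        for (int x = 0; x < templ.width; ++x)
            if (mask.at(x, y) > 0.0f) { n += 1.0; sumT += templ.at(x, y); }
    if (n == 0.0) return 0.0;
    const double meanT = sumT / n;

    double sumTT = 0.0, sumI = 0.0, sumII = 0.0, sumTI = 0.0;
    for (int y = 0; y < templ.height; ++y) {
        for (int x = 0; x < templ.width; ++x) {
            if (mask.at(x, y) <= 0.0f) continue;
            const double t = templ.at(x, y) - meanT;  // T'
            const double i = img.at(x0 + x, y0 + y);
            sumTT += t * t;  // ||T'||^2
            sumI += i;       // CCorr(I, M) at this offset
            sumII += i * i;  // CCorr(I^2, M) at this offset
            sumTI += t * i;  // CCorr(I, T') at this offset
        }
    }
    const double varI = sumII - sumI * sumI / n;  // ||I'||^2
    const double denom = std::sqrt(std::max(0.0, sumTT * varI));
    return denom > 1e-12 ? sumTI / denom : 0.0;  // flat regions score 0
}

struct Match { int x; int y; double score; };

// Evaluate only offsets within +-radius of (cx, cy), clamped to valid placements.
Match localSearch(const ImageF& img, const ImageF& templ, const ImageF& mask,
                  int cx, int cy, int radius) {
    Match best{cx, cy, -2.0};  // NCC lies in [-1, 1], so any evaluated offset beats -2
    const int maxX = img.width - templ.width;
    const int maxY = img.height - templ.height;
    for (int y = std::max(0, cy - radius); y <= std::min(maxY, cy + radius); ++y)
        for (int x = std::max(0, cx - radius); x <= std::min(maxX, cx + radius); ++x) {
            const double s = maskedNccAt(img, templ, mask, x, y);
            if (s > best.score) best = {x, y, s};
        }
    return best;
}
```

For example, with a 3x3 patch pasted into an otherwise empty 8x8 image at (4, 2), `localSearch(img, templ, mask, 3, 3, 2)` should return offset (4, 2) with a score of essentially 1.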

2. Rotation of the template

- Create a canvas, paddingImg, whose side is the template's diagonal length + 1, and copy the template into its centre, so that the rotated template never extends past the border. The code is below.

- Also, use INTER_NEAREST interpolation for the rotation. I tried several other interpolation modes, and with them the match sometimes could not be found when matching on the higher pyramid levels.

```cpp
void NccMatch::RotateImg(Mat mImg, int nAngle, Mat& outImg, Mat& mask, RotatedRect* ptrMinRect, Point2d pRC)
{
    if (mImg.depth() != CV_32F)
    {
        mImg.convertTo(mImg, CV_32F);
    }

    // Square canvas with side = template diagonal + 1, so the rotated template stays inside it
    int nDiagonal = static_cast<int>(std::sqrt(std::pow(mImg.cols, 2) + std::pow(mImg.rows, 2))) + 1;

    // Fill with -1 so padding can be told apart from template pixels (>= 0 for 8-bit input)
    Mat paddingImg = -1 * Mat::ones(Size(nDiagonal, nDiagonal), mImg.type());
    Rect roi(Point((nDiagonal - mImg.cols) / 2, (nDiagonal - mImg.rows) / 2), Size(mImg.cols, mImg.rows));
    mImg.copyTo(paddingImg(roi));

    // Rotate about the canvas centre; INTER_NEAREST never blends template and padding values
    Mat M = getRotationMatrix2D(Point2d(paddingImg.cols * 0.5, paddingImg.rows * 0.5), nAngle, 1.0);
    warpAffine(paddingImg, outImg, M, paddingImg.size(), INTER_NEAREST, BORDER_CONSTANT, Scalar::all(-2));

    // Mask: 1 where the value came from the template (> -1), 0 for padding (-1) and border (-2)
    mask = outImg.clone();
    threshold(mask, mask, -1, 1, THRESH_BINARY);

    if (ptrMinRect != nullptr)
    {
        ptrMinRect->center = Point2d(paddingImg.cols * 0.5, paddingImg.rows * 0.5);
        ptrMinRect->size = Size2f(mImg.cols, mImg.rows);
        ptrMinRect->angle = -nAngle;
    }
}
```
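To see the pad-then-mask idea without OpenCV, here is a dependency-free sketch. It is not the code above: `ImageF`, `RotatedTemplate` and `rotateNearest` are hypothetical names. It returns the mask as a separate image instead of using -1/-2 sentinel values, rotates about the template's own centre, and, as an assumption of this sketch, uses a canvas side of ceil(diagonal) + 2 to leave a little slack for nearest-neighbour rounding at the corners. The rotation matrix is intended to follow the getRotationMatrix2D convention (positive angle is counter-clockwise on screen, y axis pointing down).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical minimal single-channel float image, row-major.
struct ImageF {
    int width = 0;
    int height = 0;
    std::vector<float> data;
};

struct RotatedTemplate {
    ImageF image;  // rotated template values, 0 where mask is 0
    ImageF mask;   // 1 where the pixel comes from the template, 0 for padding
};

// Nearest-neighbour rotation of `src` by `angleDeg` into a square canvas.
RotatedTemplate rotateNearest(const ImageF& src, double angleDeg) {
    assert(src.width > 0 && src.height > 0);
    assert(src.data.size() == static_cast<std::size_t>(src.width) * src.height);
    const double kPi = 3.14159265358979323846;

    const int side = static_cast<int>(std::ceil(std::hypot(src.width, src.height))) + 2;
    const int offX = (side - src.width) / 2;  // where the unrotated template would sit
    const int offY = (side - src.height) / 2;
    const double cx = offX + (src.width - 1) * 0.5;  // template centre in canvas coordinates
    const double cy = offY + (src.height - 1) * 0.5;
    const double a = angleDeg * kPi / 180.0;
    const double c = std::cos(a), s = std::sin(a);

    const std::size_t count = static_cast<std::size_t>(side) * side;
    RotatedTemplate out{ImageF{side, side, std::vector<float>(count, 0.0f)},
                        ImageF{side, side, std::vector<float>(count, 0.0f)}};
    for (int v = 0; v < side; ++v) {
        for (int u = 0; u < side; ++u) {
            // Inverse mapping: rotate each destination pixel back to find its source pixel.
            const double du = u - cx, dv = v - cy;
            const long sx = std::lround(cx + c * du - s * dv) - offX;
            const long sy = std::lround(cy + s * du + c * dv) - offY;
            if (sx < 0 || sy < 0 || sx >= src.width || sy >= src.height) continue;
            const std::size_t dst = static_cast<std::size_t>(v) * side + u;
            out.image.data[dst] = src.data[static_cast<std::size_t>(sy) * src.width + sx];
            out.mask.data[dst] = 1.0f;
        }
    }
    return out;
}
```

Keeping the mask separate means the template values themselves never need a reserved "empty" value, which matters if the template is not 8-bit.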

3. Matching

Be sure to pad the target image so that the template can slide over every pixel; otherwise you will find that some images simply refuse to match, no matter what you do.
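Padding also matters for a more mundane reason: matchTemplateMask asserts that the image is at least as large as the template, and the rotated template canvas is larger than the template itself. Below is a minimal sketch of constant-border padding on a row-major float image. `ImageF`, `padConstant` and `padForTemplateCanvas` are hypothetical names, and padding by half the canvas side with a constant fill is an assumption; with OpenCV `Mat`s, `cv::copyMakeBorder(src, dst, top, bottom, left, right, cv::BORDER_CONSTANT, value)` does the same job.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal single-channel float image, row-major.
struct ImageF {
    int width = 0;
    int height = 0;
    std::vector<float> data;
};

// Pad `padX` columns on the left and right and `padY` rows on the top and bottom with `fill`.
ImageF padConstant(const ImageF& src, int padX, int padY, float fill) {
    assert(padX >= 0 && padY >= 0);
    assert(src.data.size() == static_cast<std::size_t>(src.width) * src.height);
    ImageF dst{src.width + 2 * padX, src.height + 2 * padY, {}};
    dst.data.assign(static_cast<std::size_t>(dst.width) * dst.height, fill);
    for (int y = 0; y < src.height; ++y)
        for (int x = 0; x < src.width; ++x)
            dst.data[static_cast<std::size_t>(y + padY) * dst.width + (x + padX)] =
                src.data[static_cast<std::size_t>(y) * src.width + x];
    return dst;
}

// Pad by half the rotated-template canvas side, so the canvas centre can sit on every
// original target pixel and the padded image is never smaller than the canvas.
ImageF padForTemplateCanvas(const ImageF& target, int canvasSide, float fill) {
    const int half = canvasSide / 2;
    return padConstant(target, half, half, fill);
}
```

Match positions found in the padded image must then be shifted back by the padding amount.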

The final results are shown below. Some of the test images come from this excellent project: https://github.com/DennisLiu1993/Fastest_Image_Pattern_Matching. My angle step size and sub-pixel calculations are also based on that project.
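I have not reproduced that project's code here. The sketch below uses a common heuristic for the angle step, atan(2 / max(w, h)) computed at the top pyramid level (I believe the project uses something similar, but treat that as an assumption and check its source), and a simple per-axis parabolic fit for the sub-pixel peak, which may differ from the fit used there. `angleStepDeg` and `parabolicPeakOffset` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Angle step in degrees: one step moves a point at distance max(w, h) from the
// rotation centre by roughly 2 pixels.
double angleStepDeg(int templWidth, int templHeight) {
    assert(templWidth > 0 && templHeight > 0);
    const double kPi = 3.14159265358979323846;
    return std::atan(2.0 / std::max(templWidth, templHeight)) * 180.0 / kPi;
}

// Sub-pixel peak offset along one axis: fit a parabola through (-1, left), (0, center),
// (1, right) and return the x of its vertex. If center is a strict maximum the result
// lies in (-0.5, 0.5); apply it separately in x and y (and optionally to the angle).
double parabolicPeakOffset(double left, double center, double right) {
    const double denom = left - 2.0 * center + right;
    if (std::abs(denom) < 1e-12) return 0.0;  // no curvature: keep the integer position
    return 0.5 * (left - right) / denom;
}
```

For a 100x50 template the step comes out at about 1.15 degrees, and scores of (0.8, 1.0, 0.9) around a peak shift it about 1/6 pixel towards the larger neighbour.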

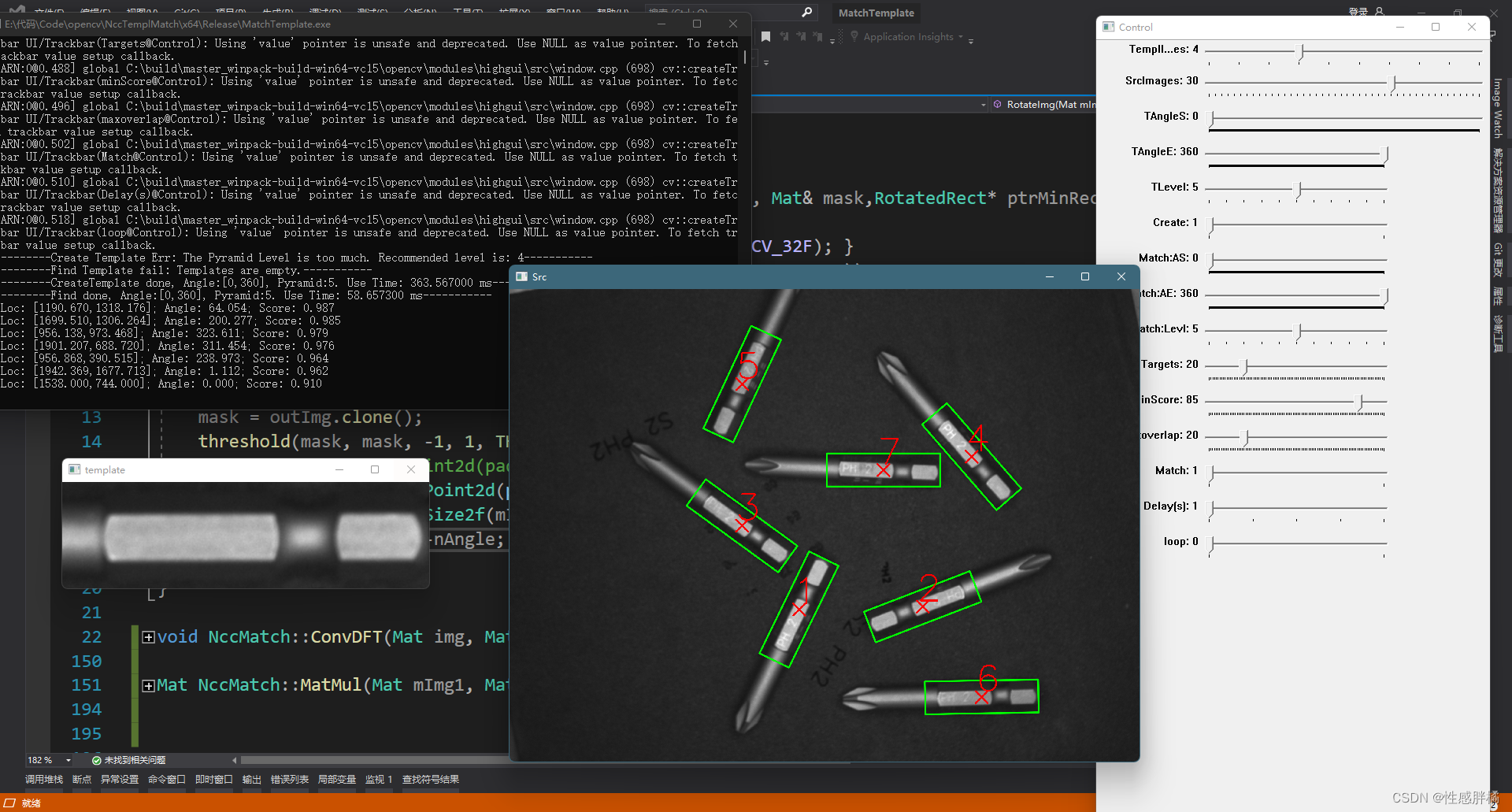

Target image 2592x1944, template 149x150, angle range [0, 360], time: about 34 ms

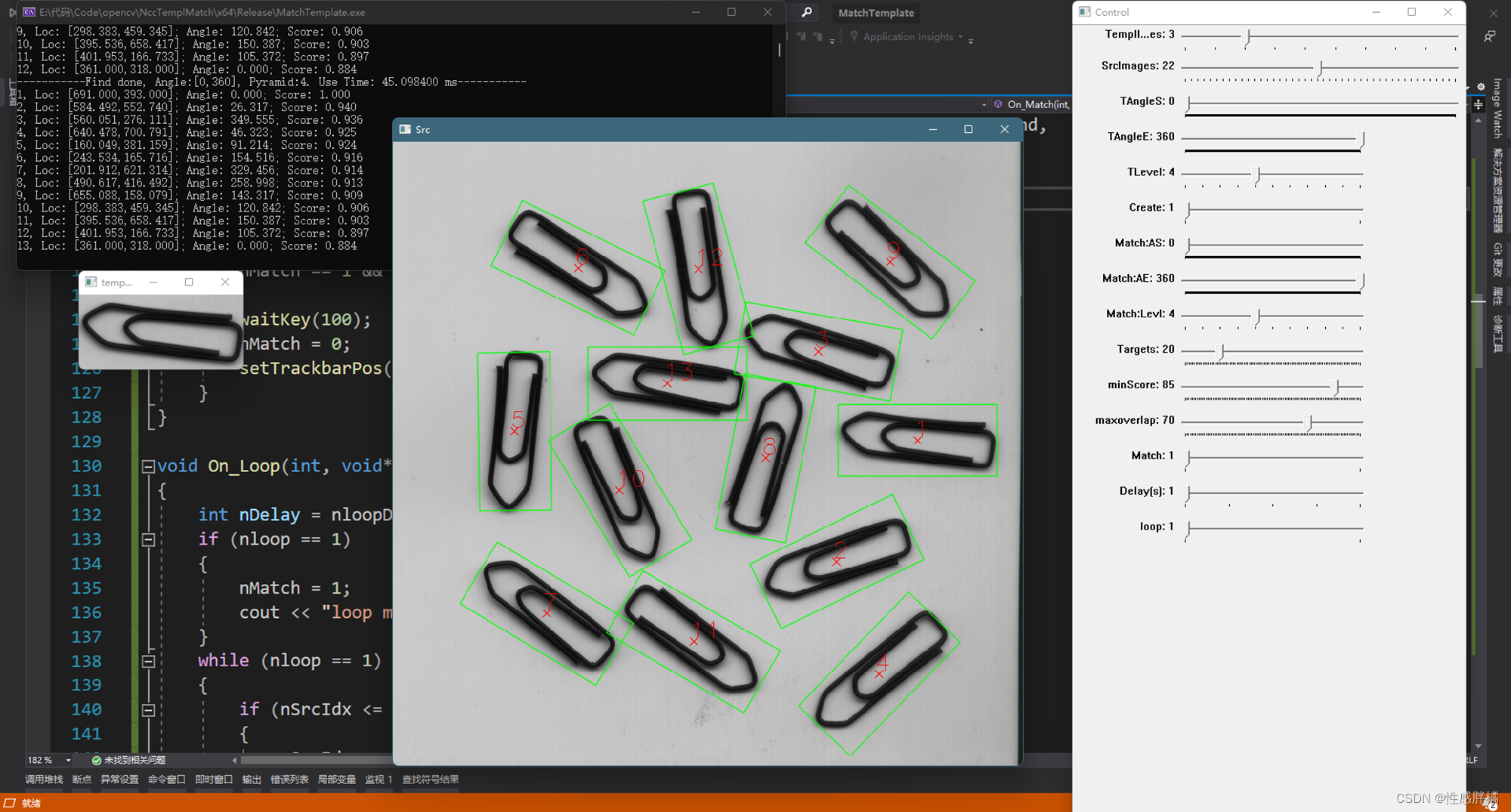

Target image 646x492, template 214x98, angle range [0, 360], 4 pyramid levels, time: about 14 ms

Target image 2592x1944, template 466x135, angle range [0, 360], 5 pyramid levels, time: about 58 ms

Target image 830x822, template 209x95, angle range [0, 360], 4 pyramid levels, time: about 45 ms


Update:

I added serialization of template files and wrapped the NCC matching functions into a DLL, and built a simple WPF demo, shown below. The demo shows how to call the DLL's interface; the demo can be downloaded here (the NCC matching source code is not included).