The previous article briefly introduced one kind of template matching: multi-angle NCC template matching. After applying it in a real inspection project (already deployed), I found that its accuracy and stability still leave room for improvement, especially on images with complex backgrounds or when the template is poorly chosen. I also experimented with matching strategies based on gradient changes, but never got them to work satisfactorily in real projects (maybe my skills just aren't good enough, haha). So today I'm sharing two other, fairly practical matching methods for comparison!

1). When the image contains a complete, easily extracted contour, shape matching (shapematch) is a simple and fast method. It does not need to rotate the template through fixed angle steps during the search, and there is no need to worry about the blank corner areas left after rotating the template lowering the match score. Just follow a fixed procedure:

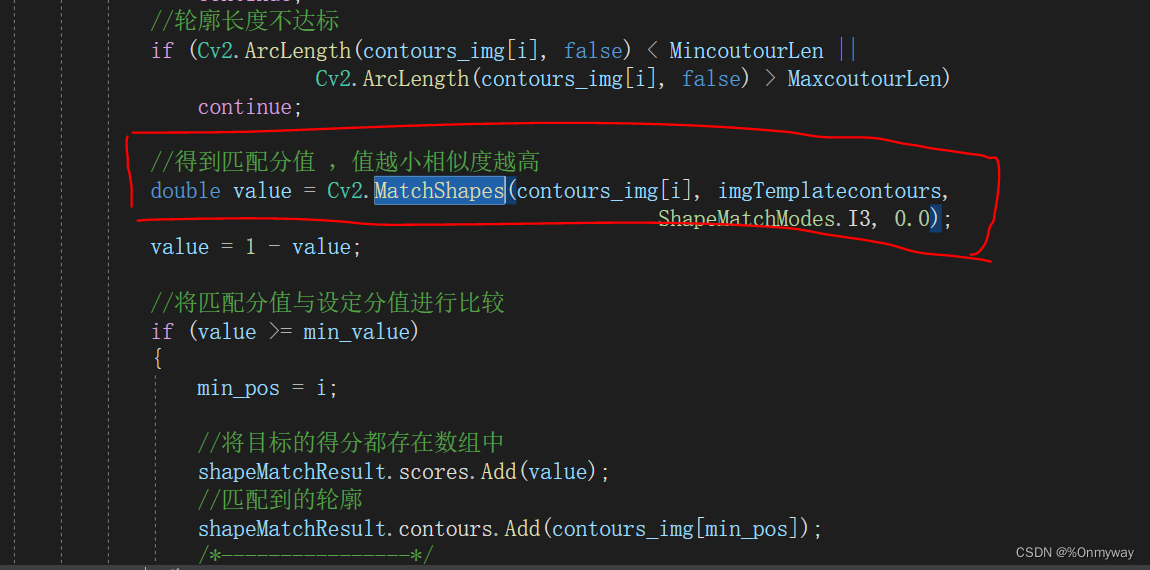

Matching is done with OpenCV's built-in MatchShapes method, but the centroid and angle of the match need further processing. The centroid is computed as follows:

```csharp
// Get the centroid of the best-matching contour
Moments M = Cv2.Moments(bestcontour);
double cX = M.M10 / M.M00;
double cY = M.M01 / M.M00;
```
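When Cv2.Moments is given a contour, OpenCV treats the points as a closed polygon and computes its moments with Green's formula, so M00 is the enclosed area and M10/M00, M01/M00 give the area centroid rather than the average of the contour points. The sketch below reproduces that calculation without any OpenCV dependency, assuming the contour is supplied as an array of (x, y) vertices; `PolygonMoments.PolygonCentroid` is a hypothetical helper written for illustration, not part of OpenCvSharp.

```csharp
using System;

public static class PolygonMoments
{
    // Area centroid (cX, cY) of a closed polygon, computed from the raw
    // moments m00, m10 and m01 with the shoelace / Green's-theorem formulas.
    public static (double cX, double cY) PolygonCentroid((double X, double Y)[] pts)
    {
        double m00 = 0, m10 = 0, m01 = 0;
        for (int i = 0; i < pts.Length; i++)
        {
            var p = pts[i];
            var q = pts[(i + 1) % pts.Length]; // wrap around to close the polygon
            double cross = p.X * q.Y - q.X * p.Y;
            m00 += cross;
            m10 += (p.X + q.X) * cross;
            m01 += (p.Y + q.Y) * cross;
        }
        m00 /= 2.0; // signed area (the sign cancels in the ratios below)
        m10 /= 6.0;
        m01 /= 6.0;
        if (Math.Abs(m00) < 1e-12)
            throw new ArgumentException("Degenerate contour: zero area");
        return (m10 / m00, m01 / m00);
    }
}
```

For the L-shaped contour (0,0), (2,0), (2,1), (1,1), (1,2), (0,2) the area centroid is (5/6, 5/6), while the plain average of its six vertices is (1, 1); this is why the moment ratio is used instead of averaging contour points.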

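The angle is computed as follows:

```csharp
// Result range: -90 to 90 degrees.
// The target's minimum bounding rectangle is known in advance to be a rectangle
// (not a square), so the rotation can be derived from the orientation of the
// minimum-area rectangles of the template contour and the matched contour.
// Note: the range of RotatedRect.Angle returned by MinAreaRect differs between
// OpenCV versions; verify the 90-degree compensation against the version you use.
RotatedRect rect_template = Cv2.MinAreaRect(imgTemplatecontours);
RotatedRect rect_search = Cv2.MinAreaRect(bestcontour);

// Do the two rotated rectangles have the same orientation (long side matched to long side)?
float sign = (rect_template.Size.Width - rect_template.Size.Height) *
             (rect_search.Size.Width - rect_search.Size.Height);

float angle = 0;
if (sign > 0)
    // Same orientation: the angles can be subtracted directly
    angle = rect_search.Angle - rect_template.Angle;
else
    // Width and height are swapped: compensate by 90 degrees
    angle = (90 + rect_search.Angle) - rect_template.Angle;

// Normalize to the -90 to 90 degree range
if (angle > 90)
    angle -= 180;
else if (angle < -90)
    angle += 180;
```

The test results are as follows: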

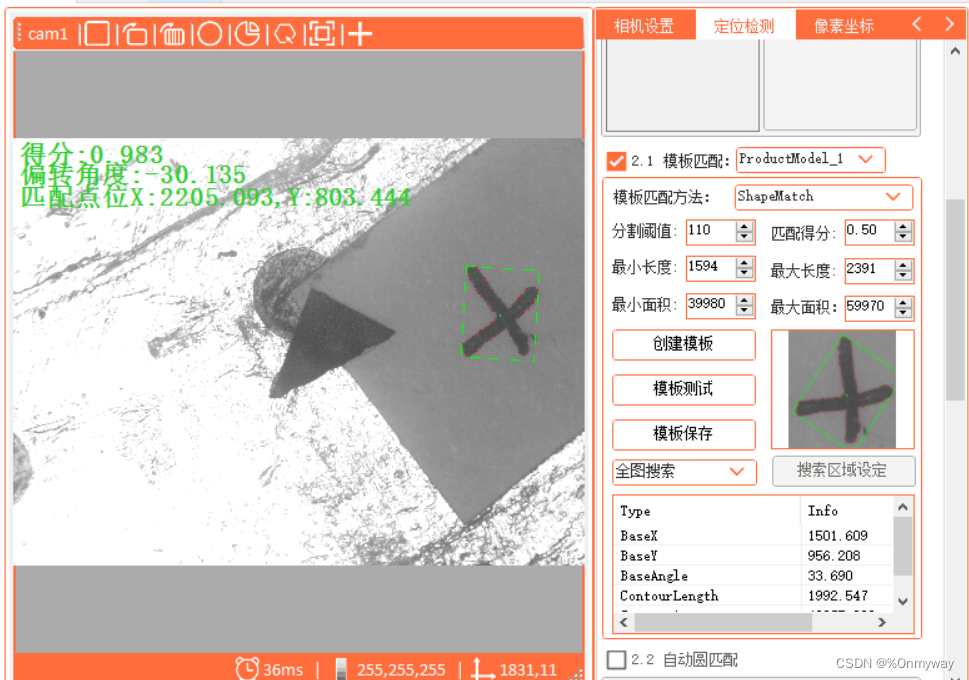

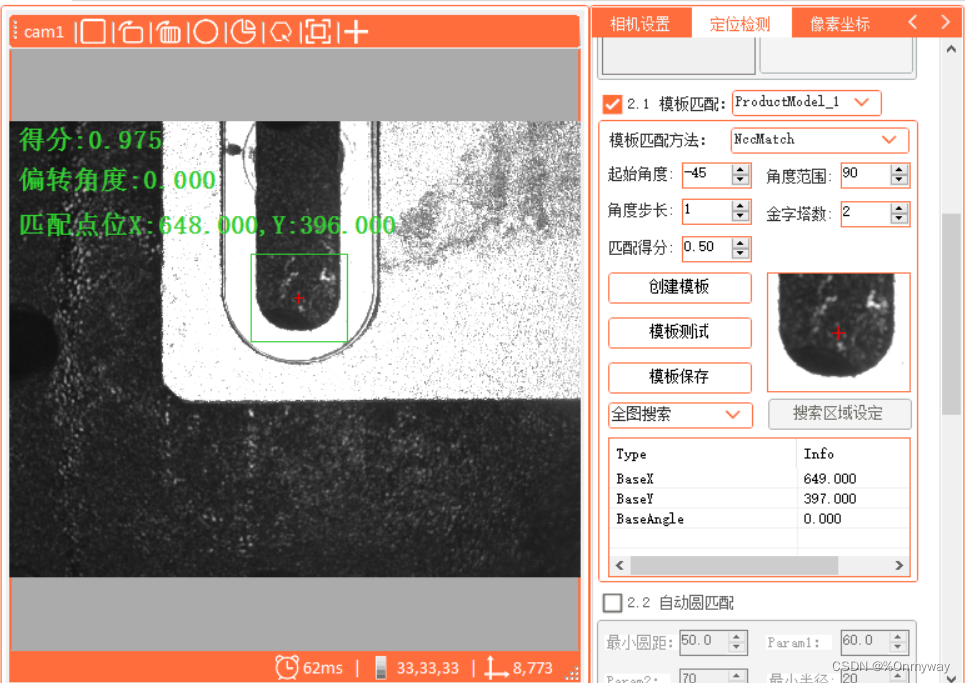

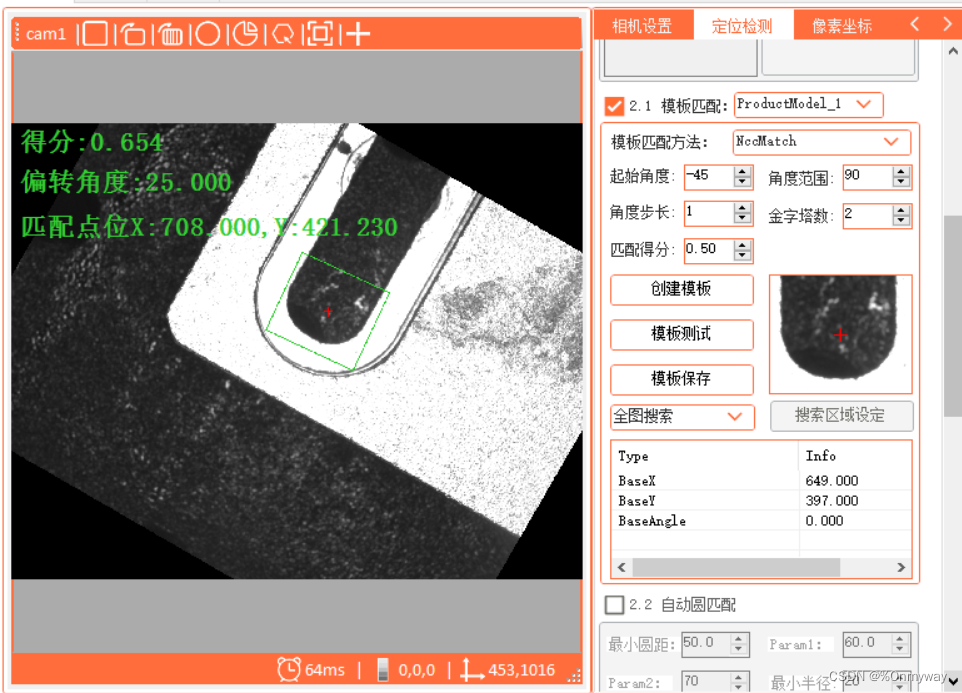

0 degrees:

-30 degrees:

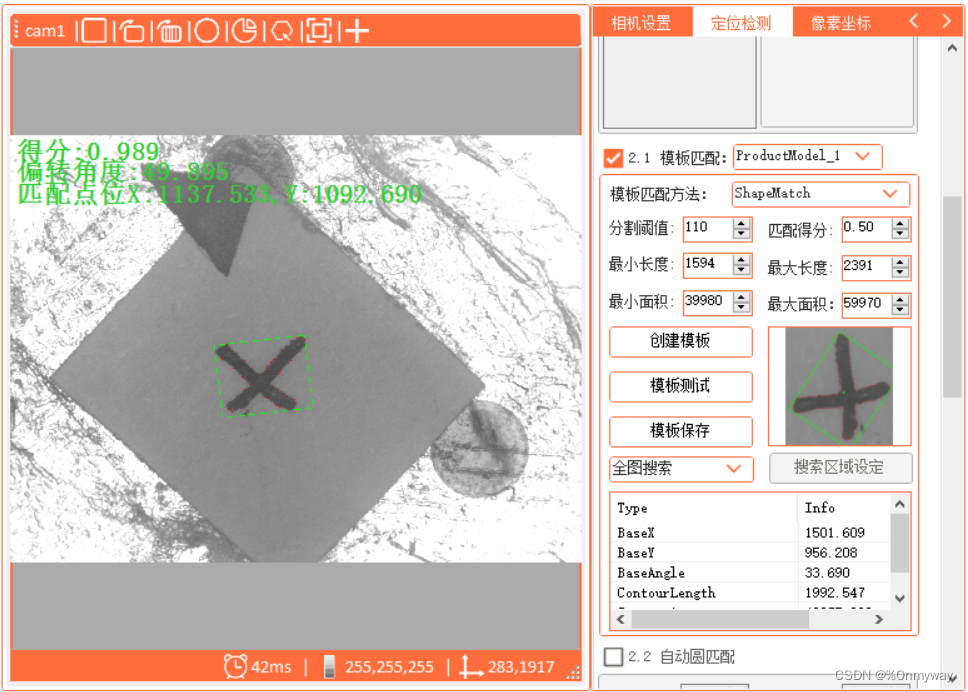

50 degrees:

With shape matching (shapematch), one test run takes about 40 ms. The time naturally depends on the image size and the contour size; either way, the search can be sped up further by building an image pyramid.

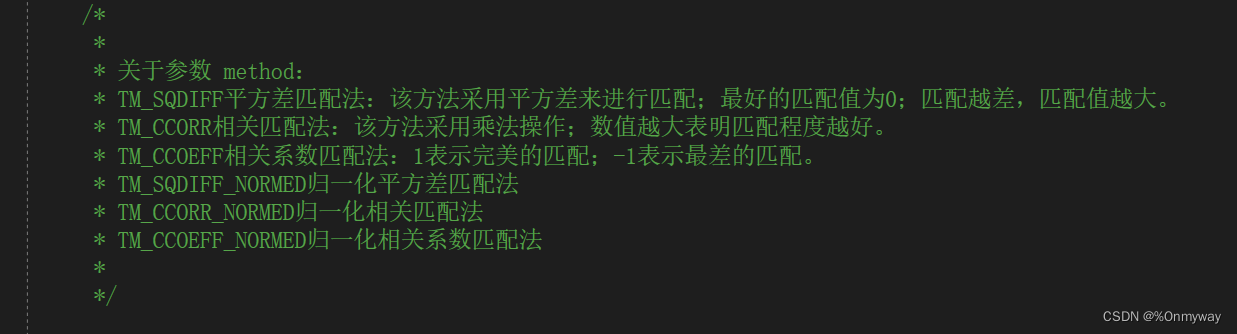
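To make the pyramid speed-up concrete: each PyrDown level halves both dimensions, so a position found at level n maps back to full resolution by multiplying by 2^n (with an uncertainty of roughly 2^n pixels, which is why a refinement step follows). For a direct, non-FFT sliding-window match, halving both image and template cuts the number of positions and the pixels per position by about 4 each. The sketch below assumes integer halving as in `new Size(src.Cols / 2, src.Rows / 2)`; `PyramidMath` and its methods are hypothetical helpers for illustration, and OpenCV may use a DFT-based path for large templates, so real timings will differ.

```csharp
using System;

public static class PyramidMath
{
    // Size of an image after `levels` PyrDown steps, using integer halving
    // like new Size(cols / 2, rows / 2).
    public static (int W, int H) LevelSize(int width, int height, int levels)
    {
        for (int i = 0; i < levels; i++) { width /= 2; height /= 2; }
        return (width, height);
    }

    // Maps a point found at pyramid level `levels` back to full resolution.
    public static (int X, int Y) ToFullResolution(int x, int y, int levels)
        => (x << levels, y << levels);

    // Rough multiply-add count of a direct sliding-window match:
    // (number of template positions) x (template pixels).
    public static long DirectMatchCost(int imgW, int imgH, int tplW, int tplH)
        => (long)(imgW - tplW + 1) * (imgH - tplH + 1) * tplW * tplH;
}
```

For a 640×480 image and a 64×64 template, two levels shrink them to 160×120 and 16×16, and the direct-match cost drops by a factor of roughly 250.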

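2). Next, a brief description of NCC (normalized cross-correlation) template matching. OpenCV's MatchTemplate supports several matching modes: SqDiff, SqDiffNormed, CCorr, CCorrNormed, CCoeff and CCoeffNormed (the TemplateMatchModes enum in OpenCvSharp).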
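The refinement code relies on CCoeffNormed, the normalized correlation of mean-subtracted values. At a single offset it reduces to R = Σ T'·I' / sqrt(Σ T'² · Σ I'²), where T' and I' are the template and the image window with their means removed. Below is a dependency-free sketch for one offset, without a mask; `Ncc.NccScore` is a hypothetical helper for illustration, not the OpenCvSharp API, which evaluates this at every offset at once.

```csharp
using System;

public static class Ncc
{
    // TM_CCOEFF_NORMED-style score between a template and the equally sized
    // image window whose top-left corner is (ox, oy). Result lies in [-1, 1].
    public static double NccScore(double[,] image, double[,] tpl, int ox, int oy)
    {
        int h = tpl.GetLength(0), w = tpl.GetLength(1);
        double meanT = 0, meanI = 0;
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                meanT += tpl[y, x];
                meanI += image[oy + y, ox + x];
            }
        meanT /= w * h;
        meanI /= w * h;

        double num = 0, sumT2 = 0, sumI2 = 0;
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                double t = tpl[y, x] - meanT;
                double i = image[oy + y, ox + x] - meanI;
                num += t * i;
                sumT2 += t * t;
                sumI2 += i * i;
            }
        double den = Math.Sqrt(sumT2 * sumI2);
        // A flat template or window makes the denominator zero: the score is undefined
        return den > 0 ? num / den : double.NaN;
    }
}
```

A window equal to 2 × template + 10 scores 1.0, which is the brightness and contrast invariance that makes NCC usable under lighting changes. A perfectly flat template gives a zero denominator, one plausible source of the infinite or undefined scores that the matching loop guards against.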

The key matching loop is as follows:

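```csharp
// Coarse search: rotate the template through [start, start + range] in steps of `step`
// and keep the angle with the highest score. This assumes a correlation-type mode
// (CCorrNormed / CCoeffNormed), where a larger value means a better match.
for (int i = 0; i <= (int)range / step; i++)
{
    // Rotate the template and get its valid-area mask and bounding rectangle
    newtemplate = ImageRotate(model, start + step * i, ref rotatedRect, ref mask);
    // The rotated template must fit inside the search image
    if (newtemplate.Width > src.Width || newtemplate.Height > src.Height)
        continue;

    Cv2.MatchTemplate(src, newtemplate, result, matchMode, mask);
    Cv2.MinMaxLoc(result, out double minval, out double maxval, out CVPoint minloc, out CVPoint maxloc, new Mat());

    // With a mask, some modes can yield inf/NaN scores (e.g. where the
    // normalizing denominator is zero); fall back to CCorrNormed in that case
    if (double.IsInfinity(maxval) || double.IsNaN(maxval))
    {
        Cv2.MatchTemplate(src, newtemplate, result, TemplateMatchModes.CCorrNormed, mask);
        Cv2.MinMaxLoc(result, out minval, out maxval, out minloc, out maxloc, new Mat());
    }

    // Keep the best score with its location, angle and rotated bounding rectangle
    if (maxval > temp)
    {
        location = maxloc;
        temp = maxval;
        angle = start + step * i;
        modelrrect = rotatedRect;
    }
}
```

To speed up matching, use an image pyramid: downsample both images, search coarsely at the top level, then map the result back up and refine it: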

```csharp
// Build the pyramid on copies so the full-resolution images stay intact
// (restoring them with PyrUp would only give blurred images back)
Mat srcPyr = src.Clone();
Mat modelPyr = model.Clone();
// Downsample the template and the search image through the pyramid
for (int i = 0; i < numLevels; i++)
{
    Cv2.PyrDown(srcPyr, srcPyr, new Size(srcPyr.Cols / 2, srcPyr.Rows / 2));
    Cv2.PyrDown(modelPyr, modelPyr, new Size(modelPyr.Cols / 2, modelPyr.Rows / 2));
}

// ... run the coarse angle search above on srcPyr / modelPyr; it sets
// location, temp, angle and modelrrect at the top pyramid level ...

// To save time, go straight from the top level back to full resolution
// (one refinement pass instead of one per level)
double scale = Math.Pow(2, numLevels);
location.X = (int)(location.X * scale);
location.Y = (int)(location.Y * scale);
modelrrect = new RotatedRect(
    new Point2f((float)(modelrrect.Center.X * scale), (float)(modelrrect.Center.Y * scale)),
    new Size2f(modelrrect.Size.Width * scale, modelrrect.Size.Height * scale), 0);

// Center of the coarse match projected onto the full-resolution image
CVPoint cenP = new CVPoint(location.X + modelrrect.Center.X, location.Y + modelrrect.Center.Y);

// Search window of ± one template size around that center, clipped to the image
int startX = cenP.X - model.Width;
int startY = cenP.Y - model.Height;
int endX = cenP.X + model.Width;
int endY = cenP.Y + model.Height;
CVRect cropRegion = new CVRect(startX, startY, endX - startX, endY - startY);
cropRegion = cropRegion.Intersect(new CVRect(0, 0, src.Width, src.Height));
Mat newSrc = MatExtension.Crop_Mask_Mat(src, cropRegion);

// Finer angle search: 2-degree steps over a 20-degree window around the coarse angle
step = 2;
range = 20;
start = angle - 10;
// Full-resolution scores are not comparable with the coarse ones, so restart the best score
temp = double.MinValue;
bool testFlag = false;
for (int k = 0; k <= (int)range / step; k++)
{
    newtemplate = ImageRotate(model, start + step * k, ref rotatedRect, ref mask);
    if (newtemplate.Width > newSrc.Width || newtemplate.Height > newSrc.Height)
        continue;

    Cv2.MatchTemplate(newSrc, newtemplate, result, TemplateMatchModes.CCoeffNormed, mask);
    Cv2.MinMaxLoc(result, out double minval, out double maxval, out CVPoint minloc, out CVPoint maxloc, new Mat());
    if (double.IsInfinity(maxval) || double.IsNaN(maxval))
    {
        // The fallback must search the same cropped region (newSrc), not the whole image
        Cv2.MatchTemplate(newSrc, newtemplate, result, TemplateMatchModes.CCorrNormed, mask);
        Cv2.MinMaxLoc(result, out minval, out maxval, out minloc, out maxloc, new Mat());
    }

    if (maxval > temp)
    {
        // Local coordinates (relative to cropRegion)
        location = maxloc;
        temp = maxval;
        angle = start + step * k;
        modelrrect = rotatedRect;
        testFlag = true;
    }
}

if (testFlag)
{
    // Local coordinates -> full-image coordinates
    location.X += cropRegion.X;
    location.Y += cropRegion.Y;
}
```

Rotating the template enlarges its canvas, and the empty (invalid) corners of that canvas drag down the match score. To avoid this, we also create a mask image that marks only the valid template pixels:

```csharp
/// <summary>
/// Rotates an image and returns the rotated rectangle of the original image boundary
/// </summary>
/// <param name="image">Image (template) to rotate</param>
/// <param name="angle">Rotation angle in degrees (positive = clockwise on screen)</param>
/// <param name="imgBounding">Receives the rotated rectangle of the original image boundary</param>
/// <param name="maskMat">Receives the 8-bit mask of the valid (non-padded) area</param>
/// <returns>The rotated image, with everything outside the mask set to 0</returns>
public static Mat ImageRotate(Mat image, double angle, ref RotatedRect imgBounding, ref Mat maskMat)
{
    Mat newImg = new Mat();
    Point2f pt = new Point2f((float)image.Cols / 2, (float)image.Rows / 2);
    Mat M = Cv2.GetRotationMatrix2D(pt, -angle, 1.0);

    // Size of the canvas that holds the whole rotated image
    var mIndex = M.GetGenericIndexer<double>();
    double cos = Math.Abs(mIndex[0, 0]);
    double sin = Math.Abs(mIndex[0, 1]);
    int nW = (int)((image.Height * sin) + (image.Width * cos));
    int nH = (int)((image.Height * cos) + (image.Width * sin));
    // Shift the rotation so the result is centered on the new canvas
    mIndex[0, 2] += (nW / 2) - pt.X;
    mIndex[1, 2] += (nH / 2) - pt.Y;
    Cv2.WarpAffine(image, newImg, M, new CVSize(nW, nH));

    // Map the four corners of the original image through M, clamped to the canvas
    CVRect rect = new CVRect(0, 0, image.Width, image.Height);
    Point2f[] srcPoint2Fs =
    {
        new Point2f(rect.Left, rect.Top),
        new Point2f(rect.Right, rect.Top),
        new Point2f(rect.Right, rect.Bottom),
        new Point2f(rect.Left, rect.Bottom)
    };
    Point2f[] boundaryPoints = new Point2f[4];
    double A = M.Get<double>(0, 0), B = M.Get<double>(0, 1), C = M.Get<double>(0, 2); // C = Tx
    double D = M.Get<double>(1, 0), E = M.Get<double>(1, 1), F = M.Get<double>(1, 2); // F = Ty
    for (int i = 0; i < 4; i++)
    {
        boundaryPoints[i].X = (float)((A * srcPoint2Fs[i].X) + (B * srcPoint2Fs[i].Y) + C);
        boundaryPoints[i].Y = (float)((D * srcPoint2Fs[i].X) + (E * srcPoint2Fs[i].Y) + F);
        if (boundaryPoints[i].X < 0) boundaryPoints[i].X = 0;
        else if (boundaryPoints[i].X > nW) boundaryPoints[i].X = nW;
        if (boundaryPoints[i].Y < 0) boundaryPoints[i].Y = 0;
        else if (boundaryPoints[i].Y > nH) boundaryPoints[i].Y = nH;
    }

    // Rotated rectangle of the original boundary: center = midpoint of a diagonal,
    // size = lengths of two adjacent sides
    Point2f cenP = new Point2f((boundaryPoints[0].X + boundaryPoints[2].X) / 2,
                               (boundaryPoints[0].Y + boundaryPoints[2].Y) / 2);
    double width1 = Math.Sqrt(Math.Pow(boundaryPoints[0].X - boundaryPoints[1].X, 2) +
                              Math.Pow(boundaryPoints[0].Y - boundaryPoints[1].Y, 2));
    double width2 = Math.Sqrt(Math.Pow(boundaryPoints[0].X - boundaryPoints[3].X, 2) +
                              Math.Pow(boundaryPoints[0].Y - boundaryPoints[3].Y, 2));
    imgBounding = new RotatedRect(cenP, new Size2f(width1, width2), (float)angle);

    // Mask: draw the rotated rectangle outline, then flood-fill its inside with white
    Mat mask = new Mat(newImg.Size(), MatType.CV_8UC3, Scalar.Black);
    mask.DrawRotatedRect(imgBounding, Scalar.White, 1);
    Cv2.FloodFill(mask, new CVPoint(imgBounding.Center.X, imgBounding.Center.Y), Scalar.White);

    // Single-channel mask returned to the caller (a new Mat, so maskMat may be null on entry)
    maskMat = mask.CvtColor(ColorConversionCodes.BGR2GRAY);

    // Keep only the valid area of the rotated image
    Mat dst = new Mat();
    Cv2.BitwiseAnd(newImg, newImg, dst, maskMat);
    return dst;
}
```

At this point the preparation work is essentially complete. Matching precision (sub-pixel refinement) won't be covered here; refer to the previous article. The test results are as follows:

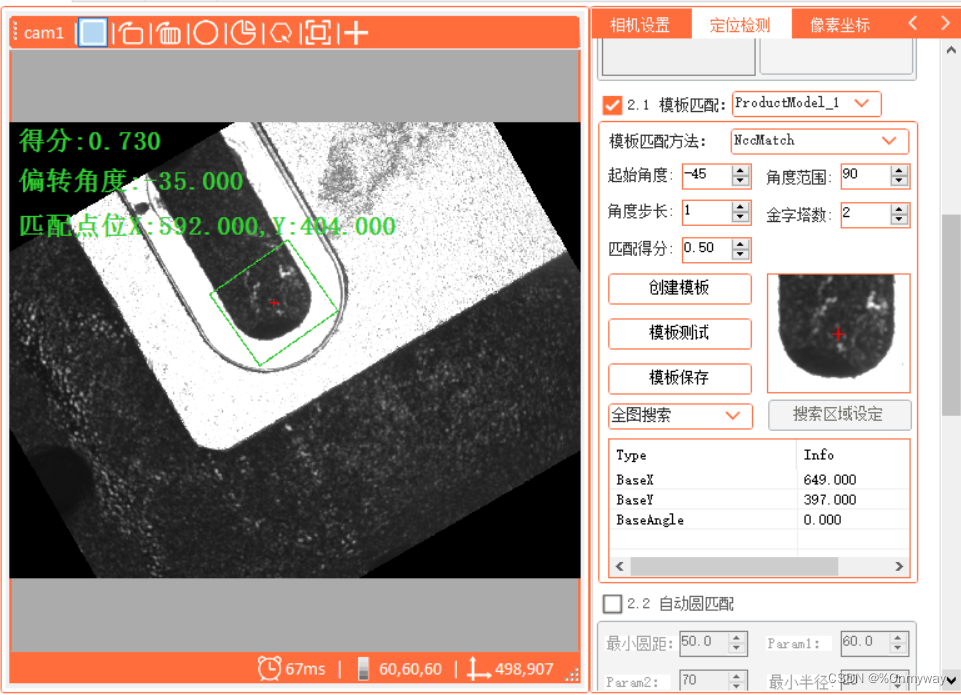

0 degrees:

25 degrees:

-35 degrees:

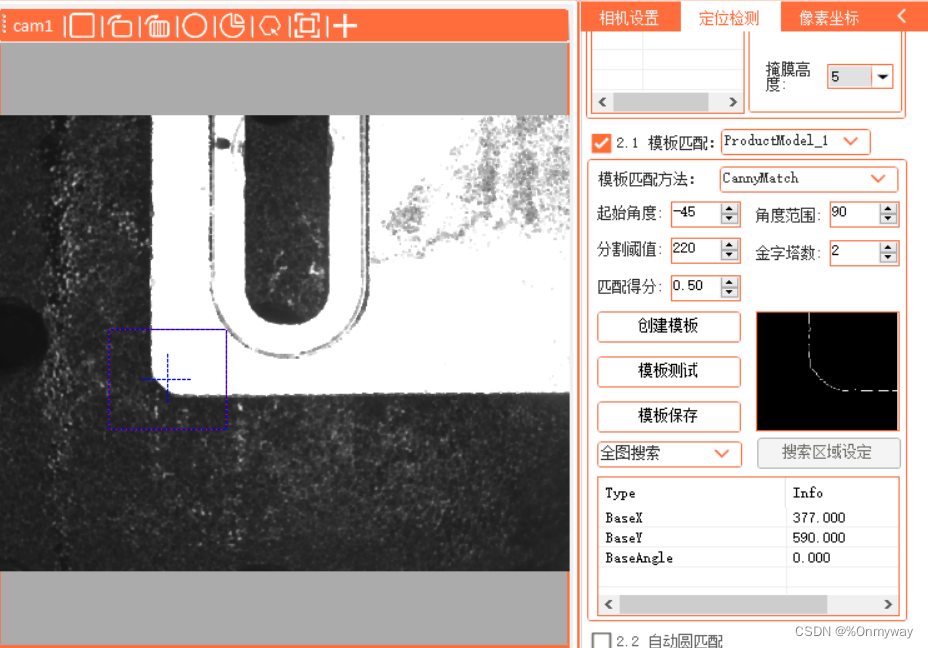

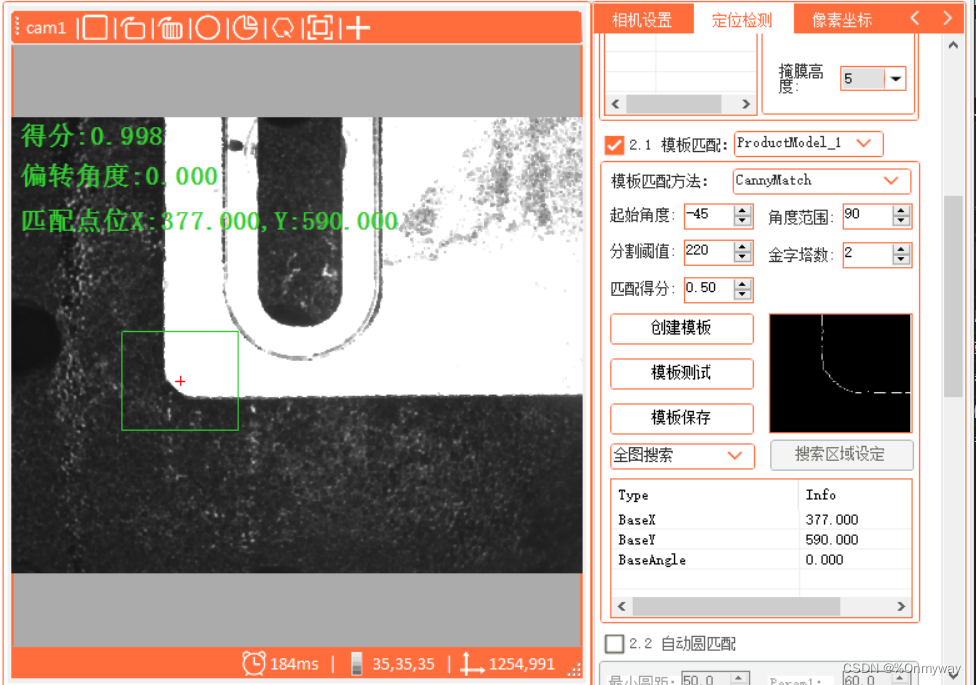

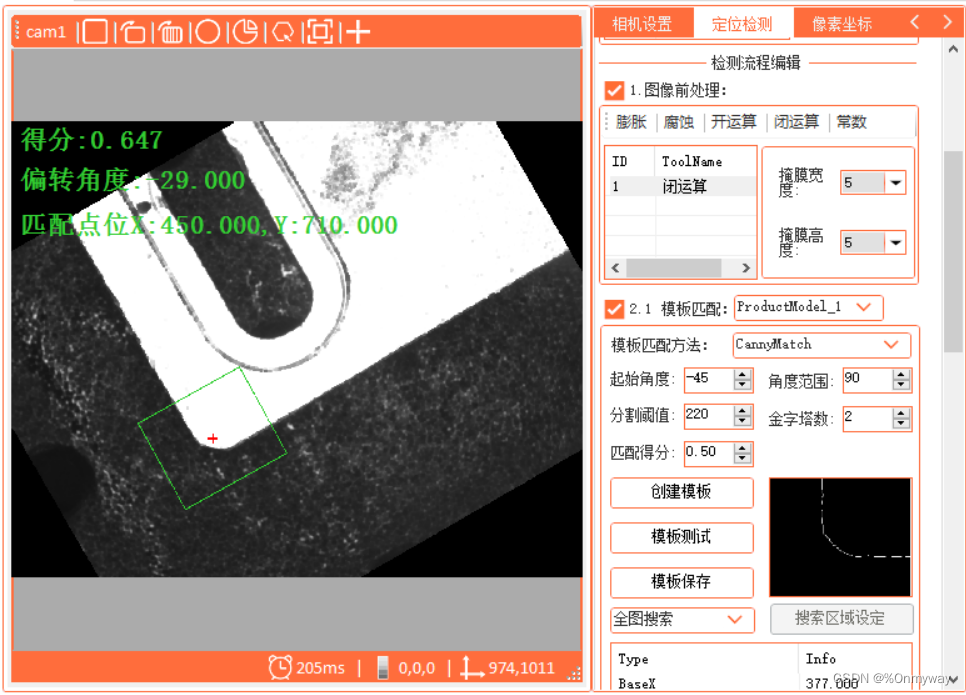

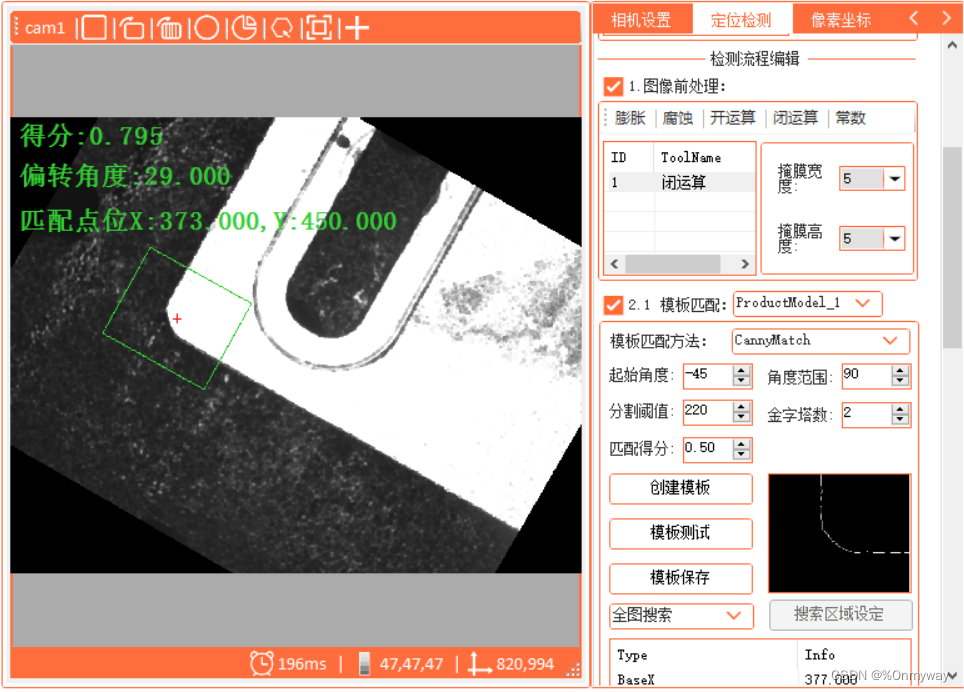

3). Building on the NCC approach and combining it with the idea behind shapematch gives another matching method: Canny template matching. Think of it as first creating a template from the Canny edge image, then matching with the MatchTemplate method, with the same pyramid search strategy added on top.
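One way to see why matching on Canny images works: Canny output is binary (0 or 255), and for binary images the CCorrNormed score R = Σ T·I / sqrt(Σ T² · Σ I²) reduces to the number of coinciding edge pixels divided by sqrt(template edge count × window edge count), because the factors of 255 cancel. The article does not say which mode the Canny variant uses, so CCorrNormed here is an assumption, and `EdgeMatch.EdgeOverlapScore` is a hypothetical helper for illustration.

```csharp
using System;

public static class EdgeMatch
{
    // CCorrNormed score between a binary edge template and the equally sized
    // window of a binary edge image at (ox, oy). Any nonzero pixel counts as an edge.
    public static double EdgeOverlapScore(byte[,] edges, byte[,] tpl, int ox, int oy)
    {
        int h = tpl.GetLength(0), w = tpl.GetLength(1);
        long both = 0, nT = 0, nI = 0;
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                bool t = tpl[y, x] != 0;
                bool i = edges[oy + y, ox + x] != 0;
                if (t) nT++;
                if (i) nI++;
                if (t && i) both++;
            }
        // With 0/255 pixels: sum(T*I) / sqrt(sum(T^2) * sum(I^2)) = both / sqrt(nT * nI).
        // An empty template or window is undefined; this sketch returns 0 for it.
        return nT > 0 && nI > 0 ? both / Math.Sqrt((double)nT * nI) : 0.0;
    }
}
```

Because only edge pixels contribute, uniform background and gradual lighting changes inside the template region have no effect on the score, which is what lets this variant focus on local shape features.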

Create a Canny template:

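```csharp
/// <summary>
/// Creates the Canny template image
/// </summary>
/// <param name="src">Source image (grayscale)</param>
/// <param name="RegionaRect">Template region in the source image</param>
/// <param name="thresh">Binarization threshold applied before edge detection</param>
/// <param name="temCannyMat">Receives the Canny edge template</param>
/// <param name="modelX">Receives the X coordinate of the template center in the source image</param>
/// <param name="modelY">Receives the Y coordinate of the template center in the source image</param>
/// <returns>The template region as a color image with its center marked</returns>
public Mat CreateTemplateCanny(Mat src, Rect RegionaRect, double thresh, ref Mat temCannyMat,
    ref double modelX, ref double modelY)
{
    // Crop the template region (MatExtension, Morphological_Proces and EdgeTool are the author's helper classes)
    Mat ModelMat = MatExtension.Crop_Mask_Mat(src, RegionaRect);
    // Morphological opening with a 3x3 rectangle to remove small noise
    Mat Morphological = Morphological_Proces.MorphologyEx(ModelMat, MorphShapes.Rect,
        new OpenCvSharp.Size(3, 3), MorphTypes.Open);
    // Binarize, then extract the edges
    Mat binMat = new Mat();
    Cv2.Threshold(Morphological, binMat, thresh, 255, ThresholdTypes.Binary);
    temCannyMat = EdgeTool.Canny(binMat, 50, 240);

    // Template center, in template coordinates and in source-image coordinates
    Mat dst = ModelMat.CvtColor(ColorConversionCodes.GRAY2BGR);
    CVPoint cenP = new CVPoint(RegionaRect.Width / 2, RegionaRect.Height / 2);
    modelX = RegionaRect.X + RegionaRect.Width / 2;
    modelY = RegionaRect.Y + RegionaRect.Height / 2;
    Console.WriteLine(string.Format("Template center: x:{0}, y:{1}", modelX, modelY));

    // Mark the center with a red cross
    dst.drawCross(cenP, Scalar.Red, 20, 2);
    return dst;
}
```

Template image: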

The test results are as follows:

4). Comparing the three methods above, each has its own strengths and weaknesses. Shape matching (shapematch) relies on the object's shape being intact; it is simple to use and needs neither an extra mask nor a stepped angle loop. NCC does not require the template to contain the whole object, only some contrast between foreground and background, but its overall matching performance is average and it may not give the desired result in complex scenes. Canny template matching combines some advantages of the other two: you can select local features of the object as the template while reusing NCC's matching method and search strategy. Choose the method that best fits your project to get the best results.

There are also academically stronger and more stable methods available online, such as template matching based on gradient changes, which you can try as well. With my limited ability, my own tests did not achieve the desired results, especially on complex and large images. When requirements are demanding, don't aim too high; just choose the method that suits you. I also hope that fellow enthusiasts will explore, find problems and offer corrections together, so that everyone can make progress.

PS: Knowledge knows no borders, sharing makes everyone happy!