Get the Chinese word vector and sentence vector through the bert Chinese pre-training model, the steps are as follows:

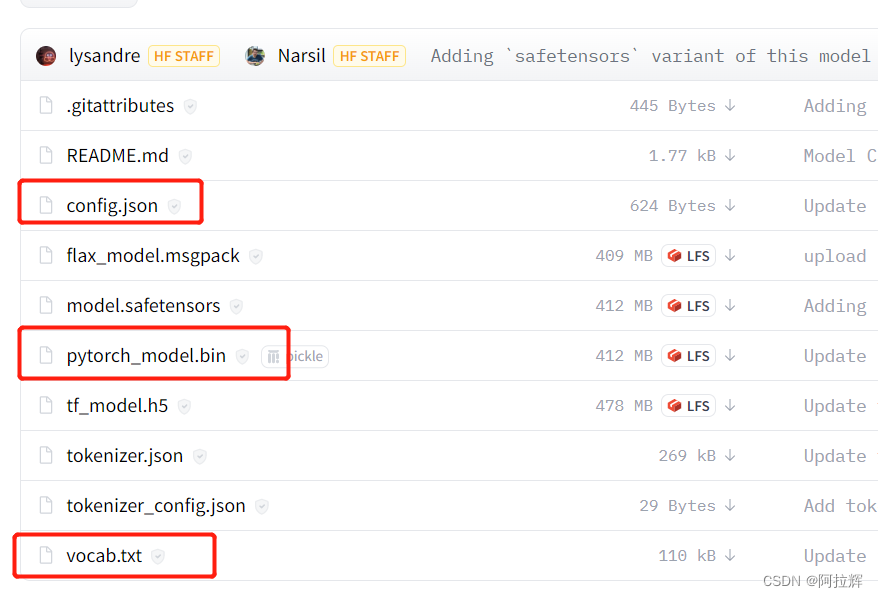

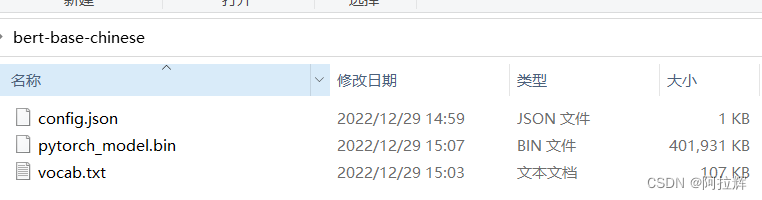

Download the bert-base-chiese model

Just download the following three files, and then put them in the folder named bert-base-chinese

to get the Chinese word vector code show as below

import torch

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-base-chinese') # 加载base模型的对应的切词器

model = BertModel.from_pretrained('bert-base-chinese')

print(tokenizer) # 打印出对应的信息,如base模型的字典大小,截断长度等等

token = tokenizer.tokenize("自然语言处理") # 切词

print(token) # 切词结果

indexes = tokenizer.convert_tokens_to_ids(token) # 将词转换为对应字典的id

print(indexes) # 输出id

tokens = tokenizer.convert_ids_to_tokens(indexes)# 将id转换为对应字典的词

print(tokens) # 输出词

# 使用这种方法对句子编码会自动添加[CLS] 和[SEP]

input_ids = torch.tensor(tokenizer.encode("自然语言处理")).unsqueeze(0)

print(input_ids)

outputs = model(input_ids)

# cls_id = tokenizer._convert_token_to_id('[CLS]')

# sep_id = tokenizer._convert_token_to_id('[SEP]')

# print(cls_id, sep_id)

sequence_output = outputs[0]

print(sequence_output)

print(sequence_output.shape) ## 字向量

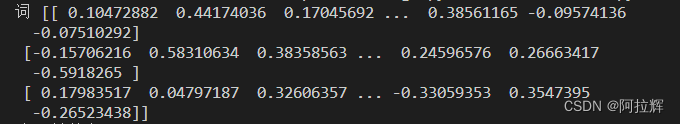

The output results are as follows

PreTrainedTokenizer(name_or_path='bert-base-chinese', vocab_size=21128, model_max_len=512, is_fast=False, padding_side='right', truncation_side='right', special_tokens={

'unk_token': '[UNK]', 'sep_token': '[SEP]', 'pad_token': '[PAD]', 'cls_token': '[CLS]', 'mask_token': '[MASK]'})

['自', '然', '语', '言', '处', '理']

[5632, 4197, 6427, 6241, 1905, 4415]

['自', '然', '语', '言', '处', '理']

tensor([[ 101, 5632, 4197, 6427, 6241, 1905, 4415, 102]])

tensor([[[-0.5707, 0.1999, -0.0637, ..., -0.0916, -0.3997, 0.1751],

[ 0.1549, 0.2454, 0.8372, ..., -0.7411, -0.8433, 0.5498],

[ 0.1983, -0.5007, -0.6416, ..., 0.0322, -0.2561, 0.0599],

...,

[ 0.1960, 0.4055, 1.6229, ..., 0.1070, -0.2448, 0.1766],

[ 0.0846, 0.9084, 0.5164, ..., 0.0235, 0.6487, -0.0858],

[-0.5326, -0.0390, 1.9163, ..., 0.1597, -0.2909, 0.6810]]],

grad_fn=<NativeLayerNormBackward0>)

torch.Size([1, 8, 768])

Of course, word vectors can also be obtained through bert-as-service. There are many on the Internet. The steps are as follows:

-

Install Dr. Xiao Han's bert-as-service:

pip install bert-serving-server

pip install bert-serving-client -

Download the trained Bert Chinese word vector:

https://storage.proxy.ustclug.org/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip -

Start bert-as-service:

Find the folder where bert-serving-start.exe is located (I installed it directly with anaconda prompt, bert-serving-start.exe is in the F:\anaconda\Scripts directory.) Find the training The word vector model and unzip it, the path is as follows: G:\python\bert_chinese\chinese_L-12_H-768_A-12

Open the cmd window, enter the file directory where bert-serving-start.exe is located, and then enter:

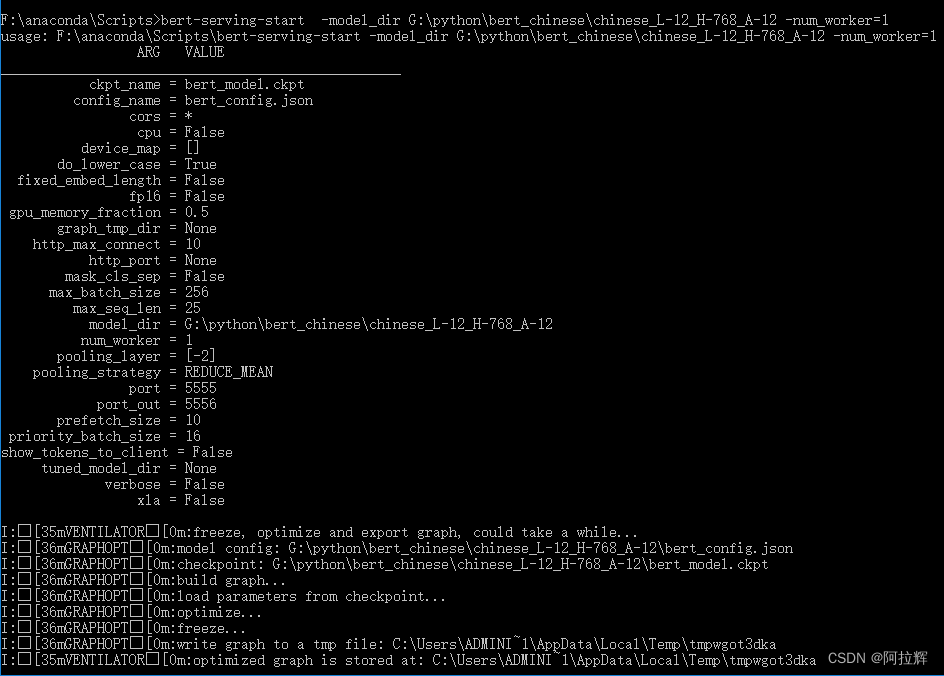

bert-serving-start -model_dir G:\python\bert_chinese\chinese_L-12_H-768_A-12 -num_worker=1

#后台启动服务(nohup .... &)

nohup bert-serving-start -model_dir G:\python\bert_chinese\chinese_L-12_H-768_A-12 -num_worker=1 &

You can start bert-as-service (num_worker seems to be the number of processes of the BERT service, for example num_worker = 2, which means that it can handle concurrent requests from up to 2 clients.) After starting, the result is as follows: Get Bert pre-

trained

Chinese word vector:

from bert_serving.client import BertClient

bc = BertClient()

print(bc.encode([“NONE”,“没有”,“偷东西”]))#获取词的向量表示

print(bc.encode([“none没有偷东西”]))#获取分词前的句子的向量表示

print(bc.encode([“none 没有 偷 东西”]))#获取分词后的句子向量表示

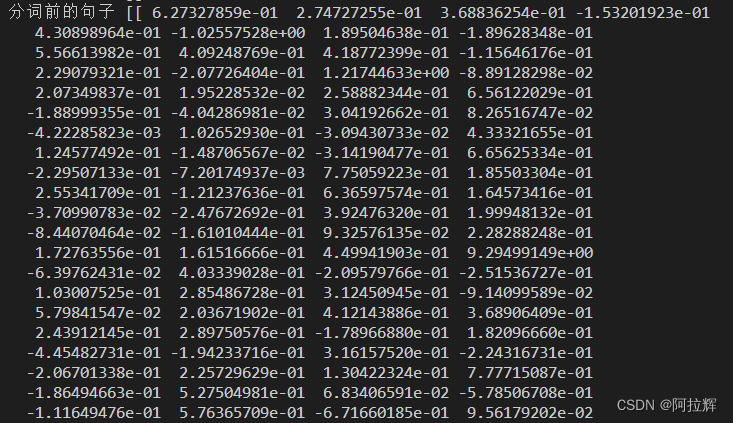

The result is as follows: each vector is 768 dimensions.