0. Introduction

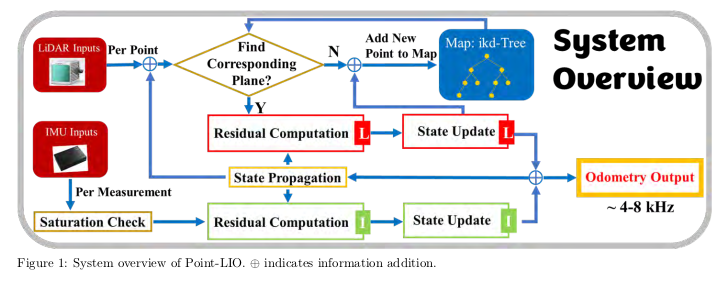

For the recently released Point-LIO (robust high-bandwidth laser inertial odometer), I am still very interested. For this reason, I spent some time analyzing the code of Point-LIO, and studied its comparison with Fast-LIO2. the difference

1. laserMapping.cpp

The first part is to realize the image segmentation of the lidar field of view. First, a local map (LocalMap_Points) of type BoxPointType and a variable of type bool (Localmap_Initialized) are defined, indicating whether the local map has been initialized. Then, in the lasermap_fov_segment() function, the position of the lidar (pos_LiD) is calculated according to the attitude of the lidar, and whether the local map needs to be moved is judged according to the movement threshold (MOV_THRESHOLD). If movement is required, a new local map boundary (New_LocalMap_Points) is calculated and the boxes that need to be removed are added to cub_needrm. Finally, delete the point cloud according to the box in cub_needrm to complete the image segmentation.

BoxPointType LocalMap_Points;

bool Localmap_Initialized = false;

void lasermap_fov_segment() //针对激光雷达视场角来完成图像分割

{

cub_needrm.shrink_to_fit(); //将容量设置为容器的长度

V3D pos_LiD;

if (use_imu_as_input) {

pos_LiD =

kf_input.x_.pos + kf_input.x_.rot.normalized() *

Lidar_T_wrt_IMU; //计算激光雷达在当前位姿下的位置

} else {

pos_LiD =

kf_output.x_.pos + kf_output.x_.rot.normalized() * Lidar_T_wrt_IMU;

}

if (!Localmap_Initialized) {

//判断是否需要初始化局部地图

//将局部地图的边界设置为当前位置的正方形区域,并将Localmap_Initialized设置为true

for (int i = 0; i < 3; i++) {

LocalMap_Points.vertex_min[i] = pos_LiD(i) - cube_len / 2.0;

LocalMap_Points.vertex_max[i] = pos_LiD(i) + cube_len / 2.0;

}

Localmap_Initialized = true;

return;

}

float dist_to_map_edge[3][2];

bool need_move = false;

//如果不需要初始化,则计算激光雷达当前位姿与局部地图边界的距离,并根据移动阈值判断是否需要移动局部地图

for (int i = 0; i < 3; i++) {

dist_to_map_edge[i][0] = fabs(pos_LiD(i) - LocalMap_Points.vertex_min[i]);

dist_to_map_edge[i][1] = fabs(pos_LiD(i) - LocalMap_Points.vertex_max[i]);

if (dist_to_map_edge[i][0] <= MOV_THRESHOLD * DET_RANGE ||

dist_to_map_edge[i][1] <= MOV_THRESHOLD * DET_RANGE)

need_move = true;

}

//如果需要移动,则计算新的局部地图边界

if (!need_move)

return;

BoxPointType New_LocalMap_Points, tmp_boxpoints;

New_LocalMap_Points = LocalMap_Points;

float mov_dist = max((cube_len - 2.0 * MOV_THRESHOLD * DET_RANGE) * 0.5 * 0.9,

double(DET_RANGE * (MOV_THRESHOLD - 1)));

for (int i = 0; i < 3; i++) {

tmp_boxpoints = LocalMap_Points;

if (dist_to_map_edge[i][0] <= MOV_THRESHOLD * DET_RANGE) {

New_LocalMap_Points.vertex_max[i] -= mov_dist;

New_LocalMap_Points.vertex_min[i] -= mov_dist;

tmp_boxpoints.vertex_min[i] = LocalMap_Points.vertex_max[i] - mov_dist;

cub_needrm.emplace_back(tmp_boxpoints); //将需要移除的框添加到cub_needrm中

} else if (dist_to_map_edge[i][1] <= MOV_THRESHOLD * DET_RANGE) {

New_LocalMap_Points.vertex_max[i] += mov_dist;

New_LocalMap_Points.vertex_min[i] += mov_dist;

tmp_boxpoints.vertex_max[i] = LocalMap_Points.vertex_min[i] + mov_dist;

cub_needrm.emplace_back(tmp_boxpoints);

}

}

LocalMap_Points = New_LocalMap_Points;

points_cache_collect();

if (cub_needrm.size() > 0)

int kdtree_delete_counter = ikdtree.Delete_Point_Boxes(

cub_needrm); //收集点云缓存,并根据cub_needrm中的框删除点云,返回删除的框数量。

}

Let's take a look at how to use this function. The following code mainly implements the following operations:

1. Perform spatial downsampling and time compression on the point cloud collected by the lidar;

2. Initialize the map kd-tree;

3. Update the map using iterative closest point algorithm (ICP) and Kalman filter. Among them, ICP is mainly used for point cloud registration, and Kalman filter is used to estimate and update the robot pose.

lasermap_fov_segment();

/*** downsample the feature points in a scan ***/

t1 = omp_get_wtime();

if (space_down_sample) {

//空间下采样

downSizeFilterSurf.setInputCloud(feats_undistort);

downSizeFilterSurf.filter(*feats_down_body);

sort(feats_down_body->points.begin(), feats_down_body->points.end(),

time_list); //按时间排序

} else {

feats_down_body = Measures.lidar;

sort(feats_down_body->points.begin(), feats_down_body->points.end(),

time_list);

}

time_seq = time_compressing<int>(feats_down_body); //时间压缩

feats_down_size = feats_down_body->points.size(); //点云数量

/*** initialize the map kdtree ***/

if (!init_map) {

if (ikdtree.Root_Node == nullptr) //

// if(feats_down_size > 5)

{

ikdtree.set_downsample_param(filter_size_map_min); //设置滤波参数

}

feats_down_world->resize(feats_down_size); //初始化点云

for (int i = 0; i < feats_down_size; i++) {

pointBodyToWorld(&(feats_down_body->points[i]),

&(feats_down_world->points[i])); //转换到世界坐标系

}

for (size_t i = 0; i < feats_down_world->size(); i++) {

init_feats_world->points.emplace_back(

feats_down_world

->points[i]); //将转换到世界坐标西的点云加入到init_feats_world

}

if (init_feats_world->size() < init_map_size) //等待构建地图

continue;

ikdtree.Build(init_feats_world->points); //构建地图

init_map = true;

publish_init_kdtree(pubLaserCloudMap); //(pubLaserCloudFullRes);

continue;

}

/*** ICP and Kalman filter update ***/

normvec->resize(feats_down_size);

feats_down_world->resize(feats_down_size);

Nearest_Points.resize(feats_down_size);

t2 = omp_get_wtime(); //初始化t2为当前时间

/*** iterated state estimation ***/

crossmat_list.reserve(feats_down_size);

pbody_list.reserve(feats_down_size);

// pbody_ext_list.reserve(feats_down_size);

//对于每个点,将其坐标转换为V3D类型的point_this

for (size_t i = 0; i < feats_down_body->size(); i++) {

V3D point_this(feats_down_body->points[i].x,

feats_down_body->points[i].y,

feats_down_body->points[i].z);

pbody_list[i] = point_this;

//如果使用外参估计

if (extrinsic_est_en) {

if (!use_imu_as_input) {

//对于每个点,使用卡尔曼滤波估计出的外参对其进行坐标变换

point_this = kf_output.x_.offset_R_L_I.normalized() * point_this +

kf_output.x_.offset_T_L_I;

} else {

point_this = kf_input.x_.offset_R_L_I.normalized() * point_this +

kf_input.x_.offset_T_L_I;

}

} else {

// 使用Lidar_R_wrt_IMU和Lidar_T_wrt_IMU对其进行变换

point_this = Lidar_R_wrt_IMU * point_this + Lidar_T_wrt_IMU;

}

M3D point_crossmat;

point_crossmat << SKEW_SYM_MATRX(point_this); //根据当前点生成矩阵

crossmat_list[i] = point_crossmat;

}

if (!use_imu_as_input) {

bool imu_upda_cov = false; //是否需要更新imu的协方差

effct_feat_num = 0;

/**** point by point update ****/

double pcl_beg_time =

Measures

.lidar_beg_time; //首先设置pcl_beg_time为Measures.lidar_beg_time,idx为-1

idx = -1;

for (k = 0; k < time_seq.size(); k++) {

PointType &point_body = feats_down_body->points[idx + time_seq[k]];

time_current =

point_body.curvature / 1000.0 +

pcl_beg_time; //找到对应的点并计算出当前时间time_current

if (is_first_frame) {

if (imu_en) {

//如果是第一帧,且启用了IMU,那么需要找到最近的IMU数据

while (time_current > imu_next.header.stamp.toSec()) {

imu_last = imu_next;

imu_next = *(imu_deque.front());

imu_deque.pop_front();

// imu_deque.pop();

}

//计算出对应的角速度和加速度

angvel_avr << imu_last.angular_velocity.x,

imu_last.angular_velocity.y, imu_last.angular_velocity.z;

acc_avr << imu_last.linear_acceleration.x,

imu_last.linear_acceleration.y,

imu_last.linear_acceleration.z;

}

is_first_frame = false;

imu_upda_cov = true;

time_update_last = time_current;

time_predict_last_const = time_current;

}

if (imu_en) {

bool imu_comes = time_current > imu_next.header.stamp.toSec();

// 如果启用了IMU,那么需要在当前时间之前的IMU数据都进行卡尔曼滤波更新

while (imu_comes) {

imu_upda_cov = true;

//将IMU数据中的角速度和线性加速度分别存储到angvel_avr和acc_avr中

angvel_avr << imu_next.angular_velocity.x,

imu_next.angular_velocity.y, imu_next.angular_velocity.z;

acc_avr << imu_next.linear_acceleration.x,

imu_next.linear_acceleration.y,

imu_next.linear_acceleration.z;

/*** 对协方差进行更新 ***/

imu_last = imu_next; //将当前IMU数据存储为imu_last

imu_next = *(imu_deque.front()); //将下一个IMU数据存储为imu_next

imu_deque.pop_front();

double dt = imu_last.header.stamp.toSec() -

time_predict_last_const; //接着计算时间差dt

kf_output.predict(dt, Q_output, input_in, true,

false); //通过kf_output.predict函数进行预测

time_predict_last_const =

imu_last.header.stamp.toSec(); // big problem

imu_comes = time_current > imu_next.header.stamp.toSec();

// if (!imu_comes)

{

double dt_cov = imu_last.header.stamp.toSec() -

time_update_last; //就计算时间差dt_cov

if (dt_cov > 0.0) {

time_update_last = imu_last.header.stamp.toSec();

double propag_imu_start = omp_get_wtime();

kf_output.predict(dt_cov, Q_output, input_in, false,

true); //行卡尔曼滤波预测

propag_time += omp_get_wtime() - propag_imu_start;

double solve_imu_start = omp_get_wtime();

kf_output.update_iterated_dyn_share_IMU(); //进行eskf迭代更新

solve_time += omp_get_wtime() - solve_imu_start;

}

}

}

}

double dt = time_current - time_predict_last_const;

double propag_state_start = omp_get_wtime();

if (!prop_at_freq_of_imu) {

double dt_cov = time_current - time_update_last;

if (dt_cov > 0.0) {

kf_output.predict(dt_cov, Q_output, input_in, false, true);

time_update_last = time_current;

}

}

kf_output.predict(dt, Q_output, input_in, true, false);

propag_time += omp_get_wtime() - propag_state_start;

time_predict_last_const = time_current;

// if(k == 0)

// {

// fout_imu_pbp << Measures.lidar_last_time - first_lidar_time <<

// " " << imu_last.angular_velocity.x << " " <<

// imu_last.angular_velocity.y << " " <<

// imu_last.angular_velocity.z \

// << " " << imu_last.linear_acceleration.x << " " <<

// imu_last.linear_acceleration.y << " " <<

// imu_last.linear_acceleration.z << endl;

// }

double t_update_start = omp_get_wtime();

if (feats_down_size < 1) {

ROS_WARN("No point, skip this scan!\n");

idx += time_seq[k];

continue;

}

if (!kf_output.update_iterated_dyn_share_modified()) {

idx = idx + time_seq[k];

continue;

}

if (prop_at_freq_of_imu) {

double dt_cov = time_current - time_update_last;

if (!imu_en &&

(dt_cov >=

imu_time_inte)) // //需要在当前时间之前的时间间隔大于imu_time_inte时进行卡尔曼滤波协方差更新

{

double propag_cov_start = omp_get_wtime();

kf_output.predict(

dt_cov, Q_output, input_in, false,

true); //对于每个时间片中的点,进行卡尔曼滤波更新,并将点转换到世界坐标系中

imu_upda_cov = false;

time_update_last = time_current;

propag_time += omp_get_wtime() - propag_cov_start;

}

}

solve_start = omp_get_wtime();

2.Estimator.cpp

Other than that, the other contents are almost the same. What is more distinctive is that the operation part of single-point laser optimization is added to Estimator.cpp.

esekfom::esekf<state_input, 24, input_ikfom>

kf_input; // EKF的输入,针对IMU优化

esekfom::esekf<state_output, 30, input_ikfom>

kf_output; // EKF的输出,针对单点激光雷达

The code defines a function process_noise_cov_output(), which is used to generate a 30x30 matrix cov, which represents the noise covariance matrix of the system. and get the input f matrix values

Eigen::Matrix<double, 30, 30> process_noise_cov_output() {

Eigen::Matrix<double, 30, 30> cov;

cov.setZero(); //创建一个30x30的矩阵cov,并将其所有元素初始化为0

cov.block<3, 3>(12, 12).diagonal() << vel_cov, vel_cov,

vel_cov; //从(12,12)开始的一个3x3的块,表示速度的协方差

cov.block<3, 3>(15, 15).diagonal() << gyr_cov_output, gyr_cov_output,

gyr_cov_output; //表示角速度的噪声协方差

cov.block<3, 3>(18, 18).diagonal() << acc_cov_output, acc_cov_output,

acc_cov_output; //表示加速度计的噪声协方差

cov.block<3, 3>(24, 24).diagonal() << b_gyr_cov, b_gyr_cov,

b_gyr_cov; // bg的噪声协方差

cov.block<3, 3>(27, 27).diagonal() << b_acc_cov, b_acc_cov,

b_acc_cov; // ba的噪声协方差

// MTK::get_cov<process_noise_output>::type cov =

// MTK::get_cov<process_noise_output>::type::Zero();

// MTK::setDiagonal<process_noise_output, vect3, 0>(cov,

// &process_noise_output::vel, vel_cov);// 0.03

// MTK::setDiagonal<process_noise_output, vect3, 3>(cov,

// &process_noise_output::ng, gyr_cov_output); // *dt 0.01 0.01 * dt * dt 0.05

// MTK::setDiagonal<process_noise_output, vect3, 6>(cov,

// &process_noise_output::na, acc_cov_output); // *dt 0.00001 0.00001 * dt *dt

// 0.3 //0.001 0.0001 0.01 MTK::setDiagonal<process_noise_output, vect3,

// 9>(cov, &process_noise_output::nbg, b_gyr_cov); //0.001 0.05 0.0001/out

// 0.01 MTK::setDiagonal<process_noise_output, vect3, 12>(cov,

// &process_noise_output::nba, b_acc_cov); //0.001 0.05 0.0001/out 0.01

return cov;

}

//这段代码的作用是将输入的IMU数据转化为状态转移方程中的输入向量f。其中状态向量s包含了位置、速度、姿态、陀螺仪bias和加速度计bias等信息,in包含了IMU测量得到的角速度和线性加速度数据。

Eigen::Matrix<double, 24, 1> get_f_input(state_input &s,

const input_ikfom &in) {

Eigen::Matrix<double, 24, 1> res = Eigen::Matrix<double, 24, 1>::Zero();

vect3 omega;

in.gyro.boxminus(

omega,

s.bg); //通过in.gyro.boxminus函数将in.gyro和s.bg进行运算,得到角速度向量omega

vect3 a_inertial =

s.rot.normalized() *

(in.acc -

s.ba); //计算惯性系下的加速度向量a_inertial,首先通过s.rot.normalized()将s.rot单位化,再通过乘法得到加速度向量,最后减去s.ba得到相对于惯性系的加速度。

for (int i = 0; i < 3; i++) {

res(i) = s.vel[i];

res(i + 3) = omega[i];

res(i + 12) = a_inertial[i] + s.gravity[i];

}

return res;

}

//这段代码的作用是将输入的单点激光数据转化为状态转移方程中的输入向量f。其中状态向量s包含了位置、速度、姿态、陀螺仪bias和加速度计bias等信息,in包含了IMU测量得到的角速度和线性加速度数据,没用到

Eigen::Matrix<double, 30, 1> get_f_output(state_output &s,

const input_ikfom &in) {

Eigen::Matrix<double, 30, 1> res = Eigen::Matrix<double, 30, 1>::Zero();

vect3 a_inertial =

s.rot.normalized() *

s.acc; //计算惯性系下的加速度向量a_inertial,首先通过s.rot.normalized()将s.rot单位化,再通过乘法得到加速度向量。

for (int i = 0; i < 3; i++) {

res(i) = s.vel[i];

res(i + 3) = s.omg[i];

res(i + 12) = a_inertial[i] + s.gravity[i];

}

return res;

}

This part corresponds to parts 4.2 and 5.2 of the original text. This function is used to calculate the observation value and Jacobian matrix of all feature points that meet the matching conditions at the current moment, and save them to ekfom_data. The main process is as follows:

- Traverse all feature points at the current moment, and calculate the normal vector and corresponding observation value of each feature point in turn.

- For each feature point, first convert it from the machine system to the world system, and calculate its position under the world system and its position under the machine system.

- Use the ikdtree.Nearest_Search function to search the NUM_MATCH_POINTS points closest to the current feature point in the map point cloud, and calculate the plane equation pabcd between them.

- If the number of searched points is less than NUM_MATCH_POINTS or the farthest point distance is greater than 5, it is considered that the feature point is not in the map, and point_selected_surf[idx+j+1] is marked as false.

- If the number of searched points is greater than or equal to NUM_MATCH_POINTS and the distance of the farthest point is less than or equal to 5, the feature point is considered to be in the map, and the distance pd2 between it and the map plane is calculated.

- Determine whether it meets the matching condition (ie p_body.norm() > match_s * pd2 * pd2), if so, mark point_selected_surf[idx+j+1] as true, and normal vector normvec->points of the feature point [j] Save to normvec.

- If there is no feature point that meets the matching conditions at the current moment, set ekfom_data.valid to false and return directly.

- Otherwise, for each feature point satisfying the matching condition, calculate its corresponding observation value z and Jacobian matrix H.

- Save the observed values z and Jacobian matrix H of all feature points that meet the matching conditions into ekfom_data.

- Finally, add effect_num_k to effct_feat_num, indicating the number of feature points that meet the matching conditions at the current moment.