Realize Chinese word segmentation based on conditional random field model

In Chinese word segmentation, methods based on character tagging are widely used: the segmentation problem is converted into a sequence labeling problem in which every character receives a position tag. Tagging models based on conditional random fields (CRFs) are commonly used and currently give good segmentation results. The idea of the model is as follows. A conditional random field corresponds to an undirected graph G = (V, E), where the elements of Y = {Y_v | v ∈ V} correspond one-to-one to the vertices of the graph. If, conditioned on X, the conditional probability distribution of each random variable Y_v obeys the Markov property with respect to the graph, then (X, Y) is called a conditional random field. Given the observation sequence to be labeled, the model computes the joint probability of the entire label sequence; solving for the most probable tag sequence over the characters of a sentence yields the segmentation.
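To make the "segmentation as sequence labeling" idea concrete, the sketch below converts an already segmented sentence into one tag per character, using the B/M/E/S states that the rest of this article relies on. The function name `words_to_tags` and the example segmentation are illustrative assumptions, not part of the original project.

```python
def words_to_tags(words):
    """Convert a list of segmented words into (character, tag) pairs."""
    pairs = []
    for word in words:
        if len(word) == 1:
            pairs.append((word, "S"))      # single-character word
            continue
        pairs.append((word[0], "B"))       # beginning of a word
        for ch in word[1:-1]:
            pairs.append((ch, "M"))        # middle of a word
        pairs.append((word[-1], "E"))      # end of a word
    return pairs


# Assumed segmentation of the example sentence, for illustration only.
example = words_to_tags(["希腊", "的", "经济", "结构", "较", "特殊"])
print(example)
```

Segmenting a sentence then amounts to predicting the tag column and cutting after every E or S.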

Improvement of Chinese word segmentation based on conditional random field model: https://blog.csdn.net/admiz/article/details/109882968

Character feature learning

1. Read the corpus

Use Python's open() function to open the corpus file "msr_training.utf8.ic" with the encoding set to 'utf-8-sig', then use readlines() to read every line of the corpus as an element of the list wordFeed. The length of the corpus, wordFeedLen, is obtained with len(). Each element of wordFeed has the form A|B, where "A" is a character and "B" is the state (tag) of that character; the code in this article reads the character from index 0 and the tag from index 2 of each line. Data download link: https://download.csdn.net/download/admiz/13132232
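The reading step can be sketched as follows. This is a minimal illustration, not the project's exact code: the helper name `load_corpus` is hypothetical, and the tiny stand-in file (with "|" as the single separator character, following the A|B notation above) is invented so that the snippet runs without the real corpus.

```python
import os
import tempfile


def load_corpus(path, encoding="utf-8-sig"):
    """Read the corpus into a list with one 'character, separator, tag' line per element."""
    with open(path, "r", encoding=encoding) as corpus_file:
        return corpus_file.readlines()


# Stand-in data (hypothetical content, not the MSR corpus).
sample = "希|B\n腊|E\n的|S\n"
with tempfile.NamedTemporaryFile("w", suffix=".ic", encoding="utf-8-sig",
                                 delete=False) as tmp:
    tmp.write(sample)
    sample_path = tmp.name

wordFeed = load_corpus(sample_path)
wordFeedLen = len(wordFeed)
os.remove(sample_path)

print(wordFeedLen)
print(wordFeed[0][0], wordFeed[0][2])  # character at index 0, tag at index 2
```

Opening the file with 'utf-8-sig' strips a leading byte order mark if one is present, so the first character of the corpus is not silently prefixed with an invisible marker.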

2. Find feature 2 (R)

To obtain the second feature for a given character, first count the total number of times it appears in the corpus, then compute the probability that it appears in each state: beginning of a word (B), middle of a word (M), end of a word (E), and single-character word (S).

Before the statistics are collected for a character, the data structure that stores the result is set up as a dictionary of the form { A: [B, M, E, S] }, where "A" is the character, "B" is the probability that the character is at the beginning of a word, "M" is the probability that it is in the middle of a word, "E" is the probability that it is at the end of a word, and "S" is the probability that it forms a word by itself.
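As a compact illustration of this dictionary, the sketch below computes the state distribution of every character in one pass over the corpus lines. It is an alternative formulation under stated assumptions, not the article's per-character scan: the names `TAGS`, `tag_distribution` and `sample_feed` are hypothetical, and the sample lines are invented.

```python
from collections import Counter, defaultdict

TAGS = "BMES"


def tag_distribution(word_feed):
    """Return {character: [P(B), P(M), P(E), P(S)]} from 'X|T' style lines."""
    counts = defaultdict(Counter)
    for line in word_feed:
        if len(line) < 3:          # skip blank or malformed lines
            continue
        ch, tag = line[0], line[2]
        if tag in TAGS:
            counts[ch][tag] += 1
    result = {}
    for ch, tag_counts in counts.items():
        total = sum(tag_counts.values())   # always > 0 for stored characters
        result[ch] = [tag_counts[t] / total for t in TAGS]
    return result


sample_feed = ["的|S\n", "的|S\n", "的|E\n", "经|B\n"]
print(tag_distribution(sample_feed)["的"])
```

Counting everything once avoids rescanning the whole corpus for each character of the input sentence, at the cost of holding counts for every character in memory.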

The pseudo code of this step is as follows:

    # i is the character whose feature 2 is required
    BDict = {}                     # initialize BDict as an empty dictionary
    B = M = E = S = BMESsum = 0    # initialize the counters to 0
    for j in range(wordFeedLen):
        if wordFeed[j][0] == i:
            if wordFeed[j][2] == 'B':
                B = B+1
            elif wordFeed[j][2] == 'M':
                M = M+1
            elif wordFeed[j][2] == 'E':
                E = E+1
            elif wordFeed[j][2] == 'S':
                S = S+1
    BMESsum = B+M+E+S
    if BMESsum > 0:                # avoid dividing by zero for characters not in the corpus
        BDict[i] = [B/BMESsum, M/BMESsum, E/BMESsum, S/BMESsum]
    else:
        BDict[i] = [0, 0, 0, 0]

3. Find feature 3 (P)

The third feature is, for a given character, the probability of moving to each next state B, M, E, S when the character is in the current state B, M, E, or S. For every occurrence of the character in the corpus, its own state and the state of the character that follows it are counted. With 4 current states and 4 next states, the result is a 4x4 matrix whose entries are the transition probabilities.

Before the statistics are collected for a character, the data structure that stores the result is again set up as a dictionary, with the following structure:

{ A: [[0,0,0,0], [0,0,0,0], [0,0,0,0], [0,0,0,0]] }, where "A" is the target character. The first inner list means: when "A" is in state B, its four elements are, in order, the probabilities that the next character is in state B, M, E, S. The second, third, and fourth inner lists do the same for "A" in state M, E, and S.
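The 4x4 structure described above can be illustrated with a small self-contained sketch. The names `transition_matrix` and `feed` are hypothetical, the "|" separator is an assumption carried over from the A|B notation, and the sample lines are invented.

```python
TAGS = "BMES"


def transition_matrix(word_feed, ch):
    """Return the 4x4 matrix for ch: row = state of ch, column = state of the next line."""
    counts = [[0] * 4 for _ in TAGS]
    for cur, nxt in zip(word_feed, word_feed[1:]):
        if len(cur) < 3 or len(nxt) < 3:   # skip blank or malformed lines
            continue
        if cur[0] == ch and cur[2] in TAGS and nxt[2] in TAGS:
            counts[TAGS.index(cur[2])][TAGS.index(nxt[2])] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A row with no observations stays all zeros, as in the article's code.
        matrix.append([n / total for n in row] if total else [0, 0, 0, 0])
    return matrix


feed = ["经|B\n", "济|E\n", "的|S\n", "经|B\n", "济|E\n"]
print(transition_matrix(feed, "经"))
```

In this sample "经" only occurs in state B and is always followed by a character in state E, so only the first row carries probability mass.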

Part of the code for this step is as follows:

(1) Create and initialize a 4x4 matrix (dictionary) for each character

    def setDict2(testWord):  # testWord is the list of characters in the sentence
        testDict = {}
        for i in testWord:
            # 4x4 matrix, rows and columns ordered [B,M,E,S]
            testDict[i] = [[0,0,0,0],[0,0,0,0],[0,0,0,0],[0,0,0,0]]
        return testDict

(2) Counting function for feature 3

    def charCJudge(i, mark1):  # i is the character to examine, mark1 is its state
        B = 0
        M = 0
        E = 0
        S = 0
        BMESsum = 0
        for j in range(wordFeedLen):
            if i == wordFeed[j][0] and wordFeed[j][2] == mark1 and j+1 < len(wordFeed):
                # count the state of the character that follows
                if wordFeed[j+1][2] == 'B':
                    B = B+1
                elif wordFeed[j+1][2] == 'M':
                    M = M+1
                elif wordFeed[j+1][2] == 'E':
                    E = E+1
                elif wordFeed[j+1][2] == 'S':
                    S = S+1
        BMESsum = B+M+E+S
        if BMESsum > 0:
            return [B/BMESsum, M/BMESsum, E/BMESsum, S/BMESsum]
        return [0, 0, 0, 0]

(3) Calculate the transition frequency matrix values

    # testWord is the list of characters of the sentence to be segmented
    testDict = setDict2(testWord)
    for i in testWord:
        j = 0
        for mark1 in ['B','M','E','S']:
            testDict[i][j] = charCJudge(i, mark1)
            j = j+1

4. Obtain feature 4 (W)

The fourth feature records the context of a character that needs to be segmented: for each of its states B, M, E, and S, it records which characters appear immediately before and after it and computes the probability of the two characters appearing together, that is, the relationship between the character and its previous and next characters.

Before the statistics are collected for a character, the data structure that stores the result is set up as a dictionary, with the following structure:

{ A: {'B': {C: [B0, B1]}, 'M': {}, 'E': {}, 'S': {}} }, where "A" is the target character and 'B' is its state. The dictionary under 'B' means: when "A" is in state B, the probabilities that the character "C" appears immediately before and immediately after "A" are B0 and B1, respectively. The entries under 'M', 'E', and 'S' are read the same way.
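The inner dictionary for one character and one state can be sketched as below. This is an illustration under assumptions rather than the project's function: `context_probabilities` and `feed` are hypothetical names, the sample lines are invented, and every element of the input is assumed to be a non-empty line (which holds for the output of readlines()).

```python
def context_probabilities(word_feed, ch, state):
    """Return {neighbour: [P(neighbour before ch), P(neighbour after ch)]}."""
    before, after = {}, {}
    # Start at 1 and stop one short of the end so both neighbours exist.
    for j in range(1, len(word_feed) - 1):
        line = word_feed[j]
        if len(line) >= 3 and line[0] == ch and line[2] == state:
            prev_ch = word_feed[j - 1][0]
            next_ch = word_feed[j + 1][0]
            before[prev_ch] = before.get(prev_ch, 0) + 1
            after[next_ch] = after.get(next_ch, 0) + 1
    total = sum(before.values())   # equals sum(after.values())
    # If nothing matched, the set below is empty and no division happens.
    return {c: [before.get(c, 0) / total, after.get(c, 0) / total]
            for c in set(before) | set(after)}


feed = ["希|B\n", "腊|E\n", "的|S\n", "经|B\n", "济|E\n", "的|S\n", "结|B\n"]
print(context_probabilities(feed, "的", "S"))
```

Each neighbour gets a two-element list, so a character that was only ever seen before the target has a zero in the second slot, and vice versa.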

The code of this step is as follows:

(1) Create and initialize the context dictionary

    def setDict3(testWord):  # testWord is the list of characters in the sentence
        testDict = {}
        for i in testWord:
            # one context dictionary per state B, M, E, S
            testDict[i] = {'B':{}, 'M':{}, 'E':{}, 'S':{}}
        return testDict

(2) Context relation probability function in feature 4

    def charDJudge(i, mark1):  # i is the character to examine, mark1 is its state
        testDict = {}
        lastSum = 0
        nextSum = 0
        for j in range(wordFeedLen):
            # j > 0 keeps wordFeed[j-1] from wrapping around to the last line
            if i == wordFeed[j][0] and wordFeed[j][2] == mark1 and j > 0 and j+1 < len(wordFeed):
                # context before the character
                if wordFeed[j-1][0] not in testDict:
                    testDict[wordFeed[j-1][0]] = [1, 0]
                else:
                    testDict[wordFeed[j-1][0]][0] = testDict[wordFeed[j-1][0]][0]+1
                lastSum = lastSum+1
                # context after the character
                if wordFeed[j+1][0] not in testDict:
                    testDict[wordFeed[j+1][0]] = [0, 1]
                else:
                    testDict[wordFeed[j+1][0]][1] = testDict[wordFeed[j+1][0]][1]+1
                nextSum = nextSum+1
        # turn the counts into probabilities
        for key in testDict:
            testDict[key][0] = testDict[key][0]/lastSum
            testDict[key][1] = testDict[key][1]/nextSum
        return testDict

Start word segmentation

After the character features have been learned, Chinese word segmentation can be carried out with the trained parameter values. For example, segment the sentence "希腊的经济结构较特殊" ("Greece's economic structure is rather special") entered by the user.

The first step is to convert the input "希腊的经济结构较特殊" into a character list: ['希', '腊', '的', '经', '济', '结', '构', '较', '特', '殊'].

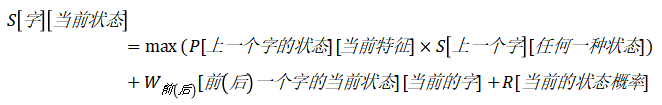

The second step is to obtain the features of each character and, from the state information, build the initial matrix that maps characters to states. Once the scores of every character in the list for the states B, M, E, and S have been computed, the remaining problems are easy to solve. The scores follow the Viterbi formula:
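Written out from the code in this step, the update for a character in the middle of the sentence has the following shape. This is a reconstruction: the position index i, the state indices j and k, and the symbol c_i for the i-th character are notation introduced here for illustration.

```latex
S_i(j) = \max_{k \in \{B, M, E, S\}} \bigl[ P_{c_{i-1}}(k, j) \cdot S_{i-1}(k) \bigr]
         + R_{c_i}(j)
         + W^{\text{before}}_{c_i, j}(c_{i-1})
         + W^{\text{after}}_{c_i, j}(c_{i+1})
```

In the code, the first character uses only the R term plus a single context term, and the last character omits the "W after" term.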

(Remarks: S is the value in the matrix, R is feature 2, P is feature 3, "W before" is the preceding-context part of feature 4, and "W after" is the following-context part of feature 4.)

Finally, applying the formula yields the matrix of correspondences between characters and states.

Part of the code for this step is as follows:

(1) Initialize the character list first

    testList = ['希', '腊', '的', '经', '济', '结', '构', '较', '特', '殊']

(2) Obtain feature 2, feature 3, and feature 4 through the steps in the feature learning section above

    testCharaB = charaB(testList)  # feature 2: per-character BMES probabilities
    testCharaC = charaC(testList)  # feature 3: transition frequencies
    testCharaD = charaD(testList)  # feature 4: context relationships

(3) Calculate the character-state correspondence matrix and the backtracking path

    column = ['B','M','E','S']
    relaDict = setDict(testList)
    wayList = []  # stores the backtracking path
    a = 0
    b = 0
    c = 0
    d = 0
    for i in range(len(testList)):
        oneWaylist = []  # temporarily stores the backtracking path for this character

(4) Realize the Viterbi formula

(In the general case, e is the probability from the preceding-context part of feature 4 for the target character, and f is the probability from the following-context part.)

        for j in range(len(column)):
            if i == 0:
                # first character: there is no previous character
                if i+1 >= len(testList) or testList[i+1] not in testCharaD[testList[i]][column[j]]:
                    e = 0
                else:
                    e = testCharaD[testList[i]][column[j]][testList[i+1]][0]
                relaDict[testList[i]][j] = e + testCharaB[testList[i]][j]
            elif i > 0 and i < len(testList)-1:
                a = testCharaC[testList[i-1]][0][j] * relaDict[testList[i-1]][0]
                b = testCharaC[testList[i-1]][1][j] * relaDict[testList[i-1]][1]
                c = testCharaC[testList[i-1]][2][j] * relaDict[testList[i-1]][2]
                d = testCharaC[testList[i-1]][3][j] * relaDict[testList[i-1]][3]
                if testList[i-1] not in testCharaD[testList[i]][column[j]]:  # feature 4, preceding context
                    e = 0
                else:
                    e = testCharaD[testList[i]][column[j]][testList[i-1]][0]
                if testList[i+1] not in testCharaD[testList[i]][column[j]]:  # feature 4, following context
                    f = 0
                else:
                    f = testCharaD[testList[i]][column[j]][testList[i+1]][1]
                relaDict[testList[i]][j] = max(a,b,c,d) + testCharaB[testList[i]][j] + e + f
            elif i == len(testList)-1:
                # last character: there is no next character
                a = testCharaC[testList[i-1]][0][j] * relaDict[testList[i-1]][0]
                b = testCharaC[testList[i-1]][1][j] * relaDict[testList[i-1]][1]
                c = testCharaC[testList[i-1]][2][j] * relaDict[testList[i-1]][2]
                d = testCharaC[testList[i-1]][3][j] * relaDict[testList[i-1]][3]
                if testList[i-1] not in testCharaD[testList[i]][column[j]]:  # feature 4, preceding context
                    e = 0
                else:
                    e = testCharaD[testList[i]][column[j]][testList[i-1]][0]
                relaDict[testList[i]][j] = max(a,b,c,d) + testCharaB[testList[i]][j] + e

With the code above, the character-state correspondence matrix and the backtracking path are obtained; they give the tag of each character and therefore the word segmentation.
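How a score matrix and a backtracking path turn into words can be sketched in isolation. The snippet below is a minimal illustration with invented numbers: `backtrack`, `join_words`, `scores` and `back_pointers` are hypothetical names, and the layout (one row of four scores and four back-pointers per position) is an assumption chosen for clarity rather than the project's exact data structures.

```python
TAGS = "BMES"


def backtrack(scores, back_pointers):
    """Recover the best tag sequence.

    scores[i][j]        : score of tag j at position i
    back_pointers[i][j] : best tag index at position i-1 given tag j at position i
    """
    state = max(range(4), key=lambda j: scores[-1][j])   # best final tag
    path = [state]
    for i in range(len(scores) - 1, 0, -1):
        state = back_pointers[i][state]                   # follow the stored pointer
        path.append(state)
    path.reverse()
    return [TAGS[s] for s in path]


def join_words(chars, tags):
    """Cut the character sequence after every E or S tag."""
    words, current = [], ""
    for ch, tag in zip(chars, tags):
        current += ch
        if tag in "ES":
            words.append(current)
            current = ""
    if current:                                           # unfinished trailing word
        words.append(current)
    return words


chars = ["希", "腊", "的"]
scores = [[0.9, 0.0, 0.0, 0.1], [0.1, 0.2, 0.8, 0.1], [0.1, 0.0, 0.1, 0.9]]
back_pointers = [[0, 0, 0, 0], [0, 0, 0, 0], [2, 2, 2, 2]]

tags = backtrack(scores, back_pointers)
print(tags, join_words(chars, tags))
```

The key point is that only the last position is decided by taking the maximum score; every earlier tag is read from the back-pointer of the tag chosen after it.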

Complete code and screenshots of the project

Project code

    # -*- coding: utf-8 -*-
    """
    Created on Fri Oct 18 08:36:46 2019

    @author: JoeLiao's ASUS
    """

    # Global variables
    # Read the corpus ('utf-8-sig' strips a leading byte order mark if present)
    with open("msr_training.utf8.ic", 'r', encoding='utf-8-sig') as wordFile:
        wordFeed = wordFile.readlines()  # line[0] = character, line[2] = tag
    wordFeedLen = len(wordFeed)
    #print(wordFeed)

    # Build the list of characters
    def setList(a):  # a is the sentence to segment
        testWord = []
        for i in a:
            testWord.append(i)
        return testWord

    # Create and initialize the matrix (dictionary)
    def setDict(testWord):  # testWord is the list of characters in the sentence
        testDict = {}
        for i in testWord:
            testDict[i] = [0,0,0,0]  # [B,M,E,S]
        return testDict

    ####### Feature 2 #######
    # Feature 2: compute the state frequency matrix values
    def charaB(testWord):  # testWord is the list of characters in the sentence
        testDict = setDict(testWord)
        for i in testWord:
            B = 0
            M = 0
            E = 0
            S = 0
            BMESsum = 0
            for j in range(wordFeedLen):
                #print(wordFeed[j][0])
                if i == wordFeed[j][0]:
                    if wordFeed[j][2] == 'B':
                        B = B+1
                    elif wordFeed[j][2] == 'M':
                        M = M+1
                    elif wordFeed[j][2] == 'E':
                        E = E+1
                    elif wordFeed[j][2] == 'S':
                        S = S+1
            BMESsum = B+M+E+S
            if BMESsum > 0:  # characters not in the corpus keep [0,0,0,0]
                testDict[i] = [B/BMESsum, M/BMESsum, E/BMESsum, S/BMESsum]
        return testDict

    ####### Feature 3 #######
    # Create and initialize the 4x4 matrix (dictionary) for each character
    def setDict2(testWord):  # testWord is the list of characters in the sentence
        testDict = {}
        for i in testWord:
            # 4x4 matrix, rows and columns ordered [B,M,E,S]
            testDict[i] = [[0,0,0,0],[0,0,0,0],[0,0,0,0],[0,0,0,0]]
        return testDict

    # Counting function for feature 3
    def charCJudge(i, mark1):  # i is the character to examine, mark1 is its state
        B = 0
        M = 0
        E = 0
        S = 0
        BMESsum = 0
        for j in range(wordFeedLen):
            #print(wordFeed[j][0])
            if i == wordFeed[j][0] and wordFeed[j][2] == mark1 and j+1 < len(wordFeed):
                # count the state of the character that follows
                if wordFeed[j+1][2] == 'B':
                    B = B+1
                elif wordFeed[j+1][2] == 'M':
                    M = M+1
                elif wordFeed[j+1][2] == 'E':
                    E = E+1
                elif wordFeed[j+1][2] == 'S':
                    S = S+1
        BMESsum = B+M+E+S
        if BMESsum > 0:
            return [B/BMESsum, M/BMESsum, E/BMESsum, S/BMESsum]
        return [0, 0, 0, 0]

    # Feature 3: compute the transition frequency matrix values
    def charaC(testWord):
        testDict = setDict2(testWord)
        for i in testWord:
            j = 0
            for mark1 in ['B','M','E','S']:
                testDict[i][j] = charCJudge(i, mark1)
                j = j+1
                #print(i,j)
        #print(testDict)
        return testDict

    ####### Feature 4 #######
    # Create and initialize the context dictionary
    def setDict3(testWord):  # testWord is the list of characters in the sentence
        testDict = {}
        for i in testWord:
            # one context dictionary per state B, M, E, S
            testDict[i] = {'B':{}, 'M':{}, 'E':{}, 'S':{}}
        return testDict

    # Context relation probability function for feature 4
    def charDJudge(i, mark1):  # i is the character to examine, mark1 is its state
        testDict = {}
        lastSum = 0
        nextSum = 0
        for j in range(wordFeedLen):
            #print(wordFeed[j][0])
            # j > 0 keeps wordFeed[j-1] from wrapping around to the last line
            if i == wordFeed[j][0] and wordFeed[j][2] == mark1 and j > 0 and j+1 < len(wordFeed):
                # context before the character
                if wordFeed[j-1][0] not in testDict:
                    testDict[wordFeed[j-1][0]] = [1, 0]
                else:
                    testDict[wordFeed[j-1][0]][0] = testDict[wordFeed[j-1][0]][0]+1
                lastSum = lastSum+1
                # context after the character
                if wordFeed[j+1][0] not in testDict:
                    testDict[wordFeed[j+1][0]] = [0, 1]
                else:
                    testDict[wordFeed[j+1][0]][1] = testDict[wordFeed[j+1][0]][1]+1
                nextSum = nextSum+1
        # turn the counts into probabilities
        for key in testDict:
            testDict[key][0] = testDict[key][0]/lastSum
            testDict[key][1] = testDict[key][1]/nextSum
        return testDict

    # Feature 4: compute the context relations of each character
    def charaD(testWord):
        testDict = setDict3(testWord)
        for i in testWord:
            for mark1 in ['B','M','E','S']:
                testDict[i][mark1] = charDJudge(i, mark1)
        return testDict

    ####### Word segmentation #######
    # Build the segmented string from the [character, tag] pairs
    def getResult(signList):
        resultString = ''
        for iList in signList:
            if iList[1] == 'B':
                resultString = resultString+' '+iList[0]
            elif iList[1] == 'M':
                resultString = resultString+iList[0]
            elif iList[1] == 'E':
                resultString = resultString+iList[0]+' '
            elif iList[1] == 'S':
                resultString = resultString+' '+iList[0]+' '
        return resultString

    # Convert a state index into its tag
    def trans(num):
        if num == 0:
            return 'B'
        elif num == 1:
            return 'M'
        elif num == 2:
            return 'E'
        elif num == 3:
            return 'S'

    # Compute the character-state correspondence
    def separateWords(testString):  # testString is the sentence to segment
        testList = setList(testString)  # list of characters
        # Compute feature 2, feature 3 and feature 4
        print('Character list:', testList, '\n')
        testCharaB = charaB(testList)  # feature 2: per-character BMES probabilities
        #print('Feature 2:', testCharaB, '\n')
        testCharaC = charaC(testList)  # feature 3: transition frequencies
        #print('Feature 3:', testCharaC, '\n')
        testCharaD = charaD(testList)  # feature 4: context relationships
        print('Feature 4:', testCharaD, '\n')
        # Build the character-state correspondence matrix (dictionary)
        column = ['B','M','E','S']
        relaDict = setDict(testList)
        wayList = []  # stores the backtracking path
        a = 0
        b = 0
        c = 0
        d = 0
        for i in range(len(testList)):
            oneWaylist = []  # backtracking entries for this character
            for j in range(len(column)):
                #print(testList[i],column[j])
                if i == 0:
                    # first character: there is no previous character
                    if i+1 >= len(testList) or testList[i+1] not in testCharaD[testList[i]][column[j]]:
                        e = 0
                    else:
                        e = testCharaD[testList[i]][column[j]][testList[i+1]][0]
                    relaDict[testList[i]][j] = e + testCharaB[testList[i]][j]
                elif i > 0 and i < len(testList)-1:
                    a = testCharaC[testList[i-1]][0][j] * relaDict[testList[i-1]][0]
                    b = testCharaC[testList[i-1]][1][j] * relaDict[testList[i-1]][1]
                    c = testCharaC[testList[i-1]][2][j] * relaDict[testList[i-1]][2]
                    d = testCharaC[testList[i-1]][3][j] * relaDict[testList[i-1]][3]
                    if testList[i-1] not in testCharaD[testList[i]][column[j]]:  # feature 4, preceding context
                        e = 0
                    else:
                        e = testCharaD[testList[i]][column[j]][testList[i-1]][0]
                    if testList[i+1] not in testCharaD[testList[i]][column[j]]:  # feature 4, following context
                        f = 0
                    else:
                        f = testCharaD[testList[i]][column[j]][testList[i+1]][1]
                    relaDict[testList[i]][j] = max(a,b,c,d) + testCharaB[testList[i]][j] + e + f
                elif i == len(testList)-1:
                    # last character: there is no next character
                    a = testCharaC[testList[i-1]][0][j] * relaDict[testList[i-1]][0]
                    b = testCharaC[testList[i-1]][1][j] * relaDict[testList[i-1]][1]
                    c = testCharaC[testList[i-1]][2][j] * relaDict[testList[i-1]][2]
                    d = testCharaC[testList[i-1]][3][j] * relaDict[testList[i-1]][3]
                    if testList[i-1] not in testCharaD[testList[i]][column[j]]:  # feature 4, preceding context
                        e = 0
                    else:
                        e = testCharaD[testList[i]][column[j]][testList[i-1]][0]
                    relaDict[testList[i]][j] = max(a,b,c,d) + testCharaB[testList[i]][j] + e
                # remember which previous state gave the maximum for state j
                findMax = [a, b, c, d]
                oneWaylist.append(findMax.index(max(findMax)))
            #print(oneWaylist)
            wayList.append(oneWaylist)
        print('\nRelation matrix:', relaDict, '\n\nBacktracking path:', wayList)
        # Walk the backtracking path from the last character to the first
        signList = []
        for i in reversed(range(len(testList))):
            testWord = testList[i]
            if i == len(testList)-1:
                indexNum = relaDict[testWord].index(max(relaDict[testWord]))
            else:
                indexNum = nextIndexNum  # follow the pointer stored by the next character
            sign = trans(indexNum)
            signList.append([testWord, sign])
            nextIndexNum = wayList[i][indexNum]
        signList.reverse()
        print("\nSegmentation tags:", signList, '\n')
        print("Original sentence:", testString, '\n')
        print("Segmentation result:", getResult(signList))

    ################ Main ################
    import time
    #from multiprocessing.dummy import Pool as ThreadPool
    #pool = ThreadPool(processes=8)
    str1 = input("Enter the string to segment: ")
    print('\n')
    start = time.perf_counter()  # time.clock() was removed in Python 3.8
    separateWords(str1)
    #results2 = pool.map(separateWords, str1)
    #pool.close()
    #pool.join()
    print('\n')
    elapsed = time.perf_counter() - start
    print("Segmentation time:", elapsed, 'seconds')
    #input('****** Press Enter to exit ******')

Results screenshot

Screenshots without printing features two, three, and four:

Screenshot after printing features:

Feature 4 (partial screenshot)