This blog is used to record the process of learning how to use python to do word frequency statistics, followed by the English word frequency statistics in the previous article

Previous: python word frequency statistics and sort by word frequency

References: jieba reference documents

Table of contents

1. Introduction to jieba library

Jieba is an important third-party Chinese word segmentation function library in Python. It needs to

be installed through the pip command. By the way, use the -i parameter to specify the domestic mirror source, which is faster

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple jieba

The three common modes of jieba word segmentation are as follows

* Accurate mode, try to cut the sentence most accurately, suitable for text analysis, but the speed of word segmentation in precise mode is not satisfactory

* Full mode, scan all the words that can be formed into words in the sentence , the speed is very fast, but it cannot solve the ambiguity problem;

* Search engine mode, based on the precise mode, segment long words again, which is suitable for word segmentation in search engines.

Briefly introduce several commonly used methods of the jieba library.

jieba.lcut(s) Exact mode, returns a list type, general word segmentation uses this method

jieba.lcut(s, cut_all=True) Full mode, returns a list type,

jieba. lcut_for_search(s) search engine mode, returns a list type

Let's briefly look at the effects of the three methods

>>> import jieba

>>> jieba.lcut("青年一代是充满朝气、生机勃勃的")#精确模式

['青年一代', '是', '充满', '朝气', '、', '生机勃勃', '的']

>>> jieba.lcut("青年一代是充满朝气、生机勃勃的",cut_all=True)#全模式

['青年', '青年一代', '一代', '是', '充满', '满朝', '朝气', '、', '生机', '生机勃勃', '勃勃', '勃勃的']

>>> jieba.lcut_for_search("青年一代是充满朝气、生机勃勃的")#搜索引擎模式

['青年', '一代', '青年一代', '是', '充满', '朝气', '、', '生机', '勃勃', '生机勃勃', '的']

>>>

It can be seen that the precise mode is the most accurate for sentence segmentation, and is suitable for word frequency statistics in articles. The other two modes have their own emphasis. The full mode will provide as many words as possible, but it cannot resolve ambiguity. Search engine mode segmentation The resulting words are suitable as indexes or keywords for search engines.

Next, we try to segment "Water Margin" with precise mode, and count the word frequency after segmentation.

2. Some preparatory work

Similarly, it is also necessary to use the stop word list to deal with stop words and use related third-party libraries to solve the problem of Chinese punctuation.

Chinese punctuation can directly use the zhon library (not the built-in library, which needs to be installed manually)

import zhon.hanzi

punc = zhon.hanzi.punctuation #要去除的中文标点符号

print(punc)

#包括"#$%&'()*+,-/:;<=>@[\]^_`{|}~⦅⦆「」、 、〃〈〉《》「」『』【】〔〕〖〗〘〙〚〛〜〝〞〟〰〾〿–—‘’‛“”„‟…‧﹏﹑﹔·!?。。

In this way, the problem of Chinese punctuation is solved, but there is no Chinese stop word list in the stop word library of the nltk library I used in the previous article, so I found a new one on the Internet and added it to the nltk library Go to the inactive vocabulary library, so that it has a Chinese inactive vocabulary, I am really clever. jpg

Of course, you can also directly save the inactive vocabulary as a .txt file here, and just read it in when you use it , let’s introduce the above two operations

The stop word list shared by a big guy on github

I use the Baidu stop word list here, but the embarrassing thing is that it doesn't seem to be very effective in excluding the words in my selected text...

1. Add the Baidu inactive vocabulary to the inactive thesaurus in the nltk library

① First copy (download) the Baidu inactive vocabulary and save it as a .txt file. Pay attention to the document format, which must be one word (word) per line

② Next, find the stopwords path of nltk, usually in the lib of the python folder, the reference path is as follows, python3.9.7\Lib\nltk_data\corpora\stopwords You can directly

search for stopwords in lib, but the premise is You have installed the nltk library, of course there may be several stopwords under lib, don’t make a mistake, it’s the one under the nltk directory

③Copy the .txt file we mentioned earlier to stopwords, remove the suffix name .txt and you’re done

, Next, try to add the stop word list, whether it can be successfully loaded or not

>>> from nltk.corpus import stopwords

>>> baidu_stopwords = stopwords.words("baidu_stopwords")

>>> print(baidu_stopwords[:100])

['--', '?', '“', '”', '》', '--', 'able', 'about', 'above', 'according', 'accordingly', 'across', 'actually', 'after', 'afterwards', 'again', 'against', "ain't", 'all', 'allow', 'allows', 'almost', 'alone', 'along', 'already', 'also', 'although', 'always', 'am', 'among', 'amongst', 'an', 'and', 'another', 'any', 'anybody', 'anyhow', 'anyone', 'anything', 'anyway', 'anyways', 'anywhere', 'apart', 'appear', 'appreciate', 'appropriate', 'are', "aren't", 'around', 'as', "a's", 'aside', 'ask', 'asking', 'associated', 'at', 'available', 'away', 'awfully', 'be', 'became', 'because', 'become', 'becomes', 'becoming', 'been', 'before', 'beforehand', 'behind', 'being', 'believe', 'below', 'beside', 'besides', 'best', 'better', 'between', 'beyond', 'both', 'brief', 'but', 'by', 'came', 'can', 'cannot', 'cant', "can't", 'cause', 'causes', 'certain', 'certainly', 'changes', 'clearly', "c'mon", 'co', 'com', 'come', 'comes', 'concerning', 'consequently']

>>> print(baidu_stopwords[-100:])

['起', '起来', '起见', '趁', '趁着', '越是', '跟', '转动', '转变', '转贴', '较', '较之', '边', '达到', '迅速', '过', '过去', '过来', '运用', '还是', '还有', '这', '这个', '这么', '这么些', '这么样', '这么点儿', '这些', '这会儿', '这儿', '这就是说', '这时', '这样', '这点', '这种', '这边', '这里', '这麽', '进入', '进步', '进而', '进行', '连', '连同', '适应', '适当', '适用', '逐步', '逐渐', '通常', '通过', '造成', '遇到', '遭到', '避免', '那', '那个', '那么', '那么些', '那么样', '那些', '那会儿', '那儿', '那时', '那样', '那边', '那里', '那麽', '部分', '鄙人', '采取', '里面', '重大', '重新', '重要', '鉴于', '问题', '防止', '阿', '附近', '限制', '除', '除了', '除此之外', '除非', '随', '随着', '随著', '集中', '需要', '非但', '非常', '非徒', '靠', '顺', '顺着', '首先', '高兴', '是不是', '说说']

>>>

Very good, the loading is successful, which means that we have successfully added the Chinese stop word list to the nltk library. In fact, after learning this operation, we can define our own "stop word list" according to our own needs, and put punctuation marks, stop words Words, etc. are placed in a file, so that when used, the nltk library is directly imported, and there is no need to import other libraries

2. Save the Baidu stop word list as a .txt file and read it when needed

with open('E:\Python_code\\blog\\baidu_stopwords.txt',encoding="utf-8") as fp:

text = fp.read()

print(text[:100],text[-100:])

3. Chinese word frequency statistics

The whole processing process is roughly the same as the English word frequency statistics in the previous article, mainly in these steps:

* read the document

* word segmentation

* remove punctuation and stop words

* count word frequency

* sort

I don’t count words with a length of 1 here, so the step of removing punctuation marks can be omitted. In addition, using a third-party library can count word frequency more quickly, and often integrates a sorting function.

import jieba

import zhon.hanzi

from nltk.corpus import stopwords

punc = zhon.hanzi.punctuation #要去除的中文标点符号

baidu_stopwords = stopwords.words('baidu_stopwords') #导入停用词表

#读入文件

with open('E:\Python_code\Big_data\homework4\水浒传.txt',encoding="utf-8") as fp:

text = fp.read()

ls = jieba.lcut(text)#分词

#统计词频

counts= {

}

for i in ls:

if len(i)>1:

counts[i] = counts.get(i,0)+1

#去标点(由于我这里不统计长度为1的词,去标点这步可省略)

# for p in punc:

# counts.pop(p,0)

for word in baidu_stopwords: #去掉停用词

counts.pop(word,0)

ls1 = sorted(counts.items(),key=lambda x:x[1],reverse=True) #词频排序

print(ls1[:20])

Same as the previous method, we can also use a third-party library to complete word frequency statistics

with the help of collections library

import jieba

import zhon.hanzi

from nltk.corpus import stopwords

import collections

punc = zhon.hanzi.punctuation #要去除的中文标点符号

baidu_stopwords = stopwords.words('baidu_stopwords') #导入停用词表

#读入文件

with open('E:\Python_code\Big_data\homework4\水浒传.txt',encoding="utf-8") as fp:

text = fp.read()

ls = jieba.lcut(text)

#去掉长度为1的词,包括标点

newls = []

for i in ls:

if len(i)>1:

newls.append(i)

#统计词频

counts = collections.Counter(newls)

for word in baidu_stopwords: #去掉停用词

counts.pop(word,0)

print(counts.most_common(20))

With the pandas library

import jieba

import zhon.hanzi

from nltk.corpus import stopwords

import pandas as pd

punc = zhon.hanzi.punctuation #要去除的中文标点符号

baidu_stopwords = stopwords.words('baidu_stopwords') #导入停用词表

#读入文件

with open('E:\Python_code\Big_data\homework4\水浒传.txt',encoding="utf-8") as fp:

text = fp.read()

ls = jieba.lcut(text)

#去掉长度为1的词,包括标点

newls = []

for i in ls:

if len(i)>1:

newls.append(i)

#统计词频

ds = pd.Series(newls).value_counts()

for i in baidu_stopwords:

try: #处理找不到元素i时pop()方法可能出现的错误

ds.pop(i)

except:

continue #没有i这个词,跳过本次,继续下一个词

print(ds[:20])

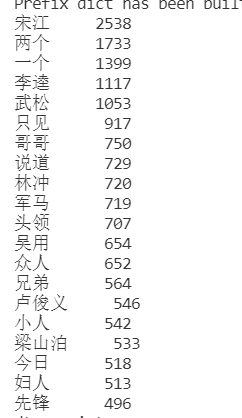

Post a test result

4. Jieba custom word segmentation and part-of-speech analysis

Jieba supports custom tokenizers, which can perform part-of-speech tagging, but it cannot guarantee that all words are tagged and the analysis speed is relatively slow. Here is only an example. The following uses a custom tokenizer to analyze the development plan of the "14th Five-Year Plan" information and communication industry . "Interpretation and part-of-speech analysis Part-

of-speech table

| mark | part of speech | describe |

|---|---|---|

| Ag | Morpheme | Adjective morpheme. The adjective code is a, and the morpheme code g is preceded by A. a The adjective takes the first letter of the English adjective adjective. |

| ad | adverb | Adjectives directly as adverbial. Adjective code a and adverb code d together. |

| an | adjective | Adjectives that function as nouns. Adjective code a and noun code n are combined. |

| b | distinguishing words | Take the initial consonant of the Chinese character "别". |

| c | conjunction | Take the first letter of the English conjunction conjunction. |

| dg submorpheme | adverbial morpheme | The adverb code is d, and the morpheme code g is preceded by D. d The adverb takes the second letter of adverb, because its first letter has been used as an adjective. |

| e | interjection | Take the first letter of the English interjection exclamation. |

| f | Position of the word | Take the Chinese character "square" |

| g | morpheme | Most of the morphemes can be used as the "root" of compound words, taking the initial consonant of the Chinese character "root". |

| h | Prefix | Take the first letter of the English head. |

| i | idiom | Take the first letter of the English idiom idiom. |

| j | Abbreviation | Take the initial consonant of the Chinese character "Jane". |

| k | Followed by ingredients | |

| l | idioms | The idiom has not yet become an idiom, it is a bit "temporary", and takes the initial consonant of "Lin". |

| m | numeral | Take the third letter of English numeral, n, u have been used in other ways. |

| Of | noun morpheme | Noun morpheme. The noun code is n, and the morpheme code g is preceded by N. The n noun takes the first letter of the English noun noun. |

| nr | person's name | The noun code n is combined with the initial consonant of "people (ren)". |

| ns | place name | Noun code n and place word code s are combined together. |

| nt | Institutional groups | The initial consonant of "tuan" is t, and the noun code n and t are combined together. |

| nz | other proper names | The first letter of the initial consonant of "special" is z, and the noun codes n and z are combined together. |

| o | Onomatopoeia | Take the first letter of the English onomatopoeia. |

| p | preposition | Take the first letter of the English preposition prepositional. |

| q | quantifier | Take the first letter of English quantity. |

| r | pronoun | Take the second letter of the English pronoun pronoun, because p has been used as a preposition. |

| s | place word | Take the first letter of the English space. |

| tg | tense | Time part of speech morpheme. The code of the time word is t, and T is placed in front of the code g of the morpheme. t time word takes the first letter of English time. |

| u | particle | Take the English auxiliary word auxiliary |

| vg | verb morpheme | Verb morpheme. The verb code is v. Put V in front of the code g of the morpheme. The v verb takes the first letter of the English verb verb. |

| vd | Adverb | Verbs that act directly as adverbials. Verbs and adverbs are coded together. |

| vn | noun verb | Refers to verbs that function as nouns. Verbs and nouns are coded together. |

| w | punctuation marks | |

| x | non-morpheme word | A non-morpheme word is just a symbol, and the letter x is usually used to represent an unknown number, a symbol. |

| y | Modal | Take the initial consonant of the Chinese character "language". |

| z | status word | Get the first letter of the initial consonant of Chinese character " shape ". |

| and | unknown word | Unrecognizable words and user-defined phrases. Take the first two letters of English Unkonwn. (non-Peking University standard, defined in CSW word segmentation) |

import jieba.posseg as pseg

with open('E:/Python_code/blog/通信行业规划.txt',encoding="utf-8") as f:

text = f.read()

wordit = pseg.cut(text) #自定义分词,返回一个可迭代类型

count_flag = {

}

for word ,flag in wordit:

if flag not in count_flag.keys(): #如果没有flag键,就添加flag键,对应的值为一个空列表,每个键代表一种词性

count_flag[flag] = []

elif len(word)>1: #有对应的词性键,就将词加入到键对应的列表中,跳过长度为1的词

count_flag[flag].append(word)

print(count_flag)