OceanBase is a financial-grade distributed relational database that offers strong data consistency, high availability, high performance, online scaling, high compatibility with SQL standards and mainstream relational databases, and low cost. However, it has a steep learning curve and lacks financial computing functions and streaming incremental computation.

DolphinDB is a high-performance distributed time-series database developed in China. It supports SQL and processes data with a Python-like scripting syntax, so the learning cost is low. It provides more than 1,500 functions, which are expressive enough for complex data processing scenarios and greatly reduce development costs. DolphinDB also has powerful streaming incremental computing capabilities that meet users' real-time data processing needs.

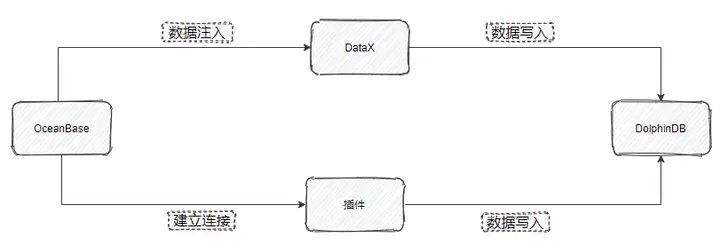

This article aims to provide a concise and clear reference for users who need to migrate from OceanBase to DolphinDB. The overall framework is as follows:

1. Application requirements

Many existing OceanBase users need to migrate or synchronize their data to DolphinDB. DolphinDB provides several flexible data synchronization methods that support full or incremental synchronization of massive data from multiple data sources. Based on this requirement, the practical case in this article provides a high-performance solution for migrating tick-by-tick data from OceanBase to DolphinDB.

The tick-by-tick trade data for 2021.01.04 is currently stored in OceanBase. A sample of the data is shown below:

| SecurityID | TradeTime | TradePrice | TradeQty | TradeAmount | BuyNo | SellNo | TradeIndex | ChannelNo | TradeBSFlag | BizIndex |
|---|---|---|---|---|---|---|---|---|---|---|
| 600035 | 2021.01.04T09:32:59.000 | 2.9 | 100 | 290 | 574988 | 539871 | 266613 | 6 | B | 802050 |
| 600035 | 2021.01.04T09:25:00.000 | 2.91 | 2900 | 8439 | 177811 | 36575 | 8187 | 6 | N | 45204 |
| 600035 | 2021.01.04T09:25:00.000 | 2.91 | 2600 | 7566 | 177811 | 3710 | 8188 | 6 | N | 45205 |
| 600035 | 2021.01.04T09:32:59.000 | 2.9 | 100 | 290 | 574988 | 539872 | 266614 | 6 | B | 802051 |
| 600035 | 2021.01.04T09:25:00.000 | 2.91 | 500 | 1455 | 177811 | 8762 | 8189 | 6 | N | 45206 |
| 600035 | 2021.01.04T09:32:59.000 | 2.9 | 100 | 290 | 574988 | 539873 | 266615 | 6 | B | 802052 |
| 600035 | 2021.01.04T09:30:02.000 | 2.9 | 100 | 290 | 18941 | 205774 | 44717 | 6 | S | 252209 |
| 600035 | 2021.01.04T09:32:59.000 | 2.9 | 100 | 290 | 574988 | 539880 | 266616 | 6 | B | 802053 |
| 600035 | 2021.01.04T09:30:02.000 | 2.9 | 100 | 290 | 18941 | 209284 | 46106 | 6 | S | 256679 |
| 600035 | 2021.01.04T09:32:59.000 | 2.9 | 200 | 580 | 574988 | 539884 | 266617 | 6 | B | 802054 |
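As a quick sanity check on the sample, the Python sketch below parses one row of the table and confirms that TradeAmount equals TradePrice multiplied by TradeQty. The `Tick` class and the `parse_row` helper are hypothetical names introduced only for illustration, and the price-times-quantity relation is an assumption that happens to hold for the rows shown above.

```python
from dataclasses import dataclass
from datetime import datetime
import math


@dataclass
class Tick:
    security_id: str
    trade_time: datetime
    trade_price: float
    trade_qty: int
    trade_amount: float


def parse_row(row: str) -> Tick:
    """Parse the first five cells of a '|'-delimited sample row."""
    cells = [c.strip() for c in row.strip().strip("|").split("|")]
    return Tick(
        security_id=cells[0],
        # The sample timestamps look like 2021.01.04T09:25:00.000
        trade_time=datetime.strptime(cells[1], "%Y.%m.%dT%H:%M:%S.%f"),
        trade_price=float(cells[2]),
        trade_qty=int(cells[3]),
        trade_amount=float(cells[4]),
    )


row = "| 600035 | 2021.01.04T09:25:00.000 | 2.91 | 2900 | 8439 | 177811 | 36575 | 8187 | 6 | N | 45204 |"
tick = parse_row(row)
# Compare with a tolerance because 2.91 is not exactly representable as a float
amount_matches = math.isclose(tick.trade_price * tick.trade_qty, tick.trade_amount, rel_tol=1e-9)
print(tick.trade_time.isoformat(), amount_matches)
```

A check like this can be run on a handful of rows before and after migration to confirm that values survive the type conversion.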

2. Implementation method

There are three ways to migrate data from OceanBase to DolphinDB:

- MySQL plugin

The MySQL plugin is provided by DolphinDB for importing MySQL data, and it also works with OceanBase in MySQL mode. It is called from DolphinDB scripts and runs in the same process space as the server, so it can write data from OceanBase to DolphinDB efficiently.

The MySQL plugin provides the following functions. For details on their usage, see the DolphinDB MySQL Plugin documentation.

- mysql::connect(host, port, user, password, db)

- mysql::showTables(connection)

- mysql::extractSchema(connection, tableName)

- mysql::load(connection, table_or_query, [schema], [startRow], [rowNum], [allowEmptyTable])

- mysql::loadEx(connection, dbHandle, tableName, partitionColumns, table_or_query, [schema], [startRow], [rowNum], [transform])

- ODBC plug-in

The ODBC (Open Database Connectivity) plug-in is an open-source plug-in provided by DolphinDB that connects to external databases through the ODBC interface. Its usage is similar to that of the MySQL plugin and is not described in this article. Interested readers can refer to the ODBC plug-in usage guide.

- DataX driver

DataX is an extensible data synchronization framework. It abstracts the synchronization of different data sources into a Reader plug-in, which reads data from the source, and a Writer plug-in, which writes data to the target. In theory, the DataX framework can synchronize data between any types of data sources.

DolphinDB provides open-source drivers based on the DataX Reader and Writer interfaces. The DolphinDBWriter plug-in writes data to DolphinDB. By combining one of DataX's existing reader plug-ins with the DolphinDBWriter plug-in, data can be synchronized from different data sources to DolphinDB. Users can include the DataX driver package in a Java project to develop software that migrates data from OceanBase to DolphinDB.

Development with the DataX driver is based on the Java SDK and supports high availability.
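The Reader/Writer split described above can be illustrated with a small Python sketch. This is only a conceptual model under simplifying assumptions, not DataX's actual Java API: `batched` and `synchronize` are hypothetical helpers, the reader is any iterable of rows, and the writer is any callable that accepts a batch.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Record = Tuple  # one row of column values


def batched(records: Iterable[Record], batch_size: int) -> Iterator[List[Record]]:
    """Group records from the reader into lists of at most batch_size rows."""
    batch: List[Record] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield batch


def synchronize(reader: Iterable[Record],
                writer: Callable[[List[Record]], None],
                batch_size: int) -> int:
    """Pull rows from the reader, hand them to the writer in batches, return the row count."""
    total = 0
    for batch in batched(reader, batch_size):
        writer(batch)
        total += len(batch)
    return total


source_rows = [("600035", 2.9, 100), ("600035", 2.91, 2900), ("600035", 2.91, 2600)]
target: List[Record] = []  # stands in for the target table
written = synchronize(iter(source_rows), target.extend, batch_size=2)
print(written)
```

Because the reader and writer only meet through the record stream, either side can be swapped without touching the other, which is the property that lets an OceanBase reader be paired with a DolphinDB writer.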

| Method | Data writing efficiency | High availability |
|---|---|---|
| MySQL plugin | High | Not supported |
| DataX driver | Medium | Supported |

3. Migration case and operation steps

3.1 Create a table in DolphinDB

For the test data above, we need to create a corresponding database and table in DolphinDB to store the migrated data. For real data, the database and table design should take into account the fields, data types, and data volume of the migrated data, whether partitioning is needed and which partitioning scheme to use, and whether to use the OLAP or TSDB engine. For table design practices, see the DolphinDB database partitioning tutorial.

In this example, the content of the table creation file createTable.dos is as follows:

    def createTick(dbName, tbName){
        // Drop the database if it already exists
        if(existsDatabase(dbName)){
            dropDatabase(dbName)
        }
        // Composite partitioning: by date (VALUE) and by security ID (HASH)
        db1 = database(, VALUE, 2020.01.01..2021.01.01)
        db2 = database(, HASH, [SYMBOL, 10])
        db = database(dbName, COMPO, [db1, db2], , "TSDB")
        // Column order follows the source table in OceanBase
        name = `SecurityID`TradeTime`TradePrice`TradeQty`TradeAmount`BuyNo`SellNo`TradeIndex`ChannelNo`TradeBSFlag`BizIndex
        type = `SYMBOL`TIMESTAMP`DOUBLE`INT`DOUBLE`INT`INT`INT`INT`SYMBOL`INT
        schemaTable = table(1:0, name, type)
        db.createPartitionedTable(table=schemaTable, tableName=tbName, partitionColumns=`TradeTime`SecurityID, compressMethods={TradeTime:"delta"}, sortColumns=`SecurityID`TradeTime, keepDuplicates=ALL)
    }

    dbName="dfs://TSDB_tick"
    tbName="tick"
    createTick(dbName, tbName)

The mapping of data fields migrated from OceanBase to DolphinDB is as follows:

| OceanBase field meaning | OceanBase field | OceanBase data type | DolphinDB field meaning | DolphinDB field | DolphinDB data type |
|---|---|---|---|---|---|
| Security code | SecurityID | varchar(10) | Security code | SecurityID | SYMBOL |
| Trade time | TradeTime | timestamp | Trade time | TradeTime | TIMESTAMP |
| Trade price | TradePrice | double | Trade price | TradePrice | DOUBLE |
| Trade quantity | TradeQty | int(11) | Trade quantity | TradeQty | INT |
| Trade amount | TradeAmount | double | Trade amount | TradeAmount | DOUBLE |
| Buy order index | BuyNo | int(11) | Buy order index | BuyNo | INT |
| Sell order index | SellNo | int(11) | Sell order index | SellNo | INT |
| Trade index | TradeIndex | int(11) | Trade index | TradeIndex | INT |
| Channel code | ChannelNo | int(11) | Channel code | ChannelNo | INT |
| Trade direction | TradeBSFlag | varchar(20) | Trade direction | TradeBSFlag | SYMBOL |
| Business serial number | BizIndex | int(11) | Business serial number | BizIndex | INT |
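The mapping in the table can also be written down as code, which helps when the column list is long. The Python sketch below is a minimal illustration under stated assumptions: the dictionary covers only the four OceanBase types that appear in this table, mapping varchar to SYMBOL is this article's choice (STRING may suit other data better), and `to_dolphindb_type` and `writer_table_entries` are hypothetical helper names. The `DT_` prefix matches the type values used in the DataX writer configuration in section 3.3.

```python
import re

# Assumed mapping, restricted to the OceanBase types listed in the table above
OCEANBASE_TO_DOLPHINDB = {
    "varchar": "SYMBOL",
    "timestamp": "TIMESTAMP",
    "double": "DOUBLE",
    "int": "INT",
}


def to_dolphindb_type(oceanbase_type: str) -> str:
    """Strip a length suffix such as (11) and look up the DolphinDB type."""
    base = re.sub(r"\(.*\)", "", oceanbase_type).strip().lower()
    return OCEANBASE_TO_DOLPHINDB[base]


def writer_table_entries(columns):
    """Build DataX writer 'table' entries from (name, OceanBase type) pairs, keeping their order."""
    return [{"type": "DT_" + to_dolphindb_type(t), "name": name} for name, t in columns]


columns = [
    ("SecurityID", "varchar(10)"),
    ("TradeTime", "timestamp"),
    ("TradePrice", "double"),
    ("TradeQty", "int(11)"),
]
entries = writer_table_entries(columns)
print(entries[0])
```

An unmapped source type raises a `KeyError`, which is deliberate here: it forces an explicit decision for each new type instead of a silent default.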

3.2 Migrating via the MySQL plugin

3.2.1 Installing the MySQL plugin

For installation instructions, see the DolphinDB MySQL Plugin documentation.

3.2.2 Synchronizing data

1. Run the following command to load the MySQL plugin

    loadPlugin("ServerPath/plugins/mysql/PluginMySQL.txt")

2. Run the following command to establish a connection with OceanBase

    conn = mysql::connect(`127.0.0.1, 2881, `root, `123456, `db1)

3. Run the following command to start synchronizing data

    mysql::loadEx(conn, database('dfs://TSDB_tick'), `tick, `TradeTime`SecurityID, "tick")

A total of 27,211,975 records are synchronized in about 52 seconds.

4. Synchronize incremental data

To synchronize incrementally, replace the source table name passed to mysql::loadEx with a query statement, and use DolphinDB's scheduleJob function to set up a scheduled job. In the following example, the previous day's data is synchronized at 00:05 every day:

    def scheduleLoad(){
        // Build the query for the previous day's data
        sqlQuery = "select * from tick where date(TradeTime) = '" + temporalFormat(today()-1, 'yyyy-MM-dd') + "';"
        // Open the connection inside the job so that it does not depend on session variables
        conn = mysql::connect(`127.0.0.1, 2881, `root, `123456, `db1)
        mysql::loadEx(conn, database('dfs://TSDB_tick'), `tick, `TradeTime`SecurityID, sqlQuery)
    }
    scheduleJob(jobId=`test, jobDesc="test", jobFunc=scheduleLoad, scheduleTime=00:05m, startDate=2023.04.04, endDate=2024.01.01, frequency='D')

Note: To prevent the scheduled job from failing to parse when the node restarts, add preloadModules=plugins::mysql to the configuration file in advance.
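To make the generated statement concrete, the following Python sketch reproduces the query string that the scheduled job builds for a given run date. It is an illustration only: `previous_day_query` is a hypothetical helper, and the table and column names are the ones used in this article.

```python
from datetime import date, timedelta


def previous_day_query(today: date, table: str = "tick") -> str:
    """Return the query that selects the rows of the day before `today`."""
    previous = today - timedelta(days=1)
    # date.isoformat() yields yyyy-MM-dd, the format the date() comparison expects
    return f"select * from {table} where date(TradeTime) = '{previous.isoformat()}'"


print(previous_day_query(date(2023, 4, 4)))
# select * from tick where date(TradeTime) = '2023-04-03'
```

Printing the statement for a month boundary, such as a run on the first day of a month, is a cheap way to confirm that the date arithmetic rolls back correctly before the job is scheduled.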

3.3 Migration driven by DataX

3.3.1 Deploy DataX

Download the DataX compressed package from the DataX download address and extract it to a directory of your choice.

3.3.2 Deploy the DataX-DolphinDBWriter plug-in

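Copy everything under the ./dist/dolphindbwriter directory of the DataX-DolphinDBWriter source code into the DataX/plugin/writer directory.

3.3.3 Run the DataX job

1. Configure the json file for your environment. For details, see the DataX DolphinDB-Writer configuration items in the appendix.

The content of the configuration file OceanBase_tick.json is shown below. Place the json file in a directory of your choice; in this tutorial it is placed in the datax-writer-master/ddb_script/ directory.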

    {
        "job": {
            "setting": {
                "speed": {
                    "channel": 1
                }
            },
            "content": [
                {
                    "writer": {
                        "parameter": {
                            "dbPath": "dfs://TSDB_tick",
                            "tableName": "tick",
                            "userId": "admin",
                            "pwd": "123456",
                            "host": "127.0.0.1",
                            "batchSize": 200000,
                            "table": [
                                {"type": "DT_SYMBOL", "name": "SecurityID"},
                                {"type": "DT_TIMESTAMP", "name": "TradeTime"},
                                {"type": "DT_DOUBLE", "name": "TradePrice"},
                                {"type": "DT_INT", "name": "TradeQty"},
                                {"type": "DT_DOUBLE", "name": "TradeAmount"},
                                {"type": "DT_INT", "name": "BuyNo"},
                                {"type": "DT_INT", "name": "SellNo"},
                                {"type": "DT_INT", "name": "TradeIndex"},
                                {"type": "DT_INT", "name": "ChannelNo"},
                                {"type": "DT_SYMBOL", "name": "TradeBSFlag"},
                                {"type": "DT_INT", "name": "BizIndex"}
                            ],
                            "port": 8800
                        },
                        "name": "dolphindbwriter"
                    },
                    "reader": {
                        "name": "oceanbasev10reader",
                        "parameter": {
                            "username": "root",
                            "password": "123456",
                            "batchSize": 10000,
                            "column": ["*"],
                            "connection": [
                                {
                                    "table": ["tick"],
                                    "jdbcUrl": ["jdbc:oceanbase://127.0.0.1:2883/db1"]
                                }
                            ]
                        }
                    }
                }
            ]
        }
    }

2. Run the following commands in a Linux terminal to execute the DataX job

    cd ./dataX/bin/
    python datax.py ../../datax-writer-master/ddb_script/OceanBase_tick.json

3. Check the DataX synchronization result

    Job start time            : 2023-04-03 14:58:52
    Job end time              : 2023-04-03 15:00:52
    Total elapsed time        : 120s
    Average throughput        : 12.32MB/s
    Record write speed        : 226766rec/s
    Total records read        : 27211975
    Total read/write failures : 0

4. Synchronize incremental data

To synchronize incremental data with DataX, add a "where" condition to the "reader" section of OceanBase_tick.json to filter the data by date, so that each run synchronizes only the rows that pass the filter. For example, to synchronize the previous day's data:

    "reader": {
        "name": "oceanbasev10reader",
        "parameter": {
            "username": "root",
            "password": "123456",
            "batchSize": 10000,
            "column": ["*"],
            "connection": [
                {
                    "table": ["tick"],
                    "jdbcUrl": ["jdbc:oceanbase://127.0.0.1:2883/db1"]
                }
            ],
            "where": "date(TradeTime) = (SELECT DATE_ADD(CURDATE(), INTERVAL -1 DAY))"
        }
    }

4. Benchmark performance

The data was migrated with the MySQL plugin and with the DataX driver. For 27.21 million records, the migration times compare as follows:

| MySQL plugin | DataX |
|---|---|
| 52s | 120s |
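The elapsed times can be turned into throughput with simple arithmetic. The Python sketch below uses only the figures reported in this article (27,211,975 records, about 52 seconds, and 120 seconds); since the 52-second figure is approximate, the derived rate for the MySQL plugin is approximate as well.

```python
TOTAL_RECORDS = 27_211_975
ELAPSED_SECONDS = {"MySQL plugin": 52, "DataX": 120}

# Whole records per second for each method
throughput = {name: TOTAL_RECORDS // secs for name, secs in ELAPSED_SECONDS.items()}
for name, rate in throughput.items():
    print(f"{name}: {rate} records/s")

# How many times faster the MySQL plugin finished in this test
speedup = ELAPSED_SECONDS["DataX"] / ELAPSED_SECONDS["MySQL plugin"]
print(f"speedup: {speedup:.2f}x")
```

The DataX figure of 226766 records per second agrees with the write speed in the DataX job summary, and the MySQL plugin comes out at roughly 2.3 times that rate.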

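In summary, both the MySQL plugin and DataX can migrate data from OceanBase to DolphinDB, but each has advantages and disadvantages:

- The MySQL plugin performs better and is suitable for bulk data import, but it is less convenient to operate and maintain.

- DataX imports data more slowly and is suitable for data sets below the tens-of-millions-of-records scale, but its log tracing, extensibility, and management are more convenient.

Users can choose an import method based on their data volume and engineering convenience. Due to space limits, some other operations involving DolphinDB and the DataX framework are not shown in more detail, and users need to adjust them to their actual situation. Corrections of any omissions or defects in this tutorial are welcome.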

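Appendix

DataX DolphinDB-Writer configuration items

| Item | Required | Data type | Default | Description |
|---|---|---|---|---|
| host | Yes | string | None | Server host |
| port | Yes | int | None | Server port |
| userId | Yes | string | None | DolphinDB user name. When importing into a distributed database, the user must have the required permissions; otherwise an error is returned |
| pwd | Yes | string | None | DolphinDB user password |
| dbPath | Yes | string | None | Name of the target distributed database, for example "dfs://MYDB". |
| tableName | Yes | string | None | Name of the target table |
| batchSize | No | int | 10000000 | Number of records DataX writes to DolphinDB per batch |
| table | Yes | | | The set of fields of the target table; see the table configuration details below |
| saveFunctionName | No | string | None | Custom data processing function. If this item is not specified, the plug-in submits the data received from the reader to DolphinDB and writes it to the target table with the tableInsert function; if it is specified, the given function replaces tableInsert. |
| saveFunctionDef | No | string | None | Custom function for writing data to the database, implemented as a DolphinDB script. The function must accept three parameters: dfsPath (distributed database path), tbName (table name), and data (the data imported from DataX, as a table). |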

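table configuration details

table configures the set of fields of the target table. Its internal structure is

    {"name": "columnName", "type": "DT_STRING", "isKeyField": true}

Note that the order of the column definitions here must exactly match the order of the columns extracted from the source table.

- name: the field name.

- isKeyField: whether the field is a unique key; composite unique keys are allowed. This attribute is used in data update scenarios to identify the primary key of the updated data. It does not need to be set if no data is updated.

- type: the enumeration values and their corresponding DolphinDB data types are as follows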

| DolphinDB type | Configuration value |
|---|---|
| DOUBLE | DT_DOUBLE |
| FLOAT | DT_FLOAT |
| BOOL | DT_BOOL |
| DATE | DT_DATE |
| MONTH | DT_MONTH |
| DATETIME | DT_DATETIME |
| TIME | DT_TIME |
| SECOND | DT_SECOND |
| TIMESTAMP | DT_TIMESTAMP |
| NANOTIME | DT_NANOTIME |
| NANOTIMESTAMP | DT_NANOTIMESTAMP |
| INT | DT_INT |
| LONG | DT_LONG |
| UUID | DT_UUID |
| SHORT | DT_SHORT |
| STRING | DT_STRING |
| SYMBOL | DT_SYMBOL |
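The required items listed in this appendix can be checked before a job is launched. The Python sketch below is a minimal pre-flight check under stated assumptions: `validate_writer_parameter` is a hypothetical helper and not part of DataX or the DolphinDBWriter plug-in, and it only verifies that the required keys exist and that each table entry has a name and a type beginning with `DT_`.

```python
# Items marked as required in the configuration table of this appendix
REQUIRED_KEYS = ("host", "port", "userId", "pwd", "dbPath", "tableName", "table")


def validate_writer_parameter(parameter: dict) -> list:
    """Return a list of human-readable problems; an empty list means the check passed."""
    problems = [f"missing required item: {key}" for key in REQUIRED_KEYS if key not in parameter]
    for index, column in enumerate(parameter.get("table", [])):
        if "name" not in column:
            problems.append(f"table[{index}] has no name")
        if not str(column.get("type", "")).startswith("DT_"):
            problems.append(f"table[{index}] has an invalid type")
    return problems


parameter = {
    "dbPath": "dfs://TSDB_tick",
    "tableName": "tick",
    "userId": "admin",
    "pwd": "123456",
    "host": "127.0.0.1",
    "port": 8800,
    "table": [
        {"type": "DT_SYMBOL", "name": "SecurityID"},
        {"type": "DT_TIMESTAMP", "name": "TradeTime"},
    ],
}
print(validate_writer_parameter(parameter))
```

In practice the dictionary would come from loading the job file with `json.load` and reading the writer's "parameter" object.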

Complete code and test data

- DataX: OceanBase_tick.json

- DolphinDB: mysql插件导入数据.dos, createTable.dos

- Simulated data generation: genTickCsv.dos