Preface

This article uses tests to clarify how the group_replication_unreachable_majority_timeout and group_replication_member_expel_timeout parameters affect an MGR cluster during a network partition. The starting point is an overview diagram I drew years ago while learning MGR, which summarizes how these two parameters affect the cluster; that effect is the focus of the tests.

When running MGR, you will often see the error below, which roughly means: "Due to a network failure, a member was expelled from the MGR cluster, and the member's status was changed to ERROR." It occurs when heartbeat detection between the MGR nodes times out and the minority node is expelled.

2022-07-31T13:07:30.464239-00:00 0 [Warning] [MY-011498] [Repl] Plugin group_replication reported: 'The member has resumed contact with a majority of the members in the group. Regular operation is restored and transactions are unblocked.'

2022-07-31T13:07:37.458761-00:00 0 [ERROR] [MY-011505] [Repl] Plugin group_replication reported: 'Member was expelled from the group due to network failures, changing member status to ERROR.'

The two cases below analyze how the three parameters listed in the next section affect MGR during a network fault, and how MGR detects the fault.

Cluster topology: single-primary mode

Node   IP address    Hostname        Status
node1  172.16.86.61  mysqlwjhtest01  PRIMARY
node2  172.16.86.62  mysqlwjhtest02  SECONDARY
node3  172.16.86.63  mysqlwjhtest03  SECONDARY

This test mainly includes two steps:

- Simulate a network partition and see its impact on each node of the cluster.

- Restore the network connection and see how the nodes respond again.

1. The group_replication_unreachable_majority_timeout parameter is set to 0

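Three relevant test parameters

# Applies to versions 8.0.21 and later

group_replication_member_expel_timeout=30 # a suspected member is expelled from the cluster after 30 seconds

group_replication_unreachable_majority_timeout=0 # timeout for a minority member that cannot reach the majority after a network partition

group_replication_autorejoin_tries=3 # number of times a member in ERROR state tries to rejoin the cluster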

Case 1: Simulate secondary node network interruption

1.1 Simulate a network partition on node3 (currently a secondary node). Use iptables to cut the network connection between node3 and both node1 and node2.

iptables -A INPUT -p tcp -s 172.16.86.61 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.61 -j DROP

iptables -A INPUT -p tcp -s 172.16.86.62 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.62 -j DROP
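The four rules above follow one pattern per peer: drop inbound TCP from it and outbound TCP to it. As a minimal sketch (the helper name partition_rules is hypothetical and not part of MGR or iptables tooling), the same commands can be generated for any list of peers, together with the matching -D commands that remove only these rules:

```python
def partition_rules(peer_ips, action="-A"):
    """Return iptables commands that drop TCP traffic to and from each peer.

    action="-A" appends the rules (start the partition);
    action="-D" deletes the same rules (end the partition).
    """
    if action not in ("-A", "-D"):
        raise ValueError("action must be '-A' or '-D'")
    commands = []
    for ip in peer_ips:
        commands.append(f"iptables {action} INPUT -p tcp -s {ip} -j DROP")
        commands.append(f"iptables {action} OUTPUT -p tcp -d {ip} -j DROP")
    return commands


if __name__ == "__main__":
    # Peers of node3 in this test cluster: node1 and node2.
    for cmd in partition_rules(["172.16.86.61", "172.16.86.62"]):
        print(cmd)
```

The sketch only prints the commands, so it is safe to run anywhere; the output still has to be executed as root on the node being isolated.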

About 5 seconds after the commands run (the MGR heartbeat detection time, a fixed value that cannot be changed), each node starts to react.

- First check the logs and cluster status of the majority nodes (node1, node2). They show node3 as UNREACHABLE.

[Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'

root@localhost: 19:38: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

The log shows that 30 seconds after node3 is detected as unreachable (controlled by group_replication_member_expel_timeout=30), node3 is expelled from the cluster. The cluster view then contains only 2 nodes.

2023-05-24T19:38:39.413051+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'

2023-05-24T19:39:09.413491+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Try to push xcom_client_remove_node to XCom'

2023-05-24T19:39:09.468173+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-24T19:39:09.468299+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.61:13307'

2023-05-24T19:39:09.468320+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.62:13307'

2023-05-24T19:39:09.468336+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 29726 0}, boot key synode is {88e4dbe0 29715 0}, configured event horizon=10, my node identifier is 0'

2023-05-24T19:39:10.399352+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] A configuration change was detected. Sending a Global View Message to all nodes. My node identifier is 0 and my address is 172.16.86.61:13307'

2023-05-24T19:39:10.404044+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Group is able to support up to communication protocol version 8.0.27'

2023-05-24T19:39:10.404139+08:00 0 [Warning] [MY-011499] [Repl] Plugin group_replication reported: 'Members removed from the group: mysqlwjhtest03:3307'

2023-05-24T19:39:10.404219+08:00 0 [System] [MY-011503] [Repl] Plugin group_replication reported: 'Group membership changed to mysqlwjhtest02:3307, mysqlwjhtest01:3307 on view 16849117016333239:4.'

2023-05-24T19:39:20.951668+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Failure reading from fd=-1 n=18446744073709551615 from 172.16.86.63:13307'
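The expulsion delay can be read directly off the log timestamps. A minimal sketch, assuming log lines in the format shown above (the helper names parse_line and seconds_between are hypothetical), that extracts the timestamp and error code of each line and measures the time between the first MY-011493 (unreachable) entry and the first later MY-011499 (removed) entry:

```python
import re
from datetime import datetime

# Matches the start of an error-log line as shown in this article:
# "<ISO timestamp> <thread id> [<level>] [<code>] ..."
LOG_LINE = re.compile(r"^(\S+) \d+ \[(\w+)\] \[(MY-\d+)\]")


def parse_line(line):
    """Return (timestamp, error_code) for a log line, or None if it does not match."""
    match = LOG_LINE.match(line)
    if match is None:
        return None
    return datetime.fromisoformat(match.group(1)), match.group(3)


def seconds_between(lines, first_code, second_code):
    """Seconds from the first line with first_code to the first later line with second_code."""
    start = None
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        timestamp, code = parsed
        if start is None and code == first_code:
            start = timestamp
        elif start is not None and code == second_code:
            return (timestamp - start).total_seconds()
    return None


if __name__ == "__main__":
    sample = [
        "2023-05-24T19:38:39.413051+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'",
        "2023-05-24T19:39:10.404139+08:00 0 [Warning] [MY-011499] [Repl] Plugin group_replication reported: 'Members removed from the group: mysqlwjhtest03:3307'",
    ]
    print(seconds_between(sample, "MY-011493", "MY-011499"))
```

For the two sample lines taken from the log above, the result is about 31 seconds: the removal request is pushed to XCom 30 seconds after the unreachable warning, and the membership change is reported roughly one second later.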

Checking the cluster status on the majority nodes (node1, node2) now, node3 is no longer listed.

root@localhost: 20:02: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

2 rows in set (0.00 sec)

- Then check the log and cluster status of the minority node (node3). They show node1 and node2 as UNREACHABLE.

[Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 has become unreachable.'

[Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

[ERROR] [MY-011495] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked until contact with the majority is restored. It is possible to use group_replication_force_members to force a new group membership.'

Throughout the partition, node3's cluster status keeps showing node1 and node2 as UNREACHABLE. (This is because group_replication_unreachable_majority_timeout=0, as discussed later.)

root@localhost: 19:54: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | UNREACHABLE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

1.2 Restore network connection

Next, we try to restore the network between node3 and node1, node2.

iptables -F

- Check the log of the former minority node (node3). Heartbeat detection sees the network to node1 and node2 recover; node3 is then expelled and enters the ERROR state, auto-rejoin starts and the node joins the cluster again, GCS communication returns to normal, the XCom cache is applied, and CHANGE MASTER is updated for the applier channel.

2023-05-24T20:20:52.475071+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.61:13307'

2023-05-24T20:20:53.655956+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.62:13307'

2023-05-24T20:21:03.214971+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 is reachable again.'

2023-05-24T20:21:03.215075+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 is reachable again.'

2023-05-24T20:21:03.215100+08:00 0 [Warning] [MY-011498] [Repl] Plugin group_replication reported: 'The member has resumed contact with a majority of the members in the group. Regular operation is restored and transactions are unblocked.'

2023-05-24T20:21:19.209028+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] A configuration change was detected. Sending a Global View Message to all nodes. My node identifier is 2 and my address is 172.16.86.63:13307'

2023-05-24T20:21:20.208934+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 has become unreachable.'

2023-05-24T20:21:20.209098+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

2023-05-24T20:21:20.209131+08:00 0 [ERROR] [MY-011495] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked until contact with the majority is restored. It is possible to use group_replication_force_members to force a new group membership.'

2023-05-24T20:21:23.097685+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 is reachable again.'

2023-05-24T20:21:23.097871+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 is reachable again.'

2023-05-24T20:21:23.097916+08:00 0 [Warning] [MY-011498] [Repl] Plugin group_replication reported: 'The member has resumed contact with a majority of the members in the group. Regular operation is restored and transactions are unblocked.'

2023-05-24T20:21:23.107732+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-24T20:21:23.107836+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.61:13307'

2023-05-24T20:21:23.107854+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.62:13307'

2023-05-24T20:21:23.107872+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 29726 0}, boot key synode is {88e4dbe0 29715 0}, configured event horizon=10, my node identifier is 4294967295'

2023-05-24T20:21:26.125137+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sending a request to a remote XCom failed. Please check the remote node log for more details.'

2023-05-24T20:21:26.125323+08:00 0 [ERROR] [MY-011505] [Repl] Plugin group_replication reported: 'Member was expelled from the group due to network failures, changing member status to ERROR.'

2023-05-24T20:21:26.125534+08:00 0 [ERROR] [MY-011712] [Repl] Plugin group_replication reported: 'The server was automatically set into read only mode after an error was detected.'

2023-05-24T20:21:26.126663+08:00 5344 [System] [MY-013373] [Repl] Plugin group_replication reported: 'Started auto-rejoin procedure attempt 1 of 3'

2023-05-24T20:21:26.127111+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Try to push xcom_client_remove_node to XCom'

2023-05-24T20:21:26.127169+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Failed to push into XCom.'

2023-05-24T20:21:26.291624+08:00 0 [System] [MY-011504] [Repl] Plugin group_replication reported: 'Group membership changed: This member has left the group.'

2023-05-24T20:21:26.293673+08:00 13 [Note] [MY-010596] [Repl] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

2023-05-24T20:21:26.294631+08:00 13 [Note] [MY-010587] [Repl] Slave SQL thread for channel 'group_replication_applier' exiting, replication stopped in log 'FIRST' at position 0

2023-05-24T20:21:26.294877+08:00 11 [Note] [MY-011444] [Repl] Plugin group_replication reported: 'The group replication applier thread was killed.'

2023-05-24T20:21:26.296001+08:00 5344 [Note] [MY-011673] [Repl] Plugin group_replication reported: 'Group communication SSL configuration: group_replication_ssl_mode: "DISABLED"'

2023-05-24T20:21:26.299638+08:00 5344 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Debug messages will be sent to: asynchronous::/data/mysql/data/GCS_DEBUG_TRACE'

2023-05-24T20:21:26.300015+08:00 5344 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv4 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-24T20:21:26.300062+08:00 5344 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv6 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-24T20:21:26.300132+08:00 5344 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] SSL was not enabled'

2023-05-24T20:21:26.301794+08:00 5346 [System] [MY-010597] [Repl] 'CHANGE MASTER TO FOR CHANNEL 'group_replication_applier' executed'. Previous state master_host='<NULL>', master_port= 0, master_log_file='', master_log_pos= 3942, master_bind=''. New state master_host='<NULL>', master_port= 0, master_log_file='', master_log_pos= 4, master_bind=''.

2023-05-24T20:21:26.331516+08:00 5344 [Note] [MY-011670] [Repl] Plugin group_replication reported: 'Group Replication applier module successfully initialized!'

2023-05-24T20:21:26.331583+08:00 5348 [Note] [MY-010581] [Repl] Slave SQL thread for channel 'group_replication_applier' initialized, starting replication in log 'FIRST' at position 0, relay log '/data/mysql/relaylog/mysql-relay-bin-group_replication_applier.000002' position: 4213

2023-05-24T20:21:26.473164+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using XCom as Communication Stack for XCom'

2023-05-24T20:21:26.473594+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] XCom initialized and ready to accept incoming connections on port 13307'

2023-05-24T20:21:26.473792+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Successfully connected to the local XCom via anonymous pipe'

2023-05-24T20:21:26.474619+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] TCP_NODELAY already set'

2023-05-24T20:21:26.474690+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully connected to peer 172.16.86.61:13307. Sending a request to be added to the group'

Checking the cluster status on the former minority node (node3) now, everything is back to normal.

root@localhost: 20:20: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

- Check the log of the former majority nodes (node1, node2). In short, they re-establish the connection with node3 and then restore the cluster.

2023-05-24T20:21:26.124082+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Node has already been removed: 172.16.86.63:13307 16849122691765501.'

2023-05-24T20:21:26.522275+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Adding new node to the configuration: 172.16.86.63:13307'

2023-05-24T20:21:26.522513+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-24T20:21:26.522574+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.61:13307'

2023-05-24T20:21:26.522609+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.62:13307'

2023-05-24T20:21:26.522624+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 2 host 172.16.86.63:13307'

2023-05-24T20:21:26.522641+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 32296 0}, boot key synode is {88e4dbe0 32285 0}, configured event horizon=10, my node identifier is 0'

2023-05-24T20:21:26.522899+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sender task disconnected from 172.16.86.63:13307'

2023-05-24T20:21:26.522931+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connecting to 172.16.86.63:13307'

2023-05-24T20:21:26.523349+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.63:13307'

2023-05-24T20:21:27.452375+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] A configuration change was detected. Sending a Global View Message to all nodes. My node identifier is 0 and my address is 172.16.86.61:13307'

2023-05-24T20:21:27.453687+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Received an XCom snapshot request from 172.16.86.63:13307'

The case above shows how the MGR cluster changes when a MySQL 8.0 MGR secondary node suffers a network failure. Once the network is restored, the cluster recovers on its own, and the whole process requires no DBA intervention.

Case 2: Simulating a primary node network interruption

2.1 Next, simulate a network partition on node1 (currently the primary node). Use iptables to cut the network connection between node1 and both node2 and node3.

iptables -A INPUT -p tcp -s 172.16.86.62 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.62 -j DROP

iptables -A INPUT -p tcp -s 172.16.86.63 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.63 -j DROP

date

Sat May 27 09:29:46 CST 2023

About 5 seconds after the commands run, each node starts to react.

- First, check the logs and cluster status of the majority nodes (node2, node3).

The failure of the primary node triggers a primary switchover. Here is the timeline in detail:

- 09:29:46 Network outage

- 09:29:51 Heartbeat times out, 5 seconds after the outage

- 09:30:21 node3 is promoted to primary, 35 seconds after the outage (5 s heartbeat detection + 30 s group_replication_member_expel_timeout)
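The timeline is plain addition: the fixed 5-second heartbeat detection, followed by group_replication_member_expel_timeout. A minimal sketch (the function and constant names are hypothetical; the 5-second value is the fixed detection time stated earlier in this article) that projects when the majority should expel an unreachable member:

```python
from datetime import datetime, timedelta

# Fixed heartbeat detection time described in this article (not configurable).
HEARTBEAT_DETECTION_SECONDS = 5


def projected_timeline(outage_start, member_expel_timeout):
    """Return (suspected_at, expelled_at) for a member that becomes unreachable.

    member_expel_timeout is the value of
    group_replication_member_expel_timeout in seconds.
    """
    suspected_at = outage_start + timedelta(seconds=HEARTBEAT_DETECTION_SECONDS)
    expelled_at = suspected_at + timedelta(seconds=member_expel_timeout)
    return suspected_at, expelled_at


if __name__ == "__main__":
    outage = datetime(2023, 5, 27, 9, 29, 46)
    suspected, expelled = projected_timeline(outage, member_expel_timeout=30)
    print(suspected.time(), expelled.time())
```

With the values from this test the projection gives 09:29:51 and 09:30:21, which agrees with the timestamps in the majority-side log to within a second.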

2023-05-27T09:29:51.229977+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 has become unreachable.'

2023-05-27T09:30:20.947030+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T09:30:20.947163+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.62:13307'

2023-05-27T09:30:20.947191+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.63:13307'

2023-05-27T09:30:20.947217+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 309739 1}, boot key synode is {88e4dbe0 309728 1}, configured event horizon=10, my node identifier is 1'

2023-05-27T09:30:21.689907+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Group is able to support up to communication protocol version 8.0.27'

2023-05-27T09:30:21.689994+08:00 0 [Warning] [MY-011499] [Repl] Plugin group_replication reported: 'Members removed from the group: mysqlwjhtest01:3307'

2023-05-27T09:30:21.690006+08:00 0 [System] [MY-011500] [Repl] Plugin group_replication reported: 'Primary server with address mysqlwjhtest01:3307 left the group. Electing new Primary.'

2023-05-27T09:30:21.690097+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member gtid_executed: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-16'

2023-05-27T09:30:21.690108+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member applier channel received_transaction_set: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-16'

2023-05-27T09:30:21.690123+08:00 0 [System] [MY-011507] [Repl] Plugin group_replication reported: 'A new primary with address mysqlwjhtest03:3307 was elected. The new primary will execute all previous group transactions before allowing writes.'

2023-05-27T09:30:21.690509+08:00 0 [System] [MY-011503] [Repl] Plugin group_replication reported: 'Group membership changed to mysqlwjhtest03:3307, mysqlwjhtest02:3307 on view 16849117016333239:10.'

2023-05-27T09:30:21.691815+08:00 6189 [System] [MY-013731] [Repl] Plugin group_replication reported: 'The member action "mysql_disable_super_read_only_if_primary" for event "AFTER_PRIMARY_ELECTION" with priority "1" will be run.'

2023-05-27T09:30:21.692135+08:00 6189 [System] [MY-011566] [Repl] Plugin group_replication reported: 'Setting super_read_only=OFF.'

2023-05-27T09:30:21.692186+08:00 6189 [System] [MY-013731] [Repl] Plugin group_replication reported: 'The member action "mysql_start_failover_channels_if_primary" for event "AFTER_PRIMARY_ELECTION" with priority "10" will be run.'

2023-05-27T09:30:21.693182+08:00 6181 [Note] [MY-011485] [Repl] Plugin group_replication reported: 'Primary had applied all relay logs, disabled conflict detection.'

2023-05-27T09:30:21.693200+08:00 68187 [System] [MY-011510] [Repl] Plugin group_replication reported: 'This server is working as primary member.'

2023-05-27T09:30:31.213720+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Failure reading from fd=-1 n=18446744073709551615 from 172.16.86.61:13307'

The cluster status on the majority nodes (node2, node3) shows that node3 has been elected as the new primary.

# Status shown on node2 and node3

root@localhost: 09:30: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ONLINE | PRIMARY | 8.0.28 |

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

2 rows in set (0.01 sec)

- Check the minority node (node1) log.

node1 logs less information. It shows that writes are blocked once heartbeat detection times out, but it does not say how long the block will last.

2023-05-27T09:29:51.277851+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

2023-05-27T09:29:51.277990+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'

2023-05-27T09:29:51.278007+08:00 0 [ERROR] [MY-011495] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked until contact with the majority is restored. It is possible to use group_replication_force_members to force a new group membership.'

Now look at the cluster status on node1. After the network partition, the minority node (node1) still shows itself as ONLINE and PRIMARY.

# Status shown on node1

root@localhost: 09:30: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

Comparing this with the majority side shows that, after the network partition, both node1 and node3 report themselves as ONLINE and PRIMARY. The minority node (node1) blocks all updates, however, so it is effectively read-only. If the MGR VIP switchover check relies only on the ONLINE/PRIMARY state, it will run into problems here.
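One way to avoid that problem is to require a reachable majority in addition to the ONLINE/PRIMARY row. A minimal sketch, assuming the check receives the rows of performance_schema.replication_group_members as seen from the local node, as (MEMBER_HOST, MEMBER_STATE, MEMBER_ROLE) tuples; the function name and the way the rows are fetched are hypothetical and not part of any VIP tool:

```python
def is_writable_primary(rows, local_host):
    """Return True only if local_host is ONLINE PRIMARY and sees a majority ONLINE.

    rows: iterable of (member_host, member_state, member_role) tuples taken
    from performance_schema.replication_group_members on the local node.
    """
    rows = list(rows)
    online = [r for r in rows if r[1] == "ONLINE"]
    # A majority means strictly more than half of the members in the local view.
    has_majority = len(online) > len(rows) / 2
    local_is_primary = any(
        host == local_host and state == "ONLINE" and role == "PRIMARY"
        for host, state, role in rows
    )
    return local_is_primary and has_majority


if __name__ == "__main__":
    # View from node1 (isolated) during the partition in this test.
    node1_view = [
        ("mysqlwjhtest03", "UNREACHABLE", "SECONDARY"),
        ("mysqlwjhtest02", "UNREACHABLE", "SECONDARY"),
        ("mysqlwjhtest01", "ONLINE", "PRIMARY"),
    ]
    # View from node3 (majority side) after the election.
    node3_view = [
        ("mysqlwjhtest03", "ONLINE", "PRIMARY"),
        ("mysqlwjhtest02", "ONLINE", "SECONDARY"),
    ]
    print(is_writable_primary(node1_view, "mysqlwjhtest01"))
    print(is_writable_primary(node3_view, "mysqlwjhtest03"))
```

With the two views from this test, the check rejects node1 (one of three members ONLINE) and accepts node3 (two of two ONLINE), so only the majority-side primary would be treated as writable.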

When we then tried to write data on node1, the statement blocked. After the partition node1 is alone in the minority, so it cannot satisfy the majority rule of group replication; this is the expected behavior.

root@localhost: 10:10: [(none)]> create database db1;

...... (hangs here indefinitely)

2.2 Restoring network connection

Next, we try to restore the network between node1 and node2, node3.

iptables -F

- Compare and view the cluster status changes on the minority node (node1) and the majority node (node2, node3).

Something interesting shows up here. First, every UNREACHABLE member changes back to ONLINE. At that moment, however, node1's view of the cluster still shows node1 as ONLINE and PRIMARY; two or three seconds later, the primary in node1's view changes from node1 to node3. According to the log, after the network recovers the members go from UNREACHABLE to ONLINE; then, because the XCom configuration has changed, node1 reports that it was expelled, enters the ERROR state, and rejoins the cluster. The following cluster status changes were observed on the former minority node (node1):

# First, right after the network is restored, the primary is node1

root@localhost: 10:17: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | PRIMARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

# After about two or three seconds, the primary changes to node3.

root@localhost: 10:24: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ONLINE | PRIMARY | 8.0.28 |

| mysqlwjhtest02 | ONLINE | SECONDARY | 8.0.28 |

| mysqlwjhtest01 | ONLINE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

Log of the former minority node (node1) during network recovery:

2023-05-27T10:17:07.490608+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.63:13307'

2023-05-27T10:17:08.491033+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.62:13307'

2023-05-27T10:17:08.586063+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] A configuration change was detected. Sending a Global View Message to all nodes. My node identifier is 0 and my address is 172.16.86.61:13307'

2023-05-27T10:17:08.586486+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 is reachable again.'

2023-05-27T10:17:08.586546+08:00 0 [Warning] [MY-011498] [Repl] Plugin group_replication reported: 'The member has resumed contact with a majority of the members in the group. Regular operation is restored and transactions are unblocked.'

2023-05-27T10:17:09.202537+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 is reachable again.'

2023-05-27T10:17:20.197898+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

2023-05-27T10:17:20.198008+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'

2023-05-27T10:17:20.198026+08:00 0 [ERROR] [MY-011495] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked until contact with the majority is restored. It is possible to use group_replication_force_members to force a new group membership.'

2023-05-27T10:17:20.815474+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] A configuration change was detected. Sending a Global View Message to all nodes. My node identifier is 0 and my address is 172.16.86.61:13307'

2023-05-27T10:17:20.815730+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 is reachable again.'

2023-05-27T10:17:38.361435+08:00 0 [Warning] [MY-011498] [Repl] Plugin group_replication reported: 'The member has resumed contact with a majority of the members in the group. Regular operation is restored and transactions are unblocked.'

2023-05-27T10:17:38.361659+08:00 0 [Warning] [MY-011494] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 is reachable again.'

2023-05-27T10:17:40.489269+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T10:17:40.489357+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.62:13307'

2023-05-27T10:17:40.489370+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.63:13307'

2023-05-27T10:17:40.489382+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 309739 1}, boot key synode is {88e4dbe0 309728 1}, configured event horizon=10, my node identifier is 4294967295'

2023-05-27T10:17:43.526534+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sending a request to a remote XCom failed. Please check the remote node log for more details.'

2023-05-27T10:17:43.526695+08:00 0 [ERROR] [MY-011505] [Repl] Plugin group_replication reported: 'Member was expelled from the group due to network failures, changing member status to ERROR.'

2023-05-27T10:17:43.526856+08:00 0 [Warning] [MY-011630] [Repl] Plugin group_replication reported: 'Due to a plugin error, some transactions were unable to be certified and will now rollback.'

2023-05-27T10:17:43.526929+08:00 0 [ERROR] [MY-011712] [Repl] Plugin group_replication reported: 'The server was automatically set into read only mode after an error was detected.'

2023-05-27T10:17:43.527329+08:00 53521 [ERROR] [MY-011615] [Repl] Plugin group_replication reported: 'Error while waiting for conflict detection procedure to finish on session 53521'

2023-05-27T10:17:43.527405+08:00 53521 [ERROR] [MY-010207] [Repl] Run function 'before_commit' in plugin 'group_replication' failed

2023-05-27T10:17:43.533122+08:00 53521 [Note] [MY-010914] [Server] Aborted connection 53521 to db: 'unconnected' user: 'root' host: 'localhost' (Error on observer while running replication hook 'before_commit').

2023-05-27T10:17:43.533134+08:00 53627 [System] [MY-011565] [Repl] Plugin group_replication reported: 'Setting super_read_only=ON.'

2023-05-27T10:17:43.533523+08:00 53628 [System] [MY-013373] [Repl] Plugin group_replication reported: 'Started auto-rejoin procedure attempt 1 of 3'

2023-05-27T10:17:43.533650+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Try to push xcom_client_remove_node to XCom'

2023-05-27T10:17:43.533682+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Failed to push into XCom.'

2023-05-27T10:17:44.837043+08:00 0 [System] [MY-011504] [Repl] Plugin group_replication reported: 'Group membership changed: This member has left the group.'

2023-05-27T10:17:44.838114+08:00 13 [Note] [MY-010596] [Repl] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

2023-05-27T10:17:44.838433+08:00 13 [Note] [MY-010587] [Repl] Slave SQL thread for channel 'group_replication_applier' exiting, replication stopped in log 'FIRST' at position 0

2023-05-27T10:17:44.838544+08:00 11 [Note] [MY-011444] [Repl] Plugin group_replication reported: 'The group replication applier thread was killed.'

2023-05-27T10:17:44.839024+08:00 53628 [Note] [MY-011673] [Repl] Plugin group_replication reported: 'Group communication SSL configuration: group_replication_ssl_mode: "DISABLED"'

2023-05-27T10:17:44.840989+08:00 53628 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Debug messages will be sent to: asynchronous::/data/mysql/data/GCS_DEBUG_TRACE'

2023-05-27T10:17:44.841282+08:00 53628 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv4 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-27T10:17:44.841313+08:00 53628 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv6 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-27T10:17:44.841357+08:00 53628 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] SSL was not enabled'

2023-05-27T10:17:44.842115+08:00 53630 [System] [MY-010597] [Repl] 'CHANGE MASTER TO FOR CHANNEL 'group_replication_applier' executed'. Previous state master_host='<NULL>', master_port= 0, master_log_file='', master_log_pos= 2046, master_bind=''. New state master_host='<NULL>', master_port= 0, master_log_file='', master_log_pos= 4, master_bind=''.

2023-05-27T10:17:44.860923+08:00 53628 [Note] [MY-011670] [Repl] Plugin group_replication reported: 'Group Replication applier module successfully initialized!'

2023-05-27T10:17:44.861050+08:00 53632 [Note] [MY-010581] [Repl] Slave SQL thread for channel 'group_replication_applier' initialized, starting replication in log 'FIRST' at position 0, relay log '/data/mysql/relaylog/mysql-relay-bin-group_replication_applier.000002' position: 2317

2023-05-27T10:17:45.006131+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using XCom as Communication Stack for XCom'

2023-05-27T10:17:45.006450+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] XCom initialized and ready to accept incoming connections on port 13307'

2023-05-27T10:17:45.006546+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Successfully connected to the local XCom via anonymous pipe'

2023-05-27T10:17:45.007262+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] TCP_NODELAY already set'

2023-05-27T10:17:45.007298+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully connected to peer 172.16.86.62:13307. Sending a request to be added to the group'

2023-05-27T10:17:45.007314+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sending add_node request to a peer XCom node'

2023-05-27T10:17:47.166146+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Node has not booted. Requesting an XCom snapshot from node number 1 in the current configuration'

2023-05-27T10:17:47.167161+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Installing requested snapshot. Importing all incoming configurations.'

2023-05-27T10:17:47.167358+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T10:17:47.167393+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Creating new server node 0 host 172.16.86.61:13307'

2023-05-27T10:17:47.167502+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Creating new server node 1 host 172.16.86.62:13307'

2023-05-27T10:17:47.167613+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 36985 0}, boot key synode is {88e4dbe0 36974 0}, configured event horizon=10, my node identifier is 0'

2023-05-27T10:17:47.167699+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T10:17:47.167723+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.61:13307'

2023-05-27T10:17:47.167747+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.62:13307'

2023-05-27T10:17:47.167761+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Creating new server node 2 host 172.16.86.63:13307'

2023-05-27T10:17:47.167866+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 37375 0}, boot key synode is {88e4dbe0 37364 0}, configured event horizon=10, my node identifier is 0'

2023-05-27T10:17:47.167964+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T10:17:47.167980+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.62:13307'

2023-05-27T10:17:47.167993+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.63:13307'

2023-05-27T10:17:47.168009+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 309739 1}, boot key synode is {88e4dbe0 309728 1}, configured event horizon=10, my node identifier is 4294967295'

2023-05-27T10:17:47.168089+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Updating physical connections to other servers'

2023-05-27T10:17:47.168104+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 0 host 172.16.86.62:13307'

2023-05-27T10:17:47.168117+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 1 host 172.16.86.63:13307'

2023-05-27T10:17:47.168131+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Using existing server node 2 host 172.16.86.61:13307'

2023-05-27T10:17:47.168146+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Sucessfully installed new site definition. Start synode for this configuration is {88e4dbe0 312618 0}, boot key synode is {88e4dbe0 312607 0}, configured event horizon=10, my node identifier is 2'

2023-05-27T10:17:47.168161+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Finished snapshot installation. My node number is 2'

2023-05-27T10:17:47.168269+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] The member has joined the group. Local port: 13307'

2023-05-27T10:17:47.168610+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connecting to 172.16.86.62:13307'

2023-05-27T10:17:47.168910+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.62:13307'

2023-05-27T10:17:47.168950+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connecting to 172.16.86.63:13307'

2023-05-27T10:17:47.169306+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Connected to 172.16.86.63:13307'

2023-05-27T10:17:47.658374+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] This server adjusted its communication protocol to 8.0.27 in order to join the group.'

2023-05-27T10:17:47.658436+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Group is able to support up to communication protocol version 8.0.27'

2023-05-27T10:17:47.660220+08:00 53628 [System] [MY-013375] [Repl] Plugin group_replication reported: 'Auto-rejoin procedure attempt 1 of 3 finished. Member was able to join the group.'

2023-05-27T10:17:48.661179+08:00 0 [System] [MY-013471] [Repl] Plugin group_replication reported: 'Distributed recovery will transfer data using: Incremental recovery from a group donor'

2023-05-27T10:17:48.661739+08:00 53647 [Note] [MY-011576] [Repl] Plugin group_replication reported: 'Establishing group recovery connection with a possible donor. Attempt 1/10'

2023-05-27T10:17:48.661780+08:00 0 [System] [MY-011503] [Repl] Plugin group_replication reported: 'Group membership changed to mysqlwjhtest03:3307, mysqlwjhtest02:3307, mysqlwjhtest01:3307 on view 16849117016333239:11.'

2023-05-27T10:17:48.693433+08:00 53647 [System] [MY-010597] [Repl] 'CHANGE MASTER TO FOR CHANNEL 'group_replication_recovery' executed'. Previous state master_host='', master_port= 3306, master_log_file='', master_log_pos= 4, master_bind=''. New state master_host='mysqlwjhtest02', master_port= 3307, master_log_file='', master_log_pos= 4, master_bind=''.

2023-05-27T10:17:48.727090+08:00 53647 [Note] [MY-011580] [Repl] Plugin group_replication reported: 'Establishing connection to a group replication recovery donor 62a5b927-fa01-11ed-82ce-0050569c05d6 at mysqlwjhtest02 port: 3307.'

2023-05-27T10:17:48.727967+08:00 53648 [Warning] [MY-010897] [Repl] Storing MySQL user name or password information in the master info repository is not secure and is therefore not recommended. Please consider using the USER and PASSWORD connection options for START SLAVE; see the 'START SLAVE Syntax' in the MySQL Manual for more information.

2023-05-27T10:17:48.730254+08:00 53648 [System] [MY-010562] [Repl] Slave I/O thread for channel 'group_replication_recovery': connected to master 'repl@mysqlwjhtest02:3307',replication started in log 'FIRST' at position 4

2023-05-27T10:17:48.733307+08:00 53649 [Note] [MY-010581] [Repl] Slave SQL thread for channel 'group_replication_recovery' initialized, starting replication in log 'FIRST' at position 0, relay log '/data/mysql/relaylog/mysql-relay-bin-group_replication_recovery.000001' position: 4

2023-05-27T10:17:48.757900+08:00 53647 [Note] [MY-011585] [Repl] Plugin group_replication reported: 'Terminating existing group replication donor connection and purging the corresponding logs.'

2023-05-27T10:17:48.758896+08:00 53649 [Note] [MY-010587] [Repl] Slave SQL thread for channel 'group_replication_recovery' exiting, replication stopped in log 'mysql-bin.000001' at position 6217

2023-05-27T10:17:48.759258+08:00 53648 [Note] [MY-011026] [Repl] Slave I/O thread killed while reading event for channel 'group_replication_recovery'.

2023-05-27T10:17:48.759324+08:00 53648 [Note] [MY-010570] [Repl] Slave I/O thread exiting for channel 'group_replication_recovery', read up to log 'mysql-bin.000001', position 6217

2023-05-27T10:17:48.796509+08:00 53647 [System] [MY-010597] [Repl] 'CHANGE MASTER TO FOR CHANNEL 'group_replication_recovery' executed'. Previous state master_host='mysqlwjhtest02', master_port= 3307, master_log_file='', master_log_pos= 4, master_bind=''. New state master_host='<NULL>', master_port= 0, master_log_file='', master_log_pos= 4, master_bind=''.

2023-05-27T10:17:48.831253+08:00 0 [System] [MY-011490] [Repl] Plugin group_replication reported: 'This server was declared online within the replication group.'

2023-05-27T10:29:27.705642+08:00 53768 [Note] [MY-013730] [Server] 'wait_timeout' period of 300 seconds was exceeded for `root`@`localhost`. The idle time since last command was too long.

2023-05-27T10:29:27.705830+08:00 53768 [Note] [MY-010914] [Server] Aborted connection 53768 to db: 'unconnected' user: 'root' host: 'localhost' (The client was disconnected by the server because of inactivity.).

In both cases above, group_replication_unreachable_majority_timeout is set to 0, so once heartbeat detection times out, the suspect node is only marked as unreachable. Because group_replication_member_expel_timeout is set to 30 seconds, the majority partition expels the suspect node 35 seconds after the network outage begins. In the minority partition, the suspect nodes stay in the unreachable state indefinitely.

2. The impact of the group_replication_unreachable_majority_timeout parameter on the cluster

To make the behavior easy to observe, the parameter is set to 80 seconds here, and the steps of Case 2 are repeated.

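group_replication_member_expel_timeout=30 #Unchanged from the cases above: 30 seconds

group_replication_unreachable_majority_timeout=80 #Set to 80 seconds

Note: group_replication_unreachable_majority_timeout is the timeout for the majority being unreachable. In other words, after a network partition it only takes effect on the minority nodes.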

At this point the primary is node3, so we cut the network between node3 and the other two nodes.

iptables -A INPUT -p tcp -s 172.16.86.62 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.62 -j DROP

iptables -A INPUT -p tcp -s 172.16.86.61 -j DROP

iptables -A OUTPUT -p tcp -d 172.16.86.61 -j DROP

date

#Restore the network: iptables -F

Observe the cluster status of each node during the network failure of the master node

- 13:15:56 Simulated network outage

- 13:16:01 At 5 seconds, heartbeat detection notices the network interruption. The minority node (node3) logs that all updates will be blocked for 85 seconds and marks the other two nodes as unreachable. Meanwhile, the majority nodes (node1, node2) mark node3 as unreachable in their cluster view.

- 13:16:31 At 35 seconds, the logs of the majority nodes (node1, node2) show that node3 is expelled from the cluster and a new primary is elected at the same time. The minority node has no log output. At this point the minority node (node3) still sees itself as the primary in its own view (but all updates are blocked).

- 13:17:26 At 85 seconds, the minority node (node3) changes its own status in the cluster view from online primary to error. The majority nodes have no log output.

- 13:17:56 At 115 seconds, the minority node (node3) updates its cluster status view and removes node1 and node2 from it.

After 5 seconds, heartbeat detection notices the network interruption; all nodes detect that their peers are unreachable and update their cluster status view.

#Minority node (node3)

2023-05-27T13:16:01.716803+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 has become unreachable.'

2023-05-27T13:16:01.716934+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

2023-05-27T13:16:01.716951+08:00 0 [ERROR] [MY-011496] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked for the next 85 seconds. Unless contact with the majority is restored, after this time the member will error out and leave the group. It is possible to use group_replication_force_members to force a new group membership.'

2023-05-27T13:17:26.724015+08:00 71231 [ERROR] [MY-011711] [Repl] Plugin group_replication reported: 'This member could not reach a majority of the members for more than 85 seconds. The member will now leave the group as instructed by the group_replication_unreachable_majority_timeout option.'

#Majority nodes (node1, node2)

2023-05-27T13:16:01.719345+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'
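As a quick check of the 85-second figure, the gap between the two node3 log entries above (MY-011496 and MY-011711) can be computed from their timestamps. This is a minimal Python sketch; the helper name `seconds_between` is made up for illustration.

```python
from datetime import datetime

def seconds_between(start: str, end: str) -> float:
    """Return the elapsed seconds between two MySQL error-log timestamps."""
    # The log timestamps are ISO 8601 with microseconds and a UTC offset,
    # which datetime.fromisoformat parses directly.
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds()

# Timestamps copied from the node3 log entries above.
blocked_at = "2023-05-27T13:16:01.716951+08:00"  # MY-011496: updates blocked
left_at = "2023-05-27T13:17:26.724015+08:00"     # MY-011711: member leaves the group

elapsed = seconds_between(blocked_at, left_at)
print(f"{elapsed:.3f} s")
```

The same helper can be pointed at any other pair of log lines, for example the unreachable warning and the expel message on the majority nodes.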

After 35 seconds, the majority nodes (node1, node2) expel node3 from the cluster, elect node2 as the new primary, and update their status view. The failed node (node3) has no log output.

2023-05-27T13:16:32.706137+08:00 0 [Warning] [MY-011499] [Repl] Plugin group_replication reported: 'Members removed from the group: mysqlwjhtest03:3307'

2023-05-27T13:16:32.706150+08:00 0 [System] [MY-011500] [Repl] Plugin group_replication reported: 'Primary server with address mysqlwjhtest03:3307 left the group. Electing new Primary.'

2023-05-27T13:16:32.706259+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member gtid_executed: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-19'

2023-05-27T13:16:32.706272+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member applier channel received_transaction_set: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-19'

2023-05-27T13:16:32.706284+08:00 0 [System] [MY-011507] [Repl] Plugin group_replication reported: 'A new primary with address mysqlwjhtest02:3307 was elected. The new primary will execute all previous group transactions before allowing writes.'

After 85 seconds, the minority node (node3) changes its status from online to error. Majority nodes have no log output.

2023-05-27T13:17:26.724015+08:00 71231 [ERROR] [MY-011711] [Repl] Plugin group_replication reported: 'This member could not reach a majority of the members for more than 85 seconds. The member will now leave the group as instructed by the group_replication_unreachable_majority_timeout option.'

2023-05-27T13:17:26.724483+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Try to push xcom_client_remove_node to XCom'

2023-05-27T13:17:26.724560+08:00 71231 [ERROR] [MY-011712] [Repl] Plugin group_replication reported: 'The server was automatically set into read only mode after an error was detected.'

2023-05-27T13:17:26.725179+08:00 71231 [System] [MY-011565] [Repl] Plugin group_replication reported: 'Setting super_read_only=ON.'

2023-05-27T13:17:26.725340+08:00 71231 [Note] [MY-011647] [Repl] Plugin group_replication reported: 'Going to wait for view modification'

After 115 seconds, the minority node (node3) removes node1 and node2 from its own cluster status view, and auto-rejoin then tries to rejoin the cluster (if the network has not been restored between 85s and 115s, the rejoin attempt fails).

2023-05-27T13:17:56.296998+08:00 0 [ERROR] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Timeout while waiting for the group communication engine to exit!'

2023-05-27T13:17:56.297075+08:00 0 [ERROR] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] The member has failed to gracefully leave the group.'

2023-05-27T13:17:56.396287+08:00 0 [System] [MY-011504] [Repl] Plugin group_replication reported: 'Group membership changed: This member has left the group.'

2023-05-27T13:17:56.396530+08:00 72720 [System] [MY-013373] [Repl] Plugin group_replication reported: 'Started auto-rejoin procedure attempt 1 of 3'

2023-05-27T13:17:56.397353+08:00 71913 [Note] [MY-010596] [Repl] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

2023-05-27T13:17:56.397914+08:00 71913 [Note] [MY-010587] [Repl] Slave SQL thread for channel 'group_replication_applier' exiting, replication stopped in log 'FIRST' at position 0

2023-05-27T13:17:56.398188+08:00 71911 [Note] [MY-011444] [Repl] Plugin group_replication reported: 'The group replication applier thread was killed.'

2023-05-27T13:17:56.398830+08:00 72720 [Note] [MY-011673] [Repl] Plugin group_replication reported: 'Group communication SSL configuration: group_replication_ssl_mode: "DISABLED"'

2023-05-27T13:17:56.400717+08:00 72720 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Debug messages will be sent to: asynchronous::/data/mysql/data/GCS_DEBUG_TRACE'

2023-05-27T13:17:56.400984+08:00 72720 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv4 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-27T13:17:56.401014+08:00 72720 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv6 localhost address to the allowlist. It is mandatory that it is added.'

Minority node (node3) cluster view changes

#After 5 seconds

root@localhost: 14:05: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest01 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest03 | ONLINE | PRIMARY | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

#After 85 seconds

root@localhost: 14:07: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest01 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest03 | ERROR | | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

#After 115 seconds

root@localhost: 14:08: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ERROR | | 8.0.28 |

+----------------+--------------+-------------+----------------+

1 row in set (0.01 sec)

Analysis of test results

Scenario 1: Secondary node fails

- After 5 seconds of network interruption, heartbeat detection times out and the minority node marks the two lost nodes as unreachable. The majority nodes likewise mark their lost peer as unreachable.

- After 35 seconds, the majority nodes expel the suspect node from the cluster.

- After 85 seconds, the minority node (the faulty node) changes its own status to error. (If the network recovers before 85 seconds, the cluster recovers automatically.)

- After 115 seconds, the minority node (the faulty node) removes the two disconnected nodes from its own view of the cluster. (If the network recovers between 85 and 115 seconds, mgr still removes the suspect nodes at 115 seconds first; auto-rejoin then tries to bring the error node back into the cluster, and the rejoin succeeds.)

Scenario 2: Primary node fails

- After 5 seconds of network interruption, heartbeat detection times out and the minority node marks the two lost nodes as unreachable. The majority nodes likewise mark their lost peer as unreachable.

- After 35 seconds, the majority nodes expel the suspect node from the cluster and elect a new primary at the same time.

- After 85 seconds, the minority node (the faulty node) changes its own status to error. (If the network recovers before 85 seconds, the cluster recovers automatically.)

- After 115 seconds, the minority node (the faulty node) removes the two disconnected nodes from its own view of the cluster. (If the network recovers between 85 and 115 seconds, mgr still removes the suspect nodes at 115 seconds first; auto-rejoin then tries to bring the error node back into the cluster, and the rejoin succeeds.)

How the time points are calculated

- 35 seconds = heartbeat detection (5s) + group_replication_member_expel_timeout (30s)

- 85 seconds = heartbeat detection (5s) + group_replication_unreachable_majority_timeout (80s)

- 115 seconds = heartbeat detection (5s) + group_replication_unreachable_majority_timeout (80s) + group_replication_member_expel_timeout (30s)
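The arithmetic above can be sketched as a small Python function. It only illustrates the timings observed in this test, not how MGR computes them internally. The function name `partition_timeline`, its dictionary keys, and the constant `HEARTBEAT_DETECTION_S` are names chosen here, and the fixed 5-second detection delay is taken from this article.

```python
HEARTBEAT_DETECTION_S = 5  # fixed detection delay described in this article

def partition_timeline(expel_timeout: int, unreachable_majority_timeout: int) -> dict:
    """Seconds after the network cut at which each event was observed in this test."""
    timeline = {
        "marked_unreachable": HEARTBEAT_DETECTION_S,
        "majority_expels_suspect": HEARTBEAT_DETECTION_S + expel_timeout,
    }
    if unreachable_majority_timeout > 0:
        # Minority side: enters ERROR first, then counts the expel timeout from zero.
        minority_error = HEARTBEAT_DETECTION_S + unreachable_majority_timeout
        timeline["minority_enters_error"] = minority_error
        timeline["minority_drops_lost_nodes"] = minority_error + expel_timeout
    # With a timeout of 0 the minority stays blocked, so no further events are listed.
    return timeline

timeline = partition_timeline(expel_timeout=30, unreachable_majority_timeout=80)
for event, seconds in timeline.items():
    print(f"{seconds:>4}s  {event}")
```

Calling it with other values shows how far apart the new-primary election on the majority side and the ERROR transition on the minority side will be.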

In summary, the relationship between the three parameters is as follows

- group_replication_member_expel_timeout is how long the majority waits before expelling an unreachable node, that is, how many seconds it tolerates to see whether the network recovers.

- The minority node first applies group_replication_unreachable_majority_timeout and enters the error state once that timeout is reached. It then counts the suspect-node expel time (group_replication_member_expel_timeout) from zero and, once that timeout is reached, deletes all lost nodes from its cluster status view. After that, the auto-rejoin retries (group_replication_autorejoin_tries) are triggered.

- The value of group_replication_unreachable_majority_timeout should not be smaller than group_replication_member_expel_timeout; otherwise the old primary enters the error state before the majority has elected a new primary.

It follows that when group_replication_member_expel_timeout and group_replication_unreachable_majority_timeout have the same value, the minority node enters the error state at the very moment the majority nodes elect a new primary, which avoids a dual-primary situation.


3. Fault detection process

Combined with the above case, let's take a look at the fault detection process of Group Replication.


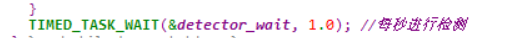

1. Each node in the cluster periodically (once per second) sends heartbeat information to the other nodes. If no heartbeat is received from a node within 5s (a fixed value that cannot be tuned), that node is marked as suspect and its state is set to UNREACHABLE. If half or more of the nodes in the cluster are shown as UNREACHABLE, the cluster can no longer serve writes.

2. If the suspect node returns to normal within group_replication_member_expel_timeout (starting from MySQL 8.0.21, the default value of this parameter is 5 seconds, and the maximum is 3600, that is, 1 hour), it directly applies the messages held in the XCom Cache. The size of the XCom Cache is determined by group_replication_message_cache_size, which defaults to 1G.

3. If the suspect node does not return to normal within group_replication_member_expel_timeout, it is expelled from the cluster. For a minority node:

- If group_replication_unreachable_majority_timeout is not set, the node is set to error once the network returns to normal, because the majority has already expelled it.

- If it is set, the node is set to error automatically when the group_replication_unreachable_majority_timeout limit is reached.

If group_replication_autorejoin_tries is not 0, a node in the ERROR state automatically retries joining the cluster (auto-rejoin). If group_replication_autorejoin_tries is 0, or all retries fail, the action specified by group_replication_exit_state_action is performed. The available actions are:

- READ_ONLY: Read-only mode. In this mode, super_read_only is set to ON. This is the default.

- OFFLINE_MODE: Offline mode. In this mode, offline_mode and super_read_only are set to ON. Only users with the CONNECTION_ADMIN (SUPER) privilege can log in; ordinary users cannot.

- ABORT_SERVER: Shut down the instance.
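The majority rule from step 1 can be written down as a small check. This is a minimal Python sketch, assuming the rule exactly as stated above (writes are blocked once half or more of the members are UNREACHABLE); `can_accept_writes` is a hypothetical helper, not an MGR API.

```python
def can_accept_writes(group_size: int, unreachable: int) -> bool:
    """True while strictly more than half of the members are still reachable."""
    reachable = group_size - unreachable
    return reachable * 2 > group_size

# Three-node cluster from this article.
print(can_accept_writes(3, 1))  # majority side: 2 of 3 reachable
print(can_accept_writes(3, 2))  # minority side: 1 of 3 reachable
```

The comparison is written with integers (`reachable * 2 > group_size`) so that even-sized groups split exactly in half are treated as having no majority.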

4. XCom Cache

XCom Cache is a message cache used by XCom to cache messages exchanged between cluster nodes. Cached messages are part of the consensus protocol. If the network is unstable, nodes may lose connection.

If the node recovers within a certain time (determined by group_replication_member_expel_timeout), it will first apply the messages in the XCom Cache. If XCom Cache does not have all the messages it needs, the node will be evicted from the cluster. After being evicted from the cluster, if group_replication_autorejoin_tries is not 0, it will rejoin the cluster (auto-rejoin).

Rejoining the cluster uses Distributed Recovery to catch up on the missing data. Compared with directly applying the messages in the XCom Cache, joining through Distributed Recovery takes longer, is more complicated, and also affects the performance of the cluster.

Therefore, when sizing the XCom Cache, we need to estimate the memory used by messages during a window of group_replication_member_expel_timeout + 5s. How to estimate this, and the relevant system tables, will be introduced later.
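As a rough way to reason about that sizing, the sketch below multiplies an assumed message rate by the window group_replication_member_expel_timeout + 5s. It is a back-of-the-envelope Python illustration only: the function name and the 20 MiB/s rate are invented for the example, and the real rate has to be measured on your own cluster.

```python
HEARTBEAT_DETECTION_S = 5  # detection delay described in this article

def xcom_cache_bytes_needed(bytes_per_second: int, expel_timeout_s: int) -> int:
    """Bytes of messages produced while a suspect node may still come back."""
    return bytes_per_second * (expel_timeout_s + HEARTBEAT_DETECTION_S)

# Hypothetical workload: 20 MiB/s of group messages, expel timeout of 30s.
needed = xcom_cache_bytes_needed(20 * 1024 * 1024, 30)
print(f"{needed / 1024**3:.2f} GiB")
```

If the estimate comes out larger than group_replication_message_cache_size, a recovering node is more likely to find messages missing from the cache and fall back to Distributed Recovery.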

5. Recommended production configuration

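#Applies to versions 8.0.21 and later

group_replication_member_expel_timeout=30 #Suspect nodes are expelled from the cluster after 30 seconds; the default is 5 seconds. Note: on a minority (faulty) node, these 30 seconds are counted from zero once the network-unreachable timeout has elapsed.

group_replication_unreachable_majority_timeout=35 #Network-unreachable timeout: the time after which a minority (faulty) node changes its own status in the cluster view to error. The default is 0, which means the node stays in the UNREACHABLE state indefinitely. If set, it should be at least the sum of the heartbeat timeout and group_replication_member_expel_timeout, here 30+5.

group_replication_message_cache_size=5G #Message cache used by the XCom cache; the default is 1G, and 5G is recommended.

group_replication_autorejoin_tries=3 #Number of times a node in the error state tries to rejoin the cluster

Note: group_replication_unreachable_majority_timeout refers to the majority being unreachable. In other words, after a network partition it only takes effect on the minority nodes.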

At present, our VIP switching script mainly checks the state of the old primary: the VIP switch is triggered when the old primary changes from online primary to the error state. We therefore set the first two parameters to the same value, so that the majority nodes elect a new primary at the very moment the VIP switch is triggered. This also avoids a dual-primary situation.
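The trigger condition described above can be sketched as follows. The actual VIP script is not shown in this article, so this Python function is purely hypothetical: the name `should_switch_vip` and the idea of comparing two successive (MEMBER_STATE, MEMBER_ROLE) samples of the old primary are assumptions made for illustration.

```python
def should_switch_vip(previous: tuple, current: tuple) -> bool:
    """Each argument is the old primary's (MEMBER_STATE, MEMBER_ROLE) pair."""
    was_online_primary = previous == ("ONLINE", "PRIMARY")
    is_error = current[0] == "ERROR"
    return was_online_primary and is_error

# States as shown for mysqlwjhtest03 in the cluster views earlier in this article.
print(should_switch_vip(("ONLINE", "PRIMARY"), ("ERROR", "")))
```

Because the check only looks at the old primary, the timing of the switch depends entirely on when that node enters the error state, which is why the two timeout parameters matter here.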

6. Caveats

If there are UNREACHABLE nodes in the cluster, there are the following limitations and deficiencies:

- The topology of the cluster cannot be adjusted, including adding and removing nodes.

- If the consistency level of Group Replication is AFTER or BEFORE_AND_AFTER, write operations wait until the UNREACHABLE node is ONLINE again and has applied the operation.

- Cluster throughput drops. In single-primary mode, you can set group_replication_paxos_single_leader (introduced in MySQL 8.0.27) to ON to address this; it changes the round-robin leader scheme of the Mencius protocol.