Identifying Reference Cycles

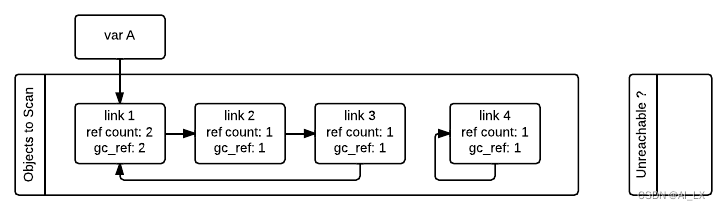

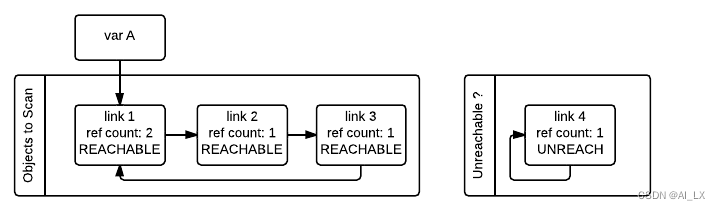

When the GC starts, all the container objects it wants to scan are on the first linked list. The goal is to move all the unreachable objects out of that list. Since most objects turn out to be reachable, it is much more efficient to move the unreachable ones, because this involves fewer pointer updates.

Every object that supports garbage collection has an extra reference count field, which is initialized to the object's reference count (gc_ref in the diagrams) when the algorithm starts. The algorithm needs to modify reference counts to do its computation, and working on this copy means the interpreter's real reference count field is left untouched.

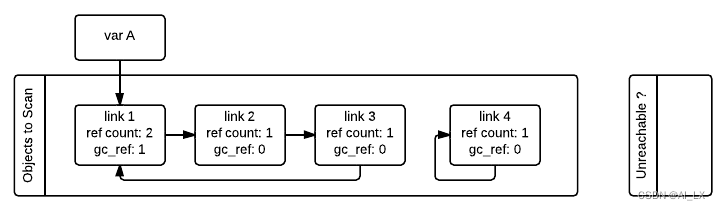

The GC then iterates over all containers in the first list and, for every other object that a container references, decrements that object's gc_refs field by one. To know which objects each container refers to, it uses the tp_traverse slot of the container's class (implemented using the C API or inherited from a superclass). After all objects have been scanned, only the objects referenced from outside the "objects to scan" list have gc_refs > 0.
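
The two counting steps (copy the reference counts, then subtract references that come from inside the list) can be modeled in a few lines of Python. This is a hypothetical sketch, not CPython's C code: objects are plain names, the refs dictionary stands in for tp_traverse, and the example references (a cycle link_1 -> link_2 -> link_3 -> link_1 with one outside reference to link_1, plus a link_4 that refers only to itself) are assumptions chosen to match the gc_refs values described in this section.

```python
def compute_gc_refs(objects, refcount, refs):
    """Hypothetical model: copy refcounts, then subtract internal references.

    objects:  names of the containers being scanned
    refcount: name -> real reference count (never modified)
    refs:     name -> names it refers to (what tp_traverse would report)
    """
    gc_refs = {name: refcount[name] for name in objects}  # working copy
    for name in objects:
        for ref in refs.get(name, ()):
            if ref in gc_refs:  # only references from inside the scanned list count
                gc_refs[ref] -= 1
    return gc_refs


# Assumed example graph; link_1's refcount of 2 includes one outside reference.
refs = {"link_1": ["link_2"], "link_2": ["link_3"], "link_3": ["link_1"],
        "link_4": ["link_4"]}
refcount = {"link_1": 2, "link_2": 1, "link_3": 1, "link_4": 1}

gc_refs = compute_gc_refs(["link_3", "link_2", "link_1", "link_4"], refcount, refs)
print(gc_refs)  # {'link_3': 0, 'link_2': 0, 'link_1': 1, 'link_4': 0}
```

Only link_1 keeps a positive count, even though link_2 and link_3 are reachable through it; that is why a second pass is needed.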

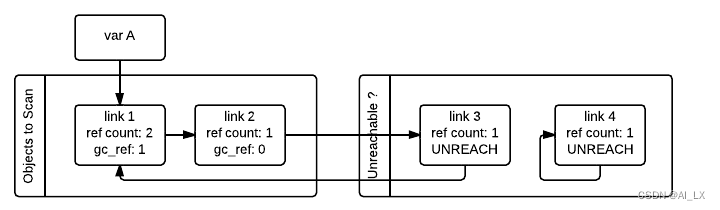

Note that gc_refs == 0 does not mean the object is unreachable, because another object that is reachable from outside (gc_refs > 0) can still refer to it. For example, the link_2 object in our example ends with gc_refs == 0 but is still referenced by the link_1 object, which is reachable from outside. To obtain the set of truly unreachable objects, the garbage collector re-scans the container objects using the tp_traverse slot, this time with a different traverse function that marks objects with gc_refs == 0 as "temporarily unreachable" and then moves them to the temporarily unreachable list. The image below depicts the state of the lists at a moment when the GC has processed the link_3 and link_4 objects but has not yet processed link_1 and link_2.

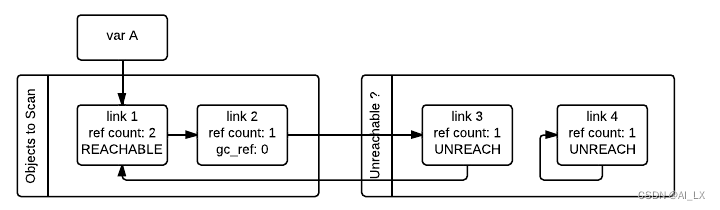

The GC then scans the next object, link_1. Because it has gc_refs == 1, the GC does nothing special: it knows the object must be reachable (and it is already in what will become the reachable list).

When the GC encounters a reachable object (gc_refs > 0), it traverses its references using the tp_traverse slot to find all the objects reachable from it, moving them to the end of the list of reachable objects (where they originally started) and setting their gc_refs field to 1. This is what happens to link_2 and link_3 below, as they are reachable from link_1. From the state in the previous image, after examining the objects reachable from link_1, the GC knows that link_3 is reachable after all, so it is moved back to the original list and its gc_refs field is set to 1; if the GC visits it again, it will know that it is reachable. To avoid visiting an object twice, the GC marks every object it has already visited (by unsetting the PREV_MASK_COLLECTING flag), so that if an object that has already been processed is referenced by some other object, the GC does not process it again.

Note that an object that was marked "temporarily unreachable" and later moved back to the reachable list will be visited again by the garbage collector, because all the references that object holds now need to be processed as well. This process is really a breadth-first search over the object graph. Once all objects have been scanned, the GC knows that all container objects left in the temporarily unreachable list are really unreachable and can therefore be garbage collected.
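
Both passes together can be sketched as a small Python model. It is hypothetical and simplified (objects are names, refs stands in for tp_traverse, a set stands in for the PREV_MASK_COLLECTING flag), and the example graph and list order (creation order link_3, link_2, link_1, link_4) are assumptions, since the example's code is not part of this section. The sweep uses no recursion: visiting a reachable object only appends objects to the list being swept.

```python
def find_unreachable(objects, refcount, refs):
    """Hypothetical model of both passes; returns (reachable, unreachable)."""
    # Pass 1: copy refcounts, then subtract references internal to the list.
    gc_refs = {name: refcount[name] for name in objects}
    for name in objects:
        for ref in refs.get(name, ()):
            if ref in gc_refs:
                gc_refs[ref] -= 1

    # Pass 2: one sweep over 'young'; the list may grow while it is swept.
    young, unreachable = list(objects), []
    collecting = set(objects)            # models PREV_MASK_COLLECTING
    i = 0
    while i < len(young):
        name = young[i]
        if gc_refs[name] > 0:            # reachable: visit what it refers to
            for ref in refs.get(name, ()):
                if ref not in collecting:
                    continue             # already visited, or not being scanned
                if ref in unreachable:   # reachable after all: move it back
                    unreachable.remove(ref)
                    young.append(ref)
                    gc_refs[ref] = 1
                elif gc_refs[ref] == 0:  # not swept yet: just flag it reachable
                    gc_refs[ref] = 1
            collecting.discard(name)     # never process this object again
            i += 1
        else:                            # temporarily unreachable
            unreachable.append(young.pop(i))
    return young, unreachable


refs = {"link_1": ["link_2"], "link_2": ["link_3"], "link_3": ["link_1"],
        "link_4": ["link_4"]}
refcount = {"link_1": 2, "link_2": 1, "link_3": 1, "link_4": 1}

reachable, garbage = find_unreachable(
    ["link_3", "link_2", "link_1", "link_4"], refcount, refs)
print(reachable, garbage)  # ['link_1', 'link_2', 'link_3'] ['link_4']
```

Running it, link_3 and link_2 are first moved to the unreachable list and then moved back once link_1 and link_2 are visited, while the self-referencing link_4 stays behind as garbage.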

In practice, it is important to note that none of this requires recursion, nor does it require additional memory proportional to the number of objects, the number of pointers, or the length of pointer chains. Apart from O(1) storage for internal C needs, the objects themselves contain all the storage the GC algorithm requires.

Why moving unreachable objects is better

Moving the unreachable objects sounds logical under the premise that most objects are usually reachable, until you think about it: the reason it pays off is actually not obvious.

Suppose we create objects A, B, and C in that order. They appear in the young generation in the same order. If B points to A, C points to B, and C is reachable from outside, then after the first step of the algorithm runs, the adjusted reference counts are 0, 0, and 1 respectively, because the only object reachable from outside is C.

When the next step of the algorithm finds A, A is moved to the unreachable list. The same happens to B when it is first encountered. Then C is traversed, which moves B back to the reachable list. Finally B is traversed, and A is moved back to the reachable list.

So instead of not moving at all, the reachable objects B and A are each moved twice. Why is this a win? A straightforward algorithm that moved the reachable objects instead would move A, B, and C once each. The key is that this dance leaves the objects in the order C, B, A, the reverse of the original order. On all subsequent scans, none of them will move. Since most objects are not in cycles, this can save an unbounded number of moves across an unbounded number of later collections. The only time the cost can be higher is the first time the chain is scanned.
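
The A, B, C walkthrough can be checked with a hypothetical model of the sweep that counts list moves. It takes the adjusted counts (0, 0, 1) as given, as they would be after the subtraction step, and is a simplified sketch rather than CPython's move_unreachable.

```python
def move_unreachable(young, refs, gc_refs):
    """Hypothetical model of the sweep; returns (young, unreachable, moves).

    young:   object names in list order
    refs:    name -> names it refers to
    gc_refs: adjusted counts after the subtraction step (updated in place)
    """
    young, unreachable, moves = list(young), [], 0
    collecting = set(young)                 # models PREV_MASK_COLLECTING
    i = 0
    while i < len(young):
        name = young[i]
        if gc_refs[name] > 0:
            for ref in refs.get(name, ()):
                if ref not in collecting:
                    continue
                if ref in unreachable:      # reachable after all: move it back
                    unreachable.remove(ref)
                    young.append(ref)
                    moves += 1
                    gc_refs[ref] = 1
                elif gc_refs[ref] == 0:
                    gc_refs[ref] = 1        # visited later, without moving
            collecting.discard(name)
            i += 1
        else:                               # temporarily unreachable
            unreachable.append(young.pop(i))
            moves += 1
    return young, unreachable, moves


refs = {"B": ["A"], "C": ["B"]}             # B -> A, C -> B; only C is referenced from outside

first, leftover, moves_1 = move_unreachable(["A", "B", "C"], refs, {"A": 0, "B": 0, "C": 1})
print(first, leftover, moves_1)             # ['C', 'B', 'A'] [] 4

second, _, moves_2 = move_unreachable(first, refs, {"A": 0, "B": 0, "C": 1})
print(second, moves_2)                      # ['C', 'B', 'A'] 0
```

The first scan performs four moves (A and B twice each) and leaves the list reversed; a second scan over that order moves nothing.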

Destroying unreachable objects

Once the GC knows the list of unreachable objects, a very delicate process begins with the goal of completely destroying these objects. Roughly, the process follows these steps:

1. Handle and clear weak references (if any). If an object in the unreachable set is going to be destroyed and has weak references with callbacks, those callbacks must be honored. This step is very delicate, because any mistake can let objects that are in an inconsistent state be resurrected or reached by Python functions invoked from the callbacks. In addition, weak references that are themselves part of the unreachable set (the object and its weak reference are both in an unreachable cycle) must be cleared immediately, without executing their callbacks. Otherwise the callbacks would be triggered later, when the tp_clear slot is called, causing havoc. Ignoring these callbacks is fine because both the object and the weak reference are going away, so it is legitimate to say that the weak reference goes away first.

2. If an object has legacy finalizers (tp_del slot), move it to the gc.garbage list.

3. Call the finalizers (tp_finalize slot) and mark the objects as finalized, so that a finalizer is not called twice if its object is resurrected or if another finalizer has already removed the object.

4. Handle resurrected objects. If some objects have been resurrected, the GC finds the new subset of objects that are still unreachable by running the cycle detection algorithm again, and continues with those.

5. Call the tp_clear slot of every object so that all internal links are broken and the reference counts drop to 0, triggering the destruction of all unreachable objects.
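
Several of these steps can be observed from pure Python with the real gc and weakref modules. In this sketch (class and variable names are illustrative), a Python-level __del__ method acts as the tp_finalize finalizer (PEP 442), a weak reference kept outside the cycle has its callback honored, and one object resurrects itself. Legacy tp_del finalizers can only come from C extension types, so the gc.garbage step is not shown. The relative order of callbacks and finalizers is an implementation detail, so the first round is sorted.

```python
import gc
import weakref

events = []      # what happened during each collection
survivors = []   # a reachable place where a finalizer can resurrect an object


class Node:
    def __init__(self, name):
        self.name = name
        self.partner = None

    def __del__(self):                       # Python-level tp_finalize (PEP 442)
        events.append(f"finalize {self.name}")


class Phoenix:
    def __init__(self):
        self.me = self                       # self-cycle: only the cycle GC frees it

    def __del__(self):
        events.append("finalize phoenix")
        survivors.append(self)               # resurrection


def make_garbage():
    a, b = Node("a"), Node("b")
    a.partner, b.partner = b, a              # a <-> b is unreachable after return
    Phoenix()                                # unreachable self-cycle
    # The weakref itself is returned, so it stays reachable and its
    # callback is honored when a is destroyed.
    return weakref.ref(a, lambda _ref: events.append("weakref callback"))


gc.disable()                                 # keep automatic collections out of the way
try:
    wr = make_garbage()
    gc.collect()
    first_round = sorted(events)
    resurrected = len(survivors)

    events.clear()
    survivors.clear()                        # drop the resurrecting reference
    gc.collect()                             # phoenix is collected again...
    second_round = list(events)              # ...but its __del__ does not run twice
finally:
    gc.enable()

print(first_round)  # ['finalize a', 'finalize b', 'finalize phoenix', 'weakref callback']
print(resurrected, wr() is None, second_round)  # 1 True []
```

The resurrected object survives the first collection, and when it becomes garbage again it is cleared without its finalizer being called a second time.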


Optimization: Generations

To limit the time each garbage collection takes, the GC uses a popular optimization: generations. The main idea behind this concept is the assumption that most objects have a very short lifespan and can thus be collected shortly after their creation. This turns out to be very close to the reality of many Python programs, because many temporary objects are created and destroyed very quickly. The older an object is, the less likely it is to become unreachable.

To take advantage of this fact, all container objects are segregated into three spaces/generations. Every new object starts in the first generation (generation 0). The previous algorithm is executed only over the objects of a particular generation, and if an object survives a collection of its generation, it is moved to the next generation (generation 1), where it will be surveyed for collection less often. If the same object survives another GC round in this new generation (generation 1), it is moved to the last generation (generation 2), where it will be surveyed the least often.

To decide when to run, the collector keeps track of the number of object allocations and deallocations since the last collection. When the number of allocations minus the number of deallocations exceeds threshold_0, a collection starts. Initially only generation 0 is examined. If generation 0 has been examined more than threshold_1 times since generation 1 was last examined, then generation 1 is examined as well. For generation 2, things are a bit more complicated; see Collecting the oldest generation for details. These thresholds can be inspected using the gc.get_threshold() function:

```pycon
>>> import gc
>>> gc.get_threshold()
(700, 10, 10)
```
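
The counter logic described above can be modeled as follows. This is a hypothetical sketch of the three-generation scheme in this section, not CPython's implementation: the class and method names are invented, and the extra long_lived_pending / long_lived_total check for the oldest generation is left out.

```python
class GenerationCounters:
    """Hypothetical model of the per-generation counters.

    counts[0]: allocations minus deallocations since the last collection
    counts[1]: generation-0 collections since the last generation-1 collection
    counts[2]: generation-1 collections since the last generation-2 collection
    """

    def __init__(self, thresholds=(700, 10, 10)):
        self.thresholds = thresholds
        self.counts = [0, 0, 0]
        self.collections = [0, 0, 0]      # how often each generation was collected

    def allocate(self):
        self.counts[0] += 1
        if self.counts[0] > self.thresholds[0]:
            self.collect()

    def deallocate(self):
        if self.counts[0] > 0:
            self.counts[0] -= 1

    def collect(self):
        # Collect the oldest generation whose counter is above its threshold.
        gen = max(g for g in range(3) if self.counts[g] > self.thresholds[g])
        self.collections[gen] += 1
        if gen + 1 < 3:
            self.counts[gen + 1] += 1     # one more collection of a younger generation
        for g in range(gen + 1):
            self.counts[g] = 0            # younger generations are collected too


model = GenerationCounters()
for _ in range(100_000):                  # 100,000 allocations, no deallocations
    model.allocate()
print(model.collections)                  # [130, 11, 1]
```

With the default thresholds, 100,000 allocations and no frees trigger 142 collections in the model, only one of which reaches generation 2. The interpreter's real counters can be inspected with gc.get_count().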

The contents of these generations can be inspected using the gc.get_objects(generation=NUM) function, and a collection of a specific generation can be triggered by calling gc.collect(generation=NUM).

```pycon
>>> import gc
>>> class MyObj:
...     pass
...
>>> # Move everything to the last generation so it's easier to inspect
>>> # the younger generations.
>>> gc.collect()
0
>>> # Create a reference cycle.
>>> x = MyObj()
>>> x.self = x
>>> # Initially the object is in the youngest generation.
>>> gc.get_objects(generation=0)
[..., <__main__.MyObj object at 0x7fbcc12a3400>, ...]
>>> # After a collection of the youngest generation the object
>>> # moves to the next generation.
>>> gc.collect(generation=0)
0
>>> gc.get_objects(generation=0)
[]
>>> gc.get_objects(generation=1)
[..., <__main__.MyObj object at 0x7fbcc12a3400>, ...]
```

Collecting the oldest generation

In addition to the various configurable thresholds, the GC only triggers a full collection of the oldest generation if the ratio long_lived_pending / long_lived_total is above a given value (hardwired to 25%). The reason is that, while "non-full" collections (that is, collections of the young and middle generations) always examine roughly the same number of objects (determined by the thresholds above), the cost of a full collection is proportional to the total number of long-lived objects, which is virtually unbounded. Indeed, it has been remarked that doing a full collection every fixed number of object creations causes a dramatic performance degradation in workloads that create and store large numbers of long-lived objects (for example, building a large list of GC-tracked objects would show quadratic performance instead of the expected linear performance). Using the above ratio instead yields amortized linear performance in the total number of objects (the effect can be summarized as: "each full garbage collection is more and more costly as the number of objects grows, but we do fewer and fewer of them").
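
A small cost model makes the amortization argument concrete. It is a hypothetical sketch with simplifying assumptions: every batch of new objects survives into the oldest generation, and a full collection costs one unit per long-lived object. With use_ratio=False a full collection runs after every batch; with use_ratio=True it is skipped while long_lived_pending < long_lived_total / 4.

```python
def full_collection_work(n_objects, batch, use_ratio):
    """Hypothetical cost model: total objects examined by full collections."""
    long_lived_total = 0      # oldest-generation size after the last full collection
    long_lived_pending = 0    # objects promoted since then
    work = 0
    for _ in range(n_objects // batch):
        long_lived_pending += batch
        if use_ratio and long_lived_pending < long_lived_total / 4:
            continue          # fewer than 25% new long-lived objects: skip
        long_lived_total += long_lived_pending
        long_lived_pending = 0
        work += long_lived_total  # a full collection examines every long-lived object
    return work


for n in (10_000, 100_000):
    print(n, full_collection_work(n, 100, False), full_collection_work(n, 100, True))
```

Without the ratio, ten times as many objects cost a hundred times as much work (505,000 versus 50,050,000 units in this model). With the ratio, every full collection grows the oldest generation by at least 25%, so the costs form a geometric series and the total work stays below five times the number of objects.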