Jetson Nano from start to finish: environment configuration through successful YOLOv5 inference and detection

Article directory

- 1. Burn image

- 2. Configure the environment and run inference

- Summary

1. Burn image

Official image download page: https://developer.nvidia.com/embedded/downloads

The official download may not work for you, so I have also uploaded the image to Baidu Cloud. The download address is as follows:

Link: https://pan.baidu.com/s/1njWhDqNquyUqnDRCp31y8w

Extraction code: 6qqh

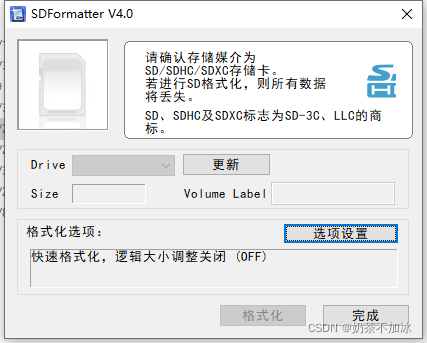

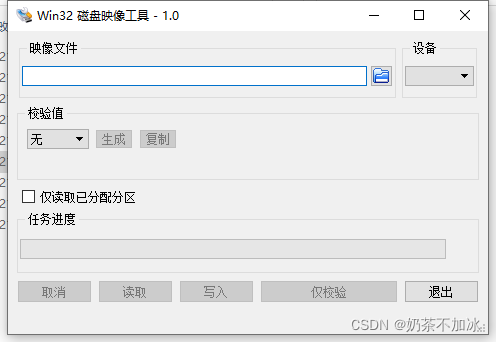

1. You need two tools to prepare the card. I recommend the following two: one formats the SD card (a card of at least 64 GB is recommended) and the other burns the image.

1.1 SD Card Formatter: format the SD card (burn the image after formatting)

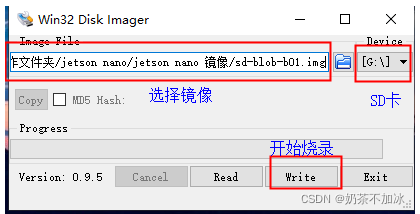

1.2 Win32 Disk Imager: writes the image to the SD card. Be sure to decompress the downloaded image first so that you have the .img file, then follow the steps below. Burning the image may take about half an hour.

(Ignore the fact that one screenshot is in English and the other in Chinese; just follow the steps above to burn the image.)

2. Configure the environment and run inference

1. Update system and packages

Open a terminal and enter the following commands in sequence:

sudo apt-get update

sudo apt-get upgrade

You may also want to install a Chinese input method, ibus-pinyin:

sudo apt-get install ibus-pinyin

After a restart, it shows up under Language Support:

reboot

You can also follow the link below to install the Chinese input method

https://blog.csdn.net/weixin_41275422/article/details/104500683

http://www.360doc.com/content/20/0501/13/40492717_909598661.shtml

2. Configure the environment

2.1 Configure CUDA

Open a terminal and type

sudo gedit ~/.bashrc

Add the following lines at the end of the file that opens:

export CUDA_HOME=/usr/local/cuda-10.2

export LD_LIBRARY_PATH=/usr/local/cuda-10.2/lib64:$LD_LIBRARY_PATH

export PATH=/usr/local/cuda-10.2/bin:$PATH

Press Ctrl+S to save the file, close it, and then run the following in the same terminal:

source ~/.bashrc

Check whether the configuration worked:

nvcc -V # if the configuration succeeded, this prints the CUDA version number
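As an optional extra check, the Python sketch below confirms that the three variables added to ~/.bashrc are visible in the current shell. The helper name `check_cuda_env` is hypothetical, and its default path simply mirrors the /usr/local/cuda-10.2 location used above; adjust it if your image ships a different CUDA version.

```python
import os
import shutil


def check_cuda_env(env=None, cuda_home="/usr/local/cuda-10.2"):
    """Return simple pass/fail checks for the CUDA environment variables.

    Hypothetical helper for illustration; not part of any NVIDIA tool.
    """
    env = os.environ if env is None else env
    path_dirs = env.get("PATH", "").split(os.pathsep)
    lib_dirs = env.get("LD_LIBRARY_PATH", "").split(os.pathsep)
    return {
        "CUDA_HOME set": env.get("CUDA_HOME") == cuda_home,
        "bin on PATH": os.path.join(cuda_home, "bin") in path_dirs,
        "lib64 on LD_LIBRARY_PATH": os.path.join(cuda_home, "lib64") in lib_dirs,
        # shutil.which searches the given PATH for an executable named nvcc
        "nvcc found": shutil.which("nvcc", path=env.get("PATH", "")) is not None,
    }


if __name__ == "__main__":
    for name, ok in check_cuda_env().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```

If any line reports MISSING, re-open ~/.bashrc, check the three export lines, and run source ~/.bashrc again.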

2.2 Increase the swap space (zram) of the Nano board

1. Open the terminal and enter:

sudo gedit /etc/systemd/nvzramconfig.sh

2. Modify the nvzramconfig.sh file:

Find the line that sets mem in the opened file and change it as follows:

Original: mem=$((("${totalmem}"/2/"${NRDEVICES}")*1024))

Modified: mem=$((("${totalmem}"*2/"${NRDEVICES}")*1024))

That is, change the / in front of the 2 to *.

3. Restart:

reboot

4. In the terminal, enter:

free -h

You can see that the swap has grown to 7.7G:

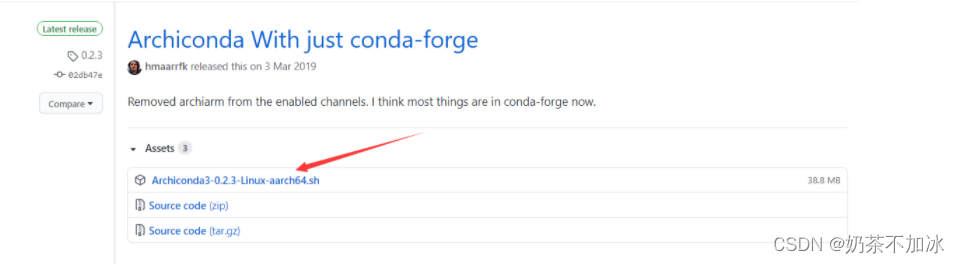
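To see where the 7.7G figure comes from, the arithmetic of the modified line can be sketched in Python. This is only an illustration under stated assumptions: that totalmem is the total RAM in KiB, that NRDEVICES is 4 (one zram device per CPU core), and that the board reports roughly 3.9 GiB of RAM. The constant 4059712 is a hypothetical value, so read your own from free on the board.

```python
def zram_size_bytes(total_mem_kib, n_devices, multiply=True):
    """Mirror the shell arithmetic for the size of one zram device.

    multiply=False: (totalmem / 2 / NRDEVICES) * 1024   (original line)
    multiply=True:  (totalmem * 2 / NRDEVICES) * 1024   (modified line)
    Shell $(( )) arithmetic is integer-only, hence the // operator.
    """
    if multiply:
        return (total_mem_kib * 2 // n_devices) * 1024
    return (total_mem_kib // 2 // n_devices) * 1024


def total_swap_gib(total_mem_kib, n_devices, multiply=True):
    """Total swap across all zram devices, in GiB."""
    per_device = zram_size_bytes(total_mem_kib, n_devices, multiply)
    return per_device * n_devices / 1024**3


# Hypothetical values: about 3.9 GiB of RAM expressed in KiB, 4 devices.
TOTAL_MEM_KIB = 4059712
N_DEVICES = 4

print(f"before: {total_swap_gib(TOTAL_MEM_KIB, N_DEVICES, multiply=False):.1f} GiB")
print(f"after:  {total_swap_gib(TOTAL_MEM_KIB, N_DEVICES, multiply=True):.1f} GiB")
```

With these assumed inputs the swap goes from about a half of RAM to about twice RAM, which is consistent with the 7.7G shown by free -h.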

3. Install Archiconda (the equivalent of Anaconda for the Jetson Nano board)

Anaconda does not support the arm64 architecture, so it cannot be installed on the Jetson Nano development board. (This is a big pitfall: I was stuck on it for a long time before finding out that Anaconda cannot run on the Jetson Nano.) Archiconda is a Conda distribution for the ARM platform. Once it is installed, we can use conda to install packages and run scripts just as smoothly as on a Windows 10 system, which should feel familiar.

1. Download address:

https://github.com/Archiconda/build-tools/releases

2. Install:

Open a terminal in the folder where you downloaded the Archiconda installer (or cd to that folder), then run the installer script with bash:

bash Archiconda3-0.2.3-Linux-aarch64.sh

(The installer is a shell script, not an apt package, so sudo apt-get install cannot install it.)

During installation, confirm every prompt, press Enter where asked, and accept all the defaults. It may take a little while.

3. Test conda:

Close the above installation terminal, open a new terminal on the desktop, and enter the conda environment:

source archiconda3/bin/activate

If you cannot enter the base environment, you need to configure environment variables.

The configuration process is as follows:

sudo gedit ~/.bashrc

Add the following code to the last line of the opened document:

export PATH=~/archiconda3/bin:$PATH

4. Create a virtual environment to run yolov5:

After entering the base environment of conda:

conda create -n yolov5_py36 python=3.6 # create a Python 3.6 environment

conda info --envs # list all environments

conda activate yolov5_py36 # enter the environment

conda deactivate # leave the environment

5. Add the Tsinghua mirror to conda

Enter the following commands in sequence:

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/

Next, set the Tsinghua mirror as the default index URL for pip.

First install pip:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get dist-upgrade

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev

pip3 install --upgrade pip # can be skipped if pip is already up to date

Then set the Tsinghua mirror as the default index URL:

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

If a later package installation fails with a channel error, remove the Tsinghua mirror channels with the command below, install the package or environment that failed, and then add the mirror channels again with the commands above:

Show the configured channels: conda config --show channels

Remove the mirror channels: conda config --remove-key channels

4. Install pytorch and torchvision (the most important step)

Baidu cloud download link:

Link: https://pan.baidu.com/s/11NpLUKKDKbudn_JM6W4aGg

Extraction code: 3rpi

After downloading, you will see a .whl file and a torchvision folder. The pytorch .whl is a package that can be installed directly, while torchvision is a source folder that has to be installed manually.

1. Install pytorch

Enter our yolov5 operating environment

conda activate yolov5_py36

Then find the folder where the downloaded torch-1.8.0-cp36-cp36m-linux_aarch64.whl package is located, enter the folder, and enter the installation command:

pip3 install torch-1.8.0-cp36-cp36m-linux_aarch64.whl # mind the location of the package file
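The file name of the wheel explains why the environment was created with Python 3.6 and why this package only installs on the Nano's ARM CPU. The sketch below splits the name into its tags following the standard wheel naming layout (distribution, version, Python tag, ABI tag, platform tag). The function names `parse_wheel_name` and `matches_this_interpreter` are hypothetical helpers, and the compatibility check is deliberately rough compared with what pip really does.

```python
import platform
import sys


def parse_wheel_name(filename):
    """Split a wheel file name into its dash-separated tags."""
    stem = filename[: -len(".whl")] if filename.endswith(".whl") else filename
    parts = stem.split("-")
    # An optional build tag may sit between the version and the Python tag;
    # the last three fields are always the python, abi and platform tags.
    return {
        "distribution": parts[0],
        "version": parts[1],
        "python_tag": parts[-3],
        "abi_tag": parts[-2],
        "platform_tag": parts[-1],
    }


def matches_this_interpreter(tags):
    """Rough check: same CPython major.minor and same CPU architecture."""
    want_python = f"cp{sys.version_info.major}{sys.version_info.minor}"
    machine = platform.machine()
    return (
        tags["python_tag"] == want_python
        and bool(machine)
        and tags["platform_tag"].endswith(machine)
    )


tags = parse_wheel_name("torch-1.8.0-cp36-cp36m-linux_aarch64.whl")
print(tags)
print("compatible with this interpreter:", matches_this_interpreter(tags))
```

Run inside the yolov5_py36 environment on the Nano, the last line should report True; in any other Python version or on a PC it reports False, which is the same reason pip would refuse the file there.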

2. Test whether pytorch was installed successfully:

python # start the Python interpreter

Enter:

import torch

Then enter:

print(torch.__version__)

If the torch version number is printed, torch was installed successfully.

3. Install torchvision

Enter the torchvision folder and run:

export BUILD_VERSION=0.9.0

Then execute the installation command:

python setup.py install

Be patient; this takes a long time.

4. Test whether torchvision was installed successfully

Start the Python interpreter:

python

Run:

import torchvision

Then run:

print(torchvision.__version__)

print(torchvision.__version__)

If the torchvision version number is printed, the installation succeeded. At this point the setup is nearly complete; all that remains is the yolov5 folder.
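The two interactive checks can also be combined into one small script, which is convenient to re-run after changing anything in the environment. This is a minimal sketch: `installed_version` and `cuda_available` are hypothetical helper names, while `torch.cuda.is_available()` is the standard PyTorch call for asking whether the GPU can be used.

```python
import importlib
import importlib.util


def installed_version(package):
    """Return the package's __version__ string, or None if it is not installed."""
    if importlib.util.find_spec(package) is None:
        return None
    module = importlib.import_module(package)
    return getattr(module, "__version__", "unknown")


def cuda_available():
    """True if torch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    import torch

    return torch.cuda.is_available()


for name in ("torch", "torchvision"):
    print(name, installed_version(name) or "NOT INSTALLED")
print("CUDA available:", cuda_available())
```

On a correctly configured Nano, both version numbers should be printed and CUDA should be reported as available; if CUDA is not available, inference would fall back to the CPU.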

5. Build YOLOv5 environment

Still in our yolov5_py36 environment

Enter the YOLOv5 code folder, here is the author's version of the code, Baidu cloud download link:

Link: https://pan.baidu.com/s/1USNqOdzgiHLbfkiaXonjog

Extraction code: oq81

The yolov5s.pt weight file has already been placed in the folder, so it does not need to be downloaded separately.

cd into the yolov5-master folder and run the following command to install the remaining dependencies:

pip install -r requirements.txt

Because we switched to the Tsinghua mirror earlier, the downloads are quite fast. Wait for the installation to complete.

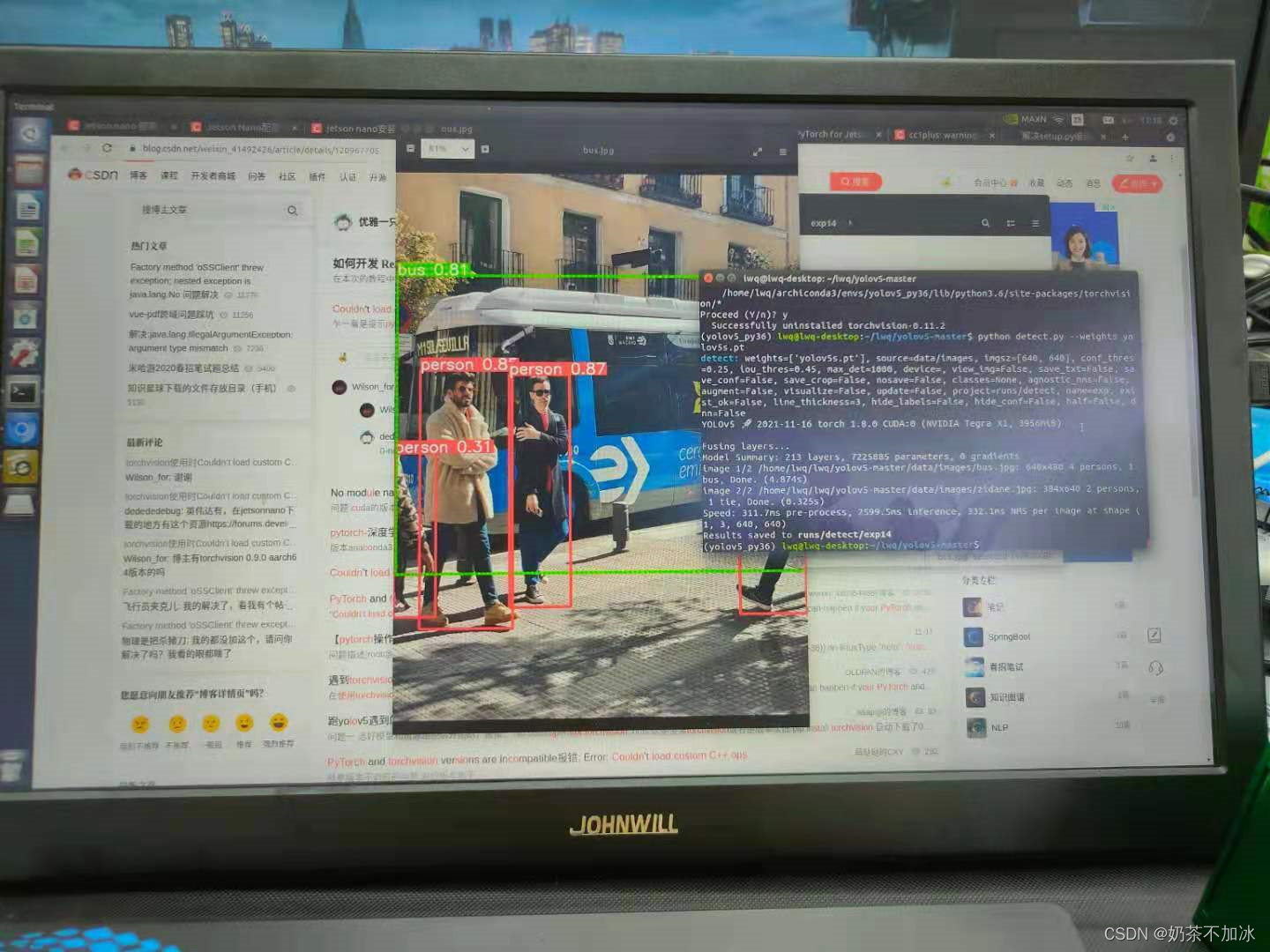

1. Test yolov5

python detect.py --weights yolov5s.pt

After a fairly long wait, the run completes successfully.

The author also tried detection from a camera: with the image resolution set to 480, inference takes about 0.09 seconds per frame, which is several times faster than pure CPU inference on an i7 system.
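To put the per-frame time in more familiar terms, it can be converted to frames per second. The small sketch below only does that conversion; `frames_per_second` is a hypothetical helper, and 0.09 is the figure reported above rather than a new measurement.

```python
def frames_per_second(seconds_per_frame):
    """Convert a per-frame latency in seconds to throughput in frames per second."""
    if seconds_per_frame <= 0:
        raise ValueError("seconds_per_frame must be positive")
    return 1.0 / seconds_per_frame


# 0.09 s per frame is the inference time reported above.
print(f"{frames_per_second(0.09):.1f} FPS")
```

So 0.09 seconds per frame corresponds to roughly 11 frames per second for inference alone, not counting camera capture or display.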

6. References

1. https://blog.csdn.net/carrymingteng/article/details/120978053

2. https://blog.csdn.net/weixin_41275422/article/details/104500683

3. https://qianbin.blog.csdn.net/article/details/103760640

Summary

There were a lot of pitfalls on the way to completing this deployment, and there are many deployment tutorials online. This is the author's first time deploying this, and he is a complete novice, so there are bound to be mistakes here. I hope you will correct me, so that we can all learn and make progress together.

As a follow-up, I plan to try tensorrtx for accelerated inference, and to deploy the lightweight YOLOv3-Tiny network.