I. Introduction

Project overview: use OpenCV to detect human eyes in real time and count blinks.

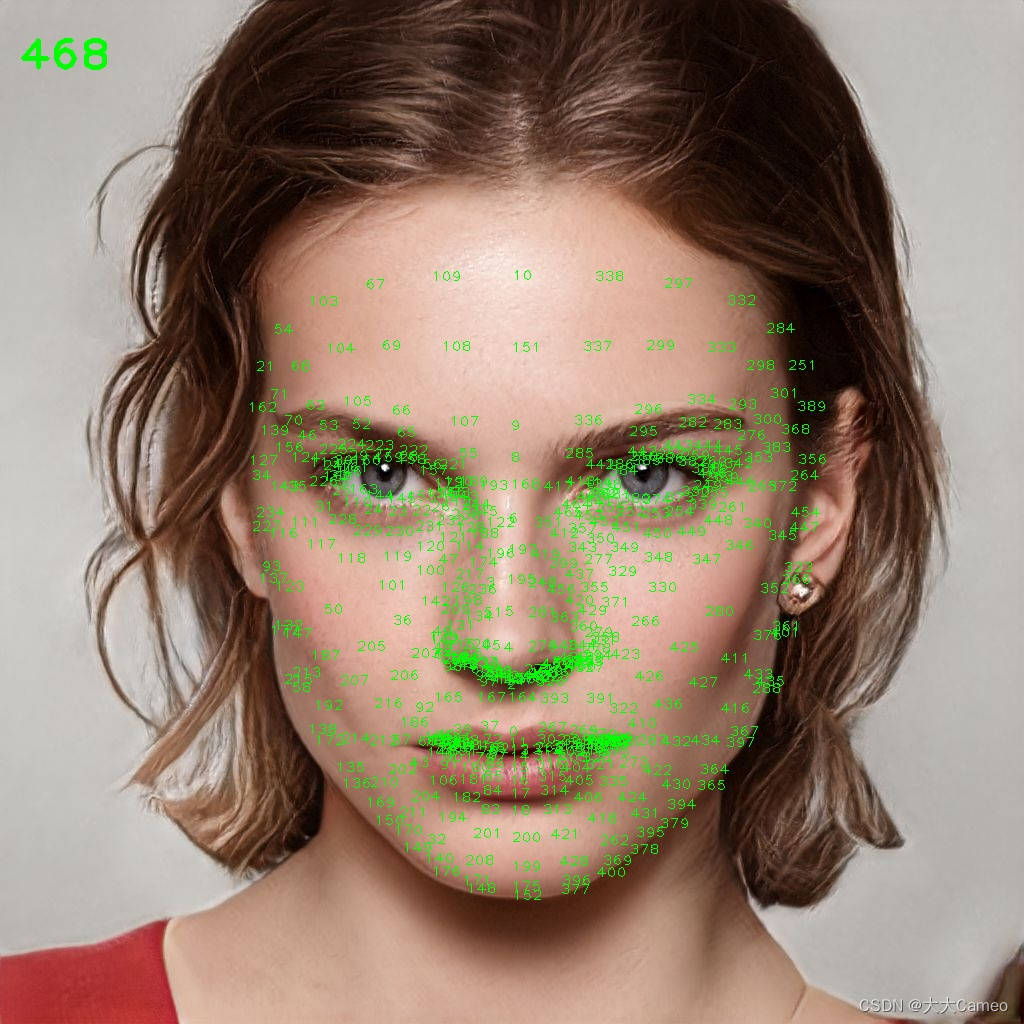

In the previous article, "OpenCV Face Feature Point Detection", we learned that the face mesh consists of 468 feature points (landmarks), as shown in the following figure:

From these points we can obtain the coordinates of the eye, and then track the eye in real time using those coordinates.

II. Hands-on practice

(1) Installing the environment

pip install opencv-python

pip install cvzone==1.5.6

pip install mediapipe==0.8.3.1

(2) Loading a video with OpenCV

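import cv2

cap = cv2.VideoCapture('Videos/2.mp4')  # load the video

while True:
    success, img = cap.read()  # read the next frame
    if not success:  # stop at the end of the video or on a read error
        break
    img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
    cv2.imshow('Image', img)
    cv2.waitKey(1)  # wait 1 ms so the window can refresh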

(3) Capture the human eye

cvzone's FaceMeshDetector class makes it quick to locate the landmarks around the eye. The code is as follows:

import cv2
import cvzone
from cvzone.FaceMeshModule import FaceMeshDetector

cap = cv2.VideoCapture('Videos/2.mp4')  # load the video
detector = FaceMeshDetector(maxFaces=1)  # create the face mesh detector
idList = [22, 23, 24, 26, 110, 157, 158, 159, 160, 161, 130, 243]  # landmark indices around the left eye
color = (255, 0, 255)

while True:
    success, img = cap.read()  # read the next frame
    if not success:  # stop at the end of the video or on a read error
        break
    img, faces = detector.findFaceMesh(img, draw=False)
    if faces:
        face = faces[0]
        for id in idList:
            cv2.circle(img, face[id], 20, color, cv2.FILLED)  # mark the left eye
    img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
    cv2.imshow('Image', img)
    cv2.waitKey(1)

Effect:

(4) Connecting the points

Connect the upper, lower, left, and right points of the eye, and output the vertical and horizontal distances between them.
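To make the distance step concrete, here is a minimal sketch in plain Python, independent of cvzone. It assumes the distance in question is the ordinary Euclidean distance in pixels between two landmark points; the helper name `point_distance` and the sample coordinates are made up for illustration.

```python
import math


def point_distance(p1, p2):
    """Euclidean distance in pixels between two (x, y) points."""
    return math.hypot(p2[0] - p1[0], p2[1] - p1[1])


# Hypothetical landmark coordinates, for illustration only.
left_up, left_down = (100, 80), (100, 92)
left_left, left_right = (85, 86), (120, 86)

print("vertical:", point_distance(left_up, left_down))
print("horizontal:", point_distance(left_left, left_right))
```

The complete script for this step, using cvzone on real video frames, is: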

import cv2
import cvzone
from cvzone.FaceMeshModule import FaceMeshDetector

cap = cv2.VideoCapture('Videos/2.mp4')  # load the video
detector = FaceMeshDetector(maxFaces=1)  # create the face mesh detector
idList = [22, 23, 24, 26, 110, 157, 158, 159, 160, 161, 130, 243]  # landmark indices around the left eye
color = (255, 0, 255)

while True:
    success, img = cap.read()  # read the next frame
    if not success:  # stop at the end of the video or on a read error
        break
    img, faces = detector.findFaceMesh(img, draw=False)
    if faces:
        face = faces[0]
        for id in idList:
            cv2.circle(img, face[id], 20, color, cv2.FILLED)

        # the four reference points of the eye
        leftUp = face[159]
        leftDown = face[23]
        leftLeft = face[130]
        leftRight = face[243]

        # connect the four points
        cv2.line(img, leftUp, leftDown, (0, 200, 0), 3)
        cv2.line(img, leftLeft, leftRight, (0, 200, 0), 3)

        # distance between each pair of points
        lengthVer = int(detector.findDistance(leftUp, leftDown)[0])
        lengthHor = int(detector.findDistance(leftLeft, leftRight)[0])
        print("Vertical:", lengthVer)
        print("Horizontal:", lengthHor)

    img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
    cv2.imshow('Image', img)
    cv2.waitKey(1)

Effect:

(5) Plotting the ratio

When a person blinks, the vertical distance between the upper and lower eyelid points changes, while the horizontal distance between the two eye corners stays nearly constant. The vertical/horizontal ratio can therefore serve as the detection value for deciding whether the eye has blinked.
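As a rough illustration of this idea, the sketch below computes the ratio as a percentage from four points. The function name `eye_ratio` and all coordinates are hypothetical; the second call doubles every coordinate to suggest why a ratio is more convenient than a raw pixel distance when the face moves closer to the camera.

```python
import math


def eye_ratio(up, down, left, right):
    """Vertical/horizontal eye opening as a percentage, or None if the width is zero."""
    vertical = math.hypot(down[0] - up[0], down[1] - up[1])
    horizontal = math.hypot(right[0] - left[0], right[1] - left[1])
    if horizontal == 0:
        return None  # degenerate input, no meaningful ratio
    return vertical / horizontal * 100


# Hypothetical coordinates: an open eye, the same eye at twice the scale, a nearly closed eye.
open_eye = eye_ratio((100, 80), (100, 92), (85, 86), (120, 86))
open_eye_closer = eye_ratio((200, 160), (200, 184), (170, 172), (240, 172))
closed_eye = eye_ratio((100, 83), (100, 89), (85, 86), (120, 86))

print(open_eye, open_eye_closer, closed_eye)
```

In this toy data the open eye gives the same ratio at both scales, and the nearly closed eye gives a clearly smaller one.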

Code:

import cv2
import cvzone
from cvzone.FaceMeshModule import FaceMeshDetector
from cvzone.PlotModule import LivePlot

cap = cv2.VideoCapture('Videos/2.mp4')  # load the video
detector = FaceMeshDetector(maxFaces=1)  # create the face mesh detector
plotY = LivePlot(640, 360, [20, 50], invert=True)  # live plot with a y-axis range of 20 to 50
idList = [22, 23, 24, 26, 110, 157, 158, 159, 160, 161, 130, 243]  # landmark indices around the left eye
color = (255, 0, 255)
BlinkCounter = 0
ratioList = []
counter = 0

while True:
    success, img = cap.read()  # read the next frame
    if not success:  # stop at the end of the video or on a read error
        break
    img, faces = detector.findFaceMesh(img, draw=False)
    if faces:
        face = faces[0]
        for id in idList:
            cv2.circle(img, face[id], 20, color, cv2.FILLED)

        # the four reference points of the eye
        leftUp = face[159]
        leftDown = face[23]
        leftLeft = face[130]
        leftRight = face[243]

        # connect the four points
        cv2.line(img, leftUp, leftDown, (0, 200, 0), 3)
        cv2.line(img, leftLeft, leftRight, (0, 200, 0), 3)

        # distance between each pair of points
        lengthVer = int(detector.findDistance(leftUp, leftDown)[0])
        lengthHor = int(detector.findDistance(leftLeft, leftRight)[0])
        # print("Vertical:", lengthVer)
        # print("Horizontal:", lengthHor)

        ratio = int((lengthVer / lengthHor) * 100)  # vertical/horizontal length ratio, in percent
        print('Ratio:', ratio)
        ratioList.append(ratio)
        if len(ratioList) > 3:  # keep only the last three values
            ratioList.pop(0)
        ratioAvg = sum(ratioList) / len(ratioList)  # mean of the recent ratios
        print(ratioAvg)

        imgPlot = plotY.update(ratioAvg)  # update the plot
        img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
        imgStack = cvzone.stackImages([img, imgPlot], 2, 1)
    else:
        img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
        imgStack = cvzone.stackImages([img, img], 2, 1)

    cv2.imshow('Image', imgStack)
    cv2.waitKey(1)

Effect:
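The script in this step averages the last three ratio values before plotting them, which damps single-frame noise. The sketch below isolates that smoothing step; it uses `collections.deque` instead of the list-and-pop approach of the script, and the function name `smooth` and the sample values are illustrative assumptions.

```python
from collections import deque


def smooth(values, window=3):
    """Running mean over the most recent `window` values."""
    recent = deque(maxlen=window)  # the oldest value is dropped automatically
    averages = []
    for value in values:
        recent.append(value)
        averages.append(sum(recent) / len(recent))
    return averages


# Hypothetical ratio readings with a one-frame spike.
print(smooth([30, 30, 60, 30]))
```

With a window of three, the one-frame spike of 60 is spread over the following frames rather than appearing as a single sharp jump.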

(6) Counting blinks

As step (5) shows, a blink can be detected from the change in the ratio: while the eye is closed, the averaged ratio drops below a threshold (35 in the code below). The code is as follows:

import cv2
import cvzone
from cvzone.FaceMeshModule import FaceMeshDetector
from cvzone.PlotModule import LivePlot

cap = cv2.VideoCapture('Videos/2.mp4')  # load the video
detector = FaceMeshDetector(maxFaces=1)  # create the face mesh detector
plotY = LivePlot(640, 360, [20, 50], invert=True)  # live plot with a y-axis range of 20 to 50
idList = [22, 23, 24, 26, 110, 157, 158, 159, 160, 161, 130, 243]  # landmark indices around the left eye
color = (255, 0, 255)
BlinkCounter = 0
ratioList = []
counter = 0

while True:
    if cap.get(cv2.CAP_PROP_POS_FRAMES) == cap.get(cv2.CAP_PROP_FRAME_COUNT):
        cap.set(cv2.CAP_PROP_POS_FRAMES, 0)  # rewind to replay the video in a loop

    success, img = cap.read()  # read the next frame
    if not success:  # stop if the frame could not be read
        break
    img, faces = detector.findFaceMesh(img, draw=False)
    if faces:
        face = faces[0]
        for id in idList:
            cv2.circle(img, face[id], 20, color, cv2.FILLED)

        # the four reference points of the eye
        leftUp = face[159]
        leftDown = face[23]
        leftLeft = face[130]
        leftRight = face[243]

        # connect the four points
        cv2.line(img, leftUp, leftDown, (0, 200, 0), 3)
        cv2.line(img, leftLeft, leftRight, (0, 200, 0), 3)

        # distance between each pair of points
        lengthVer = int(detector.findDistance(leftUp, leftDown)[0])
        lengthHor = int(detector.findDistance(leftLeft, leftRight)[0])
        # print("Vertical:", lengthVer)
        # print("Horizontal:", lengthHor)

        ratio = int((lengthVer / lengthHor) * 100)  # vertical/horizontal length ratio, in percent
        print('Ratio:', ratio)
        ratioList.append(ratio)
        if len(ratioList) > 3:  # keep only the last three values
            ratioList.pop(0)
        ratioAvg = sum(ratioList) / len(ratioList)  # mean of the recent ratios
        print(ratioAvg)

        # count a blink, then ignore further dips for the next few frames
        if ratioAvg < 35 and counter == 0:
            BlinkCounter += 1
            color = (0, 200, 0)
            counter = 1
        if counter != 0:
            counter += 1
            if counter > 10:
                counter = 0
                color = (255, 0, 255)  # restore the default color once the cooldown ends

        cvzone.putTextRect(img, f'Blink Count:{BlinkCounter}', (50, 200), 6, 5, colorR=color)  # show the blink count

        imgPlot = plotY.update(ratioAvg)  # update the plot
        img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
        imgStack = cvzone.stackImages([img, imgPlot], 2, 1)
    else:
        img = cv2.resize(img, (640, 360))  # resize the frame to 640x360 (width, height)
        imgStack = cvzone.stackImages([img, img], 2, 1)

    cv2.imshow('Image', imgStack)
    cv2.waitKey(1)

Effect:

OpenCV detection of human eyes, blink count
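The counting logic can also be tried without any video. The following sketch replays it over a plain list of ratio values. The function name `count_blinks`, its parameter names, and the sample data are assumptions for illustration; the threshold of 35 and the limit of 10 mirror the values in the script above.

```python
def count_blinks(ratios, threshold=35, cooldown=10):
    """Count dips below `threshold`, ignoring new dips while a cooldown is running."""
    blinks = 0
    counter = 0  # 0 means ready; any other value means a cooldown is in progress
    for ratio in ratios:
        if ratio < threshold and counter == 0:
            blinks += 1
            counter = 1
        if counter != 0:
            counter += 1
            if counter > cooldown:
                counter = 0  # cooldown finished, ready for the next blink
    return blinks


# Hypothetical smoothed ratios: two short dips separated by open-eye frames.
ratios = [40] * 5 + [30] * 4 + [40] * 20 + [30] * 3 + [40] * 5
print(count_blinks(ratios))
```

Each short dip is counted once, because the frames right after a detection fall inside the cooldown. One consequence of this design is that an eye held closed for longer than the cooldown is counted again, so the limit may need tuning to the video's frame rate.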

III. Conclusion

May we keep learning, for a better tomorrow and a better self.