Face applications make up a large part of computer vision. Even before deep learning became popular, face applications built on traditional machine learning were already mature and widely deployed commercially. This article uses a runnable demo to illustrate the common technical concepts in face applications: 'face detection', 'face comparison', 'facial landmark detection (facial feature localization)', 'blink detection', 'liveness detection', and 'fatigue detection'.

Source code: available on the public account

Face Detection

Strictly speaking, face detection means only locating the face in a photo: it yields the bounding rectangle (Left, Top, Right, Bottom) of the face and nothing else. Many uses of the term "face detection" on the Internet are vague and cover much more. For example, besides the localization just described, they often include the face comparison described below. Strictly speaking, this is incorrect.

Face Comparison

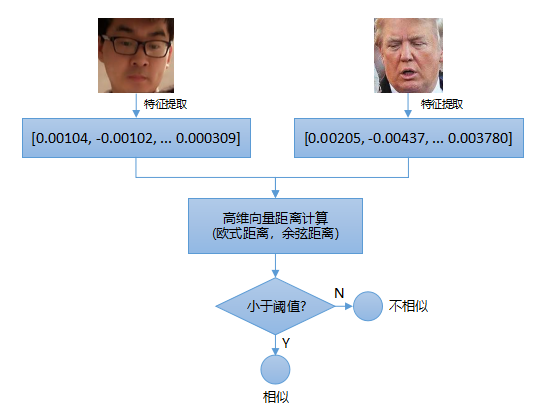

Once face detection finds that a picture (or video frame) contains a face, how do we determine whose face it is? This is the familiar face authorization scenario: compare the face with the faces already in the database and find the one it most resembles. In traditional machine learning, face comparison first extracts a face feature code, that is, a feature vector that stands in for the original RGB face image, and then computes the distance between two face feature vectors to decide whether the faces are similar (for feature engineering in traditional machine learning, see the previous article). If the distance is less than a certain threshold, the two are considered the same face; otherwise they are considered different faces.
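The distance-based matching described above can be sketched as a nearest-neighbor search. This is only an illustration under assumptions: the feature vectors are taken as already extracted (toy 3-dimensional lists here), Euclidean distance is one possible metric, and the names `euclidean_distance` and `identify` as well as the threshold of 0.6 are hypothetical, not taken from the demo.

```python
import math

def euclidean_distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def identify(query, database, threshold=0.6):
    """Return (name, distance) for the closest enrolled face.

    The name is None when even the closest face is not within the
    (hypothetical) distance threshold.
    """
    best_name, best_dist = None, float("inf")
    for name, vector in database.items():
        dist = euclidean_distance(query, vector)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist < threshold:
        return best_name, best_dist
    return None, best_dist

# Toy 3-dimensional vectors; real feature vectors are much longer.
database = {"alice": [0.1, 0.9, 0.3], "bob": [0.8, 0.2, 0.5]}
print(identify([0.12, 0.88, 0.31], database))  # close to "alice"
print(identify([0.5, 0.5, 1.5], database))     # far from everyone
```

In practice the threshold has to be tuned for the specific feature extractor, since the scale of the distances depends on how the vectors are produced.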

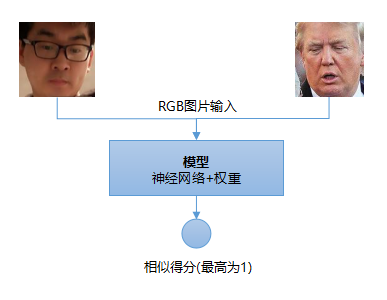

The figure above shows the face comparison process in traditional machine learning. The process is relatively complicated, because a suitable feature vector must be extracted, one that is representative of the original RGB face image. Face comparison based on deep learning is relatively simple and can be a direct end-to-end process.

Of course, some methods extract facial features with a neural network and then train a traditional machine learning model, such as an SVM, for classification.

Facial Landmark Detection (Facial Feature Localization)

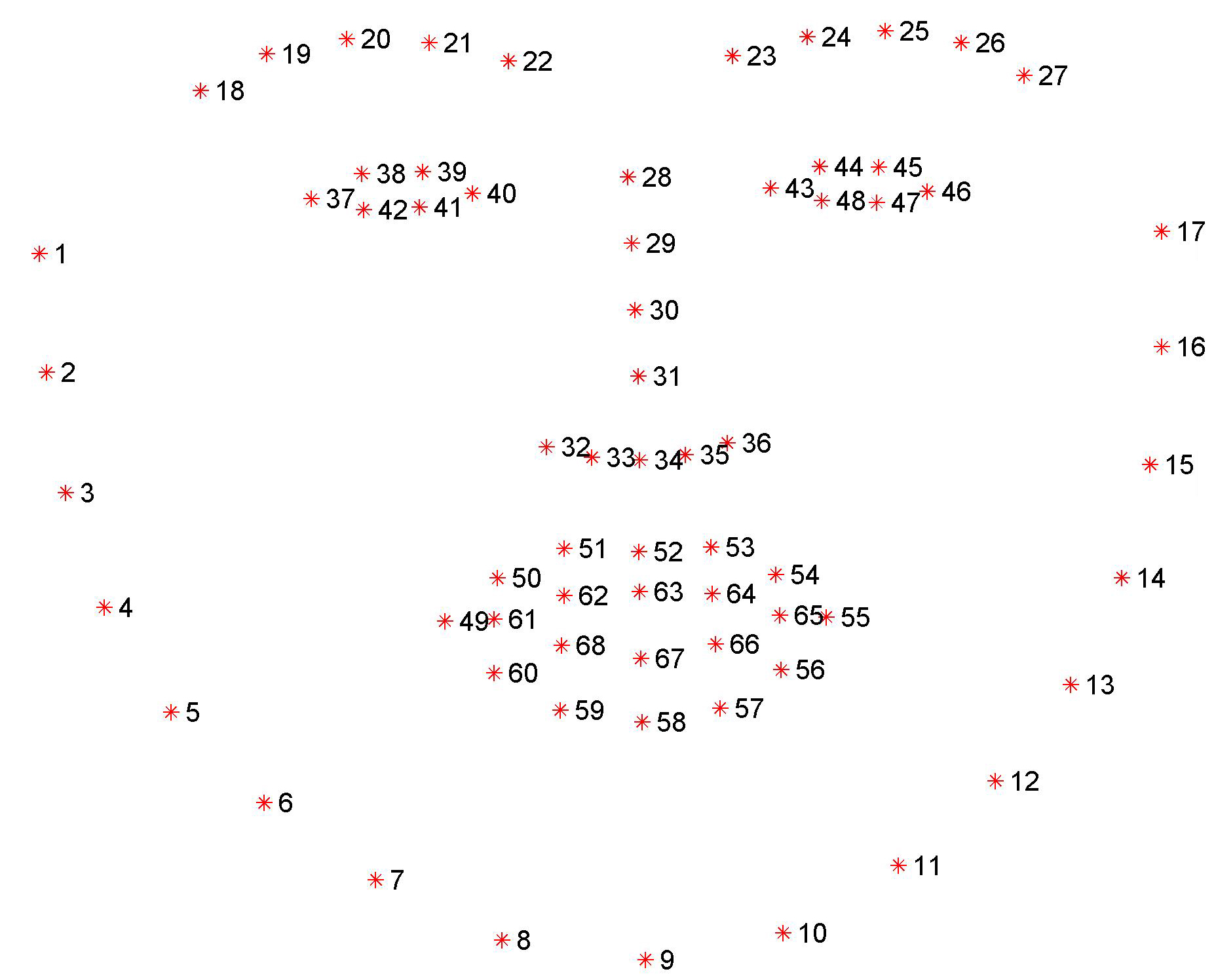

For general face applications, such as face-based attendance check-in, automatic face mosaicking, and face unlocking, the two technologies described above, 'face detection' and 'face comparison', are sufficient. However, some more complex face applications, such as the liveness detection and fatigue detection described later, need more than detection and comparison. In these cases we must determine not only the position of the face (the rectangle) but also the positions of the facial features, such as the eyebrows, eye regions, nose, and mouth. With this position data we can build more complex applications, such as judging in real time whether the person in the video is blinking, closing their eyes, speaking, or looking down. The following figure shows the results of facial landmark detection:

The above figure shows 68 points extracted by machine learning to represent a face, which are:

(1) 5 points on the left eyebrow

(2) 5 points on the right eyebrow

(3) 6 points on the left eye

(4) 6 points on the right eye

(5) 4 points on the nose bridge

(6) 5 points on the tip of the nose

(7) 20 points on the upper / lower lips

(8) 17 points on the chin

We can quickly obtain the coordinate points for each part by index (in Python, slices can be used). Each coordinate gives the actual position, in pixels, of a facial landmark in the original input picture.
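As a rough sketch of the slicing idea, the snippet below splits a list of 68 points into the eight groups listed above. The index ranges are an assumption: they follow the widely used 68-point annotation order (jaw first, then eyebrows, nose, eyes, mouth) found in common landmark models, and the article does not state which ordering its demo uses. The names `LANDMARK_SLICES` and `split_landmarks` are hypothetical.

```python
# Assumed index ranges (0-based, end-exclusive) for a 68-point layout.
# "Left" and "right" here are from the subject's point of view.
LANDMARK_SLICES = {
    "jaw": slice(0, 17),
    "right_eyebrow": slice(17, 22),
    "left_eyebrow": slice(22, 27),
    "nose_bridge": slice(27, 31),
    "nose_tip": slice(31, 36),
    "right_eye": slice(36, 42),
    "left_eye": slice(42, 48),
    "mouth": slice(48, 68),
}

def split_landmarks(points):
    """Split a sequence of 68 (x, y) points into named facial parts."""
    if len(points) != 68:
        raise ValueError("expected exactly 68 landmark points")
    return {name: points[s] for name, s in LANDMARK_SLICES.items()}

# Dummy (x, y) pixel coordinates stand in for real detector output.
points = [(i, 2 * i) for i in range(68)]
parts = split_landmarks(points)
print({name: len(p) for name, p in parts.items()})
```

If the landmark model in use orders its points differently, only the slice table needs to change.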

Blink detection

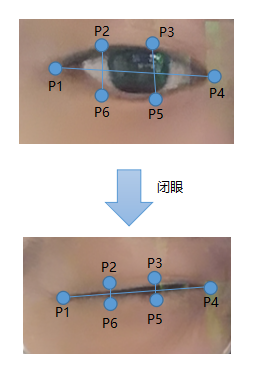

After running facial landmark detection on every frame of the video and obtaining the positions of the facial features in each frame, we can determine whether a blink occurs by analyzing how closed the eye region is (the six points form a closed ellipse). So how do we measure how closed each eye is? According to previous research (http://vision.fe.uni-lj.si/cvww2016/proceedings/papers/05.pdf), the degree of eye closure can be judged from the 6 points of each eye:

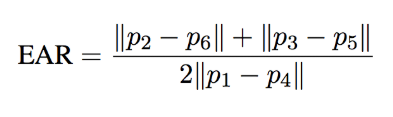

As the eye goes from open to closed, the value of the following expression quickly approaches zero:

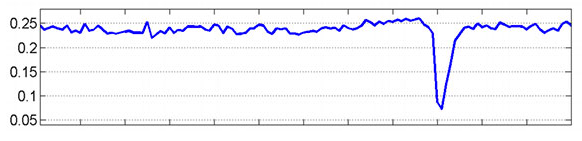

The value of the expression above, EAR (Eye Aspect Ratio), equals the sum of the lengths of the two vertical line segments divided by twice the length of the horizontal line segment. It drops toward zero at the moment the eye closes and returns to its original value when the eye reopens. The change in EAR over time is shown in the figure below:

To decide that the eyes have closed, we only need to check whether EAR drops rapidly toward zero; to decide that they have opened again, we check whether it recovers quickly. If the interval between closing and opening is very short (only a few video frames at 25 frames per second), it is considered a blink.
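The EAR computation and the blink rule described above can be sketched as follows. This is a minimal illustration, not the demo's code: the point ordering (p1 and p4 as the eye corners, p2 and p3 on the upper lid, p5 and p6 on the lower lid) is assumed from the cited paper, and the threshold of 0.2 and the limit of 5 closed frames are hypothetical values that would need tuning.

```python
import math

def eye_aspect_ratio(eye):
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for six (x, y) points."""
    if len(eye) != 6:
        raise ValueError("expected exactly 6 eye points")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    if horizontal == 0:
        raise ValueError("eye corners coincide")
    return vertical / (2.0 * horizontal)

def count_blinks(ear_values, threshold=0.2, max_closed_frames=5):
    """Count blinks in a per-frame EAR sequence.

    A blink is a run of 1..max_closed_frames consecutive frames with
    EAR below the threshold, followed by a frame where the eye reopens.
    Longer runs are treated as closed eyes rather than blinks.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_values:
        if ear < threshold:
            closed_run += 1
        else:
            if 1 <= closed_run <= max_closed_frames:
                blinks += 1
            closed_run = 0
    return blinks

# Synthetic eye shapes: same width, different lid separation.
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.1), (4, 0.1), (6, 0), (4, -0.1), (2, -0.1)]

frames = [open_eye] * 5 + [closed_eye] * 3 + [open_eye] * 5
ears = [eye_aspect_ratio(eye) for eye in frames]
print(count_blinks(ears))
```

A fixed threshold is the simplest choice; averaging the EAR of both eyes before thresholding is a common way to make the signal less noisy.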

Liveness Detection

In face applications, to prevent someone from passing authorization with a photo or other fake face, it is generally necessary to run a liveness check on the face in the picture, that is, to determine whether the face in the current video frame belongs to a real person rather than a substitute such as a photo. With the blink detection technique introduced earlier, we can implement a very simple 'liveness detection' algorithm. At random intervals (for example, every few seconds; the interval is not fixed, to prevent forgery with a recorded video), the algorithm asks the person to blink. If the person in the picture follows the instructions issued by the algorithm, the picture can be considered to show a real person; otherwise it may be a fake face.
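The challenge-and-response idea above might look like the sketch below. Everything in it is an assumption for illustration: the function names `schedule_prompts` and `passes_liveness`, the gap range of 2 to 6 seconds, and the 1.5 second response window are hypothetical, and the blink timestamps are taken as already produced by a blink detector.

```python
import random

def schedule_prompts(count, min_gap=2.0, max_gap=6.0, rng=None):
    """Return `count` prompt times (seconds) separated by random gaps."""
    rng = rng or random.Random()
    times, t = [], 0.0
    for _ in range(count):
        t += rng.uniform(min_gap, max_gap)
        times.append(t)
    return times

def passes_liveness(prompt_times, blink_times, window=1.5):
    """True if every prompt is answered by a blink within `window` seconds."""
    if not prompt_times:
        return False  # no challenge issued, so nothing was proven
    return all(
        any(p <= b <= p + window for b in blink_times)
        for p in prompt_times
    )

prompts = schedule_prompts(3, rng=random.Random(0))
cooperative = [p + 0.5 for p in prompts]  # blinks shortly after each prompt
print(passes_liveness(prompts, cooperative))
print(passes_liveness(prompts, []))       # e.g. a static photo never blinks
```

Keeping the minimum gap larger than the response window means a single blink cannot answer two prompts.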

Note that liveness detection based on the above method alone may not be secure enough. It can generally be combined with other liveness detection techniques, such as camera depth detection, facial lighting detection, and direct classification of faces (live / non-live) using deep learning.

Fatigue detection

Fatigue detection has many application scenarios: for example, fatigue monitoring and warning for long-distance bus drivers or for personnel on duty. The main principle is to adapt the blink detection technique introduced above slightly so that it detects closed eyes. If the eyes stay closed for longer than a certain period (such as 1 second), fatigue is considered to have occurred and an alarm is issued. The blink check is very simple, and the closed-eye check is simpler still. All of this logic is based on the data extracted by facial landmark detection.
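A minimal sketch of the closed-eye rule above, assuming a per-frame sequence of eye-openness values (EAR) is already available. The function name `detect_fatigue` and the threshold of 0.2 are hypothetical; the 1 second duration and 25 frames per second come from the text.

```python
import math

def detect_fatigue(ear_values, fps=25, threshold=0.2, min_closed_seconds=1.0):
    """Return the index of the first frame at which the eyes have been
    continuously closed for at least `min_closed_seconds`, or None."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    min_frames = max(1, math.ceil(min_closed_seconds * fps))
    closed_run = 0
    for i, ear in enumerate(ear_values):
        closed_run = closed_run + 1 if ear < threshold else 0
        if closed_run >= min_frames:
            return i  # an alarm would be raised at this frame
    return None

blink = [0.3] * 10 + [0.05] * 3 + [0.3] * 10  # short closure: a blink
drowsy = [0.3] * 10 + [0.05] * 30             # eyes closed for over 1 s
print(detect_fatigue(blink))
print(detect_fatigue(drowsy))
```

The only difference from blink counting is that the run length is compared against a minimum instead of a maximum.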

Demo source code: available on the public account

It should be clear that the accuracy of all the algorithms in this article depends on the quality of model training: whether face detection is accurate, whether face feature extraction is appropriate, and whether the facial landmark detection results are accurate. This is the nature of machine learning: the quality of the result depends entirely on the quality of model training (and of feature extraction). For example, in this article's demo it is easy to see that facial landmark detection does not work well on faces with glasses, and more training data is needed for such cases. If you have any questions, please leave a message. If you are interested, please follow the public account, which shares original CV / DL articles.