Introduction

With data volume and complexity growing continuously, more and more enterprises are using OLAP (Online Analytical Processing) engines to process large-scale data and deliver analysis results quickly. Performance is a key factor when choosing an OLAP engine.

This article therefore uses the 99 queries of the TPC-DS benchmark to compare the performance of four open-source OLAP engines, ClickHouse, Doris, Presto, and ByConity, as a reference for enterprises choosing an OLAP engine.

Introduction to the TPC-DS Benchmark

TPC-DS (Transaction Processing Performance Council Decision Support Benchmark) is a benchmark for decision support systems (DSS) developed by the TPC organization. It simulates multidimensional analysis and decision support scenarios and provides 99 queries for evaluating database performance in complex multidimensional analysis. Each query is designed to simulate a complex decision support scenario and uses advanced SQL techniques such as joins across multiple tables, aggregation and grouping, and subqueries.

OLAP engine introduction

ClickHouse, Doris, Presto, and ByConity are currently popular open source OLAP engines, all of which feature high performance and scalability.

- ClickHouse is a columnar database management system developed by the Russian search engine company Yandex, focused on fast query and analysis of large-scale data.

- Doris is a distributed columnar storage and analysis system that supports real-time query and analysis and integrates with big data technologies such as Hadoop, Spark, and Flink.

- Presto is a distributed SQL query engine developed by Facebook for fast query and analysis of large-scale datasets.

- ByConity is a cloud-native data warehouse open-sourced by ByteDance. It separates storage from compute, provides tenant resource isolation, elastic scaling, and strongly consistent reads and writes, and supports mainstream OLAP engine optimization techniques for strong read and write performance.

This article runs the 99 TPC-DS queries on these four OLAP engines and compares how they perform on different types of queries.

Test environment and method:

| | ClickHouse | Doris | Presto | ByConity |
| --- | --- | --- | --- | --- |
| Environment configuration | Memory: 256GB; Disk: ATA, 7200rpm, partitioned: gpt; System: Linux 4.14.81.bm.30-amd64 x86_64, Debian GNU/Linux 9 | Same as ClickHouse | Same as ClickHouse | Same as ClickHouse |
| Test data volume | 1TB of table data, equivalent to 2.8 billion rows | Same as ClickHouse | Same as ClickHouse | Same as ClickHouse |
| Package version | 23.4.1.1943 | 1.2.4.1 | 0.28.0 | 0.1.0-GA |
| Version release date | 2023/4/26 | 2023/4/27 | 2023/3/16 | 2023/3/15 |
| Number of nodes | 5 Workers | 5 BEs, 1 FE | 5 Workers, 1 Coordinator | 5 Workers, 1 Server |
| Other configuration | distributed_product_mode = 'global', partial_merge_join_optimizations = 1 | Bucket configuration: dimension tables 1, returns tables 10-20, sales tables 100-200 | Hive Catalog, ORC format, Xmx200GB | enable_optimizer=1, dialect_type='ANSI' |

server configuration:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 48

On-line CPU(s) list: 0-47

Thread(s) per core: 2

Core(s) per socket: 12

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz

Stepping: 1

CPU MHz: 2494.435

CPU max MHz: 2900.0000

CPU min MHz: 1200.0000

BogoMIPS: 4389.83

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 30720K

NUMA node0 CPU(s): 0-11,24-35

NUMA node1 CPU(s): 12-23,36-47

Test Methods:

- Use the 99 queries of the TPC-DS benchmark and 1TB (2.8 billion rows) of data to test the performance of the four OLAP engines.

- Use the same test dataset in each engine, and keep the same configuration and hardware environment.

- Execute each query multiple times and take the average to reduce measurement error, with a timeout of 500 seconds per query.

- Record the details of query execution, such as the query execution plan and I/O and CPU usage.
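The measurement procedure in the list above can be sketched roughly as follows. This is a minimal illustration, not the harness used for this article: `run_query` is a hypothetical callable that submits SQL to an engine and raises `TimeoutError` when the 500-second limit is exceeded, and counting a timed-out run as the full timeout value is an assumption.

```python
import time
from statistics import mean
from typing import Callable, Dict

TIMEOUT_SECONDS = 500.0  # per-query timeout stated in the test method
RUNS = 3                 # number of repetitions to average (assumed default)


def time_query(run_query: Callable[[str], None], sql: str) -> float:
    """Run one query once and return elapsed seconds, or the timeout value.

    `run_query` is a hypothetical, caller-supplied function that is expected
    to raise TimeoutError if the engine exceeds the time limit.
    """
    start = time.perf_counter()
    try:
        run_query(sql)
    except TimeoutError:
        return TIMEOUT_SECONDS
    # Never report more than the timeout, even if the client returned late.
    return min(time.perf_counter() - start, TIMEOUT_SECONDS)


def benchmark(run_query: Callable[[str], None],
              queries: Dict[str, str],
              runs: int = RUNS) -> Dict[str, float]:
    """Return the mean elapsed seconds per query over `runs` executions."""
    return {
        name: mean(time_query(run_query, sql) for _ in range(runs))
        for name, sql in queries.items()
    }
```

Passing the engine client in as a plain callable keeps the timing logic identical across engines, so only the connection code differs per system.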

Performance test results

We tested the four OLAP engines on the same data set and in the same hardware and software environment, as described above. The test data set is 1TB. We ran the 99 TPC-DS queries three consecutive times on each engine and averaged the three results.

- ByConity completed all 99 queries.

- Doris crashed on SQL15 and timed out on four queries: SQL54, SQL67, SQL78, and SQL95.

- Presto timed out only on SQL67 and SQL72; all other queries completed.

- ClickHouse completed only 50% of the queries; of the rest, some apparently timed out and others returned system errors. Our analysis is that ClickHouse does not support multi-table join queries effectively, so such SQL statements have to be manually rewritten and split before they can run.

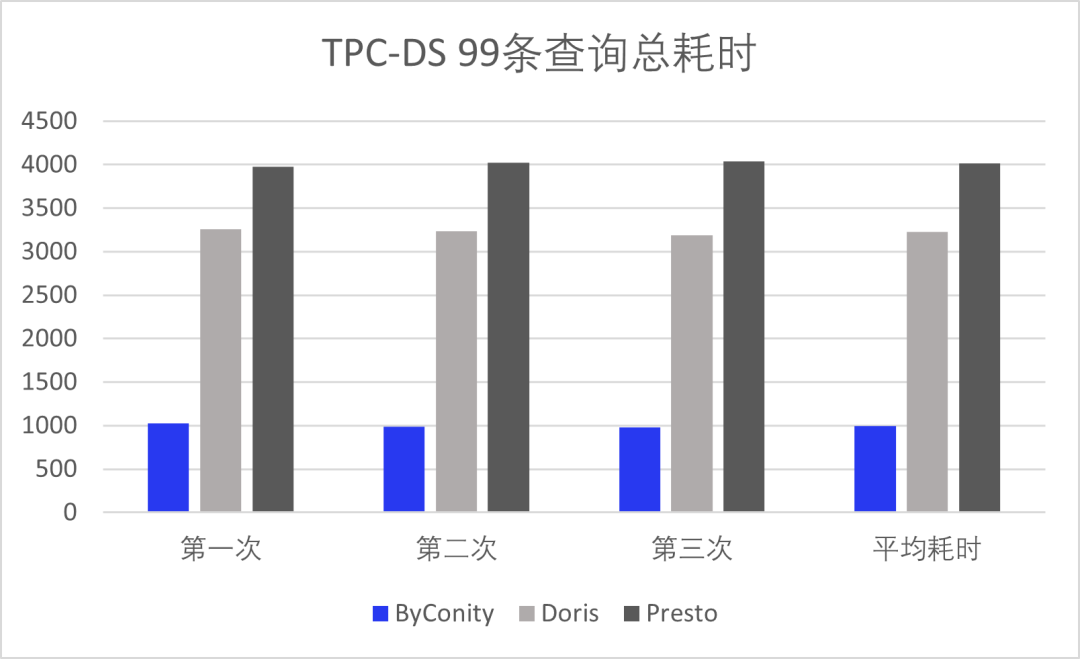

We therefore exclude ClickHouse from the total-time comparison for now. Figure 1 shows the total TPC-DS time for the other three OLAP engines. The open-source ByConity is significantly faster than the other engines, with performance about 3 to 4 times theirs. (Note: the vertical axis of every chart below is in seconds.)

Figure 1 TPC-DS 99 total query time consumption
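A total like the one in Figure 1 can be computed along the following lines. This is only a sketch under stated assumptions: a timed-out query is counted as 500 seconds (the value the article uses for timeouts), `None` is a hypothetical marker for such a query, and the engine names and numbers in the usage lines are placeholders, not measurements from this test.

```python
from typing import Dict, Optional

TIMEOUT_SECONDS = 500.0  # value charged for a query that timed out


def total_time(per_query: Dict[str, Optional[float]]) -> float:
    """Sum per-query seconds; None marks a timeout and counts as 500 s."""
    return sum(TIMEOUT_SECONDS if t is None else t for t in per_query.values())


def relative_time(totals: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Express every engine's total as a multiple of the baseline's total."""
    return {engine: t / totals[baseline] for engine, t in totals.items()}


# Placeholder numbers for illustration only (not results from this article).
totals = {
    "engine_a": total_time({"q1": 10.0, "q2": None}),
    "engine_b": total_time({"q1": 100.0, "q2": 920.0}),
}
ratios = relative_time(totals, baseline="engine_a")
```

How a crashed query should be charged is a separate decision that the article does not spell out; the sketch treats only timeouts.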

Next, we group the 99 TPC-DS queries by query scenario, such as basic queries, join queries, aggregation queries, subqueries, and window function queries.

The sections below use this classification to analyze and compare the performance of the four OLAP engines, ClickHouse, Doris, Presto, and ByConity:

Basic query scenario

This scenario covers simple query operations such as querying data from a single table and filtering and sorting the results. Performance testing of basic queries focuses on the ability to process a single query.

ByConity performs best, and Presto and Doris also perform well. Basic queries usually involve only a small number of tables and fields, so they can make full use of the distributed query features and in-memory computing capabilities of Presto and Doris. ClickHouse does not support multi-table joins well and fails on some queries: SQL5, 8, 11, 13, 14, 17, and 18 all time out. A timeout is counted as 500 seconds, but the chart caps it at 350 seconds for readability.

Figure 2 below shows the average query time of the four engines in the basic query scenario:

Figure 2 Performance comparison of TPC-DS basic query

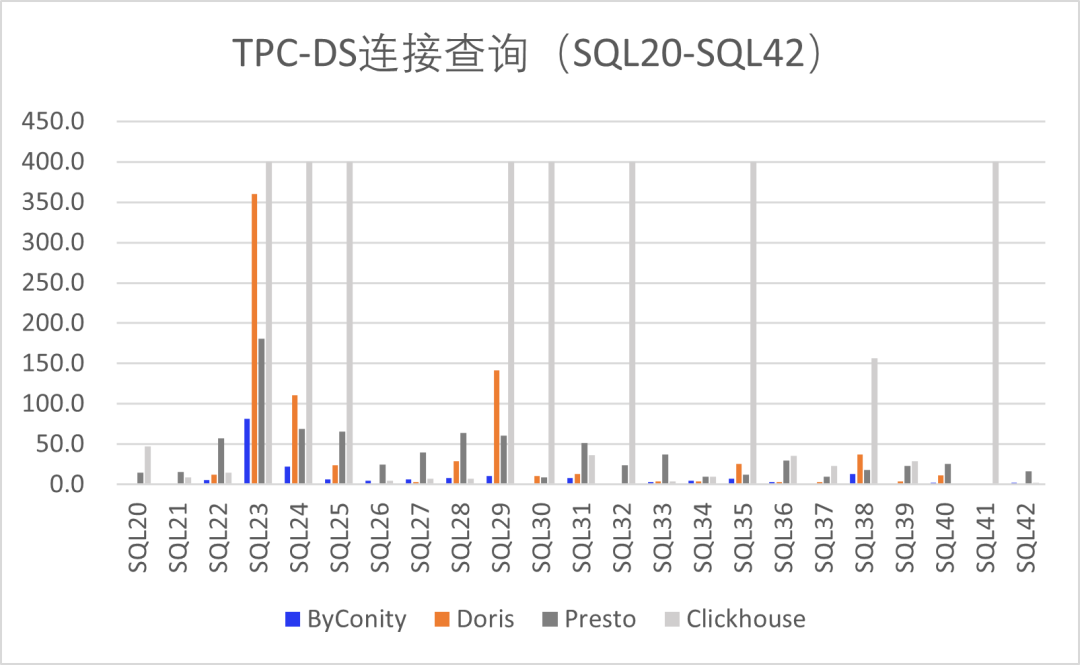

Join query scenario

Join queries are a common multi-table scenario: JOIN statements connect multiple tables and retrieve data based on specified conditions.

As Figure 3 shows, ByConity performs best, mainly thanks to its query optimizer, which introduces cost-based optimization (CBO) and applies optimizations such as join reordering to multi-table joins. Presto and Doris follow. ClickHouse performs worse than the other three on multi-table joins and does not adequately support many complex statements.

Figure 3 Performance comparison of TPC-DS join queries

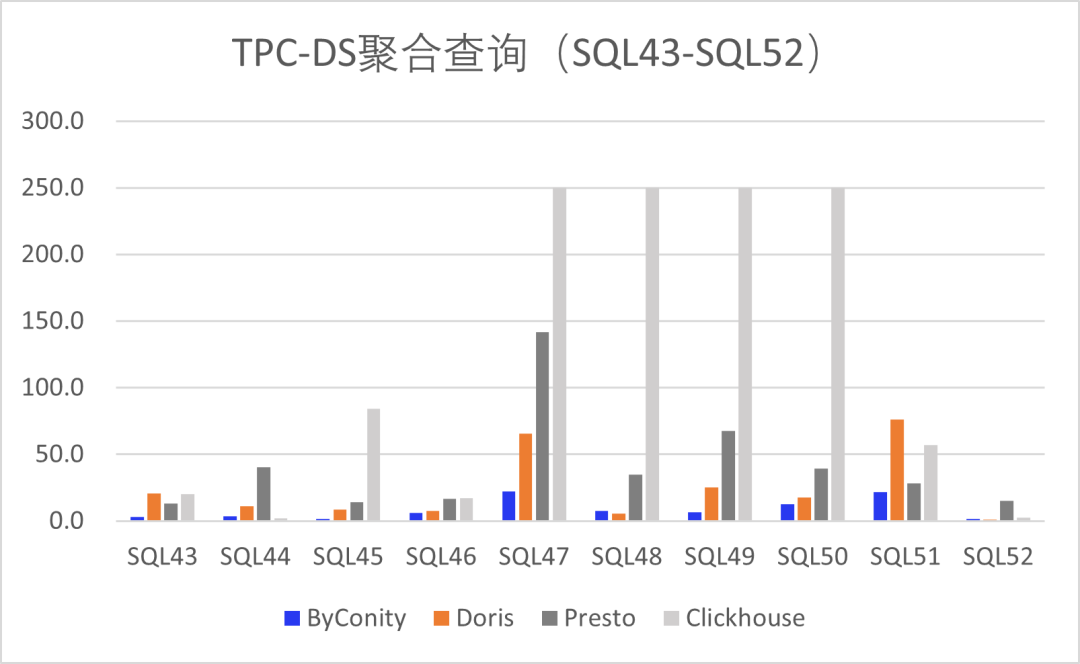
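To give a feel for what cost-based join reordering means, the toy sketch below enumerates left-deep join orders and picks the one with the smallest sum of estimated intermediate result sizes. It is a textbook-style illustration and not ByConity's optimizer; the table names are borrowed from the TPC-DS schema, while the row counts and selectivities are hypothetical.

```python
from itertools import permutations
from typing import Dict, FrozenSet, Tuple


def plan_cost(order: Tuple[str, ...],
              rows: Dict[str, float],
              selectivity: Dict[FrozenSet[str], float]) -> float:
    """Sum of estimated intermediate result sizes for a left-deep join order."""
    joined = [order[0]]
    current_rows = float(rows[order[0]])
    cost = 0.0
    for table in order[1:]:
        current_rows *= rows[table]
        # Apply every join predicate between the new table and tables already
        # joined; a missing predicate means a cross product (selectivity 1.0).
        for prev in joined:
            current_rows *= selectivity.get(frozenset((prev, table)), 1.0)
        joined.append(table)
        cost += current_rows
    return cost


def best_join_order(rows, selectivity) -> Tuple[str, ...]:
    """Exhaustively pick the cheapest left-deep order (fine for a few tables)."""
    return min(permutations(rows), key=lambda o: plan_cost(o, rows, selectivity))


# Hypothetical statistics: a large fact table and two filtered dimensions.
rows = {"store_sales": 1_000_000, "date_dim": 10, "item": 1_000}
selectivity = {
    frozenset(("store_sales", "date_dim")): 1e-4,
    frozenset(("store_sales", "item")): 1e-3,
}
best = best_join_order(rows, selectivity)
```

With these made-up statistics the cheapest orders join `store_sales` with the highly selective `date_dim` before touching `item`, which keeps the intermediate result small. Real optimizers use far richer statistics and search strategies than this exhaustive loop.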

Aggregation query scenario

Aggregation queries perform statistical calculations on data using aggregate functions such as SUM, AVG, and COUNT.

ByConity again performed well, followed by Doris and Presto. ClickHouse timed out on four queries. To make the differences easier to see, the chart caps timeout values at 250 seconds.

Figure 4 Performance comparison of TPC-DS aggregation query

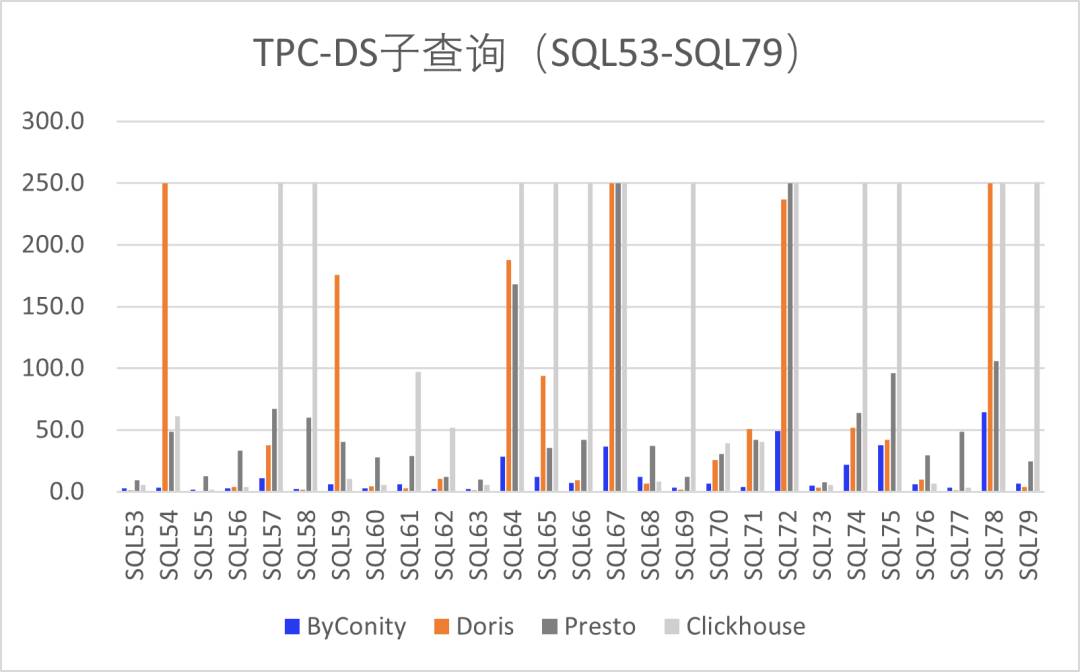

Subquery Scenario

A subquery is a query nested inside another SQL statement, usually serving as a condition or restriction of the main query.

As shown in Figure 5 below, ByConity performs best. Its query optimizer implements rule-based optimization (RBO): using techniques such as operator pushdown, column pruning, and partition pruning, it optimizes complex nested queries as a whole, replaces all subqueries, and converts common operators into Join+Agg form.

Doris and Presto also performed relatively well, but Presto timed out on SQL68 and SQL73, Doris timed out on three queries, and ClickHouse again had some timeouts and system errors for the reasons mentioned above. Here too, the chart caps timeout values at 250 seconds to make the differences easier to see.

Figure 5 Performance comparison of TPC-DS subqueries
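The idea of rewriting a subquery into Join+Agg form can be illustrated on a tiny example. The sketch below uses Python's built-in `sqlite3` module purely as a convenient SQL engine; the `sales` table and its rows are made up, and the rewrite is written by hand to show the equivalence, not produced by any of the engines tested here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (item_id INTEGER, store_id INTEGER, price REAL);
    INSERT INTO sales VALUES
        (1, 10, 5.0), (2, 10, 15.0), (3, 10, 40.0),
        (4, 20, 8.0), (5, 20, 9.0), (6, 20, 1.0);
""")

# Correlated subquery: conceptually re-evaluated for every outer row.
correlated = """
    SELECT s.item_id
    FROM sales AS s
    WHERE s.price > (SELECT AVG(s2.price) FROM sales AS s2
                     WHERE s2.store_id = s.store_id)
    ORDER BY s.item_id
"""

# Equivalent Join + Agg form: aggregate once per store, then join.
join_agg = """
    SELECT s.item_id
    FROM sales AS s
    JOIN (SELECT store_id, AVG(price) AS avg_price
          FROM sales GROUP BY store_id) AS a
      ON a.store_id = s.store_id
    WHERE s.price > a.avg_price
    ORDER BY s.item_id
"""

rows_correlated = conn.execute(correlated).fetchall()
rows_join_agg = conn.execute(join_agg).fetchall()
```

Both statements return the items priced above their store's average. The second form computes each store's average once, which is the kind of plan a distributed engine can execute with ordinary join and aggregation operators.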

Window function query scenario

Window function queries are an advanced SQL scenario in which operations such as ranking, grouping, and sorting are applied to query results.
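As a small illustration of the ranking case, the sketch below ranks items by revenue within each store. It again uses Python's built-in `sqlite3` module only as a handy SQL engine and assumes the bundled SQLite is version 3.25 or later, which is when window functions were added; the table and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (store_id INTEGER, item_id INTEGER, revenue REAL);
    INSERT INTO sales VALUES
        (10, 1, 50.0), (10, 2, 70.0), (10, 3, 20.0),
        (20, 4, 30.0), (20, 5, 90.0);
""")

# RANK() is computed per store (PARTITION BY) in descending revenue order,
# without collapsing rows the way GROUP BY would.
ranked = conn.execute("""
    SELECT store_id, item_id,
           RANK() OVER (PARTITION BY store_id ORDER BY revenue DESC) AS rnk
    FROM sales
    ORDER BY store_id, rnk
""").fetchall()
```

Each output row keeps its own `item_id` and gains a rank relative to the other rows of the same store.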

As shown in Figure 6 below, ByConity performs best, followed by Presto. Doris had a timeout, and ClickHouse still failed to complete some of the queries.

Figure 6 Performance comparison of TPC-DS window function query

Summary

This article analyzed and compared the performance of four OLAP engines, ClickHouse, Doris, Presto, and ByConity, on the 99 queries of the TPC-DS benchmark. The four engines perform differently across query scenarios.

- ByConity performs well in all 99 TPC-DS queries, surpassing the other three OLAP engines;

- Presto and Doris perform well in the join query, aggregation query, and window function query scenarios;

- Because ClickHouse's design and implementation are not specifically optimized for join queries, its overall performance on multi-table joins is unsatisfactory.

Note that performance test results depend on many factors, including data structure, query type, and data model. Choosing an OLAP engine also means weighing other factors such as scalability, ease of use, and stability.

In short, ClickHouse, Doris, Presto, and ByConity are all excellent OLAP engines with different strengths and applicable scenarios. In practice, choose an engine according to your specific business requirements, and configure and tune it appropriately to get the best performance. It is also important to select representative query scenarios and data sets and to test and analyze each scenario separately, so that the engine's performance is evaluated more comprehensively.
