Article Directory

- Foreword

- 1. Download the original dataset VQA 2.0

- 2. Download the preprocessed data

- 3. Preparation

- 4. Model

- 5. Training

- Summary

- Use of VQA related github code: [KaihuaTang/VQA2.0-Recent-Approachs-2018.pytorch](https://github.com/KaihuaTang/VQA2.0-Recent-Approachs-2018.pytorch)

Foreword

This article documents my first complete pass through working with the VQA 2.0 dataset.

I would like to thank a fellow blogger whose guidance helped me a great deal along the way.

What is VQA

The VQA task is as follows: given an image and a question, the model must answer the question based on those inputs.

The VQA task therefore has two inputs: an image and a question. Image feature extraction is not covered in detail here; a CNN backbone such as ResNet or VGG (with the final pooling and fully connected layers removed) can be used.

To extract features from the question, the text must first be converted into vectors, because the model cannot operate on raw text. Each word is mapped to a corresponding vector, and the simplest way to do this is one-hot encoding. This first requires a dictionary containing every word that can appear in the task. For example:

Start with a dictionary: ['a','is','he','boy','she','dog','school','man','woman','king','queen']

Each word can then be represented by a one-hot vector, such as 'he' -> [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]. The obvious disadvantage is that when the dictionary is very large, one-hot vectors become extremely sparse.

In that case other encodings are generally used, for example pre-trained GloVe vectors.
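The one-hot encoding described above can be sketched in a few lines of plain Python. This is only an illustration using the toy dictionary from the example; the helper name `one_hot` is hypothetical and not part of any repository code.

```python
# Toy vocabulary taken from the example above.
vocab = ['a', 'is', 'he', 'boy', 'she', 'dog', 'school', 'man', 'woman', 'king', 'queen']
word2idx = {word: idx for idx, word in enumerate(vocab)}

def one_hot(word):
    """Return a list with a single 1 at the word's dictionary index."""
    vector = [0] * len(vocab)
    vector[word2idx[word]] = 1
    return vector

print(one_hot('he'))  # [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]
```

Every vector is as long as the dictionary, which is why this representation becomes impractical for vocabularies with tens of thousands of words.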

Once the words have been converted into vectors, the vectors for the whole sentence are fed into an RNN to extract text features. The RNN is usually an LSTM or a GRU (explaining these is left for another time).

After obtaining the image and question features, the model can be trained. Training can be framed as multi-label classification, in which case the loss is a multi-label loss; cross-entropy loss can also be used.
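To make the multi-label loss more concrete, here is a minimal pure-Python sketch of binary cross-entropy averaged over candidate answers. It assumes the model outputs one logit per candidate answer and that each target is a score in [0, 1]; the function names and the example numbers are made up for illustration, and a real training loop would more likely rely on a framework routine such as PyTorch's `binary_cross_entropy_with_logits`.

```python
import math

def sigmoid(x):
    """Map a raw logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def multilabel_bce(logits, targets):
    """Mean binary cross-entropy over all candidate answers."""
    total = 0.0
    for logit, target in zip(logits, targets):
        p = sigmoid(logit)
        total += -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
    return total / len(logits)

# Hypothetical example with three candidate answers: the first is fully
# correct, the second partially correct, the third wrong.
logits = [2.0, 0.0, -2.0]
targets = [1.0, 0.3, 0.0]
loss = multilabel_bce(logits, targets)
print(round(loss, 4))
```

Unlike a softmax cross-entropy, each candidate answer is scored independently, so several answers can be treated as (partially) correct for the same question. This sketch is not numerically robust for very large logits.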

The content above is drawn from that blogger's post, which is also worth reading.

1. Download the original dataset VQA 2.0

Link to download data: https://visualqa.org/download.html

The dataset mainly has three parts:

(1)VQA Annotations:

Training:4437570

Validation:2143540

The download link of the above data: https://visualqa.org/download.html

(2)VQA Input Questions:

Training:443757

Validation:214354

Testing:447793

(3) trainval_annotation and trainval_question:

In addition, to make it easier to train on the entire trainval split and generate a result.json file for upload to the VQA 2.0 online evaluation server, others have already merged the question and annotation JSON files for the train and validation splits.

The download link is as follows:

(4)VQA Input Images:

Training:82783

Validation:40504

Testing:81434

In other words, each image has about 5 questions, and each question has about 10 answers.
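The per-image and per-question ratios follow directly from the counts listed above. A quick check in Python, using only those numbers:

```python
# Counts copied from the lists above.
counts = {
    'train': {'images': 82783, 'questions': 443757, 'answers': 4437570},
    'val': {'images': 40504, 'questions': 214354, 'answers': 2143540},
}

for split, c in counts.items():
    questions_per_image = c['questions'] / c['images']
    answers_per_question = c['answers'] / c['questions']
    print(f"{split}: {questions_per_image:.2f} questions/image, "
          f"{answers_per_question:.0f} answers/question")
# train: 5.36 questions/image, 10 answers/question
# val: 5.29 questions/image, 10 answers/question
```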

For the analysis of the dataset, refer to this blog post

2. Download the preprocessed data

1. Download the pre-trained GloVe word vectors

wget -P data http://nlp.stanford.edu/data/glove.6B.zip

unzip data/glove.6B.zip -d data/glove

rm data/glove.6B.zip

This yields word vectors in four dimensionalities: 50d, 100d, 200d, and 300d (the 50d file, for example, maps each word to a 50-dimensional vector). Choose whichever fits your needs.

Purpose: the word vectors convert every word that can appear in the task into a vector, which is then fed into the RNN to extract text features, since words cannot be fed into an RNN directly. Note: if you prefer not to use someone else's pre-trained word vectors, you can train your own.
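Each GloVe text file stores one word per line, followed by its vector components separated by spaces. A minimal parsing sketch is shown below; the function name `load_glove` is hypothetical, and the in-memory sample with 3-dimensional vectors is a made-up stand-in for a real file such as glove.6B.50d.txt.

```python
import io

def load_glove(lines):
    """Parse GloVe text lines into a {word: [float, ...]} mapping."""
    word2emb = {}
    for line in lines:
        parts = line.rstrip().split(' ')
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        word2emb[parts[0]] = [float(x) for x in parts[1:]]
    return word2emb

# Made-up 3-dimensional sample standing in for a real GloVe file.
sample = io.StringIO("the 0.1 0.2 0.3\ndog -0.5 0.0 0.25\n")
word2emb = load_glove(sample)
print(word2emb['dog'])  # [-0.5, 0.0, 0.25]
```

With the real download, the same function could be given an open file handle instead of the `io.StringIO` sample, assuming the file is read as UTF-8 text.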

2. Download annotation and question

# Questions

wget -P data https://s3.amazonaws.com/cvmlp/vqa/mscoco/vqa/v2_Questions_Train_mscoco.zip

unzip data/v2_Questions_Train_mscoco.zip -d data

rm data/v2_Questions_Train_mscoco.zip

wget -P data https://s3.amazonaws.com/cvmlp/vqa/mscoco/vqa/v2_Questions_Val_mscoco.zip

unzip data/v2_Questions_Val_mscoco.zip -d data

rm data/v2_Questions_Val_mscoco.zip

wget -P data https://s3.amazonaws.com/cvmlp/vqa/mscoco/vqa/v2_Questions_Test_mscoco.zip

unzip data/v2_Questions_Test_mscoco.zip -d data

rm data/v2_Questions_Test_mscoco.zip

# Annotations

wget -P data https://s3.amazonaws.com/cvmlp/vqa/mscoco/vqa/v2_Annotations_Train_mscoco.zip

unzip data/v2_Annotations_Train_mscoco.zip -d data

rm data/v2_Annotations_Train_mscoco.zip

wget -P data https://s3.amazonaws.com/cvmlp/vqa/mscoco/vqa/v2_Annotations_Val_mscoco.zip

unzip data/v2_Annotations_Val_mscoco.zip -d data

rm data/v2_Annotations_Val_mscoco.zip

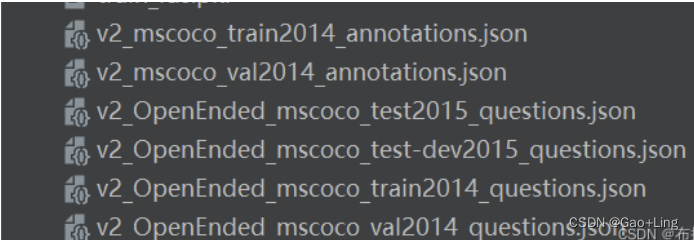

This yields 6 JSON files.

To learn the data structure of the JSON files, see this blog post.

3. Download the pre-trained image features

Reference link: https://github.com/peteanderson80/bottom-up-attention#training

# Image Features # resnet101_faster_rcnn_genome

wget -P data https://imagecaption.blob.core.windows.net/imagecaption/trainval.zip

wget -P data https://imagecaption.blob.core.windows.net/imagecaption/test2014.zip

wget -P data https://imagecaption.blob.core.windows.net/imagecaption/test2015.zip

unzip data/trainval.zip -d data

unzip data/test2014.zip -d data

unzip data/test2015.zip -d data

rm data/trainval.zip

rm data/test2014.zip

rm data/test2015.zip

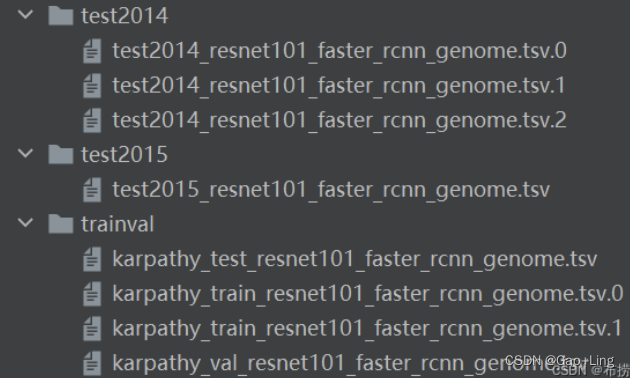

The pre-trained features are used directly here, so there is no need to run the images through a CNN yourself. They were extracted with a Faster R-CNN model that uses ResNet-101 as its backbone and was pre-trained on the Visual Genome dataset.

The resulting file:

3. Preparation

The code used is in the tool folder

1. Create a dictionary and use the pre-trained GloVe vectors (create_dictionary.py)

First, we need to create a dictionary containing every word that appears, so that the words can then be converted into GloVe word vectors. Load the question file, take out the "question" part, and from each entry take the question string (this is a little convoluted, but it becomes clear once you look at the data structure of the question file: we extract what we need layer by layer). That gives all the questions. From them, extract the words, convert them to lowercase and so on, and remove duplicates; the result is every word that can appear in the task.

dataroot = '../data' if args.task == 'vqa' else 'data/flickr30k'

dictionary_path = os.path.join(dataroot, 'dictionary.pkl')

d = create_dictionary(dataroot, args.task) # data,vqa

# <dataset.Dictionary object at 0x00000217857C7C70>

d.dump_to_file(dictionary_path) # dictionary containing all words

d = Dictionary.load_from_file(dictionary_path)

This creates the dictionary, which lets you look up word-to-index (word2idx) and index-to-word (idx2word) mappings:

print(d.idx2word) # all words: ['what', 'is', 'this',..., 'pegasus', 'bebe']

print(len(d.idx2word)) # 19901 words in total

print(d.word2idx) # {'what': 0, 'is': 1, 'this': 2,...,'pegasus': 19899, 'bebe': 19900}
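As a rough illustration of what dictionary creation does, the sketch below builds word2idx and idx2word from a tiny in-memory question set. `ToyDictionary` is a hypothetical, simplified stand-in and not the repository's Dictionary class; the sample data assumes the question file has a top-level `questions` list whose entries carry a `question` string.

```python
import re

class ToyDictionary:
    """Hypothetical, simplified stand-in for the repo's Dictionary class."""

    def __init__(self):
        self.word2idx = {}
        self.idx2word = []

    def tokenize(self, sentence, add_word=True):
        # Lowercase, replace commas and question marks with spaces, then split.
        words = re.sub(r"[,?]", " ", sentence.lower()).split()
        tokens = []
        for word in words:
            if word not in self.word2idx:
                if not add_word:
                    raise KeyError(word)
                self.word2idx[word] = len(self.idx2word)
                self.idx2word.append(word)
            tokens.append(self.word2idx[word])
        return tokens

# Made-up sample mimicking the assumed nesting of the question JSON.
question_file = {"questions": [
    {"question_id": 1, "image_id": 10, "question": "What is this?"},
    {"question_id": 2, "image_id": 11, "question": "Is this a dog?"},
]}

d = ToyDictionary()
for entry in question_file["questions"]:
    d.tokenize(entry["question"])

print(d.idx2word)         # ['what', 'is', 'this', 'a', 'dog']
print(d.word2idx['dog'])  # 4
```

Repeated words are stored only once, so the dictionary size equals the number of distinct lowercase tokens across all questions.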

A dictionary alone is not enough. What goes into the model for training must be tensors or vectors, not raw text, so the words need to be converted into word vectors. This is really preparation for the word embedding layer:

# Use GloVe

glove_file = '../data/glove/glove.6B.%dd.txt' % emb_dim # load the pre-trained vectors, e.g. data/glove/glove.6B.300d.txt

weights, word2emb = create_glove_embedding_init(d.idx2word, glove_file)

np.save(os.path.join(dataroot, 'glove6b_init_%dd.npy' % emb_dim), weights)

weights holds the pre-trained vectors for our dictionary. There are 19901 words and each is converted into a 300-dimensional vector, so weights.shape is [19901, 300].
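The idea behind building such a weight matrix can be sketched as follows. This is not the repository's create_glove_embedding_init; it is a simplified illustration that assumes words missing from the GloVe vocabulary keep an all-zero row, and it uses plain lists and made-up 2-dimensional vectors instead of NumPy arrays.

```python
def init_embedding(idx2word, word2emb, emb_dim):
    """Build one row per dictionary word; unknown words stay all-zero."""
    weights = [[0.0] * emb_dim for _ in idx2word]
    for idx, word in enumerate(idx2word):
        if word in word2emb:
            weights[idx] = list(word2emb[word])
    return weights

# Made-up toy data: 3 dictionary words, 2-dimensional vectors.
idx2word = ['what', 'is', 'bebe']
word2emb = {'what': [0.1, 0.2], 'is': [0.3, 0.4]}  # 'bebe' has no vector
weights = init_embedding(idx2word, word2emb, emb_dim=2)

print(len(weights), len(weights[0]))  # 3 2
print(weights[2])                     # [0.0, 0.0]
```

Row i of the matrix corresponds to idx2word[i], so the matrix can be used to initialize an embedding layer that is indexed by the dictionary's word indices.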

2. Annotation processing (compute_softscore.py)

4. Model

To be updated...

5. Training

To be updated...

Summary

I am a beginner in VQA. If you spot any mistakes, please point them out and explain.

Use of VQA related github code: KaihuaTang/VQA2.0-Recent-Approachs-2018.pytorch

1. Dataset download and path processing

Put the dataset under the /data/ path, or change the dataset path in config.py. Also set output_size=36 in config.py.

2. Data preprocessing

preprocess_features.py

preprocess_vocab.py

3. Train

command line: python train.py --trainval

4. Test

command line: python train.py --test --resume=./logs/model.pth