Foreword

If you can build a ChatGPT-like system locally and train an AI dialogue system on your own knowledge base, you can handle the specialized knowledge of your field efficiently, and even train an AI that thinks like a professional and draws on industry domain knowledge. That would be a very efficient productivity tool. Local deployment also protects the privacy of personal data and makes it convenient to use the system inside an office intranet.

ChatGLM's biggest advantage is that it can be deployed locally quickly and needs far fewer resources than ChatGPT. We don't need anything fancier; it is good enough. It can even be deployed on a single high-performance machine, and at the INT4 quantization level it needs only 6 GB of GPU memory. We don't need such broad knowledge coverage anyway: depth in one vertical domain is enough to handle more business scenarios, so ChatGLM is indeed a good base for secondary development.

That's enough opinion. Deploying ChatGLM locally still runs into many problems. I suggest you first pin down your local development environment and hardware configuration, then compare them against the component compatibility table I give, because different configurations and environments need different installation strategies. Of course, I have deployed on only one computer, so there are many situations I haven't encountered and don't know how to handle. If you run into difficulties following this article, please let me know in the comments or by private message. Thank you for your support.

Deployment dependencies

1. Hardware requirements

This is the ChatGLM open-source project: https://github.com/Fanstuck/ChatGLM-6B. Its README lists the hardware requirements. These are hard requirements: if your machine doesn't meet them, the deployment will not work, and the only option is to upgrade your hardware.
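As a quick self-check, here is a minimal sketch that picks the highest precision your GPU can hold. It assumes the inference thresholds from the hardware table in the official ChatGLM-6B README (about 13 GB for FP16, 8 GB for INT8, 6 GB for INT4); verify them against the current README, and note that fine-tuning needs somewhat more.

```python
from typing import Optional

# Minimum GPU memory (GB) for inference at each precision, assumed from the
# hardware table in the ChatGLM-6B README; check the README before relying on it.
MIN_VRAM_GB = [
    ("FP16", 13),
    ("INT8", 8),
    ("INT4", 6),
]


def pick_precision(vram_gb: float) -> Optional[str]:
    """Return the highest precision that fits in vram_gb, or None if none fits."""
    for level, required in MIN_VRAM_GB:  # ordered from most to least memory
        if vram_gb >= required:
            return level
    return None  # too little GPU memory: consider CPU inference instead


if __name__ == "__main__":
    for vram in (24, 10, 6, 4):
        print(f"{vram} GB -> {pick_precision(vram)}")
```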

2. Environment requirements

Next, look at the dependency file requirements.txt:

Many people jump straight to pip install -r requirements.txt at this point, but watch out: torch is likely to cause problems, because there is a high chance that pip installs the CPU-only build. I don't recommend a plain pip install here. If you have a GPU, you definitely want the GPU build of torch, and many people don't realize that the torch and cuDNN builds they download may not be compatible with their CUDA version. So this section covers separately how to install a suitable version of torch.
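One way to keep pip from pulling in the wrong torch is to install everything except torch from requirements.txt, then install torch by hand. A minimal sketch, assuming requirements.txt uses one simple requirement per line; the output file name requirements-no-torch.txt is hypothetical.

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches the distribution name at the start of a requirement line,
# e.g. "torch" in "torch>=1.10".
NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def split_torch(text: str) -> tuple[list[str], list[str]]:
    """Split requirement lines into (everything else, torch lines)."""
    others: list[str] = []
    torch_lines: list[str] = []
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        match = NAME_RE.match(stripped)
        name = match.group(1).lower() if match else ""
        (torch_lines if name == "torch" else others).append(stripped)
    return others, torch_lines


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.exists():
        others, torch_lines = split_torch(req.read_text(encoding="utf-8"))
        # Hypothetical file name; then run: pip install -r requirements-no-torch.txt
        Path("requirements-no-torch.txt").write_text(
            "\n".join(others) + "\n", encoding="utf-8"
        )
        print("Install these separately with a CUDA build:", torch_lines)
```

Matching on the exact package name means packages such as torchvision are left alone.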

First check the highest CUDA version your NVIDIA driver supports.

Run nvidia-smi in cmd to see it:

It shows that my CUDA version is 11.7, so any PyTorch build for CUDA 11.7 or lower will work.
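If you prefer to check this from a script, here is a minimal sketch. It assumes nvidia-smi is on PATH (normally the case once the NVIDIA driver is installed) and that its header contains a "CUDA Version: X.Y" field; that text format is an observed convention, not a documented interface.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# nvidia-smi prints a header line containing e.g. "CUDA Version: 11.7".
CUDA_RE = re.compile(r"CUDA Version:\s*(\d+)\.(\d+)")


def parse_cuda_version(smi_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) of the highest CUDA version the driver supports."""
    match = CUDA_RE.search(smi_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def driver_cuda_version() -> Optional[Tuple[int, int]]:
    """Run nvidia-smi if it is on PATH; return None when it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(["nvidia-smi"], capture_output=True, text=True)
    return parse_cuda_version(result.stdout)


if __name__ == "__main__":
    print(driver_cuda_version())
```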

Note, however, that each torch release is built against specific CUDA versions, so the two must match.

PyTorch previous versions download page:

https://pytorch.org/get-started/previous-versions/ . Any torch build whose CUDA version is not higher than 11.7 will work.
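To make the matching rule concrete, here is a small sketch that picks the newest CUDA wheel tag (the cuXXX suffix on PyTorch builds, e.g. cu117) that does not exceed the driver's CUDA version. The tag list passed in is only an example; read the tags actually offered for your torch version from the previous-versions page.

```python
from typing import Iterable, Optional, Tuple


def tag_to_version(tag: str) -> Tuple[int, int]:
    """Convert a PyTorch CUDA wheel tag such as 'cu117' to (11, 7)."""
    digits = tag[2:]
    if not tag.startswith("cu") or not digits.isdigit() or len(digits) < 2:
        raise ValueError(f"not a CUDA wheel tag: {tag!r}")
    # The last digit is the minor version: cu117 -> 11.7, cu102 -> 10.2
    return int(digits[:-1]), int(digits[-1])


def best_tag(driver: Tuple[int, int], available: Iterable[str]) -> Optional[str]:
    """Pick the newest CUDA wheel tag that does not exceed the driver's version."""
    compatible = [t for t in available if tag_to_version(t) <= driver]
    return max(compatible, key=tag_to_version, default=None)


if __name__ == "__main__":
    # Example tag list only; check the real list for your torch version.
    print(best_tag((11, 7), ["cu102", "cu113", "cu116", "cu117", "cu118"]))
```

With a driver reporting CUDA 11.7, this returns "cu117": cu118 is skipped because it is newer than the driver supports.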

If pip times out while downloading, download the wheel manually from: https://download.pytorch.org/whl/torch_stable.html

Model download

There are two ways to download ChatGLM. The first is to download it through transformers: running the code below downloads it locally. The default local download directory is:

C:\Users\<username>\.cache\huggingface\modules\transformers_modules

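```python
from transformers import AutoTokenizer, AutoModel

# ChatGLM ships its own model code, so trust_remote_code=True is required.
# The first run downloads the files from the Hugging Face Hub into the local cache.
tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)
# Load the weights in FP16 (.half()) and move them to the GPU (.cuda()).
model = AutoModel.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True).half().cuda()
model = model.eval()  # switch to inference mode
```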
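The cache location can be moved off the C: drive. A minimal sketch of how the default path above is formed, assuming the Hugging Face convention that the HF_HOME environment variable, if set, replaces ~/.cache/huggingface; this simplification ignores other variables such as XDG_CACHE_HOME and HF_MODULES_CACHE. Setting HF_HOME before transformers is imported moves both this code cache and the downloaded weights, which are cached under the same base directory; alternatively, from_pretrained accepts a cache_dir argument.

```python
import os
from pathlib import Path


def transformers_modules_dir() -> Path:
    """Simplified guess at where transformers stores remote code such as ChatGLM's."""
    # HF_HOME overrides the default ~/.cache/huggingface base directory.
    # (Simplification: XDG_CACHE_HOME and HF_MODULES_CACHE are ignored here.)
    hf_home = os.environ.get("HF_HOME")
    base = Path(hf_home) if hf_home else Path.home() / ".cache" / "huggingface"
    return base / "modules" / "transformers_modules"


if __name__ == "__main__":
    print(transformers_modules_dir())
```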

The second way, if that download is too slow, is to download the files from the Hugging Face Hub: https://huggingface.co/THUDM/chatglm-6b

You don't need to fork the repository; just clone it directly. The project also provides pre-quantized versions for download. I downloaded the INT4 version, because my machine has only 16 GB of RAM and 128 MB of video memory and cannot handle the original model.

With this approach the files don't have to go to the C: drive; you can choose the download directory yourself.

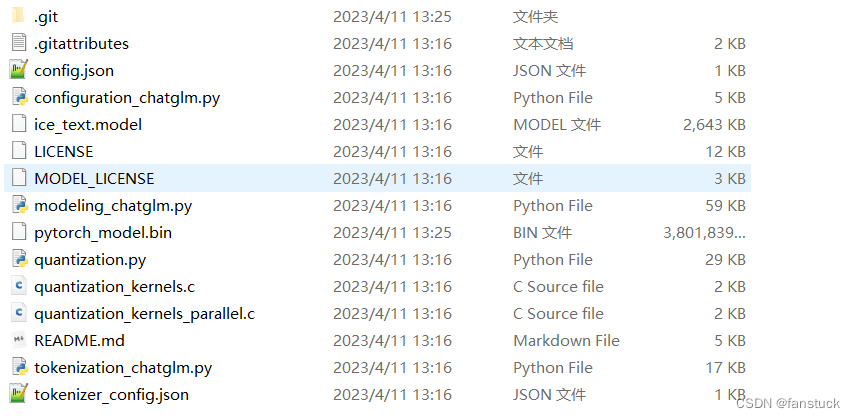

After the download is complete, you can test the two demos. Replace "THUDM/chatglm-6b" in AutoTokenizer.from_pretrained (the pretrained_model_name_or_path argument), and likewise in AutoModel.from_pretrained, with the directory where you downloaded ChatGLM, and then you can use it.
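A wrong local path is easy to miss, so it can help to check the directory before loading. A minimal sketch: check_model_dir is a hypothetical helper, and it assumes a downloaded Hugging Face model directory contains a config.json file, which is the usual layout. It fails early instead of letting a bad path fall through to a Hub lookup.

```python
from pathlib import Path


def check_model_dir(local_dir: str) -> str:
    """Return local_dir as a string if it looks like a downloaded model, else raise."""
    path = Path(local_dir).expanduser()
    # A downloaded Hugging Face model directory normally contains config.json.
    if not (path / "config.json").is_file():
        raise FileNotFoundError(
            f"no config.json in {path}; is this the ChatGLM download directory?"
        )
    return str(path)


if __name__ == "__main__":
    # Hypothetical directory: replace it with wherever you saved ChatGLM.
    try:
        model_path = check_model_dir(r"D:\models\chatglm-6b-int4")
    except FileNotFoundError as err:
        print(err)
    else:
        print("Load with:", model_path)
        # Use the same path for both loaders, e.g.:
        # tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
        # model = AutoModel.from_pretrained(model_path, trust_remote_code=True).half().cuda()
```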

If your GPU memory is limited, you can load the model with quantization, for example:

```python
# Modify as needed; currently only 4-bit and 8-bit quantization are supported
model = AutoModel.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True).quantize(4).half().cuda()
```

Quantization causes some loss in model quality, but in testing, ChatGLM-6B still generates natural, fluent text under 4-bit quantization.

Quantizing this way first loads the FP16 model into memory, which takes about 13 GB of RAM. If you don't have that much memory, you can load the pre-quantized model directly, which needs only about 5.2 GB:

```python
model = AutoModel.from_pretrained("THUDM/chatglm-6b-int4", trust_remote_code=True).half().cuda()
```

Because I downloaded the INT4 model directly, I don't need to load the full model first.

If you don't have GPU hardware, you can also run inference on the CPU, though it is slower. Load the model as follows; this needs about 32 GB of RAM:

```python
model = AutoModel.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True).float()
```
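The memory figures quoted in this section follow roughly from parameter count times bytes per parameter. A back-of-the-envelope sketch, assuming the roughly 6.2 billion parameters stated in the ChatGLM-6B README: FP32 gives about 24.8 GB, FP16 about 12.4 GB, and INT4 about 3.1 GB for the weights alone. Real usage is higher (about 32 GB, 13 GB and 5.2 GB as quoted) because of activations and runtime overhead, and, for the INT4 checkpoint, likely because some parts of the model stay at higher precision.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in GB (10**9 bytes); excludes all overhead."""
    return num_params * bits_per_param / 8 / 1e9


CHATGLM_6B_PARAMS = 6.2e9  # parameter count stated in the ChatGLM-6B README

if __name__ == "__main__":
    for label, bits in (("FP32 (CPU)", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)):
        print(f"{label:>10}: {weight_memory_gb(CHATGLM_6B_PARAMS, bits):.1f} GB")
```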

Model use

On my computer, about 11 GB of RAM is normally already in use, so running this model will definitely report OOM (out of memory). I recommend killing any processes you don't need for now and freeing as much memory as possible before running the model:

I also recommend running it from a terminal rather than inside PyCharm, which leaves more memory free:
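Before launching, you can check whether enough RAM is actually free. A minimal sketch, assuming the third-party psutil package (its virtual_memory().available field reports memory that can be handed to new processes); the 1 GB headroom default is a hypothetical safety margin, and 5.2 GB is the figure quoted above for loading the INT4 model directly.

```python
from typing import Optional


def enough_ram(available_bytes: int, required_gb: float, headroom_gb: float = 1.0) -> bool:
    """True if available RAM covers the requirement plus a safety margin."""
    return available_bytes / 1e9 >= required_gb + headroom_gb


def available_ram_bytes() -> Optional[int]:
    """Available system RAM via psutil (third-party); None if psutil is missing."""
    try:
        import psutil
    except ImportError:
        return None
    return psutil.virtual_memory().available


if __name__ == "__main__":
    avail = available_ram_bytes()
    if avail is None:
        print("pip install psutil to check free memory")
    else:
        ok = enough_ram(avail, required_gb=5.2)  # INT4 figure quoted above
        print(f"{avail / 1e9:.1f} GB available ->", "OK" if ok else "close some programs first")
```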

You can also run web_demo.py to chat with the model in a web page:

On my machine this runs out of memory (OOM) immediately, so there is nothing more I can do here. On a better-equipped computer it should run without problems.