This article was contributed by a member of the Shengwang RTE developer community. The author is @arige.

Goals

1. Set goals

Let's think about this scenario:

You walk into a coffee shop, see an attractive woman or man, and want to get their WeChat ID so that you can stay in touch.

So how do we do it?

Walk up to the other person and say quietly: "Hello, you look a lot like my ex-girlfriend (ex-boyfriend). May I add you on WeChat?"

The other person may quietly reply, "I'll scan your code."

At this point, you need to show them your QR code.

When the relationship goes well and you are ready to propose, you may want a more romantic setting, and you may hope that more people can see and hear the moment.

From the scenes imagined above, we can identify some basic elements:

- Changeable scenes (the coffee shop and the proposal scene)

- Independent avatar customization (after all, appearance is where love at first sight begins)

- One-on-one chat (you can't ask a whole group of people for their WeChat IDs at once, or you may be taken for a hooligan and beaten)

- File sharing (show your QR code to the other person so that they can add you as a friend)

- Group chat and group video (show the proposal scene to other users)

2. Break down the goals

To meet the requirements listed above, we need the following functions:

- Convenient Unity integration. Unity is a very good 3D engine, and if it can be integrated easily, there is more room to expand when creating scenes and characters later.

- 1 vs 1 video, or a one-to-many live mode, to cover both one-on-one chat and group chat.

- Synchronizing a local video, or a remote video that is played locally, to other users, to cover sharing the QR code.

3. Effect preview

Fully implementing a complete project would take a lot of time. I found that the current Shengwang demo provides simple character models and materials, and that it is fairly open: developers can substitute their own custom models and materials as needed. To meet the requirements above, I made only some minor modifications. This time we mainly look at the effect and build up some technical groundwork for a later implementation in a production environment.

The following shows the result after my adjustments to the demo.

This is the coffee shop, implemented with Unity:

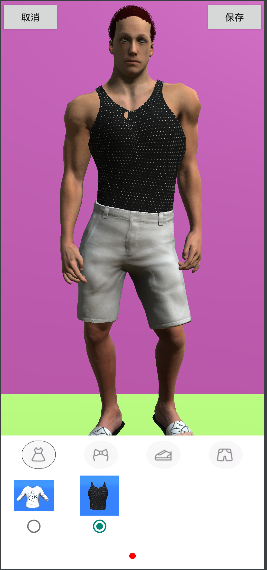

This is a clothing store, where we can change the characters' clothes. It is also implemented with Unity.

Both of the above are very easy to integrate. The demo works well with Unity, and if necessary we can quickly plug in our own Unity scenes later.

This is the result of my own 1 vs 1 video test.

This is the effect of sharing a local video with the other party in my own test.

Imagine: could you watch a TV drama together with your partner this way?

Simple implementation

The effects above are all built on the Shengwang SDK. If you want to try it or use it, read on for my integration process and the problems and solutions I encountered along the way.

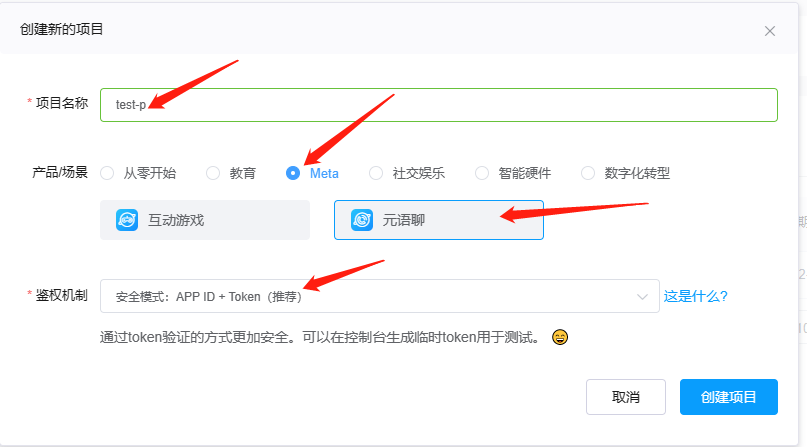

1. Account preparation

Since we use the Shengwang SDK, we need to register a Shengwang account and configure it.

- Register an account

- Complete real-name verification

- Create a project

- Configure the project

Then open the detailed configuration.

Generate a token, then copy and save the appid, certificate, and token.

Note: this is very important. Read it carefully, because these values will be used in the project.

At this point, the account preparation is complete.

2. Configure the project

1. Get the demo source code.

2. Contact customer service through the Shengwang official website to apply for the Metaverse SDK, the Unity project files, and the development guide, and apply for usage permission at the same time. For details, visit shengwang.cn.

3. Decompress the SDK and configure it in the project. Pay attention to the directory: if the target directory does not exist in your own project, you need to create it yourself.

4. Configure the IDs. Copy the appid and certificate that you obtained in the console into /Android/local.properties. Under normal circumstances this file is not uploaded to the remote repository, mainly for security reasons. The entries are as follows:

5. Configure the channel. Write the channel name that you entered when generating the token in the console into the class, as follows:

6. Configure the project permissions

    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.CAMERA" />
    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />
    <uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
    <uses-permission android:name="android.permission.BLUETOOTH" />
    <!-- On devices running Android 12.0 or later, the following permission is also required: -->
    <uses-permission android:name="android.permission.BLUETOOTH_CONNECT" />

Note: Manifest.permission.RECORD_AUDIO and Manifest.permission.CAMERA must be requested dynamically at runtime; otherwise the video function will not work properly.

The SDK itself does not request permissions, so we need to request them on its behalf at an appropriate point.
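The decision about which permissions still need a runtime request can be kept independent of Android, which makes it easy to check. The following is a minimal sketch, not the demo's code: `PermissionPlanner` and `missing` are hypothetical names, and the `isGranted` predicate stands in for a real check such as comparing `ContextCompat.checkSelfPermission(...)` with `PackageManager.PERMISSION_GRANTED`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical helper: decides which permissions still need a runtime request. */
final class PermissionPlanner {
    // The two permissions that the note above says must be requested dynamically.
    static final List<String> REQUIRED = Arrays.asList(
            "android.permission.RECORD_AUDIO",
            "android.permission.CAMERA");

    private PermissionPlanner() {}

    /**
     * Returns the required permissions that are not yet granted, in declaration order.
     * On Android, isGranted would wrap ContextCompat.checkSelfPermission (assumption).
     */
    static List<String> missing(Predicate<String> isGranted) {
        List<String> result = new ArrayList<>();
        for (String permission : REQUIRED) {
            if (!isGranted.test(permission)) {
                result.add(permission);
            }
        }
        return result;
    }
}
```

In an Activity, a non-empty result would typically be passed to `ActivityCompat.requestPermissions` before the engine is created, and the video page would be started only after the user grants both permissions.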

- Obfuscation configuration

Cafe

Character creation

Clothing Store

Here the effects provided by Shengwang are used directly. If you need them for your own project, you can adjust them yourself.

Changing clothes and pinching faces (face customization) require some modifications in Unity and communication between native code and Unity. For the whole process, refer to "changing clothes and pinching faces".

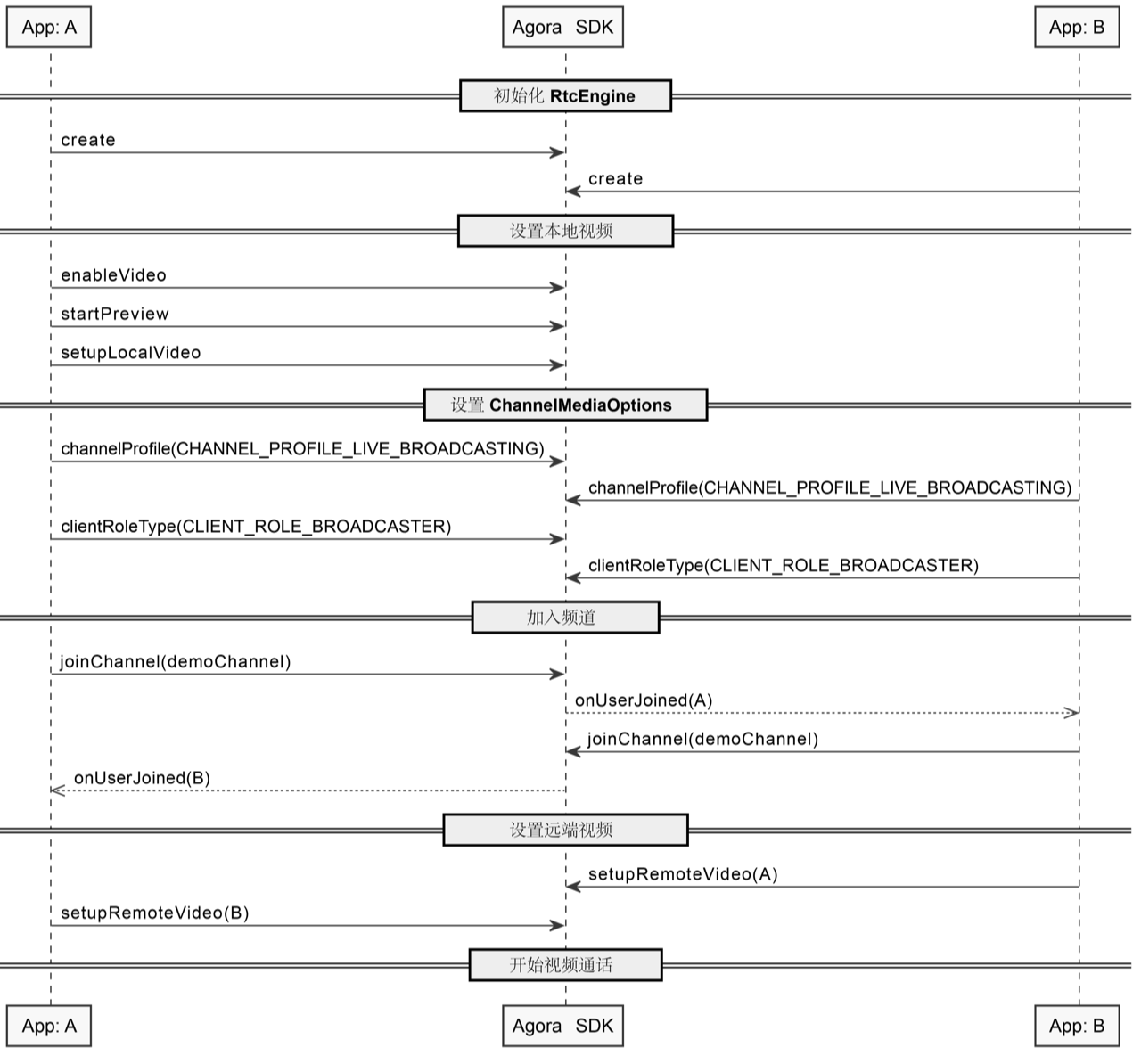

3. 1 vs 1 video

The overall project setup was completed in the previous steps, and the Unity content is already running, so let's see how to implement 1 vs 1 video. For convenience, I wrote a separate page to handle 1 vs 1 video. The effect is as follows:

Video chat

I suggest reading this flowchart several times. When we run into problems, the diagram can point us toward a solution.

Detailed process:

- Create an RtcEngine object

This object manages the entire video scenario and is the core object.

    try {
        RtcEngineConfig config = new RtcEngineConfig();
        config.mContext = getBaseContext();
        config.mAppId = appId;
        config.mEventHandler = mRtcEventHandler;
        config.mAudioScenario = Constants.AudioScenario.getValue(Constants.AudioScenario.DEFAULT);
        mRtcEngine = RtcEngine.create(config);
    } catch (Exception e) {
        // Keep the original exception as the cause so the real error is not lost.
        throw new RuntimeException("Failed to create RtcEngine. Check the error.", e);
    }

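- Configure the RtcEngine

    // Video is disabled by default; call enableVideo to enable the video stream.
    mRtcEngine.enableVideo();
    // Audio recording is disabled by default; call enableAudio to enable it.
    mRtcEngine.enableAudio();
    // Start the local video preview.
    mRtcEngine.startPreview();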

- Display the local camera content in local_video_view_container

    FrameLayout container = findViewById(R.id.local_video_view_container);
    // Create a SurfaceView object and add it as a child of the FrameLayout.
    SurfaceView surfaceView = new SurfaceView(getBaseContext());
    container.addView(surfaceView);
    // Pass the SurfaceView object to Shengwang to render the local video.
    mRtcEngine.setupLocalVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, KeyCenter.RTC_UID));

- Set the current mode

    ChannelMediaOptions options = new ChannelMediaOptions();
    // Set the user role to BROADCASTER.
    options.clientRoleType = Constants.CLIENT_ROLE_BROADCASTER;
    // In the video call scenario, set the channel profile to BROADCASTING.
    options.channelProfile = Constants.CHANNEL_PROFILE_LIVE_BROADCASTING;

clientRoleType has two possible values. With CLIENT_ROLE_BROADCASTER, the user can both broadcast and receive. With CLIENT_ROLE_AUDIENCE, the user can only watch. The code above uses the broadcaster (host) role.
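The difference between the two roles can be summarized in a small model. This is only an illustration of the rule just described, not SDK code: `ChannelRole`, `canPublish`, `canSubscribe`, and `roleFor` are hypothetical names, and mapping them onto `Constants.CLIENT_ROLE_BROADCASTER` and `Constants.CLIENT_ROLE_AUDIENCE` is left to the caller.

```java
/** Hypothetical model of the two client roles described above. */
enum ChannelRole {
    BROADCASTER(true),   // sends its own audio and video, and receives others'
    AUDIENCE(false);     // receives only

    private final boolean canPublish;

    ChannelRole(boolean canPublish) {
        this.canPublish = canPublish;
    }

    boolean canPublish() {
        return canPublish;
    }

    /** Both roles can watch; only broadcasters can send. */
    boolean canSubscribe() {
        return true;
    }

    /**
     * In a one-on-one call both sides are hosts and publish. In a one-to-many
     * broadcast only the hosts publish, and everyone else joins as audience.
     */
    static ChannelRole roleFor(boolean isHost) {
        return isHost ? BROADCASTER : AUDIENCE;
    }
}
```

For the coffee shop chat both users would be hosts, while in the proposal scene the couple would be hosts and the onlookers would join as audience.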

- Join the channel

    // Join the channel with a temporary token.
    // You need to specify the user ID yourself and make sure it is unique within the channel.
    int res = mRtcEngine.joinChannel(token, channelName, KeyCenter.RTC_UID, options);
    if (res != 0) {
        // Usually happens with invalid parameters.
        // Error code descriptions can be found at:
        // en: https://docs.agora.io/en/Voice/API%20Reference/java/classio_1_1agora_1_1rtc_1_1_i_rtc_engine_event_handler_1_1_error_code.html
        // cn: https://docs.agora.io/cn/Voice/API%20Reference/java/classio_1_1agora_1_1rtc_1_1_i_rtc_engine_event_handler_1_1_error_code.html
        Log.e("video", "join err:" + RtcEngine.getErrorDescription(Math.abs(res)));
    }

The joinChannel method returns a value that shows whether the join request succeeded. If it did not, check the cause of the error and fix it accordingly. If it did, watch the callbacks of the IRtcEngineEventHandler object, mainly onError(int err) and onJoinChannelSuccess(String channel, int uid, int elapsed). Once onJoinChannelSuccess has been received, turn to onUserJoined(int uid, int elapsed), where we can start displaying the remote content.
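The sequence just described (check the return value, wait for onJoinChannelSuccess, then react to onUserJoined) can be sketched as a small state tracker. This is a plain-Java illustration under assumptions, not the SDK's handler: `JoinFlow`, its `State` enum, and `onJoinRequested` are hypothetical names, and only the callback names and parameter lists mirror IRtcEngineEventHandler.

```java
import java.util.LinkedHashSet;
import java.util.Set;

/** Hypothetical tracker for the join sequence; not part of the Shengwang SDK. */
final class JoinFlow {
    enum State { IDLE, JOINING, JOINED, FAILED }

    private State state = State.IDLE;
    private final Set<Integer> remoteUids = new LinkedHashSet<>();

    /** Feed in the value returned by joinChannel: 0 means the request was accepted. */
    void onJoinRequested(int returnCode) {
        state = (returnCode == 0) ? State.JOINING : State.FAILED;
    }

    /** Mirrors onJoinChannelSuccess: the local user is now in the channel. */
    void onJoinChannelSuccess(String channel, int uid, int elapsed) {
        if (state == State.JOINING) {
            state = State.JOINED;
        }
    }

    /** Mirrors onError: an error before joining completes counts as a failed join. */
    void onError(int err) {
        if (state == State.JOINING) {
            state = State.FAILED;
        }
    }

    /**
     * Mirrors onUserJoined. Returns true when the caller should set up a remote
     * view for this uid, that is, we are joined and have not seen the uid before.
     */
    boolean onUserJoined(int uid, int elapsed) {
        return state == State.JOINED && remoteUids.add(uid);
    }

    State state() {
        return state;
    }
}
```

Tracking the uids that have already been seen is a design choice of this sketch: it avoids adding a second view if the same remote user is reported twice.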

- Display the remote content

    // Listen for remote broadcasters in the channel and get each broadcaster's uid.
    @Override
    public void onUserJoined(int uid, int elapsed) {
        Log.e(TAG, "onUserJoined->" + uid);
        runOnUiThread(new Runnable() {
            @Override
            public void run() {
                // After getting the uid from the onUserJoined callback, call setupRemoteVideo to set up the remote video view.
                setupRemoteVideo(uid);
            }
        });
    }

    private void setupRemoteVideo(int uid) {
        FrameLayout container = findViewById(R.id.remote_video_view_container);
        SurfaceView surfaceView = new SurfaceView(getBaseContext());
        surfaceView.setZOrderMediaOverlay(true);
        container.addView(surfaceView);
        mRtcEngine.setupRemoteVideo(new VideoCanvas(surfaceView, VideoCanvas.RENDER_MODE_FIT, uid));
    }

Finally, the remote video is displayed in remote_video_view_container.

4. Show a locally played video to the remote user

Synchronize local playback to remote

How do we synchronize a local video to remote users? In essence, showing the local camera and showing a locally played video look the same to the remote user. The only difference is which data source the local user sends: one is the camera, the other is the player.
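That idea can be illustrated with a small abstraction: the sender picks a source, and the remote side handles whatever arrives in the same way. The sketch below is purely illustrative and does not use the SDK. `FrameSource`, `CameraSource`, `PlayerSource`, and `Sender` are hypothetical names, and frames are represented as strings.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for anything that can produce video frames. */
interface FrameSource {
    String nextFrame();
}

/** Frames captured from the local camera (simulated). */
final class CameraSource implements FrameSource {
    private int count = 0;

    @Override
    public String nextFrame() {
        return "camera-frame-" + (count++);
    }
}

/** Frames decoded by a media player from a file or URL (simulated). */
final class PlayerSource implements FrameSource {
    private int count = 0;

    @Override
    public String nextFrame() {
        return "player-frame-" + (count++);
    }
}

/** The sender chooses the source; the remote side just receives frames. */
final class Sender {
    private final FrameSource source;
    private final List<String> sentToRemote = new ArrayList<>();

    Sender(FrameSource source) {
        this.source = source;
    }

    /** Pulls n frames from the chosen source and records them as sent. */
    void sendFrames(int n) {
        for (int i = 0; i < n; i++) {
            sentToRemote.add(source.nextFrame());
        }
    }

    List<String> sentToRemote() {
        return sentToRemote;
    }
}
```

Swapping `new CameraSource()` for `new PlayerSource()` changes what the remote user sees without changing anything on the receiving side.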

- Make the following changes when setting up the local video

    FrameLayout container = findViewById(R.id.local_video_view_container);
    // Create a SurfaceView object and add it as a child of the FrameLayout.
    SurfaceView surfaceView = new SurfaceView(getBaseContext());
    container.addView(surfaceView);
    // Pass the SurfaceView object to Shengwang to render the local video, with the media player as the video source.
    VideoCanvas videoCanvas = new VideoCanvas(surfaceView, Constants.RENDER_MODE_HIDDEN, Constants.VIDEO_MIRROR_MODE_AUTO,
            Constants.VIDEO_SOURCE_MEDIA_PLAYER, mediaPlayer.getMediaPlayerId(), KeyCenter.RTC_UID);
    mRtcEngine.setupLocalVideo(videoCanvas);

- When the return value of mRtcEngine.joinChannel(token, channelName, KeyCenter.RTC_UID, options) is 0, create and open the media player:

    int res = mRtcEngine.joinChannel(token, channelName, KeyCenter.RTC_UID, options);
    if (res != 0) {
        // Usually happens with invalid parameters.
        // Error code descriptions can be found at:
        // en: https://docs.agora.io/en/Voice/API%20Reference/java/classio_1_1agora_1_1rtc_1_1_i_rtc_engine_event_handler_1_1_error_code.html
        // cn: https://docs.agora.io/cn/Voice/API%20Reference/java/classio_1_1agora_1_1rtc_1_1_i_rtc_engine_event_handler_1_1_error_code.html
        Log.e("video", "join err:" + RtcEngine.getErrorDescription(Math.abs(res)));
    } else {
        // The join request was accepted: create the player, observe its state, and open the video.
        mediaPlayer = mRtcEngine.createMediaPlayer();
        mediaPlayer.registerPlayerObserver(this);
        mediaPlayer.open(MetaChatConstants.VIDEO_URL, 0);
    }

- Listen for the onPlayerStateChanged callback and call play when the state is PLAYER_STATE_OPEN_COMPLETED. The code is as follows:

    public void onPlayerStateChanged(io.agora.mediaplayer.Constants.MediaPlayerState state, io.agora.mediaplayer.Constants.MediaPlayerError error) {
        if (state == io.agora.mediaplayer.Constants.MediaPlayerState.PLAYER_STATE_OPEN_COMPLETED) {
            mediaPlayer.play();
        }
    }
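The reason for waiting on the callback is that opening a media resource completes asynchronously, so play should only be called once the player reports that opening has finished. A minimal model of that guard follows. `PlayerGate` and its `PlayerState` enum are hypothetical. Only the idea of an "open completed" state mirrors PLAYER_STATE_OPEN_COMPLETED, and the other state names are placeholders.

```java
/** Hypothetical model of the open-then-play guard; not the SDK's media player. */
final class PlayerGate {
    enum PlayerState { IDLE, OPENING, OPEN_COMPLETED, PLAYING, FAILED }

    private PlayerState state = PlayerState.IDLE;
    private int playCalls = 0;

    /** Models mediaPlayer.open(...): returns at once, the media is not ready yet. */
    void open() {
        state = PlayerState.OPENING;
    }

    /** Models onPlayerStateChanged: start playback only once opening has completed. */
    void onPlayerStateChanged(PlayerState newState) {
        state = newState;
        if (newState == PlayerState.OPEN_COMPLETED) {
            play();
        }
    }

    private void play() {
        playCalls++;
        state = PlayerState.PLAYING;
    }

    PlayerState state() {
        return state;
    }

    int playCalls() {
        return playCalls;
    }
}
```

Any state other than the "open completed" one, including a failure, leaves playback untouched, which matches the single `if` in the callback.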

At this point, the feature is complete.

Other functions

In addition to the functions described above, Shengwang provides other functions that can be used directly when needed, or with a small amount of modification.

For example, the spatial audio function, which is based on acoustic principles, simulates the propagation, reflection, and absorption of sound in different spatial environments. It can give users the chatter of passers-by, the sound of waves, wind, and so on while they move around, for a more immersive experience.

Another example is the real-time video sharing function, which enables watching videos together, listening to music together, and similar features with almost no delay. It can even support karaoke.

There are more functions waiting to be discovered together!

References

- Sign up to try the Shengwang video SDK with 10,000 free minutes per month. With four lines of code, you can build an immersive real-time interactive scene in 30 minutes

- Download and try the related Shengwang SDK and demo