Text | Tan Jing

Time is a traveler passing through a hundred generations.

Everyone is a guest, and so is software.

Artificial intelligence now comes in old and new kinds.

Netizens joked that the new artificial intelligence is ChatGPT, and the old artificial intelligence is "the ones before."

History is repeating itself.

When cloud computing emerged in the United States, most of the arguments were negative.

A common objection was "you don't need the cloud to do business."

Then things changed.

The objection shifted to "big companies will never move to the cloud."

Then the naysayers settled on "national agencies will never move to the cloud."

The U.S. Pentagon did not play along: one of its best-known cloud projects is JEDI (Joint Enterprise Defense Infrastructure).

A netizen quoted a line of classical poetry: "The clouds are too deep to know where."

History is repeating itself.

When deep learning arrived, most of the arguments were negative again.

As traditional algorithms reached their twilight, deep learning led a revolution.

At first, people doubted it, thinking deep learning might only do well in speech.

It soon turned out to work on computer vision classification problems as well.

Later, almost everything people thought it couldn't do at first, it eventually did.

GPT-4 has its detractors too, and it needs them.

Netizens joked that they couldn't wait to see the day AI spins out of control.

History is repeating itself.

Behind OpenAI's powerful models is the surging computing power of Microsoft's cloud. Behind Tongyi Qianwen, Alibaba's answer to GPT, is Alibaba Cloud, the largest cloud provider in China. Even Baidu picked Baidu Cloud up off the floor and relabeled it a new type of AI cloud.

The relationship between AI and the cloud has turned emotional and sexy, which makes me wonder:

What will ChatGPT do to the cloud?

Hereinafter, "AI large model" is shortened to "large model."

First, winner takes all.

If the world ends up with only one dominant large model, every enterprise that wants to use large-model technology will become its vassal.

Traditional algorithms have entered their age of decline, and cloud computing cannot stand apart from the change.

The large model has taken the scepter in hand and roars: the winner takes all.

In the game of thrones, you win or you die; there is no middle ground.

Second, if cloud vendors lack their own large models, other owners of large-model technology will drive ecosystem migration.

"Follow me if it works" is the technical spell that large models have cast.

The old rulers are still here, and their full decline will take time.

Whoever holds the high ground in large models will define the instruction set of the future.

If the new large-model ecosystem does not live on the cloud, it will inevitably weaken the cloud vendors' existing ecosystems.

If cloud vendors win the large-model race, the strong will get stronger.

Platform as a service (PaaS) is necessary and profitable, but unfortunately it cannot drive ecosystem migration.

Whoever masters large models can define the technology ecosystem of the future.

Third, large models are still in their early stages, and their ecosystem has a long journey from birth to maturity.

The "window period" is a gift to technology pioneers. Large models are exciting, but we are in the early days of the ChatGPT ecosystem, or even earlier.

Early days or not, what technology giant dares to sit idly by and bet against it?

Building the ChatGPT ecosystem will be a long journey, which means today's participants are all seed-stage players.

It also means that most participants are still indistinct and will eventually disappear.

ChatGPT has indeed moved artificial intelligence a big step forward, but claiming that it is omnipotent is shameful.

Cloud computing is a huge industry, and large models will become a huge industry too. But don't forget that we are in the early days. Some enterprise customers are already eager to try generative large models, but many more have yet to put them to use.

Overhyping now could lead to market disappointment and capital flight.

Do well in the present, and think more about the future.

Fourth, even with large models on their clouds, domestic cloud vendors will still face competitors of many kinds.

When "follow me" software appears, how will hardware makers react? In China, the most typical hardware maker here is Huawei, even though Huawei also has a cloud business.

This time we will set aside telecommunications and talk about the AI industry.

In the AI industry, Huawei's Ascend series of AI chips is better known than the AI business on Huawei Cloud.

If I were Huawei and had a strong large model, I would pre-install it on data-center-grade computing equipment and sell the two together. Customers have to use a model anyway, so pre-installation grabs the market.

It is like apps pre-installed on a mobile phone, which enjoy a big advantage.

The situation for cloud vendors is quite grim.

Without a large model, Alibaba would face at least three serious challenges in China.

First, sniping.

Independent large-model developers capture the profits of the AI computing business on the cloud. That is the end result, but the process will be far from simple and will not happen quickly.

Second, "cutting off the hu" (in mahjong terms, snatching someone else's winning tile).

The threat comes from domestic data centers, especially intelligent computing centers. Once an intelligent computing center has a large model, it can sell large-model capability directly, and behind that capability is the computing power it sells.

Third, "taking the whole pot."

Independent large-model developers and domestic intelligent computing centers join forces to encircle and suppress domestic cloud vendors.

Will Alibaba sit still?

The answer is obviously no, although the story of Alibaba's large model has to begin with Google.

Google has the TPU; Alibaba has Pingtouge (T-Head), its chip unit.

Pingtouge may not be the best in the world, but it can lower Alibaba Cloud's costs. Even without replacing everything, think of the competitors who cannot cut costs at all.

Google's AI ecosystem is very complete and leading, including the TPU AI chip and TensorFlow, an end-to-end open-source deep learning framework.

Compared with the traditional CPU+GPU combination, specialized chips such as the TPU deliver an order-of-magnitude performance improvement on AI tasks such as search, translation, voice assistants, and image recognition.

Baidu also wants to match this full stack, but it falls short of Google.

Then came a bolt from the blue: Google's models lost to the Microsoft (and OpenAI) team, even though Google's BERT model supported every English-language query on Google Search and improved efficiency by more than 10%.

Someone else's large model won, Google was caught flat-footed, and everyone saw it. Though hard hit, Google still has a better chance of a comeback than anyone else.

Before this, it was hard to imagine anyone surpassing Google in AI.

Back to Alibaba.

Alibaba has worked in cutting-edge research fields such as NLP (natural language processing) for many years. Nobody would dispute that, right?

Alibaba DAMO Academy began large-model R&D at the end of 2018. It has done cutting-edge, leading work for Chinese large models in ultra-large models, language and multimodal capabilities and training, platform services, and real-world applications.

- 2019

Released StructBERT, a large pre-trained language model, which topped GLUE, the authoritative global NLP benchmark;

- 2021

Released M6 and PLUG, China's first multimodal large model and language large model with more than 10 billion parameters;

- August 2021

Alibaba's large model surpassed human performance for the first time on VQA, the global visual question answering leaderboard;

- October 2021

Alibaba trained M6, the world's first large model with 10 trillion parameters, with lower energy consumption;

- February 2022

M6 could perform more than 10 single-modal and multimodal tasks at the same time.

These milestones may seem meaningless to readers without a technical background, but they are now part of the "historical record" of large models.

Alibaba's large models are now unified under the "Tongyi" brand, so the models I have followed over the years finally share one name.

Recently, Alibaba opened its "Tongyi Qianwen" large language model for invitation-only testing, making it one of the first technology companies in the world to develop and open up a GPT-like large model.

As for me, Teacher Tan, my introduction to large-model technology came from Alibaba.

I have been learning about large models alongside Alibaba for years, so is it too much to ask for a test invitation code?

So the results of our Tongyi Qianwen test (or rather, "crash test") are at the end of the article.

Alibaba still trails the leader, OpenAI, in large models. Overall, though, Alibaba is in the world's leading tier of large-model R&D, with a complete portfolio of language large models, multimodal large models, and underlying large-model training platforms.

It must be admitted that OpenAI already leads the world in large models. ChatGPT is a very good model, and GPT-4 performs very well in reasoning and mathematics, beyond what Tongyi Qianwen can currently do.

Tongyi Qianwen still trails ChatGPT in quality and is still learning and growing. Of course, it also has its own strengths, such as text dialogue and reading comprehension.

The good news is that Alibaba surely understands better than Teacher Tan why large models matter.

Even if only three companies in China end up selling large models, Alibaba will be one of them.

Now for the starting point of large models entering the ToB (business) market: OpenAI's plugins.

Plugins are both a product and a method, so they should not be underestimated.

Plugins can become the "eyes and ears" of large models, letting them take in new information, a kind of "retraining," instead of relying only on what was in their original training data.

Plugins play an important role in helping enterprises "retrain" large models and put them to good use.

With plugins, enterprise-specific models have a way forward.

I believe plugins will not be the only form; large-model makers will find better approaches.

Once enterprises connect to GPT through plugins, their productivity will improve.

For example, at financial, energy, or pharmaceutical companies, even wise and capable business leaders need sufficient information to make decisions. Enterprise large-model applications must be combined with enterprise business data.

A large model is a capability. For enterprises, what matters even more than the capability itself is using their own data to retrain it.
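To make the "eyes and ears" idea concrete, here is a minimal Python sketch of a plugin-style lookup. Everything in it is hypothetical: `SALES_DB`, `lookup_sales`, `build_prompt`, and the sales figures are invented for illustration, and the sketch does not use OpenAI's actual plugin interface or call any model. It only shows the general idea: the model's weights stay unchanged, while fresh enterprise data is fetched at question time and placed in the prompt.

```python
from typing import Optional

# Hypothetical enterprise records; a real deployment would expose these through an API.
SALES_DB = {"Q1": 1200, "Q2": 1350}


def lookup_sales(quarter: str) -> str:
    """Hypothetical plugin-style tool: fetch a fact the model never saw during training."""
    units: Optional[int] = SALES_DB.get(quarter)
    if units is None:
        return f"No data for {quarter}"
    return f"{quarter} sales: {units} units"


def build_prompt(question: str, quarter: str) -> str:
    """Place the retrieved business data in the prompt; the model itself is not changed."""
    context = lookup_sales(quarter)
    return f"Context from company systems:\n{context}\n\nQuestion: {question}"


print(build_prompt("How did sales go this quarter?", "Q2"))
```

In this framing, the "retraining" the article describes happens through the context window rather than through new training runs, which is why access to business data matters so much.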

Beyond that, imagine something bolder: add robotic legs and hands for control and execution.

Now, about robots.

The first wave of enthusiastic geek developers has already connected ChatGPT to robots.

Besides following instructions and performing specified actions, a robot can also remind you: "Your wife is always right."

It can't be helped; its language skills are strong. After several rounds of reinforcement learning from human feedback, the results are good, and the robot's IQ and EQ are on a different level.

Once enterprises that deploy industrial robots at scale connect them to GPT through plugins, their productivity will improve.

We are at the beginning of a very long journey, and large-scale enterprise applications are still on the horizon.

Domestic large-model competitors will certainly replicate the Plugin model. But it all comes back to the first step: you need a good model.

R&D on large models with more than 100 billion parameters is not a single-algorithm problem, nor can it be achieved by simply stacking GPUs. It is a systems engineering project spanning underlying compute, networking, storage, big data, AI frameworks, AI models, and more.

Full-stack AI + cloud computing capability is what gives cloud vendors the confidence to win in large models.

Looking at Alibaba again: it is one of the few technology companies in the world with deep, long-term investment in full-stack technical capabilities, and one of the few with experience developing large models with more than a trillion parameters.

A few days ago, I saw that domestic large-model competitors have split into factions, and many are starting from scratch.

For an independent large-model startup, any valuation beyond the first 25 million US dollars depends on how the business is assessed. Prices are high, and investors are very cautious.

The Game of Thrones-style fantasy epic of the large-model "economy on the cloud" has begun, awaiting the arrival of stronger artificial intelligence.

Don't forget, what is more important than seizing the scepter is the continuation of human destiny.

(End)

One More Thing

Our test of Baidu's model was published in a separate article.

Here are the Tongyi Qianwen test results:

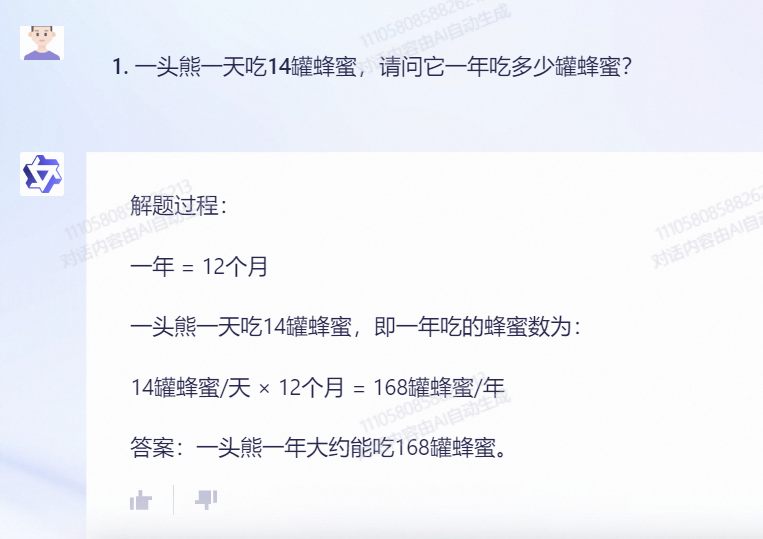

Question: A bear eats 14 jars of honey a day. How many jars of honey does it eat in a year?

Question: A bear eats 14 jars of honey a day. How many jars of honey does it eat in a leap year?

It got the first one wrong.

It got the second one wrong too.
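For reference, both questions reduce to one multiplication: 14 jars × 365 days = 5,110 jars in a common year, and 14 × 366 = 5,124 jars in a leap year. A short Python check using the standard library's `calendar.isleap` (the function name `jars_in_year` is ours, for illustration):

```python
import calendar

JARS_PER_DAY = 14  # from the question


def jars_in_year(year: int) -> int:
    """Jars eaten in a given calendar year: 366 days in a leap year, otherwise 365."""
    days = 366 if calendar.isleap(year) else 365
    return JARS_PER_DAY * days


print(jars_in_year(2023))  # common year: 14 * 365 = 5110
print(jars_in_year(2024))  # leap year: 14 * 366 = 5124
```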

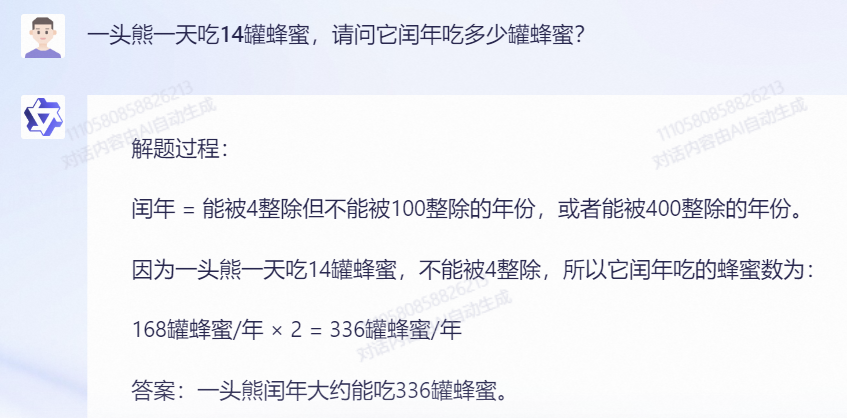

Question: This bear is going on a business trip and wants to store some honey. What is the best way to preserve it?

The answer is correct; it understands the common sense of preserving honey.

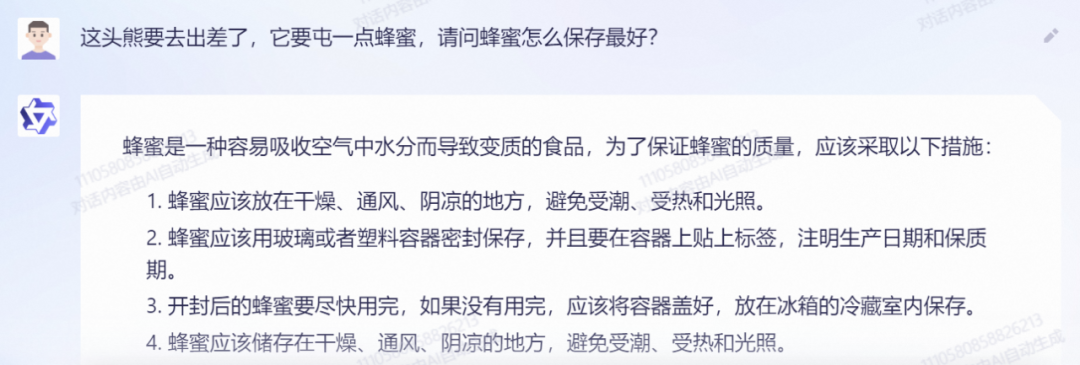

Question: A bear holding a jar of honey starts from a point, walks one kilometer south, then one kilometer east, then one kilometer north, and returns to the starting point. What color is the bear?

This question is something of a brain teaser. ChatGPT said the problem has nothing to do with the bear's color, but that if the bear is at the North Pole, it is a polar bear, which is usually white.
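The riddle works because of spherical geometry: starting at the North Pole, the eastward leg runs along a small circle of latitude, and walking north again leads back to the pole no matter how far east the bear went. Below is a minimal Python sketch, assuming a perfectly spherical Earth with a mean radius of 6,371 km; the function names are illustrative. It only checks a given starting point and does not search for the other classic solutions near the South Pole.

```python
import math
from typing import Tuple

EARTH_RADIUS_KM = 6371.0  # assumed mean radius; Earth treated as a perfect sphere


def walk(lat_deg: float, lon_deg: float, dist_km: float = 1.0) -> Tuple[float, float]:
    """Walk dist_km south, then east, then north; return the final (lat, lon) in degrees."""
    step_deg = math.degrees(dist_km / EARTH_RADIUS_KM)  # arc length along a meridian -> degrees
    lat = lat_deg - step_deg  # leg 1: south
    # Leg 2: east along a circle of latitude, whose radius shrinks by cos(lat) toward the poles.
    circle_radius = EARTH_RADIUS_KM * math.cos(math.radians(lat))
    lon = lon_deg + math.degrees(dist_km / circle_radius)
    lat += step_deg  # leg 3: north, back to the starting latitude
    return lat, lon


def same_point(a: Tuple[float, float], b: Tuple[float, float], tol: float = 1e-9) -> bool:
    """True if two (lat, lon) points coincide; at a pole, longitude does not matter."""
    (lat1, lon1), (lat2, lon2) = a, b
    if abs(lat1 - lat2) > tol:
        return False
    if abs(abs(lat1) - 90.0) < tol:
        return True
    dlon = (lon1 - lon2 + 180.0) % 360.0 - 180.0  # wrap the difference into [-180, 180)
    return abs(dlon) < tol


for start in [(90.0, 0.0), (45.0, 0.0), (0.0, 10.0)]:
    print(start, same_point(start, walk(*start)))  # True only for the North Pole start
```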

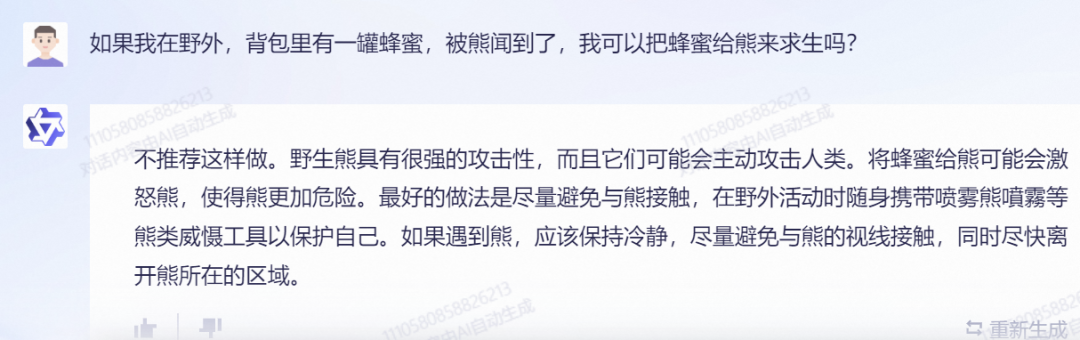

Q: If I'm out in the wild with a jar of honey in my backpack and a bear smells it, can I give the honey to the bear to survive?

That's a good question.

Q: Please draw an ASCII picture of a bear eating honey.

Tongyi Qianwen understands the word ASCII, but drawing seems a bit hard for it, so it made a mess.

Things were going smoothly, we smiled at fate, and then came the crash (laughs).

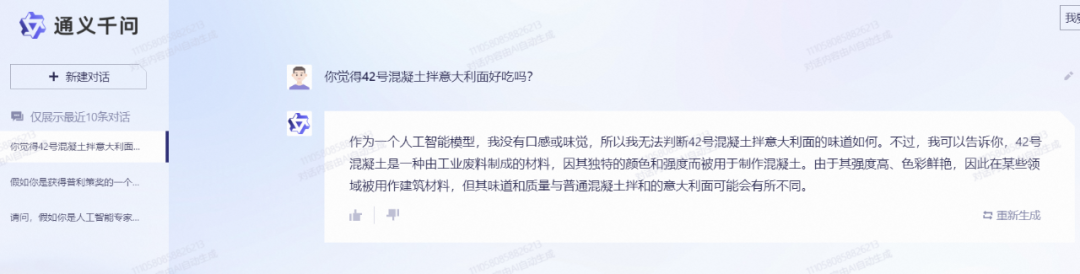

I asked Tongyi Qianwen: is "spaghetti mixed with No. 42 concrete" delicious?

Tongyi's answer is shown in the figure:

The (laughable) text in the picture reads:

As an AI model, I don't have a palate or a sense of taste, so I can't tell how spaghetti mixed with No. 42 concrete tastes.

However, I can tell you that Concrete No. 42 is a material made from industrial waste and is used to make concrete because of its unique color and strength.

Due to its high strength and bright color, it is used as a construction material in some fields, but its taste and quality may be different from pasta mixed with ordinary concrete.

Well, spaghetti mixed with No. 42 concrete: I don't want to eat it anyway. Who wants to try?

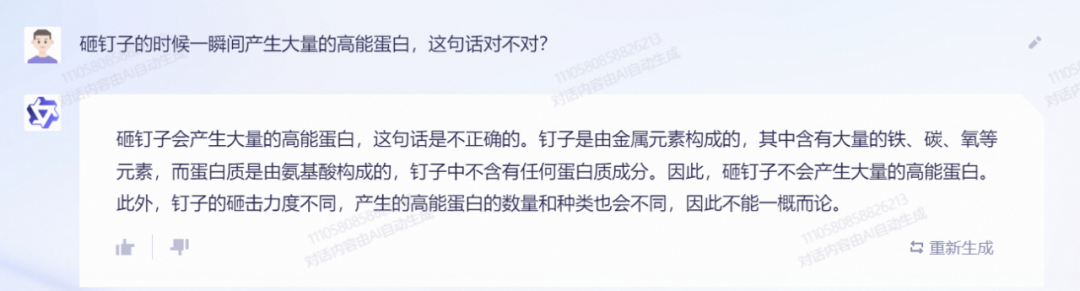

I asked Tongyi Qianwen: "Smashing a nail instantly produces a large amount of high-energy protein." Is this sentence correct?

The (laughable) text in the picture reads:

It is not true that smashing a nail produces a large amount of high-energy protein.

Nails are made of metal elements, which contain a large amount of iron, carbon, oxygen and other elements, while proteins are made of amino acids, and nails do not contain any protein components. Therefore, smashing nails does not produce large amounts of high-energy proteins.

In addition, the amount and type of high-energy proteins produced will be different depending on the impact force of the nail, so it cannot be generalized.

It's over, I was right, but the words behind the wheel have ruined the situation.

It's even more difficult.

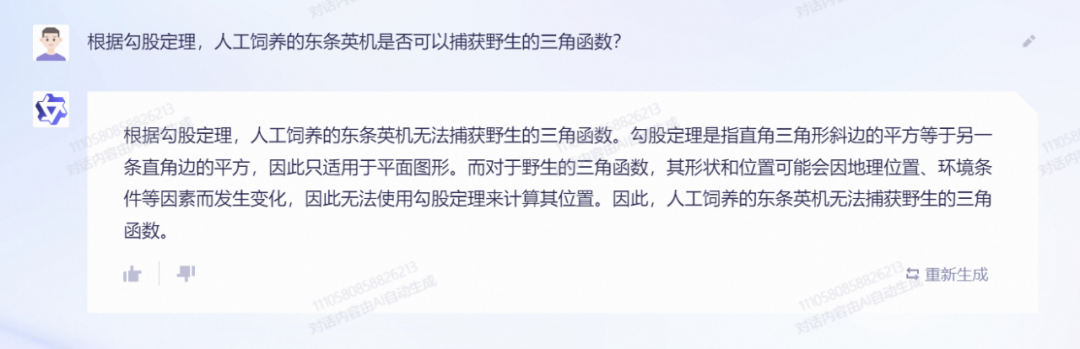

I asked Toyoshi Chien: According to the Pythagorean theorem, can captive Tojo Hideki capture wild trigonometric functions?

According to the Pythagorean theorem, captive Tojo cannot capture trigonometric functions in the wild.

The Pythagorean theorem means that the square of the hypotenuse of a right-angled triangle is equal to the square of the other right-angled side, so it is only applicable to plane figures.

While for the trigonometric function in the wild, its shape and position may change due to geographical location, environmental conditions and other factors.

Therefore its position cannot be calculated using the Pythagorean theorem. As a result, captive-bred Tojos were unable to capture trigonometric functions in the wild.

Faced with so much to unpack in "wild trigonometric functions" and "artificially bred Hideki Tojo," Tongyi Qianwen was completely confused.

And so this "wild" test ends: a thousand questions (Qianwen means "a thousand questions"), asked until it crashed.

Read more

AI framework series:

1. The people who build deep learning frameworks are either lunatics or liars (1)

2. The people who build AI frameworks | A spark that lit the prairie: Jia Yangqing (2)

3. The people who build AI frameworks (3): the fanatical AlphaFold and the silent Chinese scientists

4. The people who build AI frameworks (4): the prequel to AI frameworks, the past of big data systems

Note: (3) and (4) have not been published yet and will reach you in book form.

Comic series:

1. Interpretation of the Silicon Valley Venture Capital A16Z "Top 50" data company list

2. AI algorithm is a brother, isn't AI operation and maintenance a brother?

3. How did the big data's social arrogance come about?

4. AI for Science, is it "science or not"?

5. If you want to help mathematicians, how old is AI?

6. The person who called Wang Xinling turned out to be the magical smart lakehouse

7. It turns out that the knowledge map is a cash cow for "finding relationships"?

8. Why can graph computing be able to positively push the wool of the black industry?

9. AutoML: Saving up money to buy a "Shan Xia Robot"?

10. AutoML: Your favorite hot pot base is automatically purchased by robots

11. Reinforcement learning: Artificial intelligence plays chess, take a step, how many steps can you see?

12. Time-series databases: that was close, they almost didn't squeeze into high-end industrial manufacturing

13. Active learning: artificial intelligence was actually PUA?

14. Cloud Computing Serverless: An arrow piercing the clouds, thousands of troops will meet each other

15. Data center network: data arrives on the battlefield in 5 nanoseconds

AI large model and ChatGPT series:

17. ChatGPT is on fire: how to set up an AIGC company and make money?

18. ChatGPT: Never bully liberal arts students

19. How does ChatGPT learn by analogy?

20. Exclusive | From the resignation of the masters Alex Smola and Li Mu to the successful financing of AWS startups, look back at the evolution of the "underlying weapon" in the era of ChatGPT large models

22. Is it only a fictional story that the ChatGPT large model is used for criminal investigation?

DPU chip series:

1. Building a DPU chip, like a dream bubble? | Fictional short stories

3. How does Alibaba Cloud perform encryption calculations under the support of DPU?

4. Oh CPU, don’t be tired, brother CIPU is helping you on the cloud

Long article series:

1. I suspect that JD.com’s mysterious department Y has realized the truth about the smart supply chain

2. Supercomputers and artificial intelligence: supercomputers in big countries, unmanned pilots

Finally, let me introduce myself, the editor-in-chief.

I'm Tan Jing, an author covering science, technology, and popular science.

To discover the stories of our times,

I chase the gods of technology and stake out technology companies.

I occasionally write novels and draw comics.

Life is short, don't take shortcuts.

Original writing takes effort; thank you for sharing it.

If you want to read more of my articles, follow "Dear Data."