Having leveraged OpenAI's GPT-4 to introduce ChatGPT-like functionality for Bing Chat, Bing Image Creator, Microsoft 365 Copilot, Azure OpenAI Service, and GitHub Copilot X, Microsoft has now announced the launch of DeepSpeed-Chat, a low-cost open source solution for RLHF training built on DeepSpeed, Microsoft's open source deep learning optimization library. The company claims that even with a single GPU, anyone can create a high-quality ChatGPT-style model.

The company said that, despite the great efforts of the open source community, there is still no large-scale system that supports end-to-end reinforcement learning from human feedback (RLHF), which makes it difficult to train a powerful ChatGPT-like model. ChatGPT models are trained with the RLHF method described in the InstructGPT paper, which differs substantially from the pre-training and fine-tuning used for common large language models. As a result, existing deep learning systems face various limitations when training ChatGPT-like models. To make ChatGPT-type models more accessible to ordinary data scientists and researchers, and to make RLHF training truly widespread in the AI community, Microsoft released DeepSpeed-Chat.

DeepSpeed-Chat has the following three core functions:

- Simplified training and enhanced inference experience for ChatGPT-type models: a single script can take a model pretrained with Hugging Face, run it through all three steps of InstructGPT training with the DeepSpeed-RLHF system, and produce your own ChatGPT-like model. An easy-to-use inference API is also provided so that users can test conversational interactions after training.

- DeepSpeed-RLHF module: DeepSpeed-RLHF replicates the training pipeline from the InstructGPT paper, with a one-to-one correspondence to its three steps: a) supervised fine-tuning (SFT), b) reward model fine-tuning, and c) reinforcement learning from human feedback (RLHF). Data abstraction and blending functions are also provided so that users can train with multiple data sources.

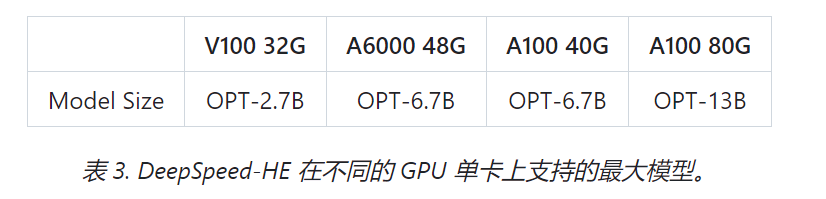

- DeepSpeed-RLHF system: It integrates DeepSpeed's training engine and inference engine into a unified hybrid engine (DeepSpeed Hybrid Engine, or DeepSpeed-HE) for RLHF training. DeepSpeed-HE can switch seamlessly between inference and training modes in RLHF. For language generation, this lets it use optimizations from DeepSpeed-Inference such as tensor parallelism and high-performance CUDA operators, while the training part also benefits from ZeRO- and LoRA-based memory optimization strategies. DeepSpeed-HE also performs intelligent memory management and data caching automatically across the different stages of RLHF.
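
To make the second of the three steps listed above more concrete: the InstructGPT paper trains its reward model with a pairwise ranking loss, which pushes the score of the human-preferred response above the score of the rejected one. The following is a minimal sketch of that loss in plain Python, not DeepSpeed-Chat's actual code. The function name `pairwise_ranking_loss` and the example scores are hypothetical and chosen only for illustration.

```python
import math


def pairwise_ranking_loss(chosen_scores, rejected_scores):
    """Mean of -log(sigmoid(r_chosen - r_rejected)) over comparison pairs.

    Hypothetical helper for illustration only. The scores are plain floats
    standing in for the scalar outputs of a reward model.
    """
    if len(chosen_scores) != len(rejected_scores):
        raise ValueError("each chosen score needs a matching rejected score")
    if not chosen_scores:
        raise ValueError("at least one comparison pair is required")

    total = 0.0
    for chosen, rejected in zip(chosen_scores, rejected_scores):
        margin = chosen - rejected
        # -log(sigmoid(m)) equals log(1 + exp(-m)); this form avoids
        # overflow in exp() when the margin is a large negative number.
        total += max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
    return total / len(chosen_scores)


# Made-up scores: the first call ranks the preferred responses higher,
# the second call swaps the roles so the ranking is wrong.
well_ranked = pairwise_ranking_loss([2.0, 1.5], [0.5, -1.0])
badly_ranked = pairwise_ranking_loss([0.5, -1.0], [2.0, 1.5])
print(f"well ranked: {well_ranked:.4f}, badly ranked: {badly_ranked:.4f}")
```

The loss is small when preferred responses score well above rejected ones and grows as the ranking is violated, which is what lets the reward model learn from human comparisons.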

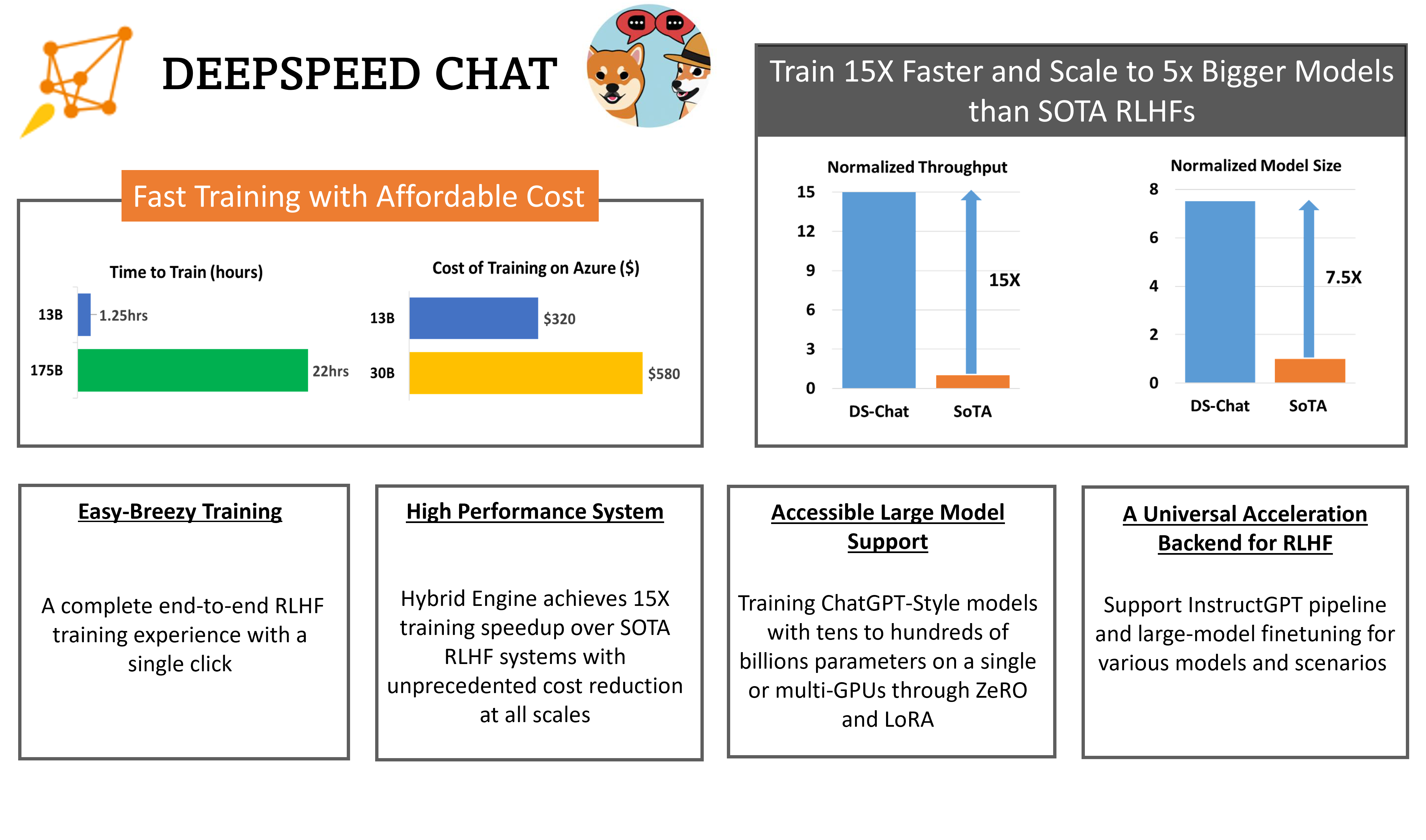

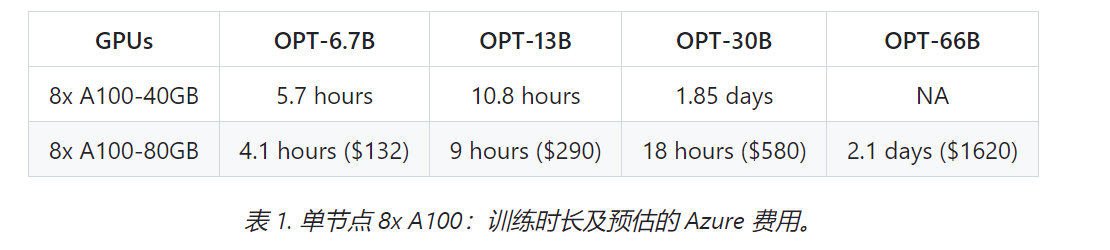

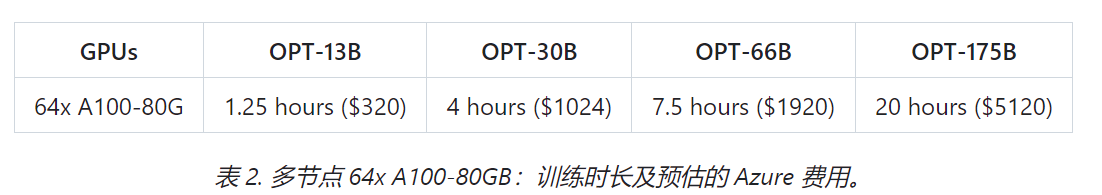

According to the documentation, the advantage of DeepSpeed-Chat over other advanced solutions is that it is more than 15 times faster than existing systems, which makes it both more efficient and more economical. On the Azure cloud, it takes only 9 hours to train an OPT-13B model and only 18 hours to train an OPT-30B model, at a cost of less than $300 and $600, respectively.
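
As a quick sanity check on these figures, dividing each quoted cost ceiling by the quoted training time gives the implied upper bound on the hourly price of the Azure hardware. This is back-of-the-envelope arithmetic on the numbers above only. The helper name `implied_hourly_ceiling` is hypothetical, and because the costs are quoted as "less than" a figure, the results are ceilings rather than actual prices.

```python
def implied_hourly_ceiling(cost_ceiling_usd, hours):
    """Upper bound on hourly cost implied by a 'less than $X in H hours' claim."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return cost_ceiling_usd / hours


# Figures quoted in the article: (model, training hours, cost ceiling in USD).
quoted_runs = [
    ("OPT-13B", 9, 300),
    ("OPT-30B", 18, 600),
]

for model, hours, cost in quoted_runs:
    rate = implied_hourly_ceiling(cost, hours)
    print(f"{model}: under ${rate:.2f} per hour over {hours} hours")
```

Both runs work out to the same ceiling of roughly $33 per hour, so on these figures the larger model costs twice as much simply because it trains for twice as long.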

In terms of speed and scalability, even a 13B model can be trained in 1.25 hours, and a huge 175B model can be trained in less than a day on a 64-GPU cluster. In terms of accessibility and democratization of RLHF, models with more than 13 billion parameters can be trained on a single GPU. In addition, compared with existing systems on the same hardware, it supports running 6.5B and 50B models, respectively, an improvement of up to 7.5 times.

Despite recent opposition to and concerns about the development of ChatGPT-like large language models, Microsoft appears to be pressing ahead with its AI development. Responding to the release, former Meta AI expert Elvis said enthusiastically that DeepSpeed-Chat provides an end-to-end RLHF pipeline for training ChatGPT-like models, something that Alpaca and Vicuna lack, and that it addresses the challenges of cost and efficiency. In his words: "Microsoft's impressive open source effort ... is a big deal".

More details can be found in the official documentation.