:::info This is the first article in the series "Building AIGC Applications on Serverless Platforms". :::

Preface

With the rise of a new generation of AIGC applications such as ChatGPT, Stable Diffusion, and Midjourney, AIGC application development has become increasingly widespread and is poised to explode. In the long run, this wave is not mere hype: it is already delivering real productivity in many fields, such as Microsoft 365 Copilot and DingTalk Intelligence in the office; GitHub Copilot and Cursor IDE in coding; and Miaoya Camera in entertainment. What is certain is that future AIGC applications will be more numerous and more diverse, and companies will integrate AI into their internal software and SOPs wherever possible. This will inevitably create massive demand for AIGC application development, which also represents a huge market opportunity.

Challenges in developing AIGC applications

The prospects of AIGC applications are so attractive that they may determine the future direction of many enterprises. However, for many small and medium-sized enterprises and developers, getting started with AIGC application development is still very costly:

- Access to foundation model services: ChatGPT offers a complete API development ecosystem, but it is not available to customers in mainland China, and deploying services on open-source models yourself is very difficult.

- High costs: the GPU shortage has driven GPU prices sharply higher. Buying high-end graphics cards locally requires a large up-front investment, and GPU capacity from online services is often unavailable.

- End-to-end integration: a bare model API does not translate directly into productivity. A complete chain is needed: [enterprise data & enterprise SOPs] -> LLM service -> the various client endpoints.

Function Compute's solution for AIGC applications

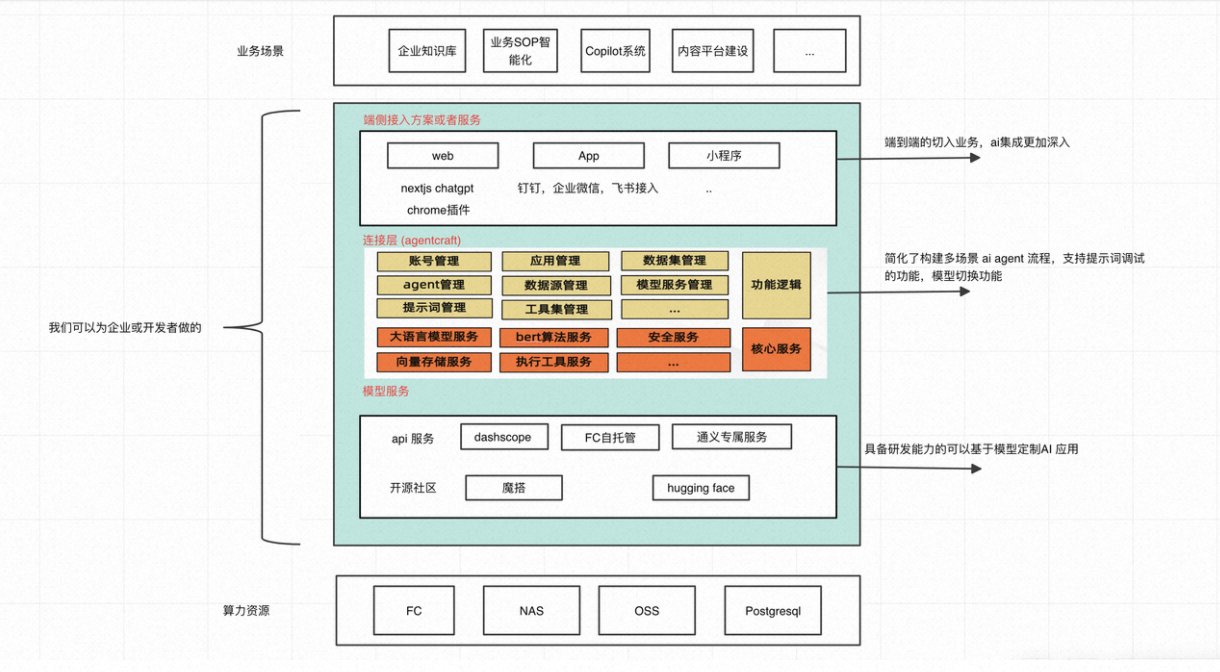

Function Compute centers on the creation and use of AIGC and provides a complete package, from infrastructure to the application ecosystem and from the development side to the user side. It consists of three main parts:

- 1. Model service base: Function Compute can deploy AI models from open-source communities such as ModelScope and Hugging Face. We have made special customizations for the LLM and BERT models used in intelligent knowledge base and assistant scenarios, exposed them through OpenAI-compatible API specifications, and provide one-click deployment templates and a visual web interface that help developers and enterprises quickly deploy models such as Llama 2, ChatGLM2, and Tongyi Qianwen.

- 2. Business connection layer: connects business requirements with underlying resources such as model services, security services, and database services. Much of the logic in this layer is common to all AIGC applications, such as the account system, dataset management, prompt templates, tool management, and model service management. From each business's perspective, the only differences are the prompts, the knowledge base, and the tool set; the underlying model, security, and database services are shared. This layer simplifies building intelligent scenarios for different businesses, so AIGC applications can be built for each of them quickly and cost-effectively.

- 3. Client side: the client is where AI applications are actually used, and it is the part closest to the business. The focus here is on integrating AI services into tools users already have, such as office IM systems like DingTalk and Enterprise WeChat, as well as web browser plug-ins. Function Compute + EventBridge can quickly connect AI services to these clients.
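The business connection layer's key idea is that only the prompt template, knowledge base, and tool set change per business, while the model service is shared. The sketch below illustrates that split under stated assumptions: `SharedServices`, `BusinessProfile`, and `build_request` are hypothetical names, the endpoint and model names are placeholders, and the keyword-overlap "retrieval" is a naive stand-in, not Function Compute's actual knowledge-base search.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass(frozen=True)
class SharedServices:
    """Infrastructure shared by every business (hypothetical fields)."""
    model_endpoint: str  # e.g. the base URL of an OpenAI-compatible model service
    model_name: str


@dataclass(frozen=True)
class BusinessProfile:
    """What actually differs between businesses: prompt, knowledge base, tools."""
    name: str
    prompt_template: str  # must contain {context} and {question} placeholders
    knowledge_base: List[str] = field(default_factory=list)
    tools: List[str] = field(default_factory=list)


def build_request(shared: SharedServices, profile: BusinessProfile, question: str) -> Dict[str, Any]:
    """Combine one business profile with the shared model service into a chat request."""
    # Naive placeholder retrieval: keep entries that share at least one word with the question.
    words = set(question.lower().split())
    context = [doc for doc in profile.knowledge_base if words & set(doc.lower().split())]
    prompt = profile.prompt_template.format(context="\n".join(context), question=question)
    return {
        "endpoint": shared.model_endpoint,
        "payload": {
            "model": shared.model_name,
            "messages": [{"role": "user", "content": prompt}],
        },
        "tools": list(profile.tools),
    }
```

Adding a new business then means defining another `BusinessProfile`; the `SharedServices` instance is reused unchanged.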

This tutorial covers the first part: how to quickly deploy AIGC model services, including LLM and embedding (BERT) models, with Function Compute.

LLM and embedding service deployment tutorial

Prerequisites

To use this project, you need to activate the following services:

- Function Compute: performs CPU/GPU inference for AIGC. https://free.aliyun.com/?pipCode=fc
- Model storage: stores the large language models and the models required by the embedding service. New users should first claim the free trial resource package: https://free.aliyun.com/?product=9657388&crowd=personal

Application introduction

Application details

This application uses Alibaba Cloud Function Compute to deploy open-source large language models, providing an OpenAI-compatible API and a ChatGPT-Next-Web client.
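"OpenAI-compatible" means the service should accept requests in the shape of OpenAI's chat completions API: a JSON body with `model` and a `messages` list of `role`/`content` pairs. The sketch below builds such a request with the standard library only. The `/v1` base path, the `qwen-7b-chat` model name, and the optional Bearer token are assumptions, not values from this tutorial; check the Swagger page of your deployed llm-server for the real path and model names.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, a POST to an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,  # must match a model name the server actually serves
        "messages": [{"role": "user", "content": user_message}],
        "temperature": 0.7,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # OpenAI-style servers conventionally take a Bearer token (assumed here)
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> Dict[str, Any]:
    """Send the request and decode the JSON body (needs a reachable server)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Against a live deployment you would call `send(build_chat_request(base_url, model, "Hello"))` with `base_url` taken from the llm-server link returned after deployment.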

Instructions

LLM application template

Log in to the Alibaba Cloud Function Compute console -> Application -> Create Application -> Artificial Intelligence, select the "AI Large Language Model API Service" application template, and click "Create Now".

Configure the application template

After configuring it, click "Create and deploy default environment".

Wait for deployment

This process runs automatically.

Service access

After the service is deployed successfully, two links are returned.

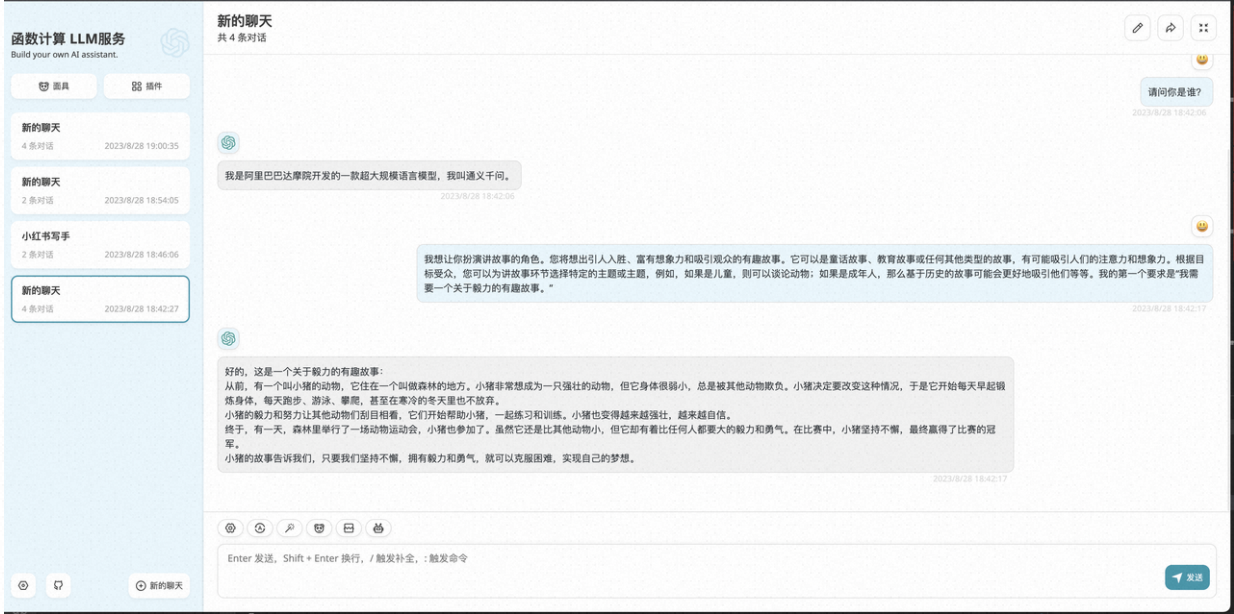

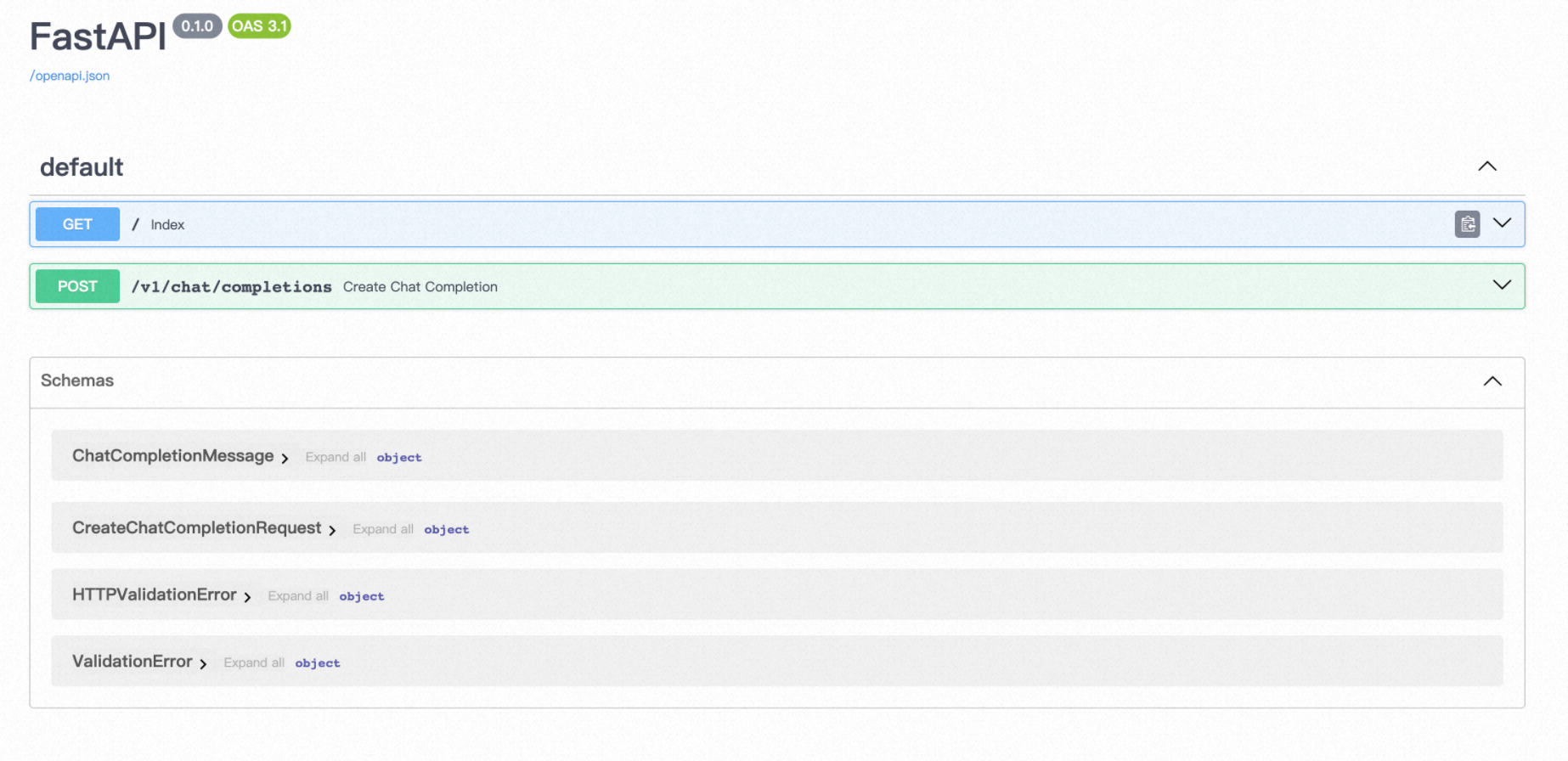

1. llm-server is the API service for the large language model, with a Swagger-based interface.

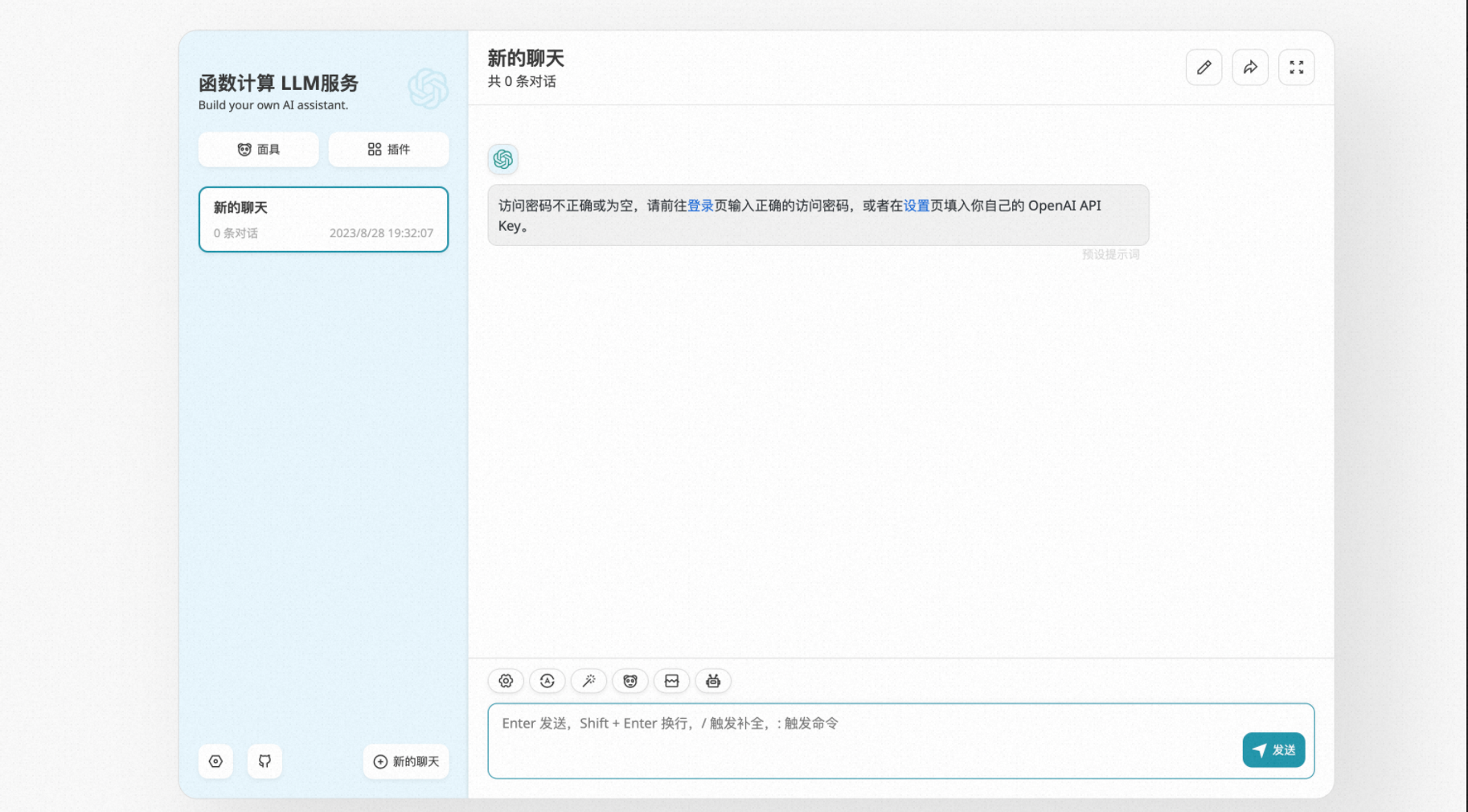

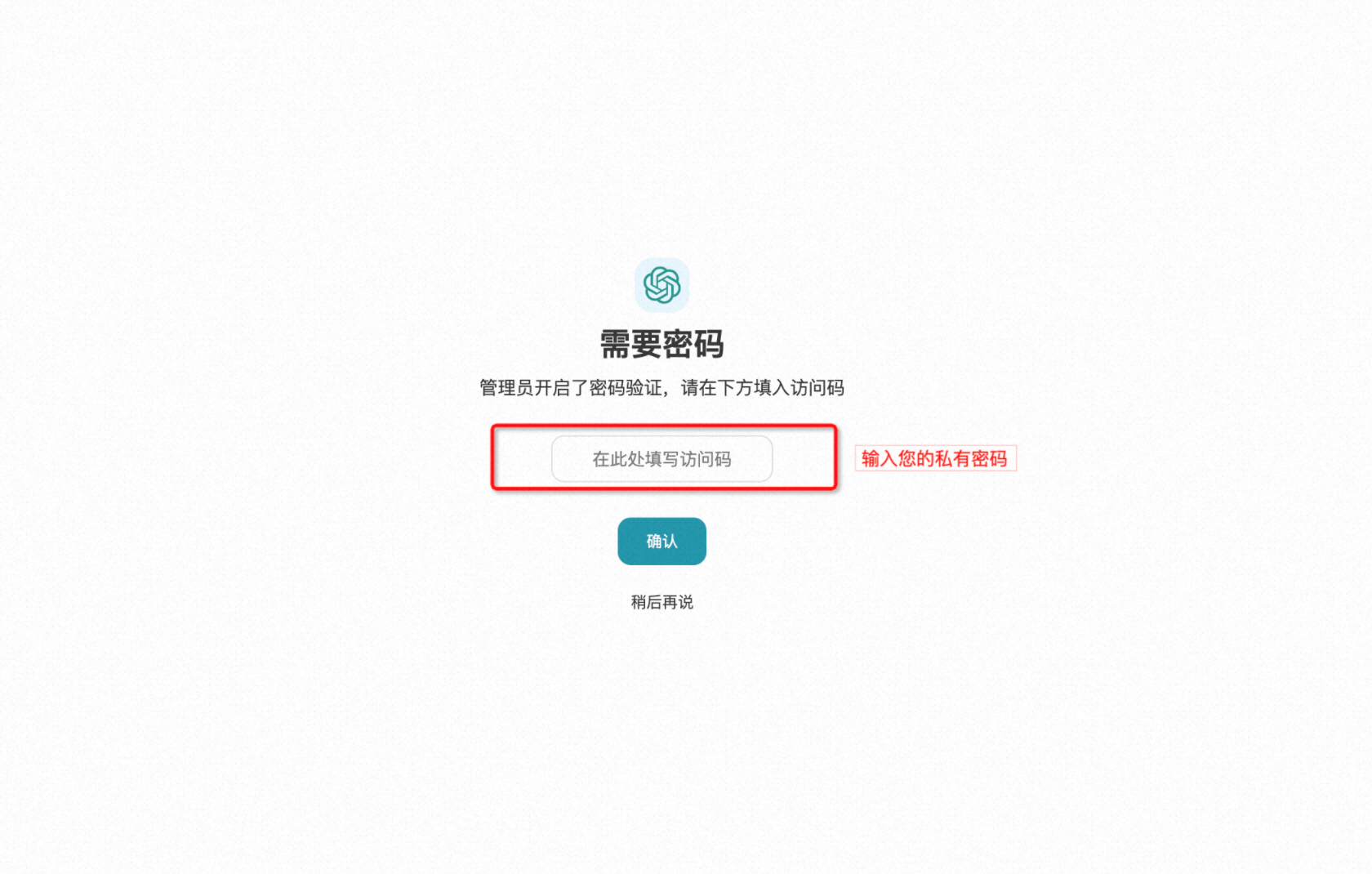

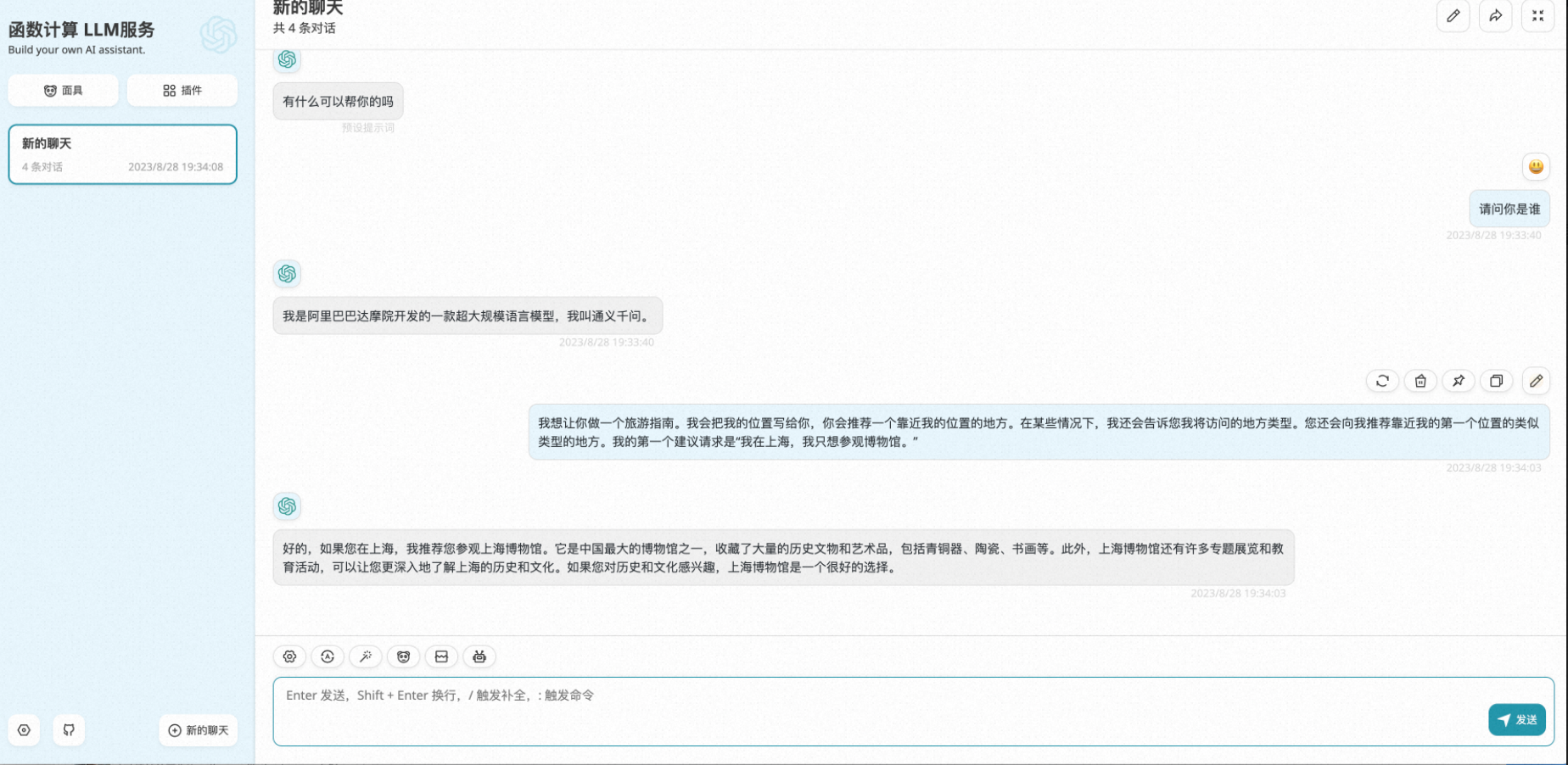

2. llm-client is the web client. To access it, enter the client private password you set earlier in the template settings; once you have entered it, you can test and use the client.
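Besides the web client, you can consume llm-server's output in your own code. If the service follows the OpenAI response format as described above, a normal reply carries its text in `choices[0].message.content`, while a streamed reply arrives as server-sent event lines prefixed with `data:` and terminated by `data: [DONE]`, each chunk carrying text in `choices[0].delta.content`. The sketch below parses both; `extract_reply` and `join_stream` are hypothetical helpers, and whether your deployment supports streaming is an assumption to verify on its Swagger page.

```python
import json
from typing import Any, Dict, Iterable


def extract_reply(response: Dict[str, Any]) -> str:
    """Return the assistant text from a non-streamed OpenAI-style chat completion."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0].get("message", {}).get("content") or ""


def join_stream(lines: Iterable[str]) -> str:
    """Concatenate delta.content from the SSE lines of a streamed completion."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker used by OpenAI-style servers
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            # the first chunk often carries only the role, so content may be missing
            parts.append(choice.get("delta", {}).get("content") or "")
    return "".join(parts)
```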

Embedding template

Log in to the Alibaba Cloud Function Compute console -> Application -> Create Application -> Artificial Intelligence, select the "Open Source Bert Model Service" application template, and click "Create Now".

Configure the application template

Select a region and create the application.

Wait for deployment

This process runs automatically.

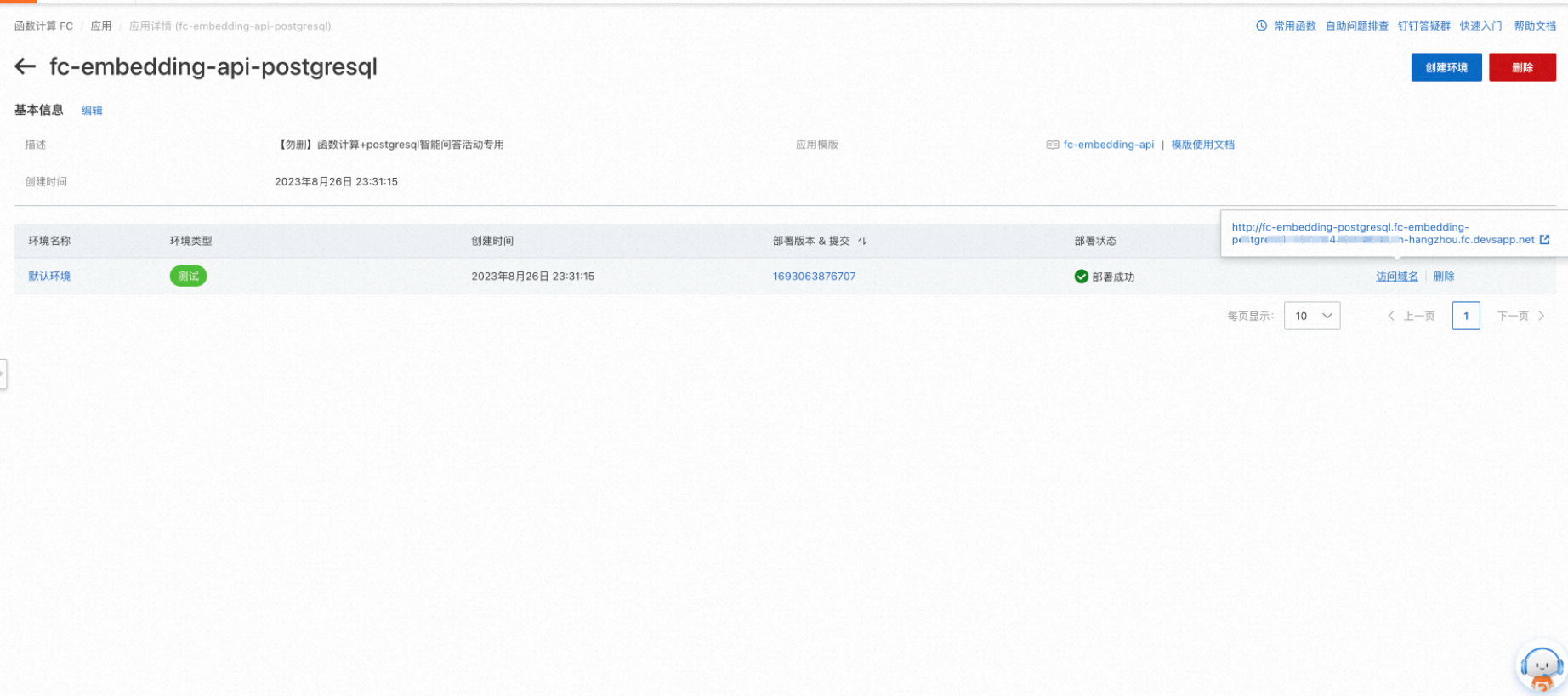

Service access

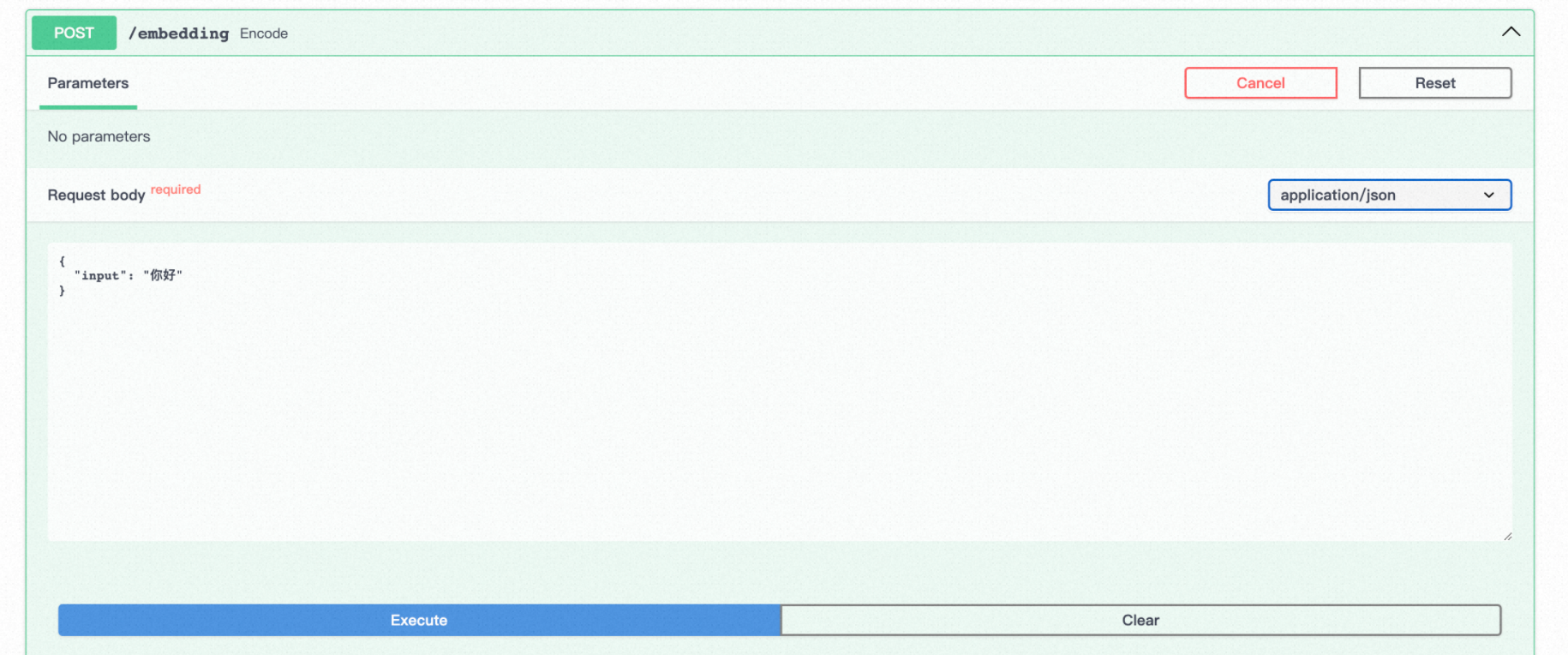

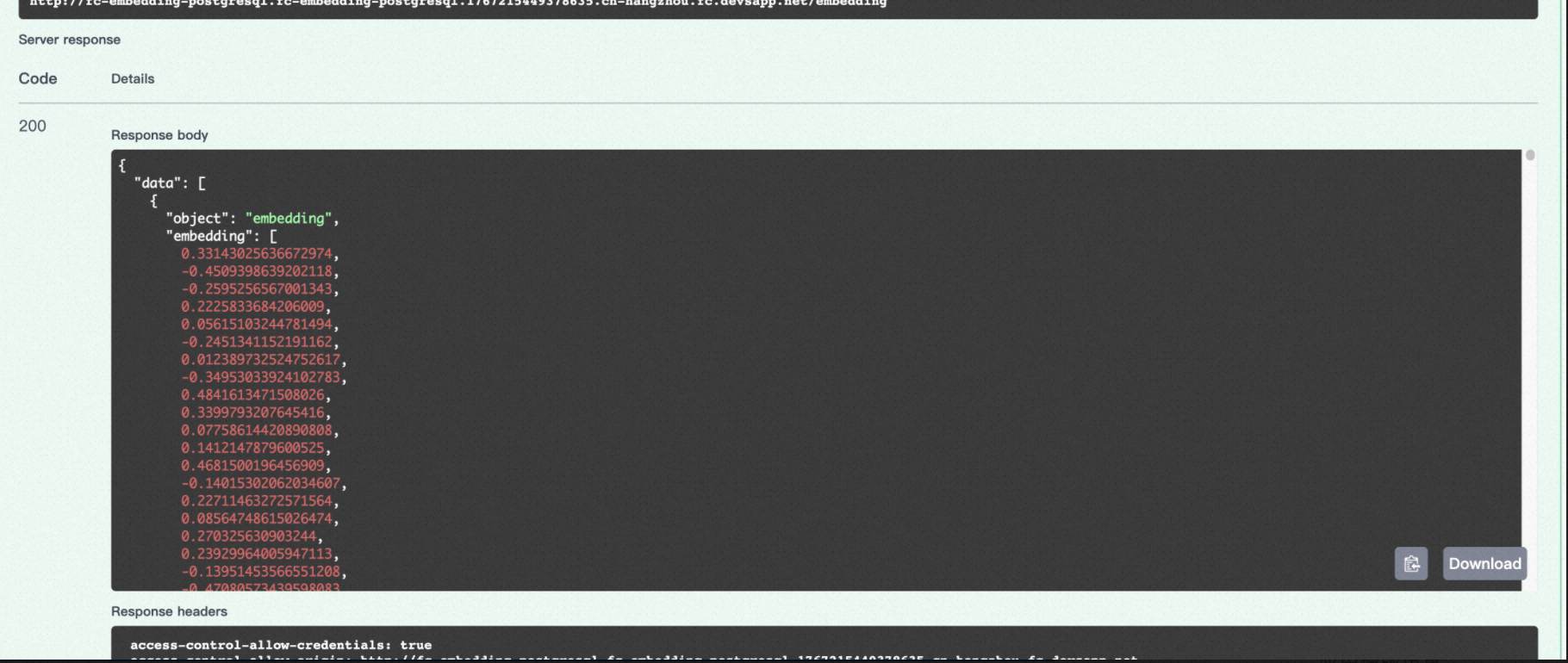

Test the embedding interface
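To test the embedding interface from code rather than the console, the sketch below assumes the BERT service exposes an OpenAI-style `/embeddings` endpoint that accepts `{"model", "input"}` and returns vectors in `data[i].embedding`. This tutorial does not spell out that path or schema, so confirm it on the service's test page first; `build_embedding_request`, `parse_embeddings`, and `cosine_similarity` are hypothetical helpers. Cosine similarity is the usual way to compare two embeddings, for example when matching a question against knowledge-base passages.

```python
import json
import math
import urllib.request
from typing import Any, Dict, List


def build_embedding_request(base_url: str, model: str, texts: List[str]) -> urllib.request.Request:
    """Build, but do not send, a POST to an assumed OpenAI-style /embeddings endpoint."""
    body = {"model": model, "input": texts}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/embeddings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_embeddings(response: Dict[str, Any]) -> List[List[float]]:
    """Return the vectors in input order, using each item's "index" field."""
    items = sorted(response["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
```

Sorting by `index` guards against a server returning vectors out of order; a real test would send the request with `urllib.request.urlopen` and feed the decoded JSON to `parse_embeddings`.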

Summary

Deploying the LLM service means you have started your AIGC application development journey. In upcoming articles, I will share more AIGC-related content, including how to build a knowledge-base Q&A application, how to use tools to extend the capabilities of large language models, how to connect to your own IM system, and how to build integrated web plug-ins.

Odyssey of the Universe: Alibaba Cloud x Semir AIGC T-shirt Design Competition

1. Quickly deploy Stable Diffusion with Function Compute (FC): built-in model library, common plug-ins, and ControlNet, with support for SDXL 1.0.

Participate now at https://developer.aliyun.com/adc/series/activity/aigc_design for a chance to win third-generation AirPods, customized co-branded T-shirts, Semir luggage, and other merchandise!

2. You can also join the topic discussion on future trends in AIGC. Share your thoughts from any angle for a chance to win prizes such as eye-protection desk lamps, data cables, and silent air purifiers!

Topic: "Compared with excellent fashion designers, how can AIGC push the boundaries of design inspiration: is it purely mechanical language, or a spark of inspiration?"

https://developer.aliyun.com/ask/548537?spm=a2c6h.13148508.setting.14.4a894f0esFcznR