Intra Template Matching Prediction (Intra TMP, Intra Template Matching Prediction) is a special intra prediction coding tool, mainly used for screen content coding (screen content coding , SCC ).

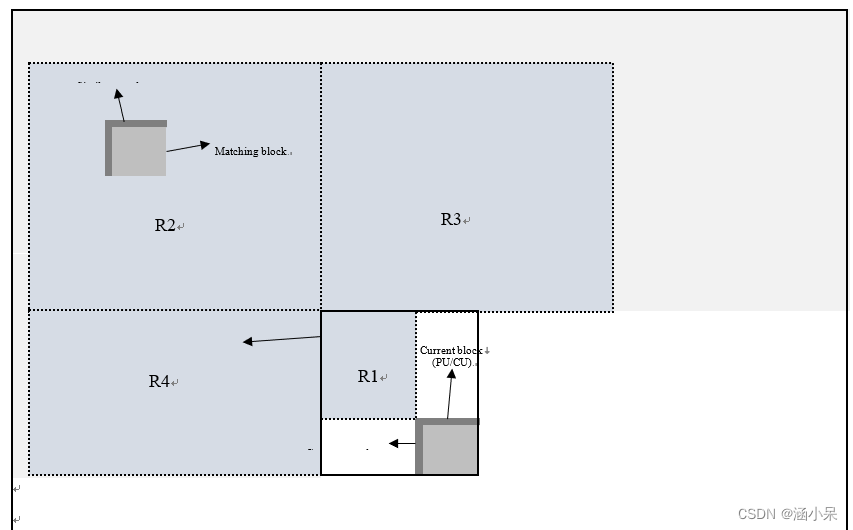

TMP matches the reconstructed part of the current frame through the L-shaped template, and uses the corresponding block as the prediction value of the current block. For a predefined search range, the encoder searches for the template most similar to the current template in the reconstructed part of the current frame, and uses the corresponding block as the prediction block. The encoder then signals the use of this mode, and the same prediction operation is performed on the decoder side. As shown below:

The prediction signal is generated by matching the L-shaped neighboring template of the current block with another block in the predefined search area in the above figure. Specifically, the search range is divided into 4 areas:

- R1: within the current CTU

- R2: top-left outside the current CTU

- R3: above the current CTU

- R4: left to the current CTU

Within each region, the reference template with the smallest SAD relative to the current template is searched, and its corresponding reference block is used as the prediction block.

The dimensions of all regions (SearchRange_w, SearchRange_h) are set proportional to the block dimensions (BlkW, BlkH) so that each pixel has a fixed number of SAD comparisons. Right now:

SearchRange_w = a * BlkW

SearchRange_h = a * BlkH

where "a" is a constant controlling the gain/complexity tradeoff. In fact, "a" is equal to 5.

Experimental performance: (Based on VTM10.0, the experimental results come from the proposal JVET-V0130)

| All Intra Main10 |

|||||

| Over VTM10 |

|||||

| Y |

U |

V |

EncT |

DecT |

|

| Class A1 |

-0.06% |

-0.01% |

-0.07% |

198% |

203% |

| Class A2 |

-0.60% |

-0.84% |

-0.87% |

182% |

171% |

| Class B |

-0.48% |

-0.51% |

-0.55% |

182% |

183% |

| Class C |

-0.15% |

-0.16% |

-0.13% |

159% |

134% |

| Class E |

-0.73% |

-0.92% |

-0.90% |

174% |

160% |

| Overall |

-0.40% |

-0.47% |

-0.49% |

178% |

168% |

| Class D |

0.03% |

-0.02% |

0.07% |

135% |

118% |

| Class F |

-2.16% |

-2.03% |

-2.05% |

132% |

285% |

| Class TGM |

-6.47% |

-6.13% |

-6.16% |

133% |

487% |

| Random Access Main 10 |

|||||

| Over VTM10 |

|||||

| Y |

U |

V |

EncT |

DecT |

|

| Class A1 |

0.02% |

0.19% |

0.17% |

126% |

119% |

| Class A2 |

-0.22% |

-0.18% |

-0.21% |

114% |

103% |

| Class B |

-0.17% |

-0.28% |

-0.02% |

119% |

108% |

| Class C |

0.01% |

0.05% |

0.08% |

113% |

79% |

| Class E |

|||||

| Overall |

-0.09% |

-0.08% |

0.01% |

118% |

101% |

| Class D |

0.08% |

0.24% |

0.19% |

112% |

109% |

| Class F |

-0.97% |

-1.07% |

-0.91% |

117% |

120% |

| Class TGM |

-2.86% |

-2.79% |

-2.74% |

117% |

138% |

It can be seen that the time complexity of the decoding end is very high under All Intra, and it works well on screen content sequences (Class F and Class TGM), and can also bring a certain gain (-0.4%) on CTC sequences.

TMP is divided into three templates according to the adjacent available pixels: L-shape template, left template and upper template

enum RefTemplateType

{

L_SHAPE_TEMPLATE = 1,

LEFT_TEMPLATE = 2,

ABOVE_TEMPLATE = 3,

NO_TEMPLATE = 4

};First call the getTargetTemplate function to obtain the target template (that is, the template of the current CU)

#if JVET_W0069_TMP_BOUNDARY

// 目标模板,即当前CU块模板

void IntraPrediction::getTargetTemplate( CodingUnit* pcCU, unsigned int uiBlkWidth, unsigned int uiBlkHeight, RefTemplateType tempType )

#else

void IntraPrediction::getTargetTemplate( CodingUnit* pcCU, unsigned int uiBlkWidth, unsigned int uiBlkHeight )

#endif

{

const ComponentID compID = COMPONENT_Y;

unsigned int uiPatchWidth = uiBlkWidth + TMP_TEMPLATE_SIZE;

unsigned int uiPatchHeight = uiBlkHeight + TMP_TEMPLATE_SIZE;

unsigned int uiTarDepth = floorLog2( std::max( uiBlkHeight, uiBlkWidth ) ) - 2;

Pel** tarPatch = m_pppTarPatch[uiTarDepth]; // 目标模板

CompArea area = pcCU->blocks[compID];

Pel* pCurrStart = pcCU->cs->picture->getRecoBuf( area ).buf; // 当前块左上角的重建像素

unsigned int uiPicStride = pcCU->cs->picture->getRecoBuf( compID ).stride;

unsigned int uiY, uiX;

//fill template 填充模板

//up-left & up

Pel* tarTemp;

#if JVET_W0069_TMP_BOUNDARY

if( tempType == L_SHAPE_TEMPLATE )

{

#endif

// 填充左上和上侧模板

Pel* pCurrTemp = pCurrStart - TMP_TEMPLATE_SIZE * uiPicStride - TMP_TEMPLATE_SIZE;

for( uiY = 0; uiY < TMP_TEMPLATE_SIZE; uiY++ )

{

tarTemp = tarPatch[uiY];

for( uiX = 0; uiX < uiPatchWidth; uiX++ )

{

tarTemp[uiX] = pCurrTemp[uiX];

}

pCurrTemp += uiPicStride;

}

//left 填充左侧模板

for( uiY = TMP_TEMPLATE_SIZE; uiY < uiPatchHeight; uiY++ )

{

tarTemp = tarPatch[uiY];

for( uiX = 0; uiX < TMP_TEMPLATE_SIZE; uiX++ )

{

tarTemp[uiX] = pCurrTemp[uiX];

}

pCurrTemp += uiPicStride;

}

#if JVET_W0069_TMP_BOUNDARY

}

else if( tempType == ABOVE_TEMPLATE ) // 填充上模板

{

Pel* pCurrTemp = pCurrStart - TMP_TEMPLATE_SIZE * uiPicStride;

for( uiY = 0; uiY < TMP_TEMPLATE_SIZE; uiY++ )

{

tarTemp = tarPatch[uiY];

for( uiX = 0; uiX < uiBlkWidth; uiX++ )

{

tarTemp[uiX] = pCurrTemp[uiX];

}

pCurrTemp += uiPicStride;

}

}

else if( tempType == LEFT_TEMPLATE ) // 填充左侧模板

{

Pel* pCurrTemp = pCurrStart - TMP_TEMPLATE_SIZE;

for( uiY = TMP_TEMPLATE_SIZE; uiY < uiPatchHeight; uiY++ )

{

tarTemp = tarPatch[uiY];

for( uiX = 0; uiX < TMP_TEMPLATE_SIZE; uiX++ )

{

tarTemp[uiX] = pCurrTemp[uiX];

}

pCurrTemp += uiPicStride;

}

}

#endif

}Afterwards the candidateSearchIntra function searches for the best template within the search area by calling searchCandidateFromOnePicIntra

#if JVET_W0069_TMP_BOUNDARY

void IntraPrediction::candidateSearchIntra( CodingUnit* pcCU, unsigned int uiBlkWidth, unsigned int uiBlkHeight, RefTemplateType tempType )

#else

void IntraPrediction::candidateSearchIntra( CodingUnit* pcCU, unsigned int uiBlkWidth, unsigned int uiBlkHeight )

#endif

{

const ComponentID compID = COMPONENT_Y;

const int channelBitDepth = pcCU->cs->sps->getBitDepth( toChannelType( compID ) );

unsigned int uiPatchWidth = uiBlkWidth + TMP_TEMPLATE_SIZE;

unsigned int uiPatchHeight = uiBlkHeight + TMP_TEMPLATE_SIZE;

unsigned int uiTarDepth = floorLog2( std::max( uiBlkWidth, uiBlkHeight ) ) - 2;

Pel** tarPatch = getTargetPatch( uiTarDepth );

//Initialize the library for saving the best candidates 初始化最大模板失真

m_tempLibFast.initTemplateDiff( uiPatchWidth, uiPatchHeight, uiBlkWidth, uiBlkHeight, channelBitDepth );

short setId = 0; //record the reference picture.

#if JVET_W0069_TMP_BOUNDARY

// 搜索最佳候选

searchCandidateFromOnePicIntra( pcCU, tarPatch, uiPatchWidth, uiPatchHeight, setId, tempType );

#else

searchCandidateFromOnePicIntra( pcCU, tarPatch, uiPatchWidth, uiPatchHeight, setId );

#endif

//count collected candidate number

int pDiff = m_tempLibFast.getDiff(); // 搜索到的候选模板的失真

int maxDiff = m_tempLibFast.getDiffMax(); // 最大失真

if( pDiff < maxDiff )

{

m_uiVaildCandiNum = 1; // 如果搜索到的候选模板失真小于最大失真,则存在可用候选项

}

else

{

m_uiVaildCandiNum = 0;

}

}Finally, in the generateTMPrediction function, the predicted value is obtained according to the searched best position offset

bool IntraPrediction::generateTMPrediction( Pel* piPred, unsigned int uiStride, unsigned int uiBlkWidth, unsigned int uiBlkHeight, int& foundCandiNum )

{

bool bSucceedFlag = true;

unsigned int uiPatchWidth = uiBlkWidth + TMP_TEMPLATE_SIZE;

unsigned int uiPatchHeight = uiBlkHeight + TMP_TEMPLATE_SIZE;

foundCandiNum = m_uiVaildCandiNum;

if( foundCandiNum < 1 )

{

return false;

}

// 坐标偏移

int pX = m_tempLibFast.getX();

int pY = m_tempLibFast.getY();

Pel* ref;

int picStride = getStride();

int iOffsetY, iOffsetX;

Pel* refTarget;

unsigned int uiHeight = uiPatchHeight - TMP_TEMPLATE_SIZE;

unsigned int uiWidth = uiPatchWidth - TMP_TEMPLATE_SIZE;

//the data center: we use the prediction block as the center now.

//collect the candidates

ref = getRefPicUsed();

{

iOffsetY = pY;

iOffsetX = pX;

refTarget = ref + iOffsetY * picStride + iOffsetX; // 移动

for( unsigned int uiY = 0; uiY < uiHeight; uiY++ )

{

for( unsigned int uiX = 0; uiX < uiWidth; uiX++ )

{

piPred[uiX] = refTarget[uiX];

}

refTarget += picStride;

piPred += uiStride;

}

}

return bSucceedFlag;

}