Redis application problems

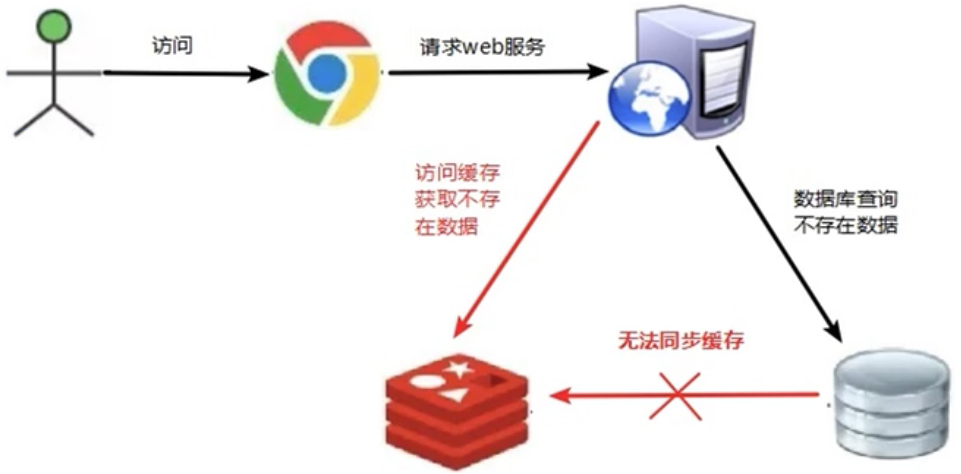

1 Cache penetration

1.1 Reasons

The requested key exists neither in the cache nor in the database, so every request for it misses the cache and falls through to the database. When a large number of such requests arrive, the load on the database rises and it may crash.

1.2 Solutions

(1) Cache empty values:

If the database query returns no result, cache the empty result anyway, and give it a very short expiration time so that empty entries do not put pressure on cache storage.
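The idea can be sketched as follows. This is a minimal illustration, not a production implementation: the dictionaries `cache` and `database` are in-memory stand-ins for Redis and the real database, and `NULL_MARKER`, the two TTL values, and all function names are assumptions made for the example.

```python
import time

NULL_MARKER = "__NULL__"    # hypothetical sentinel: "the database has no such record"
NULL_TTL_SECONDS = 60       # assumed short lifetime for cached empty results
DATA_TTL_SECONDS = 3600     # assumed normal lifetime for real data

cache = {}                          # stand-in for Redis: key -> (value, expires_at)
database = {"user:1": "Alice"}      # stand-in for the real database
stats = {"db_queries": 0}           # how many times the database was queried


def cache_get(key):
    """Return the cached value, or None if the key is absent or expired."""
    entry = cache.get(key)
    if entry is None:
        return None
    value, expires_at = entry
    if time.monotonic() >= expires_at:
        del cache[key]
        return None
    return value


def cache_set(key, value, ttl_seconds):
    """Store a value together with its expiration time."""
    cache[key] = (value, time.monotonic() + ttl_seconds)


def get_with_null_caching(key):
    cached = cache_get(key)
    if cached is not None:
        # A cached marker means "known to be missing": answer without the database.
        return None if cached == NULL_MARKER else cached

    stats["db_queries"] += 1
    value = database.get(key)
    if value is None:
        cache_set(key, NULL_MARKER, NULL_TTL_SECONDS)   # cache the empty result briefly
    else:
        cache_set(key, value, DATA_TTL_SECONDS)
    return value
```

Repeated lookups of a missing key such as `"user:999"` reach the database only once until the short TTL runs out.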

(2) Set the accessible list (white list):

Use the bitmaps type to define a whitelist of accessible ids, with each id used as the bit offset in the bitmap. On every access, check the bit at the requested id; if it is not set, the access is not allowed.
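A rough sketch of the whitelist check is shown below. The `Bitmap` class is an in-memory stand-in that imitates the behavior of the Redis `SETBIT` and `GETBIT` commands; the class, the sample ids, and `is_allowed` are assumptions for illustration only.

```python
class Bitmap:
    """In-memory stand-in for a Redis bitmap (SETBIT / GETBIT)."""

    def __init__(self):
        self._bytes = bytearray()

    def setbit(self, offset, value):
        byte_index, bit_index = divmod(offset, 8)
        if byte_index >= len(self._bytes):
            # Grow with zero bytes, as Redis does when the offset is past the end.
            self._bytes.extend(b"\x00" * (byte_index + 1 - len(self._bytes)))
        mask = 0x80 >> bit_index    # Redis numbers bits from the most significant bit
        if value:
            self._bytes[byte_index] |= mask
        else:
            self._bytes[byte_index] &= ~mask & 0xFF

    def getbit(self, offset):
        byte_index, bit_index = divmod(offset, 8)
        if byte_index >= len(self._bytes):
            return 0                # unset offsets read as 0
        return (self._bytes[byte_index] >> (7 - bit_index)) & 1


whitelist = Bitmap()
for allowed_id in (3, 42, 1000):    # sample ids, assumed to exist in the database
    whitelist.setbit(allowed_id, 1)


def is_allowed(record_id):
    """Allow access only if the id's bit is set in the whitelist."""
    return record_id >= 0 and whitelist.getbit(record_id) == 1
```

Requests whose id fails `is_allowed` can be rejected before they reach either the cache or the database.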

(3) Using Bloom filter:

A Bloom filter tests whether an element is a member of a set. Its advantage is that it is far more space-efficient and faster to query than general-purpose set structures. Its disadvantages are a certain false-positive rate (it may report an absent element as present, but never reports a present element as absent) and the difficulty of deleting elements. A Bloom filter can be used to make the whitelist lookup more efficient.
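To make the mechanism concrete, here is a small self-contained Bloom filter. It is a teaching sketch rather than a tuned implementation: the bit-array size, the number of hashes, and the use of seeded SHA-256 digests as hash functions are all assumptions chosen for simplicity.

```python
import hashlib


class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item):
        # Derive num_hashes bit positions by hashing the item with different seeds.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # False means "definitely absent"; True means "possibly present".
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )
```

Before querying the cache or database, a service would call `might_contain(key)` and reject the request when it returns `False`. Bits are shared between elements, which is why clearing them to delete one element would risk removing others.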

(4) Real-time monitoring:

When the Redis hit rate starts to drop rapidly, inspect which clients are making the requests and which data they are requesting, and work with the operations staff to set up a blacklist that restricts their access.
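One possible way to watch the hit rate is to sample the `keyspace_hits` and `keyspace_misses` counters that Redis reports in the stats section of `INFO` and compare consecutive samples. The sketch below works on plain dictionaries holding those two counters; the function names and the alert threshold of 0.5 are assumptions.

```python
def interval_hit_rate(previous, current):
    """Hit rate between two samples of the cumulative Redis counters."""
    hits = current["keyspace_hits"] - previous["keyspace_hits"]
    misses = current["keyspace_misses"] - previous["keyspace_misses"]
    total = hits + misses
    return hits / total if total > 0 else None   # None: no lookups in the interval


def should_alert(rate, threshold=0.5):
    """Flag the interval when the hit rate falls below the assumed threshold."""
    return rate is not None and rate < threshold
```

Because the counters are cumulative, the rate is computed from the difference between two samples rather than from a single reading.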

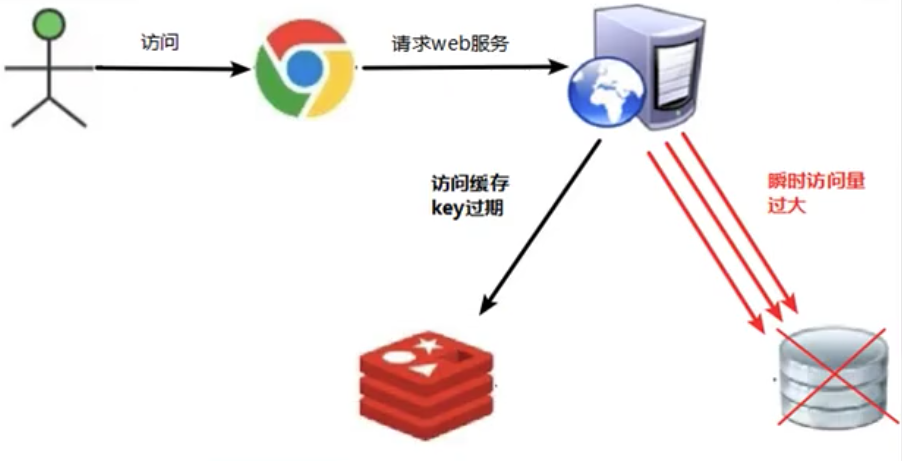

2 Cache breakdown

2.1 Reasons

A single key in Redis expires while a large number of requests are accessing that key, so the load on the database rises sharply in an instant; this is cache breakdown. When a breakdown occurs, Redis itself is running normally and there is no mass expiration of keys, only the expiration of one hot key, which distinguishes it from a cache avalanche.

2.2 Solutions

(1) Preload hot data: Before an access peak, load hot data into Redis in advance and give it a longer expiration time.

(2) Adjust in real time: Have the operations staff monitor which data is hot and adjust the expiration time of those keys accordingly.

(3) Use locks: On a cache miss (that is, the cache query returns nothing), do not query the database immediately; first try to acquire an exclusive lock for the key. If acquiring the lock fails, another thread is already querying the database and refreshing the cache, so wait a moment and retry the cache query. If acquiring the lock succeeds, query the database, write the result to the cache, and then release the lock. This approach prevents cache breakdown, but it lowers throughput because requests wait on the lock.
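The locking flow in (3) can be sketched as below. Everything runs in memory so the example is self-contained: `try_lock` imitates an atomic "set if not exists" operation (in Redis this is commonly done with `SET key value NX EX seconds`, where the expiry keeps a crashed holder from blocking others), and the names, delays, and retry limit are assumptions.

```python
import threading
import time

cache = {}                    # stand-in for Redis
held_locks = set()            # stand-in for lock keys stored in Redis
_guard = threading.Lock()     # makes the stand-in operations atomic
stats = {"db_queries": 0}


def try_lock(name):
    """Succeed only if nobody holds the lock (imitates set-if-not-exists)."""
    with _guard:
        if name in held_locks:
            return False
        held_locks.add(name)
        return True


def unlock(name):
    with _guard:
        held_locks.discard(name)


def query_database(key):
    with _guard:
        stats["db_queries"] += 1
    time.sleep(0.05)          # simulate a slow query
    return f"value-of-{key}"


def get_with_lock(key, retry_delay=0.01, max_retries=200):
    for _ in range(max_retries):
        value = cache.get(key)
        if value is not None:
            return value
        if try_lock("lock:" + key):
            try:
                value = cache.get(key)       # re-check: it may have been filled meanwhile
                if value is None:
                    value = query_database(key)
                    cache[key] = value       # synchronize the cache
                return value
            finally:
                unlock("lock:" + key)        # release the lock when done
        time.sleep(retry_delay)              # lock is taken: wait, then retry the cache
    raise TimeoutError(f"could not load {key!r}")


def run_concurrent_readers(key, reader_count):
    """Start reader_count threads that all request the same key."""
    threads = [
        threading.Thread(target=get_with_lock, args=(key,))
        for _ in range(reader_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return stats["db_queries"]
```

With 20 concurrent readers of the same expired key, only one of them queries the database; the others wait and then read the refreshed cache entry.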

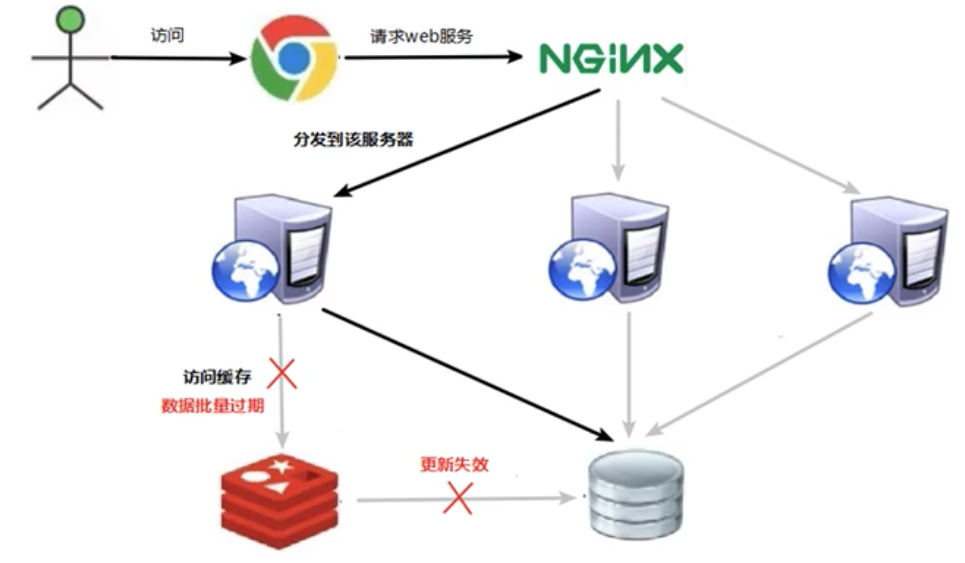

3 Cache avalanche

3.1 Reasons

Within a very short period, a large number of keys expire together, so requests cannot be served from the cache and database traffic rises sharply, which can crash the server.

3.2 Solutions

(1) Build a multi-level cache architecture:

nginx cache + redis cache + other caches (ehcache, etc.)
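As a rough illustration of how a multi-level lookup falls through its levels, the sketch below uses two dictionaries: `local_cache` plays the role of an in-process cache and `shared_cache` the role of Redis. The names, the sample data, and the `lookups` trace are assumptions for the example; an nginx-level cache would sit in front of this code and is not modeled.

```python
local_cache = {}                       # level 1: in-process cache
shared_cache = {}                      # level 2: stand-in for Redis
database = {"item:1": "widget"}        # stand-in for the real database
lookups = []                           # records which level answered each request


def get_multilevel(key):
    if key in local_cache:
        lookups.append("local")
        return local_cache[key]
    if key in shared_cache:
        lookups.append("shared")
        local_cache[key] = shared_cache[key]   # promote to the faster level
        return shared_cache[key]
    lookups.append("database")
    value = database.get(key)
    if value is not None:
        shared_cache[key] = value              # fill both levels on the way back
        local_cache[key] = value
    return value
```

If one level loses its entries, for example because many keys expired at once, the other level can still answer, so fewer requests reach the database.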

(2) Use locks or queues:

Use locks or queues to limit how many threads read and write the database at the same time, so that a mass expiration does not send a flood of concurrent requests to the underlying storage system. Because requests have to wait their turn, this method is not suitable for high-concurrency scenarios.
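One simple way to cap concurrent database access is a counting semaphore, sketched below. This is only one of several possible mechanisms (a queue with a fixed number of workers is another); the limit of 2, the simulated query, and the function names are assumptions.

```python
import threading
import time

MAX_CONCURRENT_DB_QUERIES = 2     # assumed limit
_db_slots = threading.BoundedSemaphore(MAX_CONCURRENT_DB_QUERIES)
_stats_guard = threading.Lock()
stats = {"active": 0, "peak_active": 0}


def query_database_limited(key):
    with _db_slots:               # blocks while the limit is already reached
        with _stats_guard:
            stats["active"] += 1
            stats["peak_active"] = max(stats["peak_active"], stats["active"])
        try:
            time.sleep(0.01)      # simulate the database query
            return f"value-of-{key}"
        finally:
            with _stats_guard:
                stats["active"] -= 1


def run_burst(request_count):
    """Send request_count concurrent requests; return the peak database concurrency."""
    threads = [
        threading.Thread(target=query_database_limited, args=(f"key:{i}",))
        for i in range(request_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return stats["peak_active"]
```

However many requests arrive at once, the database never sees more than the configured number of simultaneous queries; the remaining requests wait, which is the throughput cost noted above.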

(3) Set the expiration flag to update the cache:

Record whether the cached data is about to expire by keeping a flag that expires some time in advance of the real key. When the flag has expired, it triggers a notification so that the actual cached key is updated in the background.
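A minimal sketch of this idea follows, under several assumptions: the flag is stored as a timestamp next to the value, time is passed in explicitly as `now` to keep the example deterministic, and `run_in_background` is a caller-supplied hook (for example, one that starts a thread). None of these names come from a specific library.

```python
TTL_SECONDS = 100        # assumed real lifetime of a cache entry
ADVANCE_SECONDS = 20     # assumed lead time: the flag expires this much earlier

cache = {}               # stand-in for Redis: key -> entry dict


def put(key, value, now):
    cache[key] = {
        "value": value,
        "flag_expires_at": now + TTL_SECONDS - ADVANCE_SECONDS,
        "expires_at": now + TTL_SECONDS,
        "refreshing": False,
    }


def get_with_expiration_flag(key, loader, now, run_in_background):
    entry = cache.get(key)
    if entry is None or now >= entry["expires_at"]:
        value = loader(key)              # real miss: load synchronously
        put(key, value, now)
        return value
    if now >= entry["flag_expires_at"] and not entry["refreshing"]:
        entry["refreshing"] = True       # schedule at most one refresh per entry
        run_in_background(lambda: put(key, loader(key), now))
    return entry["value"]                # readers are still served from the cache
```

Readers keep getting the cached value while the refresh runs, so the key never actually expires under load. In a threaded service, `run_in_background` could be `lambda task: threading.Thread(target=task).start()`.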

(4) Spread out the cache expiration time:

Add a random value to the base expiration time so that fewer keys share the same expiration time, which reduces the probability that they all expire together.
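For example, a helper along these lines could compute the expiration time for each key. The function name and the sample values (a one-hour base with up to five minutes of jitter) are assumptions.

```python
import random


def ttl_with_jitter(base_ttl_seconds, max_jitter_seconds, rng=random):
    """Return the base TTL plus a random offset in [0, max_jitter_seconds]."""
    return base_ttl_seconds + rng.randint(0, max_jitter_seconds)


# Keys written at the same moment now expire at slightly different times.
example_ttls = [ttl_with_jitter(3600, 300) for _ in range(5)]
```

The resulting value would be passed as the expiration time when the key is written to the cache.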

4 Summary

Cache penetration is a large number of requests for data that exists in neither the cache nor the database, which lowers the Redis hit rate and increases the load on the database.

Cache breakdown is the expiration of a single hot key, after which a large number of requests query the database directly and its load spikes.

Cache avalanche is the simultaneous expiration of a large number of keys, after which a large number of requests fall on the database and can crash the server.